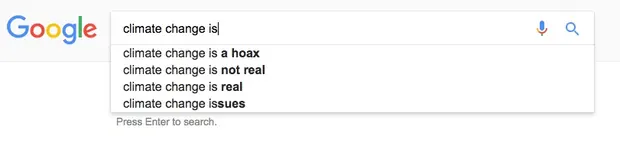

Only a few years ago, Google highly ranked:

- Lots of untrue, anti-vax propaganda

- Inaccurate medical info

- Hyper-partisan political content

- Conspiracy theories about climate change, the Holocaust, flat earth, & more

A thread about how that changed.

Trigger warning: the catalyst was a school shooting.

Specifically, the Sandy Hook Elementary School shooting, in which a gunman massacred 20 first-grade students. Six- and seven-year-olds. It's almost too terrifying to comprehend; I choke up even thinking about it.

The awfulness of that event was compounded by the rise of an Internet-spread conspiracy theory.

For those unfamiliar, NY Mag did an in-depth piece on the horrifying hoax, and how Google's search results were the starting point for many of its amplifiers: https://nymag.com/intelligencer/2016/09/the-sandy-hook-hoax.html

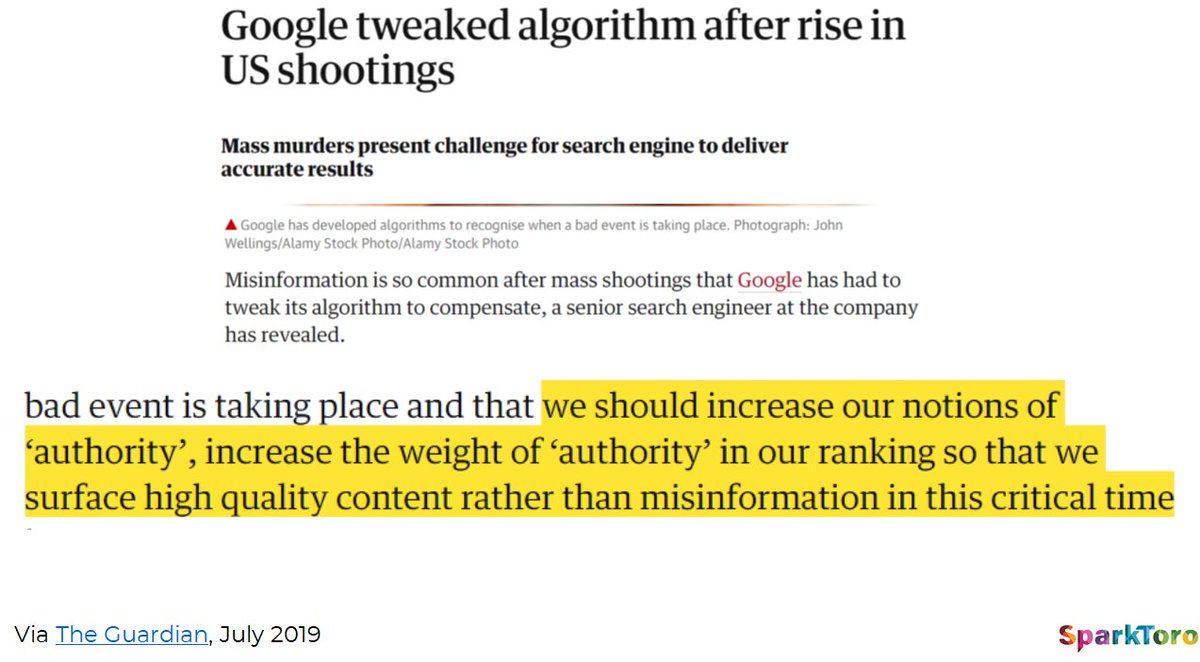

Mass shootings like Sandy Hook spurred Google's engineers to change how they weighted:

Popularity signals (links, clicks, engagement, etc)

VS.

Accuracy signals (factual info, trustworthy sources, etc)

Excellent reporting on that here: https://www.theguardian.com/technology/2019/jul/02/google-tweaked-algorithm-after-rise-in-us-shootings

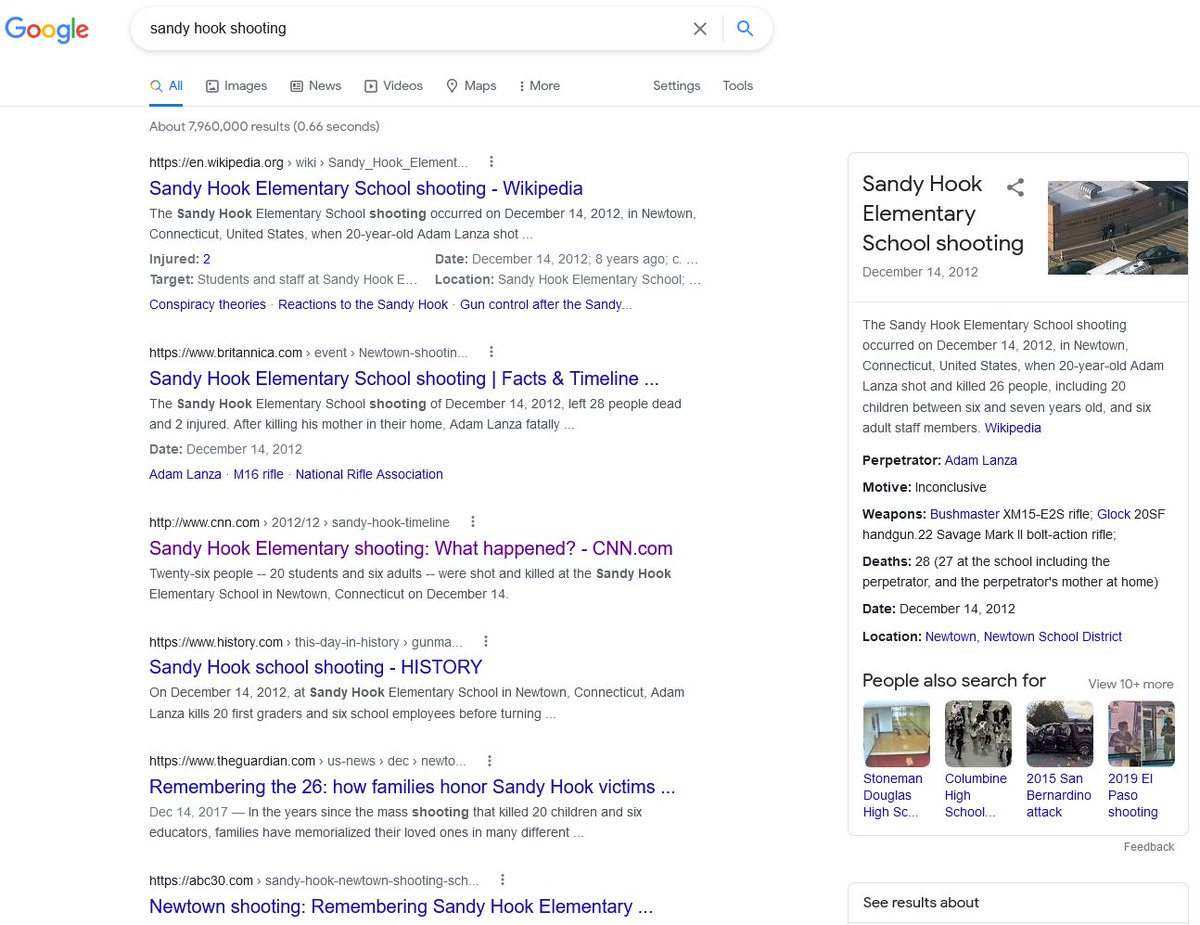

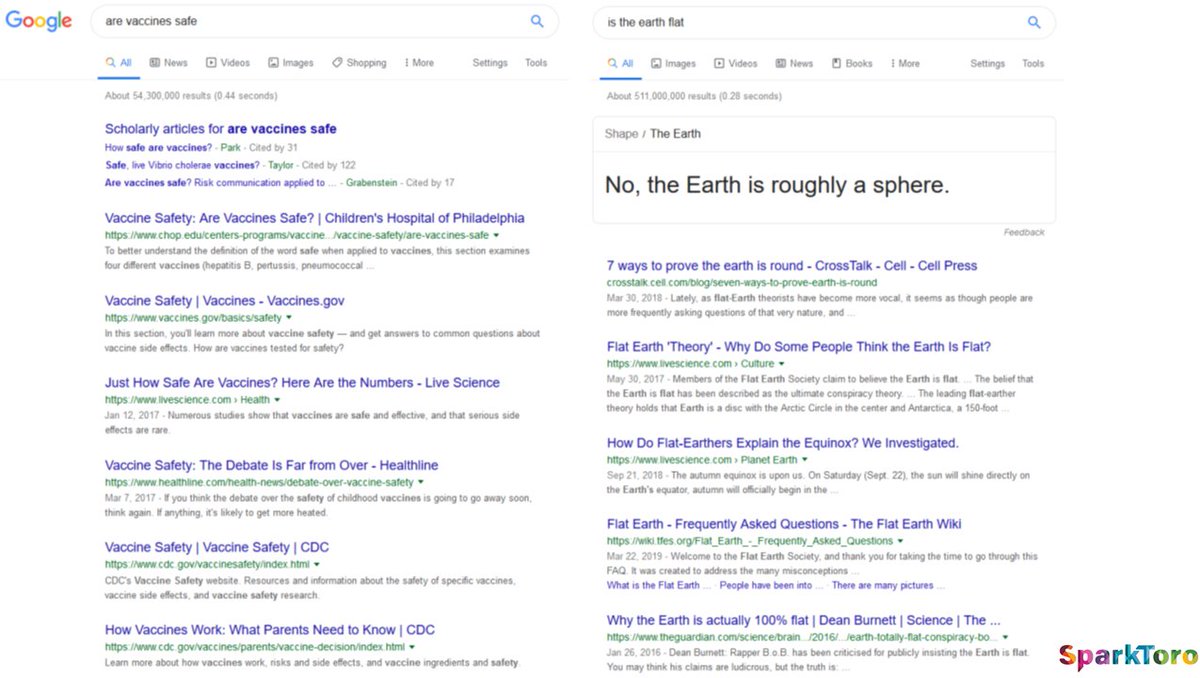

You'll no longer find misinformation or disinformation in searches that previously surfaced a lot of it (e.g. https://www.google.com/search?q=sandy+hook+shooting).

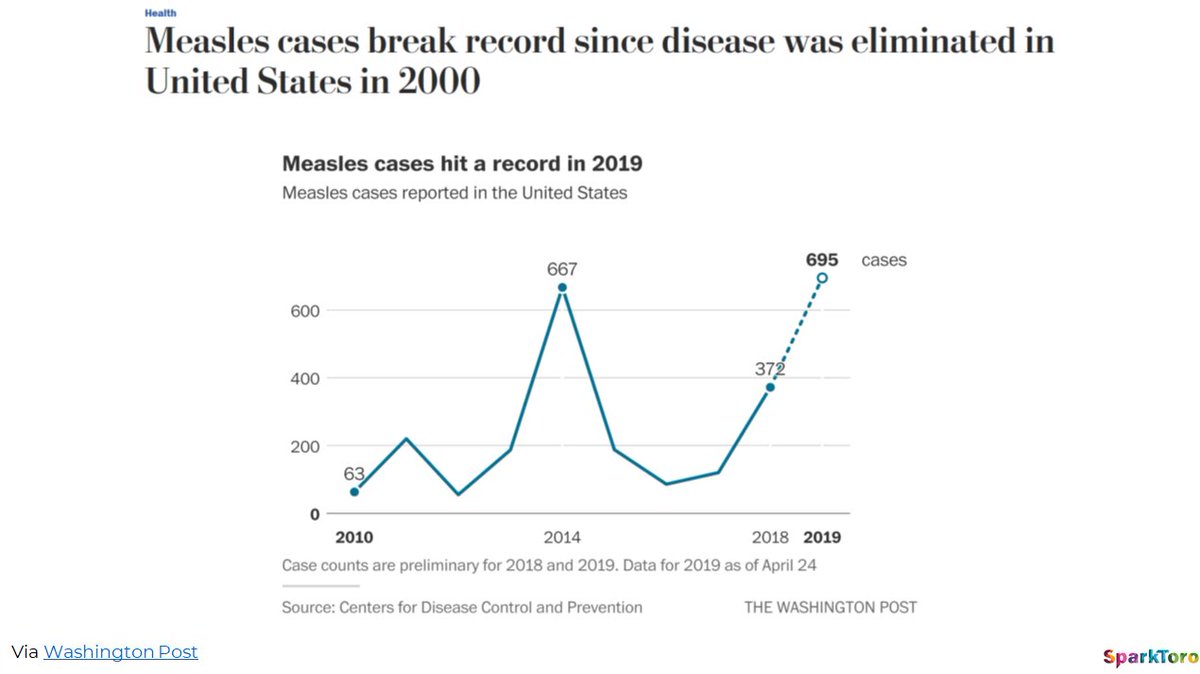

Tragically, in the medical world, Facebook & Google's efforts around this have been too little, too late.

Anti-vax conspiracy content, and its spread in search results and social media, can be directly correlated (time series + geo) to the rise in Western vaccine hesitancy.

Google's trying, and they deserve to be commended for stepping up here. But Pandora's box was already opened.

I'm hopeful that thanks to these algorithmic shifts toward accurate, truthful content > engagement-optimized conspiracy hoaxes, it'll be harder for future misinfo to spread.

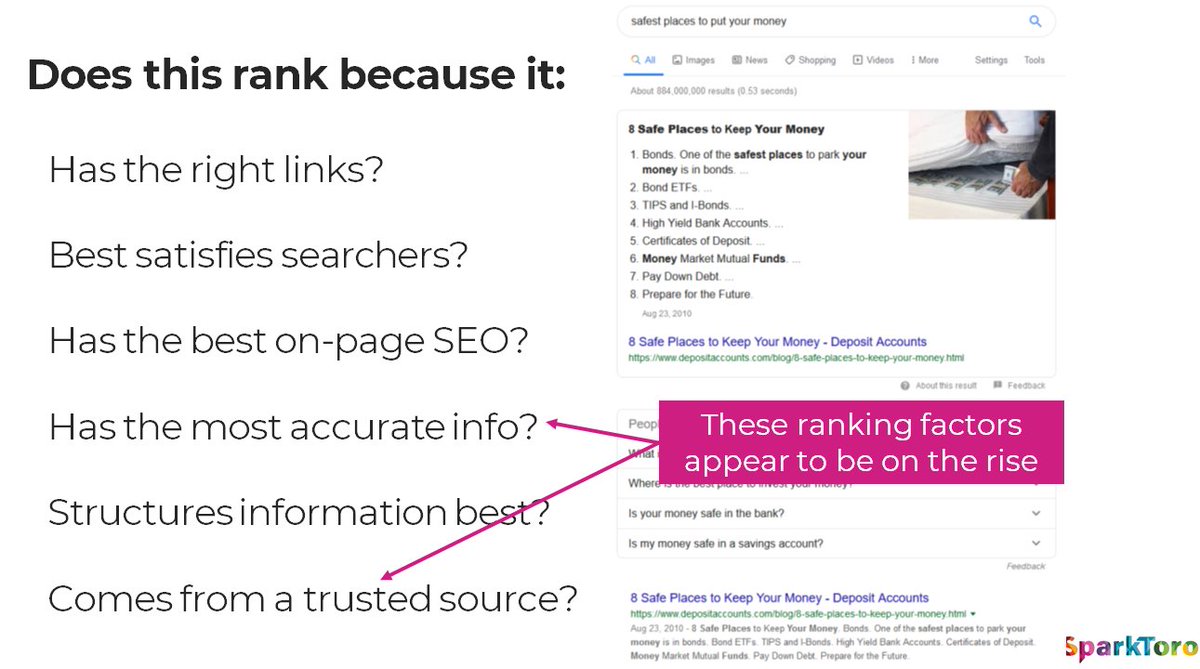

You can see what this has done to sites with medical misinfo like Mercola (https://twitter.com/CyrusShepard/status/1143412325925265408).

The same is true for many sites whose accuracy and trustworthiness in sectors like medicine, finance, politics, history, etc. is subpar (or intentionally misleading).

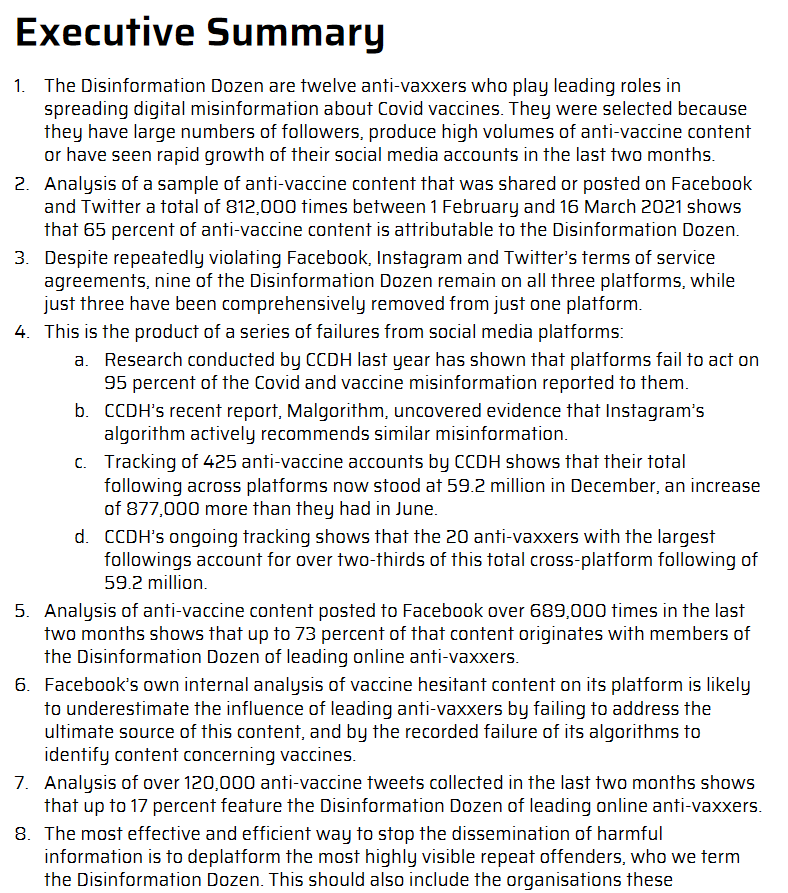

A good example is the "disinformation dozen," to whom more than 65% of all vaccine misinformation on Facebook & Twitter can be directly attributed: https://www.cbsnews.com/news/vaccine-disinformation-social-media-center-for-countering-digital-hate-report/

Google has demoted *all* of these sites (best I can tell).

If you're in these sectors, it might pay to ask if your content fits the criteria Google's now requiring.

(NOTE: I'm not in SEO! If interested, you should follow folks like @lilyraynyc, @Marie_Haynes, & @dr_pete, who are far more knowledgeable on these topics.)

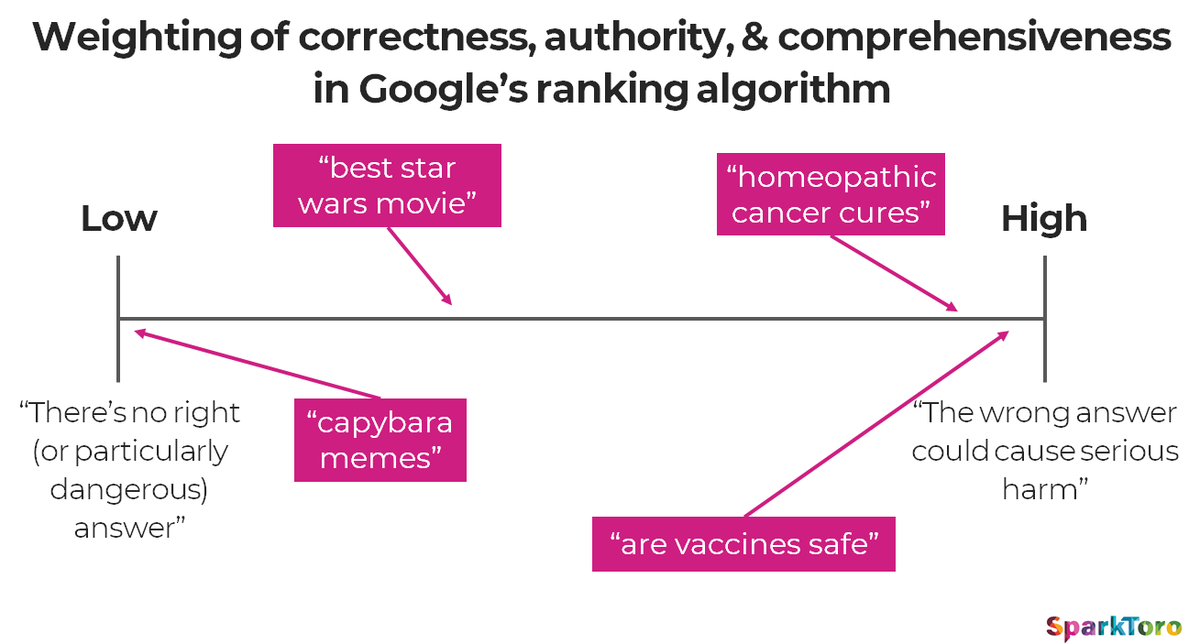

IMO, weighting of these factual / content quality signals happens on a scale and depends on the search query.

In some sectors ("capybara memes") there's not much need for trustworthy sources > high-engagement ones. In other sectors ("are vaccines safe?") it really matters.

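To make the "sliding scale" idea concrete, here's a toy sketch in Python. Everything in it is assumed for illustration: the `Page` fields, the `QUERY_SENSITIVITY` table, the example URLs, and the simple linear blend are all hypothetical, and none of it reflects how Google actually scores pages.

```python
from dataclasses import dataclass


@dataclass
class Page:
    url: str
    popularity: float  # hypothetical 0..1 score for links, clicks, engagement
    accuracy: float    # hypothetical 0..1 score for factual, trustworthy content


# Hypothetical: how much a query "needs" trustworthy sources (0 = not at all, 1 = entirely).
QUERY_SENSITIVITY = {
    "capybara memes": 0.1,
    "are vaccines safe?": 0.9,
}


def blended_score(page: Page, sensitivity: float) -> float:
    """Linear blend: higher sensitivity shifts weight from popularity to accuracy."""
    return (1.0 - sensitivity) * page.popularity + sensitivity * page.accuracy


def rank(pages: list[Page], query: str, default_sensitivity: float = 0.5) -> list[Page]:
    """Sort pages best-first using the query's assumed sensitivity."""
    sensitivity = QUERY_SENSITIVITY.get(query, default_sensitivity)
    return sorted(pages, key=lambda p: blended_score(p, sensitivity), reverse=True)


pages = [
    Page("viral-but-unreliable.example", popularity=0.9, accuracy=0.2),
    Page("dull-but-accurate.example", popularity=0.4, accuracy=0.95),
]

for query in QUERY_SENSITIVITY:
    print(query, "->", [p.url for p in rank(pages, query)])
```

With these made-up numbers, the high-engagement page wins for "capybara memes" and the accurate page wins for "are vaccines safe?", which is the whole point: same pages, different weighting depending on the query.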

All of the above is from a 2019 presentation I gave prior to @SparkToro's launch. I've made the full version available here: https://drive.google.com/file/d/1498Hi1rA8SzaU1iWw807zW4kGZsI8KWd/view?usp=sharing
