A toy example of why test sensitivity and specificity matter in serosurveys.

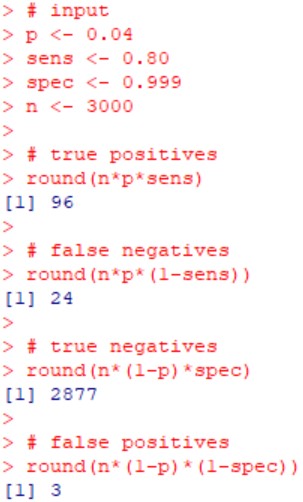

Imagine population seroprevalence is 4%. Test sensitivity is 80%. Specificity is 99.9%. For a random sample of 3000 participants, you would expect ~100 positives, 3% of which will be false positives.
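As a rough check on the arithmetic, the short Python sketch below fills in the expected 2x2 table for this first scenario. It assumes a perfectly random sample, one test per person, and exactly the sensitivity and specificity stated above. The variable names are illustrative only.

```python
# Expected 2x2 table for scenario 1 (assumes a perfectly random sample,
# one test per person, and the stated sensitivity/specificity).
n = 3000
prevalence, sensitivity, specificity = 0.04, 0.80, 0.999

infected = n * prevalence            # 120 people
uninfected = n - infected            # 2880 people

true_pos = infected * sensitivity            # infected, test positive
false_neg = infected - true_pos              # infected, test negative
false_pos = uninfected * (1 - specificity)   # uninfected, test positive
true_neg = uninfected - false_pos            # uninfected, test negative

positives = true_pos + false_pos
print(f"{'':>12}{'test +':>10}{'test -':>10}")
print(f"{'infected':>12}{true_pos:>10.1f}{false_neg:>10.1f}")
print(f"{'uninfected':>12}{false_pos:>10.1f}{true_neg:>10.1f}")
print(f"positives: {positives:.1f}, false-positive share: {false_pos / positives:.1%}")
```

About 96 true positives plus roughly 3 false positives gives the "~100 positives, 3% false" figure.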

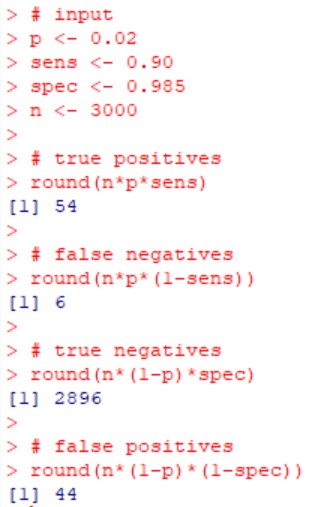

Now imagine that seroprevalence is 2% (lower). Test sensitivity is 90% (higher). Specificity is 98.5% (lower). For a random sample of 3000 participants, you would expect ~100 positives, 45% of which will be false positives.
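A minimal Python sketch comparing the two scenarios side by side, under the same assumptions (random sample of 3000, stated test characteristics). The helper `expected_positives` is a hypothetical name used only for this illustration.

```python
def expected_positives(n, prevalence, sensitivity, specificity):
    """Return (true positives, false positives) expected in a random sample of size n."""
    infected = n * prevalence
    true_pos = infected * sensitivity               # infected who test positive
    false_pos = (n - infected) * (1 - specificity)  # uninfected who test positive
    return true_pos, false_pos

scenarios = {
    "4% prevalence, sens 80%, spec 99.9%": (0.04, 0.80, 0.999),
    "2% prevalence, sens 90%, spec 98.5%": (0.02, 0.90, 0.985),
}
for label, (prev, sens, spec) in scenarios.items():
    tp, fp = expected_positives(3000, prev, sens, spec)
    total = tp + fp
    print(f"{label}: {total:.0f} positives, {fp / total:.0%} false")
```

Both settings yield roughly 98 to 99 positives, but in the second one about 44 of them (around 45%) come from uninfected people.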

Same number of positives, different seroprevalence.

How do we know whether we are in the 2% or the 4% seroprevalence setting? It depends on the sensitivity and specificity of the test. But the tests are so new that we aren't sure how well they work, which is why the safest conclusion is that seroprevalence is "low," and no more precise than that.
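One standard way to see this dependence is the Rogan-Gladen correction, which estimates true prevalence as (apparent prevalence + specificity - 1) / (sensitivity + specificity - 1). This is a swapped-in textbook estimator used here for illustration, not necessarily what the R code in the screenshots does. The sketch assumes the same ~100 positives out of 3000 and plugs in each of the two assumed test profiles.

```python
def rogan_gladen(positives, n, sensitivity, specificity):
    """Rogan-Gladen estimate of true prevalence from the apparent (test-positive) prevalence."""
    apparent = positives / n
    denom = sensitivity + specificity - 1  # must be positive for the test to be informative
    if denom <= 0:
        raise ValueError("sensitivity + specificity must exceed 1")
    estimate = (apparent + specificity - 1) / denom
    return min(max(estimate, 0.0), 1.0)  # clip to the valid range [0, 1]

positives, n = 100, 3000  # same observed data in both cases
for sens, spec in [(0.80, 0.999), (0.90, 0.985)]:
    est = rogan_gladen(positives, n, sens, spec)
    print(f"sens={sens:.1%}, spec={spec:.1%}: estimated prevalence {est:.1%}")
```

The identical observed data give roughly 4.0% under the first test profile and 2.1% under the second, so without reliable sensitivity and specificity figures the estimate can't be pinned down.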

Want to learn more about sensitivity and specificity? See my thread here: https://twitter.com/nataliexdean/status/1238518203736903680?s=20

Also, a public apology for making people look at grainy screenshots of R code!

Lots of nice tutorials out there on this topic.

https://online.stat.psu.edu/stat507/node/71/

http://sphweb.bumc.bu.edu/otlt/MPH-Modules/BS/BS704_Probability/BS704_Probability4.html