Very interesting new preprint by Eran Bendavid and colleagues reports seroprevalence estimates from Santa Clara County. Great to have seroprevalence work start to emerge, but I'd be skeptical of the 2-4% seroprevalence result. 1/8 https://www.medrxiv.org/content/10.1101/2020.04.14.20062463v1

@nataliexdean gives an excellent overview here and includes a few caveats to keep in mind. 2/8 https://twitter.com/nataliexdean/status/1251309217215942656

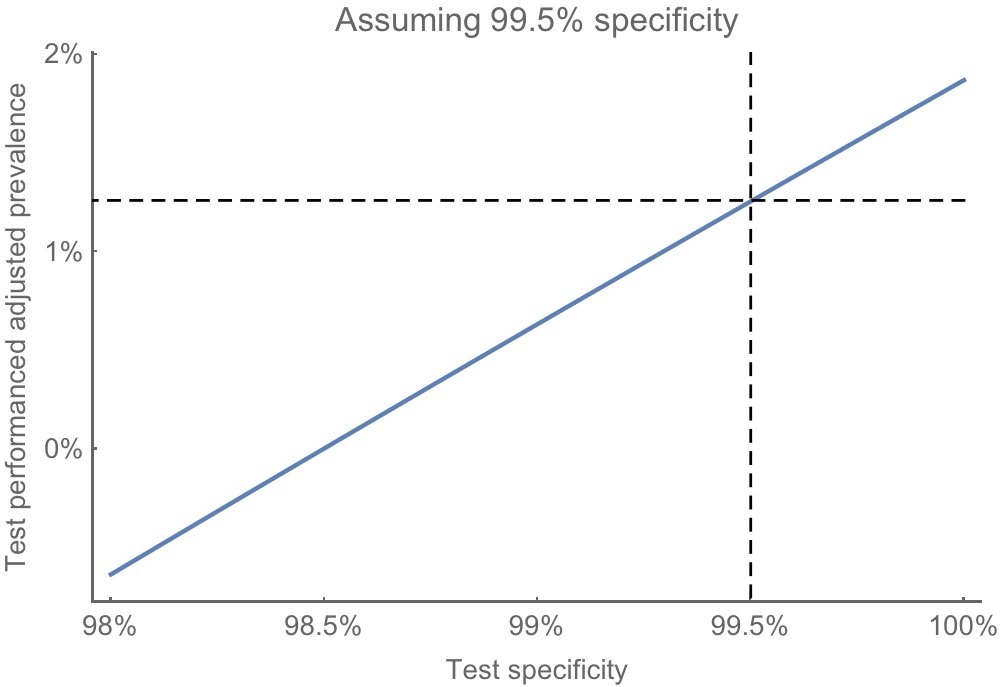

I'd pay particular attention to the dependence of results on test performance. The authors assume that the antibody test has 99.5% specificity (point estimate) based on manufacturer + Stanford validation samples where 399 out of 401 pre-COVID samples showed as negative. 3/8
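To get a feel for how much room there is around that 99.5% point estimate, here is a rough sketch that puts a 95% Wilson score interval on 399 negatives out of 401. The choice of the Wilson interval and the function name `wilson_interval` are my assumptions for illustration; this is not the method used in the preprint.

```python
import math


def wilson_interval(successes, trials, z=1.96):
    """Wilson score interval for a binomial proportion.

    z=1.96 corresponds to an approximate 95% interval (an assumption here).
    """
    if trials <= 0:
        raise ValueError("trials must be positive")
    p = successes / trials
    denom = 1 + z**2 / trials
    center = (p + z**2 / (2 * trials)) / denom
    half_width = z * math.sqrt(p * (1 - p) / trials + z**2 / (4 * trials**2)) / denom
    return center - half_width, center + half_width


# 399 of 401 pre-COVID samples tested negative (figures from the thread).
low, high = wilson_interval(399, 401)
print(f"specificity point estimate: {399 / 401:.1%}")
print(f"approx. 95% interval: {low:.1%} to {high:.1%}")
```

Under these assumptions the lower end of the interval sits a little below 98.5%, so a specificity in that range is not ruled out by the validation samples alone.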

Using the equation from the appendix, we can see how the estimate of prevalence varies with test specificity. A specificity of 99.5% converts an observed 1.5% positive to an estimated prevalence of 1.3%. 4/8

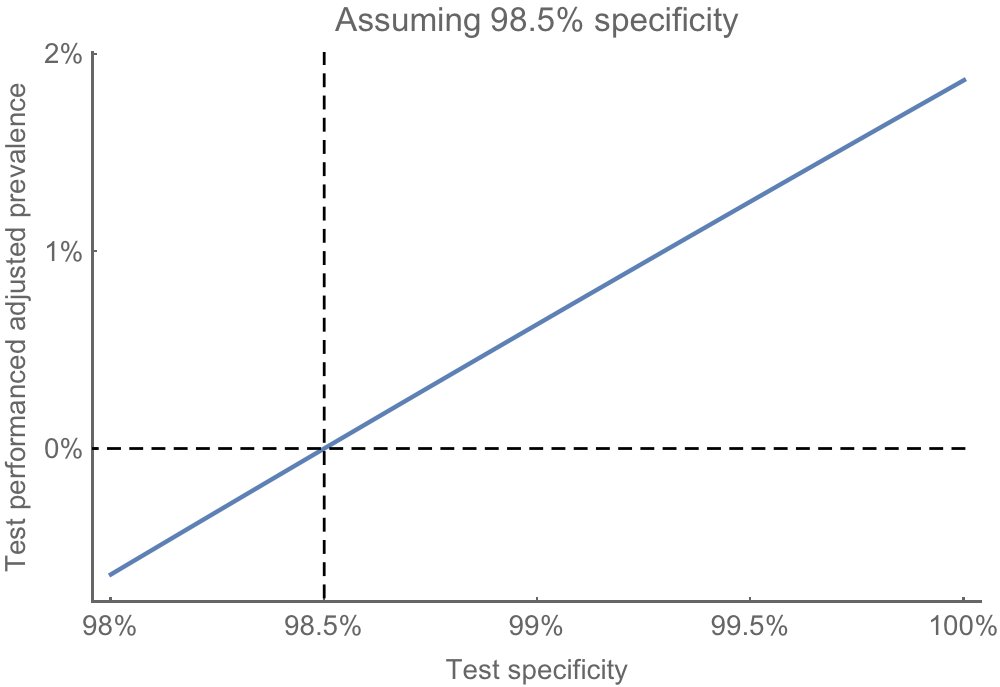

However, if we assume that the test is just slightly worse and has specificity of 98.5%, then, with observed 1.5% positivity, we'd estimate a prevalence of 0%. 5/8
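The two numbers above can be reproduced with a small sketch, assuming the adjustment is the standard Rogan-Gladen correction, prevalence = (observed + specificity - 1) / (sensitivity + specificity - 1). The thread does not state the sensitivity, so the 80% used here is a placeholder assumption, and `adjusted_prevalence` is a hypothetical helper name.

```python
def adjusted_prevalence(observed_positive, sensitivity, specificity):
    """Rogan-Gladen style correction of an observed positive rate.

    Assumed formula: (observed + specificity - 1) / (sensitivity + specificity - 1),
    clamped to the range [0, 1].
    """
    denom = sensitivity + specificity - 1.0
    if denom <= 0:
        raise ValueError("test must perform better than chance")
    estimate = (observed_positive + specificity - 1.0) / denom
    return min(max(estimate, 0.0), 1.0)


OBSERVED = 0.015     # 1.5% observed positive, from the thread
SENSITIVITY = 0.80   # placeholder assumption, not stated in the thread

for specificity in (0.995, 0.990, 0.985):
    estimate = adjusted_prevalence(OBSERVED, SENSITIVITY, specificity)
    print(f"specificity {specificity:.1%} -> estimated prevalence {estimate:.1%}")
```

The estimate falls to zero once the false positive rate (1 - specificity) matches the observed positive rate, which is why a one-point drop in specificity is enough to erase the signal.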

Given how sensitive these results are to performance of the assay, I don't think it's safe to conclude that infections are "50-85-fold more than the number of confirmed cases". 7/8

Again, important to have this work being done. I'd just urge caution in interpretation. I will note again that I've been using a 10-20X ratio of infections to confirmed cases, but it will be great to have more data here (I'd be happy to be wrong). 8/8 https://twitter.com/trvrb/status/1249414308355649536

See this thread for a corrected confidence interval to the seroprevalence estimate: https://twitter.com/jjcherian/status/1251279161534091266

And see here for a full posterior seroprevalence estimate that takes into account uncertainty in sensitivity and specificity of the assay: https://twitter.com/richardneher/status/1251484978854146053
