just recently learned about Okapi BM25 as a better information retrieval function for search engines (after the widely used "vanilla" TF/IDF) and it is hands down the best comp sci name I& #39;ve come across. "Okapi BM25", damn – some history  https://abs.twimg.com/emoji/v2/... draggable="false" alt="🧵" title="collectie" aria-label="Emoji: collectie">

https://abs.twimg.com/emoji/v2/... draggable="false" alt="🧵" title="collectie" aria-label="Emoji: collectie">

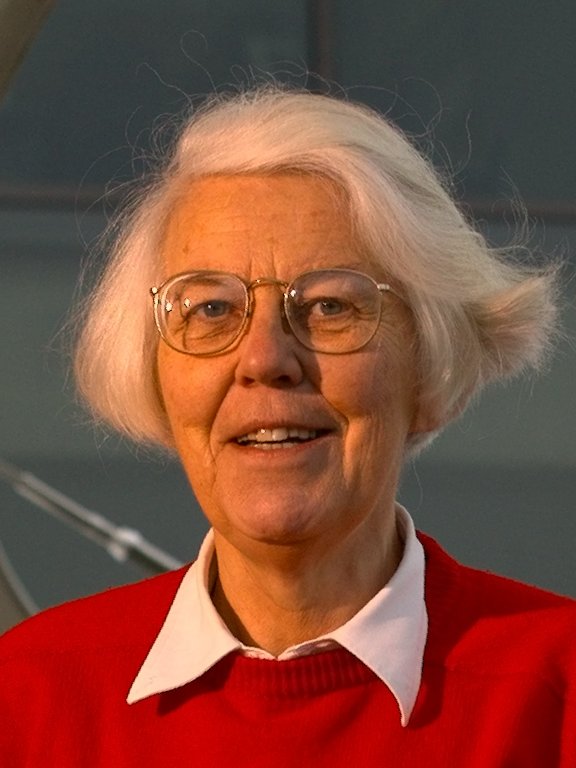

Karen Spärck Jones was a pioneering British computer scientist responsible for the concept of inverse document frequency (1972); Elasticsearch still uses TF/IDF as part of its full-text scoring algorithm as of today. it& #39;s cheap to run and tremendously useful

TF/IDF stands for "term frequency/inverse document frequency". the main ideas behind it are:

1. a document is more relevant if the term we& #39;re looking for appears often in that document (e.g., "quick" has a frequency of 2 in "the quick brown fox jumps over the quick dog")

1. a document is more relevant if the term we& #39;re looking for appears often in that document (e.g., "quick" has a frequency of 2 in "the quick brown fox jumps over the quick dog")

but only a frequency of 1 in "the quick brown fox" (hence less relevant)

2. a document is more relevant if the term it is matching is not as common (e.g., documents with term "quick" will likely be more relevant than documents with more general term "the")

2. a document is more relevant if the term it is matching is not as common (e.g., documents with term "quick" will likely be more relevant than documents with more general term "the")

another better example would be, if you have a blog about birds the word "sparrow" will be more relevant than "bird", since it is likely many documents will mention "bird" (maybe all of them), but only one or two will mention [house] "sparrow" (cute Passer domesticus species)

the (simplified) formula as explained in Elasticsearch: The Definitive Guide is as follows:

scoring(q, d) =

∑ (

tf(t in d)

idf(t)²

t.getBoost()

norm(t,d)

) (t in q)

scoring(q, d) =

∑ (

tf(t in d)

idf(t)²

t.getBoost()

norm(t,d)

) (t in q)

essentially, we score results of a query over a set of documents by taking into account the term frequency, inverse document frequency, boosting and field-length norm

by boosting, we can attribute more relevance to certain terms

by boosting, we can attribute more relevance to certain terms

field-length norm indicates relevance of term in the context of that document – the bigger the document, the less relevant it is (e.g., if "quick" appears in text "quick: a novel" it is more relevant than it appearing in bigger text "the quick brown fox jumps over the lazy dog")

enter Okapi BM25, the TF/IDF scoring default in ES – whilst vanilla TF/IDF favours term frequency within a document and penalizes term prevalence across documents, Okapi BM 25 takes it further and weighs in average document size and term frequency saturation

average document size is important as a way to normalize field-length norm. "quick" is only more relevant in "quick: a novel" if that& #39;s the average size for our set of documents

term frequency saturation is used to slow down (even set an upper bound) on term frequency relevance. "quick" is relevant if it appears twice in a document. it is more relevant if it appears 3 times. but appearing 4, 5+ times is not much more relevant between them. it& #39;s saturated

the name of the actual ranking function is BM25 (for "best matching 25"). the fuller name, Okapi BM25, includes the name of the first system to use it, which was the Okapi information retrieval system, implemented at London& #39;s City University in the 1980s and 1990s

Read on Twitter

Read on Twitter

![another better example would be, if you have a blog about birds the word "sparrow" will be more relevant than "bird", since it is likely many documents will mention "bird" (maybe all of them), but only one or two will mention [house] "sparrow" (cute Passer domesticus species) another better example would be, if you have a blog about birds the word "sparrow" will be more relevant than "bird", since it is likely many documents will mention "bird" (maybe all of them), but only one or two will mention [house] "sparrow" (cute Passer domesticus species)](https://pbs.twimg.com/media/FU7ULiEXEAAC54q.jpg)