A small thread on analysing text rendering capability of #imagen.

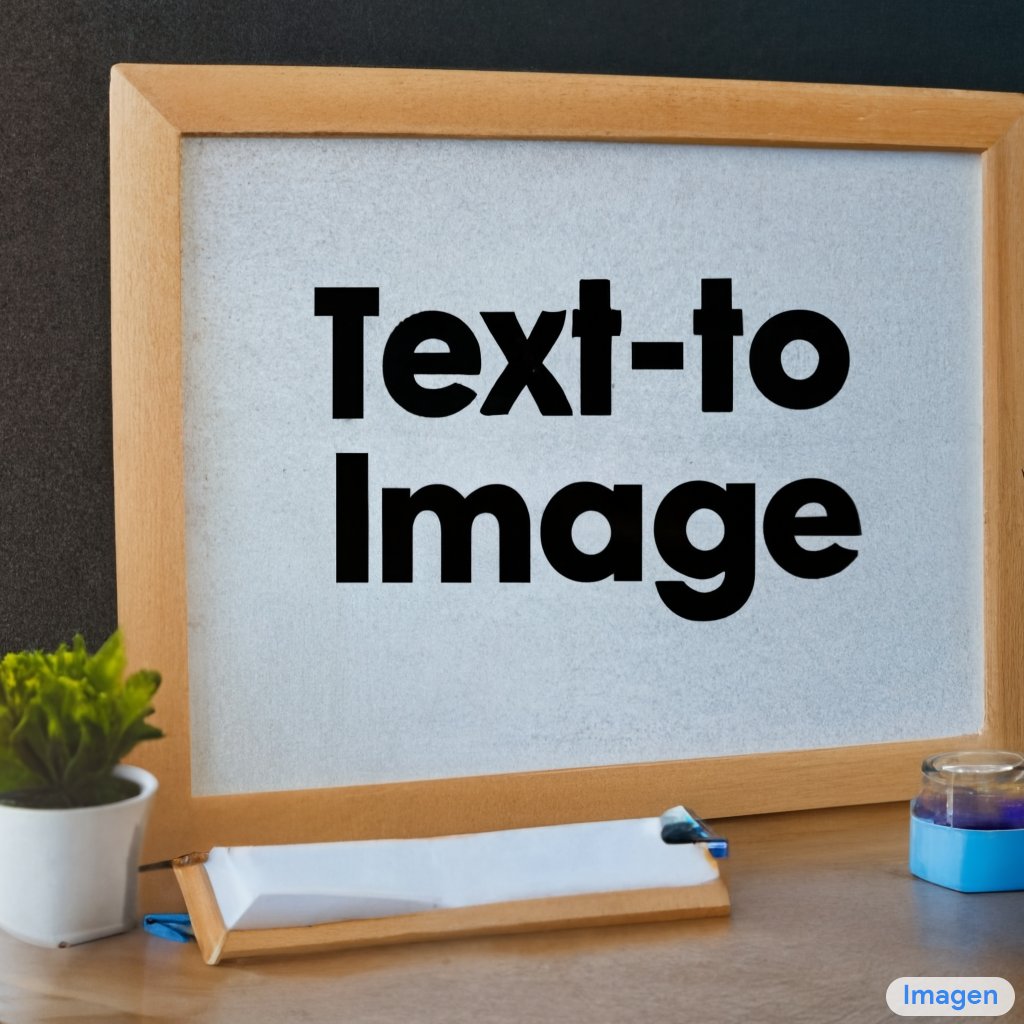

Starting with a simple example, Imagen is able to reliably render text without any need for rigorous cherrypicking. e.g. these are non-cherry picked examples for writing "Text-to-Image" on a storefront.

Starting with a simple example, Imagen is able to reliably render text without any need for rigorous cherrypicking. e.g. these are non-cherry picked examples for writing "Text-to-Image" on a storefront.

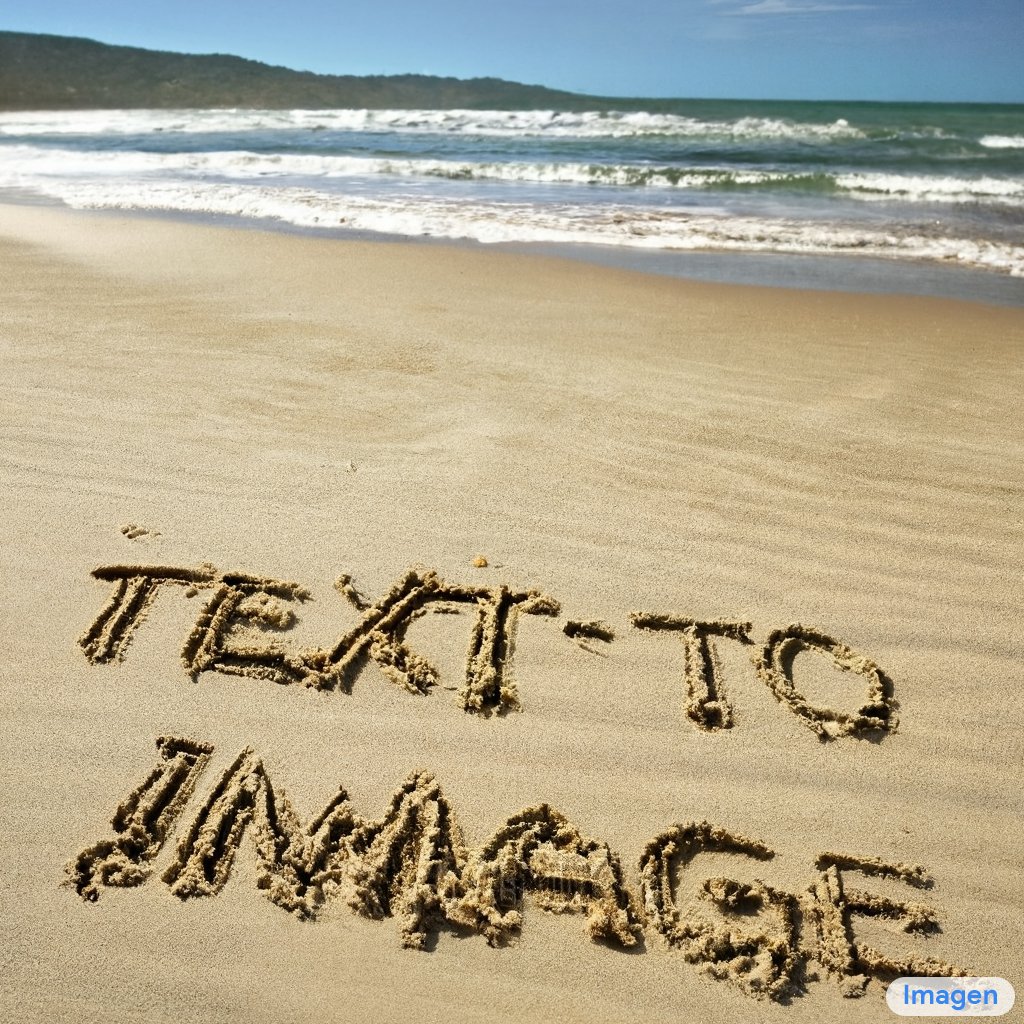

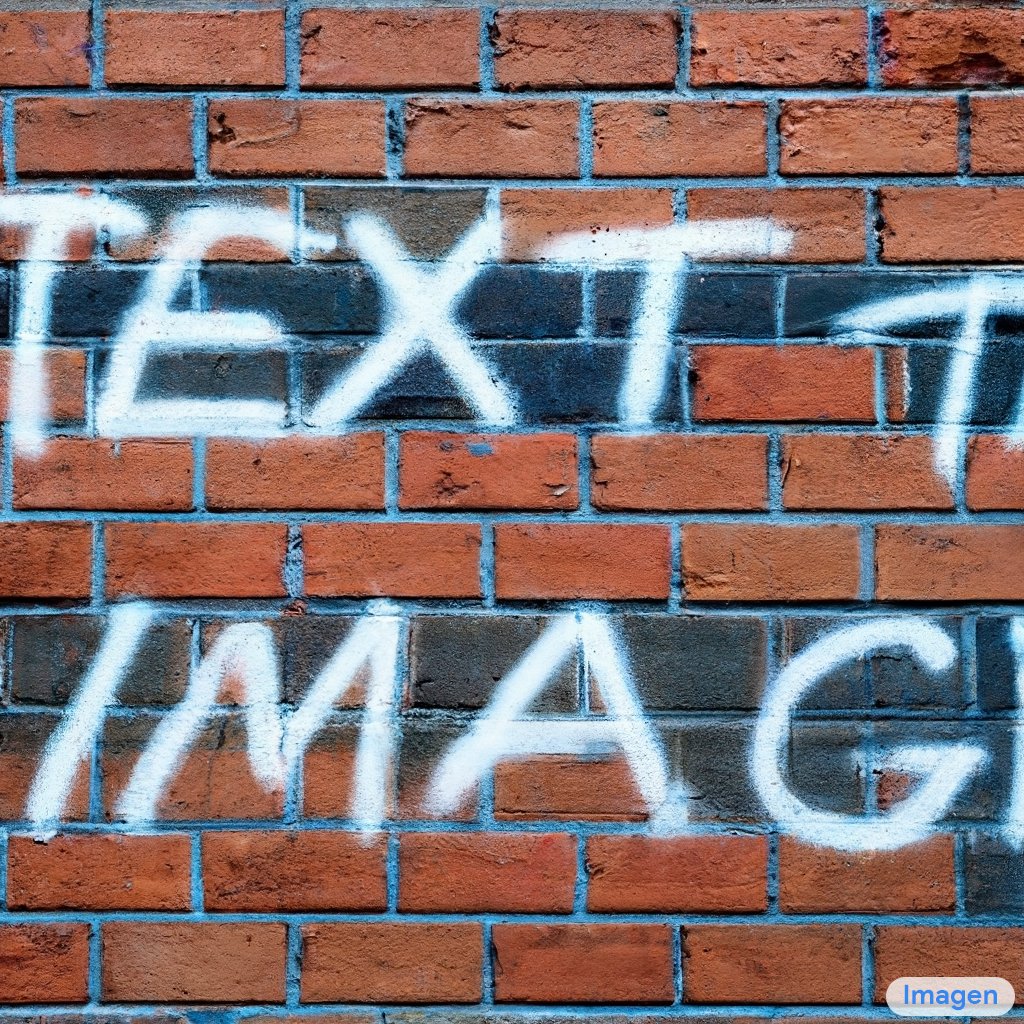

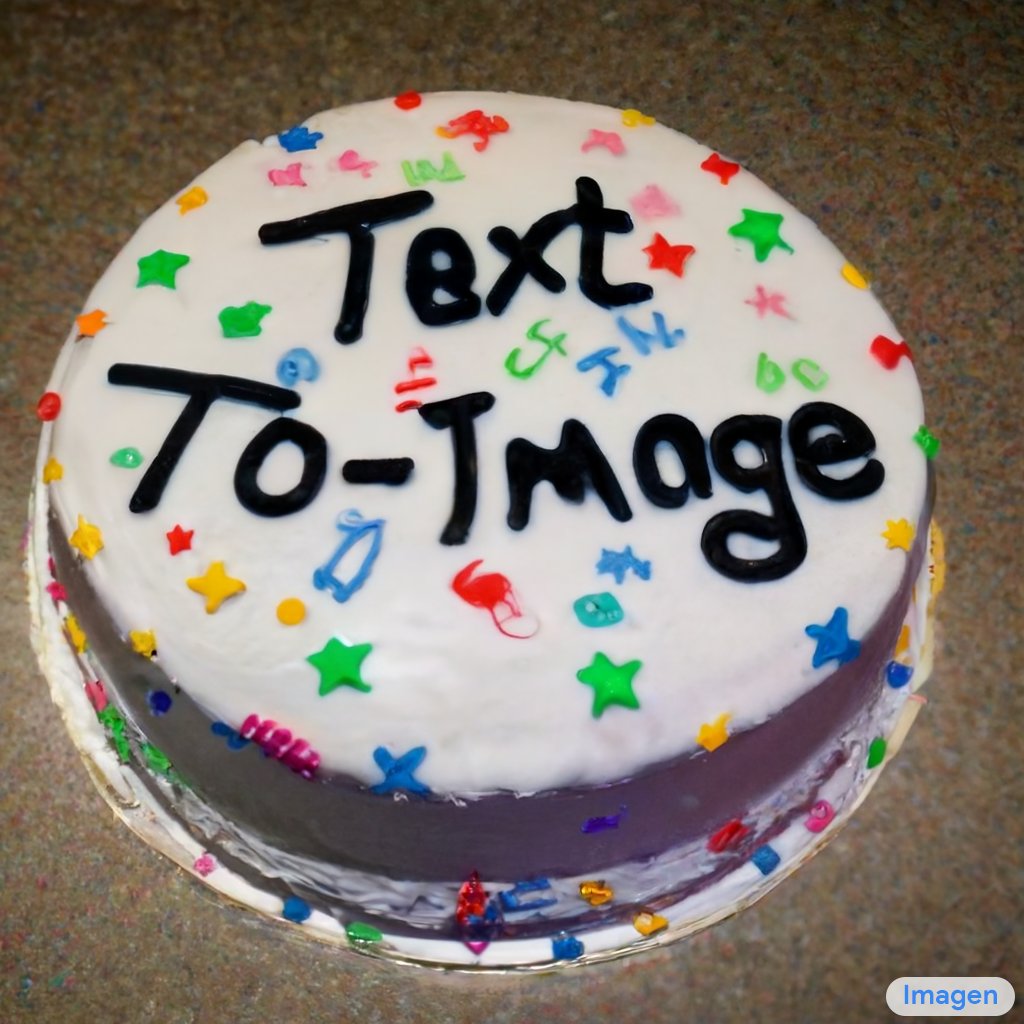

Imagen is also capable of writing text in a variety of

interesting settings. Here are some examples (1 sample picked out of 8 for each prompt).

interesting settings. Here are some examples (1 sample picked out of 8 for each prompt).

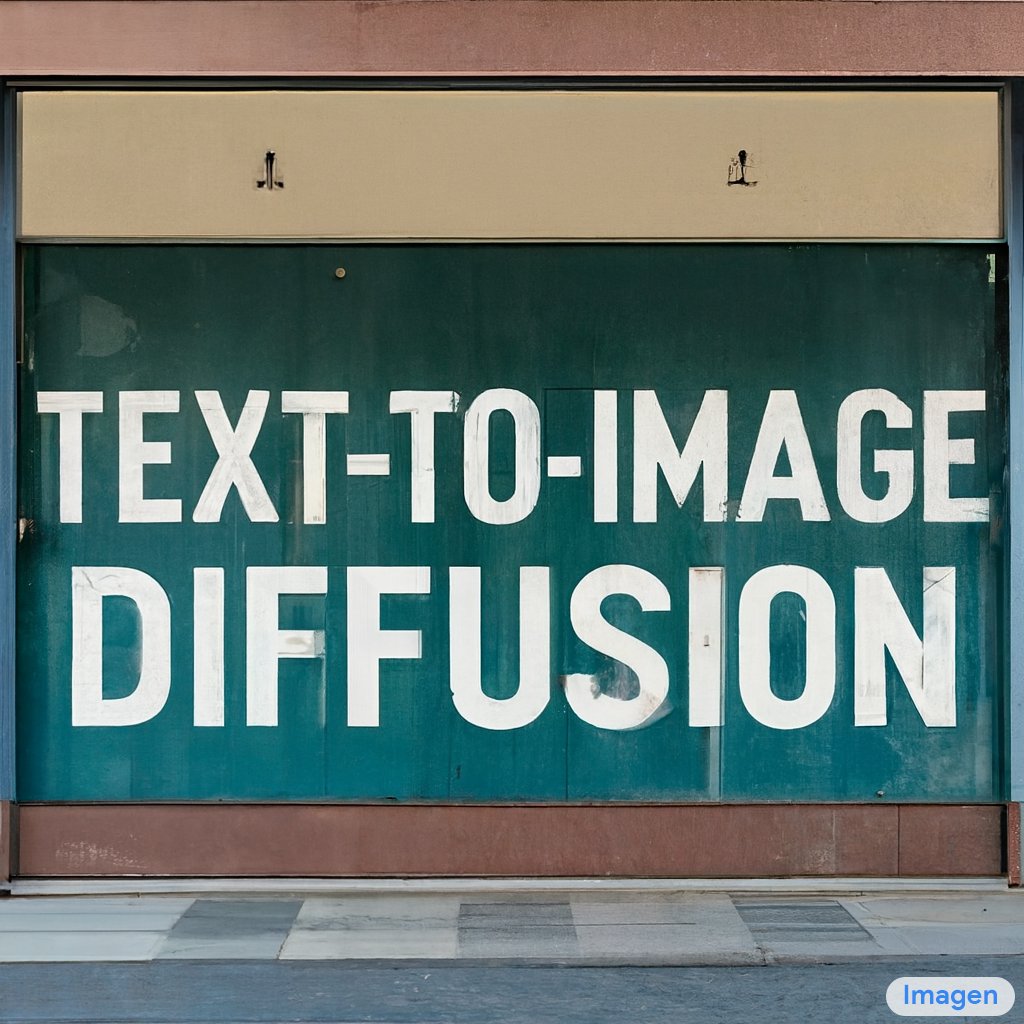

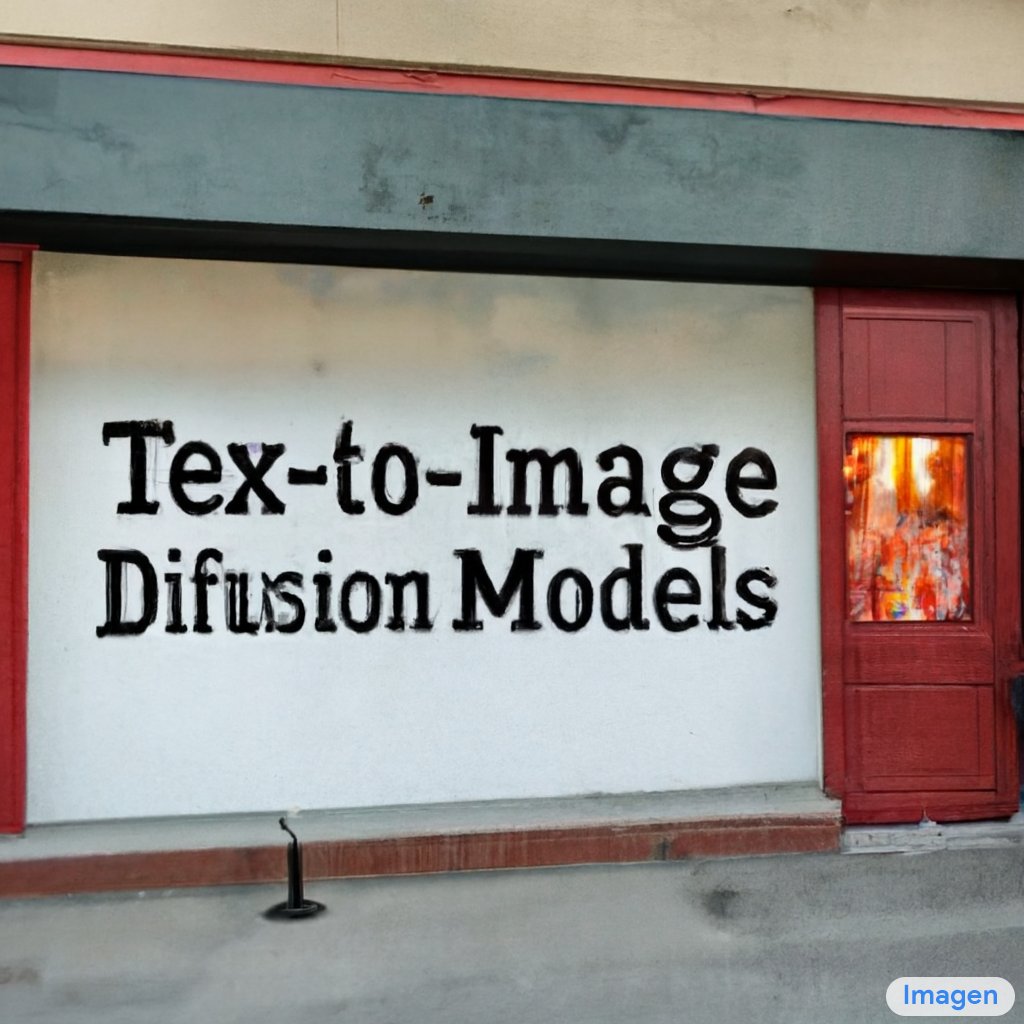

As the text gets longer, it naturally becomes more and more difficult to render correctly, but Imagen is pretty good for complex text prompts.

"Text", "Text-to-Image", "Text-to-Image Diffusion", "Text-to-Image Diffusion Models"

"Text", "Text-to-Image", "Text-to-Image Diffusion", "Text-to-Image Diffusion Models"

The images get worse with very long text renderings, e.g. "Photorealistic Text-to-Image Diffusion Models"

We are still able to find good samples within 8 random generations.

We are still able to find good samples within 8 random generations.

Read on Twitter

Read on Twitter