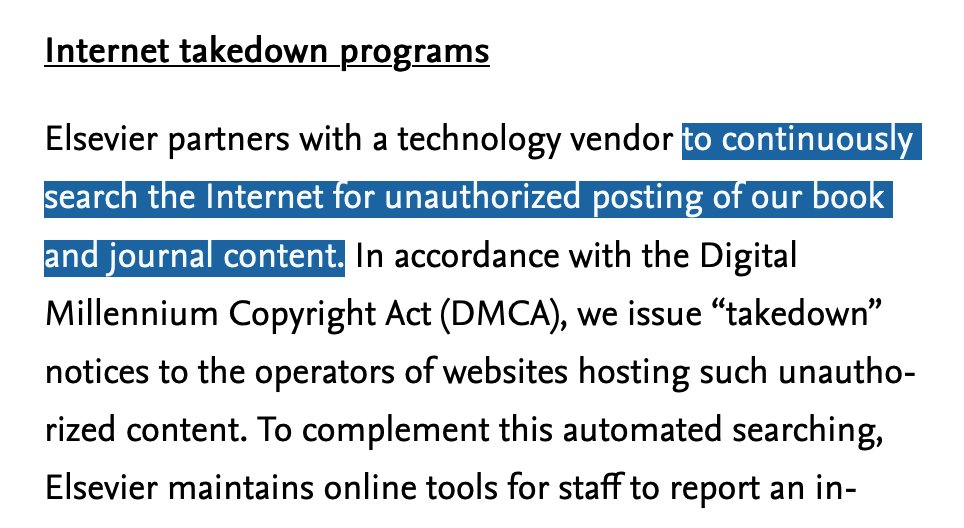

More fun publisher surveillance:

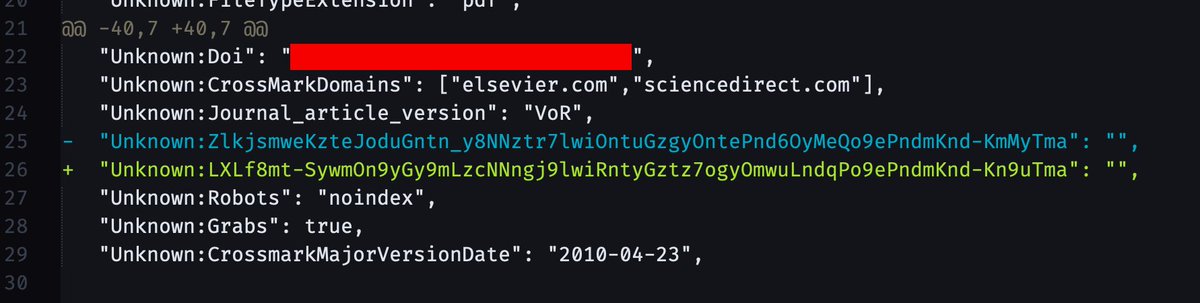

Elsevier embeds a hash in the PDF metadata that is *unique for each time a PDF is downloaded*. This is a diff between the metadata from two downloads of the same paper. Combined with access timestamps, they can uniquely identify the source of any shared PDFs.

You can see for yourself using exiftool.

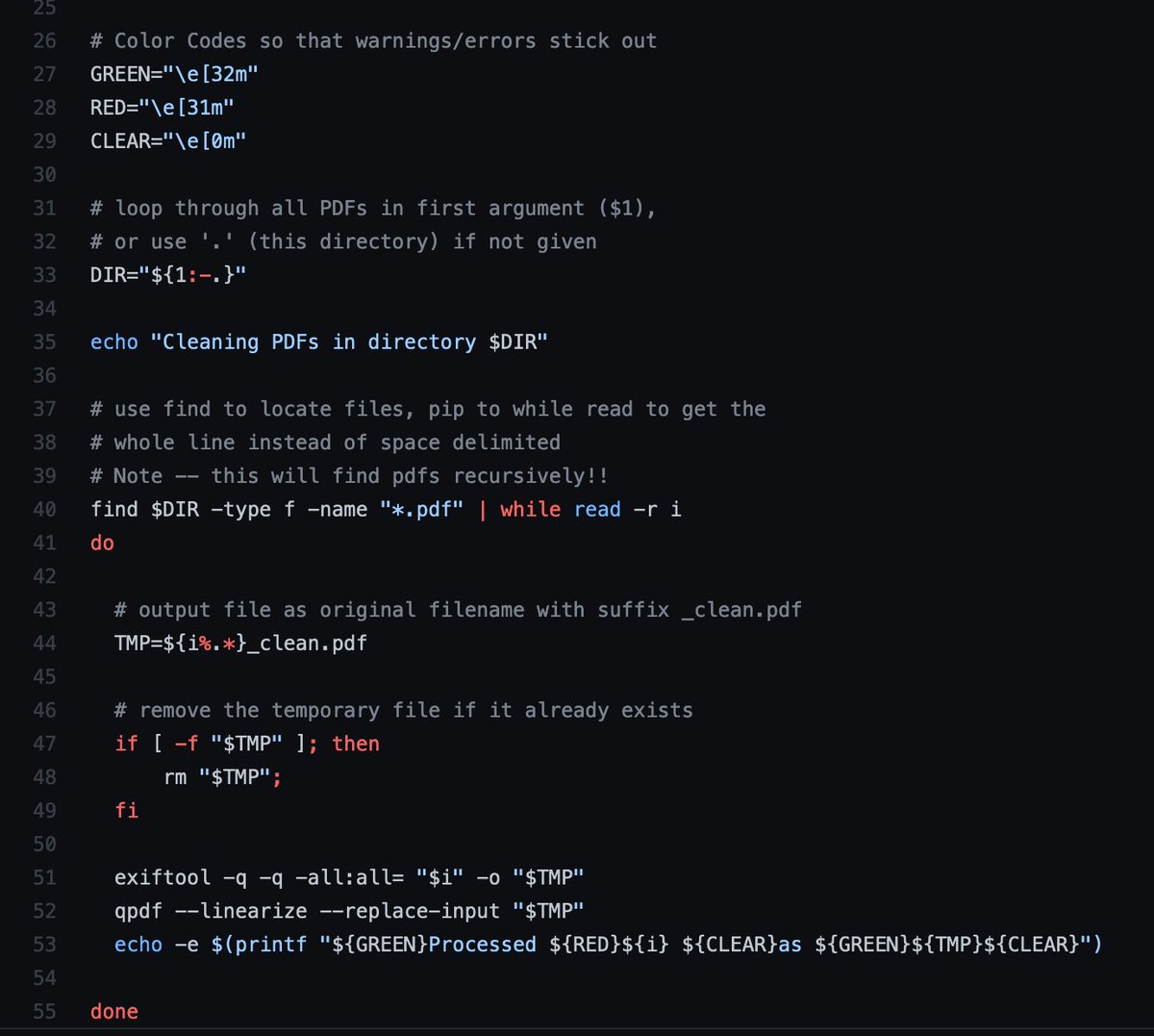
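
A minimal sketch of the same comparison in Python, assuming exiftool is installed and on your PATH. `read_metadata` and `diff_metadata` are illustrative helper names, not part of exiftool; only the `-json` output flag is exiftool's own. The default `ignore` list is an assumption and will likely need extending, since file-system fields such as file dates also differ between any two downloads.

```python
import json
import subprocess


def read_metadata(pdf_path):
    """Return exiftool's view of one file as a dict (needs exiftool on PATH)."""
    result = subprocess.run(
        ["exiftool", "-json", pdf_path],
        capture_output=True, text=True, check=True,
    )
    # exiftool -json prints a JSON array with one object per input file.
    return json.loads(result.stdout)[0]


def diff_metadata(meta_a, meta_b, ignore=("SourceFile", "FileName", "Directory")):
    """Map each tag whose value differs to a (value_in_a, value_in_b) pair."""
    changed = {}
    for tag in sorted(set(meta_a) | set(meta_b)):
        if tag in ignore:
            continue  # differs only because the two copies have different paths
        if meta_a.get(tag) != meta_b.get(tag):
            changed[tag] = (meta_a.get(tag), meta_b.get(tag))
    return changed
```

Usage would look like `diff_metadata(read_metadata("copy1.pdf"), read_metadata("copy2.pdf"))`, where the two file names stand in for two downloads of the same paper. A tag that is missing from one copy shows up with `None` on that side.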

To remove all of the top-level metadata, you can use exiftool and qpdf:

`exiftool -all:all= <path.pdf> -o <output1.pdf>`

`qpdf --linearize <output1.pdf> <output2.pdf>`

To remove *all* metadata, you can use dangerzone or mat2
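
The exiftool and qpdf steps above can be chained from Python. This is a sketch under assumptions: `strip_commands` and `strip_top_level_metadata` are hypothetical helper names, and both tools are expected on your PATH. The second step matters because exiftool's documentation describes its PDF edits as reversible (the old data stays in the file), and rewriting the file with qpdf is what drops it.

```python
import subprocess


def strip_commands(src, intermediate, dst):
    """Build the two argv lists from the thread; nothing is executed here."""
    return [
        # Step 1: blank every tag exiftool can write, saving to a new file.
        ["exiftool", "-all:all=", src, "-o", intermediate],
        # Step 2: rewrite the file with qpdf so the old data is not carried over.
        ["qpdf", "--linearize", intermediate, dst],
    ]


def strip_top_level_metadata(src, intermediate, dst):
    """Run both steps in order, raising if either tool exits non-zero."""
    for argv in strip_commands(src, intermediate, dst):
        subprocess.run(argv, check=True)
```

Passing the arguments as a list instead of a shell string means file names with spaces or quotes need no escaping.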

Also present in the metadata are NISO tags for document status indicating the "final published version" (VoR), and limits on what domains it should be present on. Elsevier scans for PDFs with this metadata, so it's a good idea to strip it any time you're sharing a copy.

Links:

exiftool: https://www.exiftool.org/

qpdf: https://qpdf.sourceforge.io/

dangerzone (GUI, render PDF as images, then re-OCR everything): https://dangerzone.rocks/

mat2 (render PDF as images, don't OCR): https://0xacab.org/jvoisin/mat2

here's a shell script that recursively removes metadata from PDFs in a provided (or current) directory as described above. It's for mac/*nix-like computers, and you need to have qpdf and exiftool installed:

https://gist.github.com/sneakers-the-rat/172e8679b824a3871decd262ed3f59c6
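
For anyone who would rather not use a shell script, the same idea can be sketched in Python. This is not the gist's code, just an illustration under assumptions: `find_pdfs`, `clean_in_place`, and `clean_tree` are hypothetical names, exiftool and qpdf must be on your PATH, and each original file is overwritten, so try it on copies first.

```python
import os
import shutil
import subprocess
import tempfile
from pathlib import Path


def find_pdfs(root="."):
    """Recursively list files under root with a .pdf extension (any case)."""
    return sorted(
        p for p in Path(root).rglob("*")
        if p.is_file() and p.suffix.lower() == ".pdf"
    )


def clean_in_place(pdf):
    """Strip one PDF with exiftool, rewrite it with qpdf, replace the original."""
    with tempfile.TemporaryDirectory() as tmp:
        step1 = os.path.join(tmp, "step1.pdf")
        step2 = os.path.join(tmp, "step2.pdf")
        subprocess.run(["exiftool", "-all:all=", str(pdf), "-o", step1], check=True)
        subprocess.run(["qpdf", "--linearize", step1, step2], check=True)
        # shutil.move also works when the temp dir is on another filesystem.
        shutil.move(step2, str(pdf))


def clean_tree(root="."):
    """Clean every PDF under root; defaults to the current directory."""
    for pdf in find_pdfs(root):
        clean_in_place(pdf)
        print("cleaned", pdf)
```

Calling `clean_tree("papers")` would process a folder named `papers` (a placeholder), and `clean_tree()` the current directory. The intermediate files live in a temporary directory so a failed run does not leave half-cleaned copies next to the originals.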

The metadata appears to be preserved on papers from sci-hub. Since sci-hub works by using harvested academic credentials to download papers, this would allow publishers to identify which accounts need to be closed/secured. https://twitter.com/json_dirs/status/1486135162505072641?t=Wg5XAzujycz79Cop_ap8vQ&s=19

for any security researchers out there, here are a few more "hashes" that a few people have noted do not appear to be random and might be decodable. exiftool apparently squashed the whitespace, so there is a bit more structure to them than in the OP: https://gist.github.com/sneakers-the-rat/6d158eb4c8836880cf03191cb5419c8f

https://twitter.com/kmagnacca/status/1486209676979032064?t=GT8fV5QG-4SGTkLadYpCNQ&s=19

https://twitter.com/SchmiegSophie/status/1486206774159970305?t=GT8fV5QG-4SGTkLadYpCNQ&s=19

this is the way to get the correct tags:

(on mac i needed to install gnu grep with homebrew `brew install grep` and then use `ggrep` )

will follow up with dataset tomorrow. https://twitter.com/horsemankukka/status/1486268962119761924?s=20

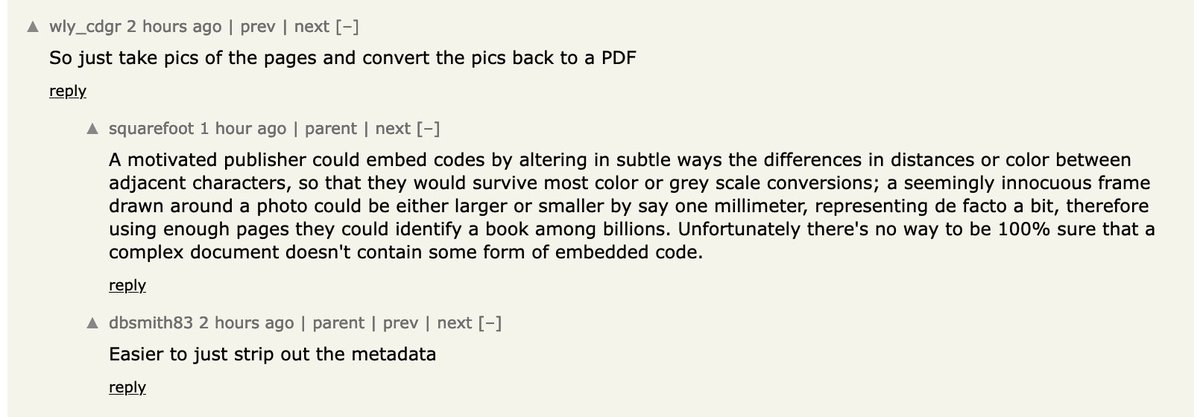

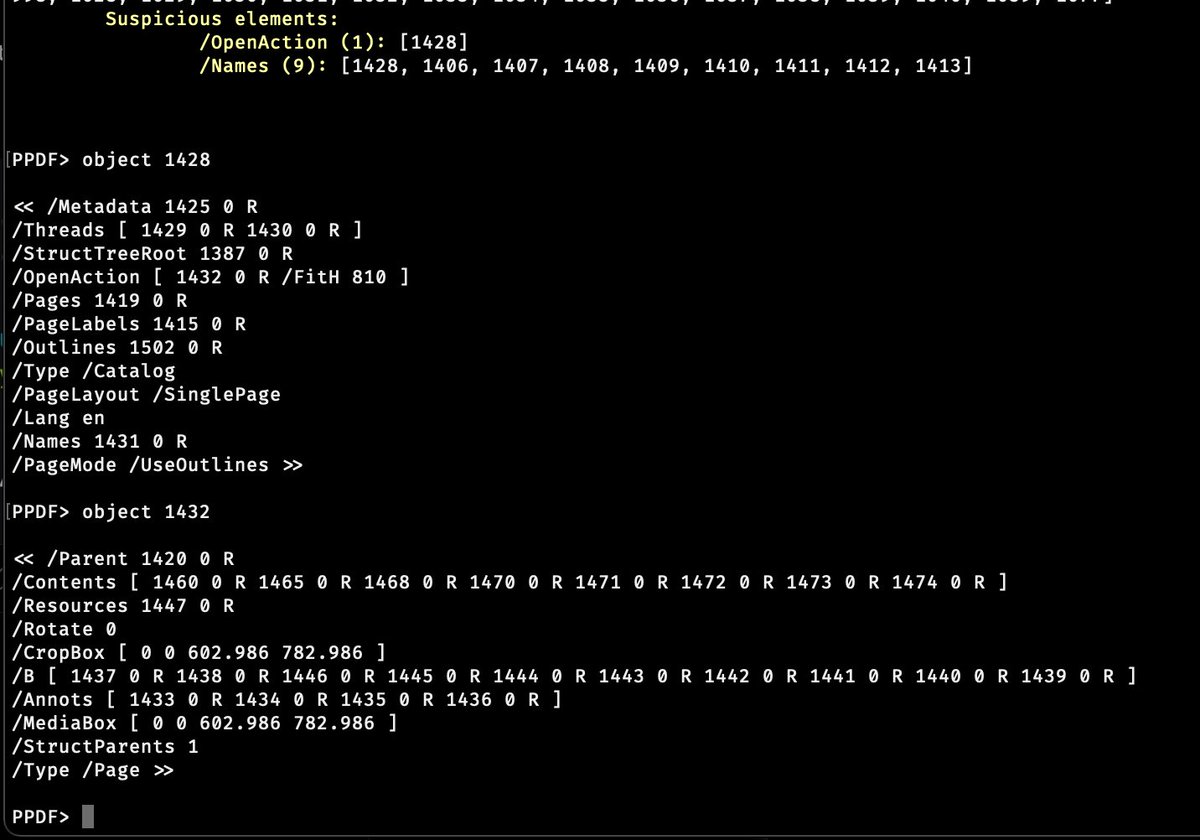

of course there's smarter watermarking, the metadata is notable because you could scan billions of pdfs fast. this comment on HN got me thinking about this PDF /OpenAction I couldn't make sense of earlier, on open, access metadata, so something with sizes and layout...

updated the above gist with correctly extracted tags, and included python code to extract your own, feel free to add them in the comments. since we don't know what they contain yet not adding other metadata. definitely patterned, not a hash, but idk yet. https://twitter.com/json_dirs/status/1486289288115359747?t=QwmBvbOgh2fCkjSOZSh3Fw&s=19

you go to school to study "the brain" and then the next thing you know you're learning how to debug surveillance in PDF rendering to understand how publishers have so contorted the practice of science for profit. how can there be "normal science" when this is normal?
