@cogeotiff vs. @zarr_dev vs. @tiledb !

Last week @_VincentS_ asked if there was any content on comparing COGS vs ZARR.

https://twitter.com/_VincentS_/status/1455933487136100356

Here is some info on internal testing we did at @SatelligenceEO

We use @googlecloud for imagery processing.

We built our own EarthEngine-like tools that do anything we need inside a @kubernetesio cluster and use cloud storage as a data store.

All went fine, until one day it didn't.

After preprocessing satellite data, we stored data as 512x512 chunks, each a single-band non-COG tif.

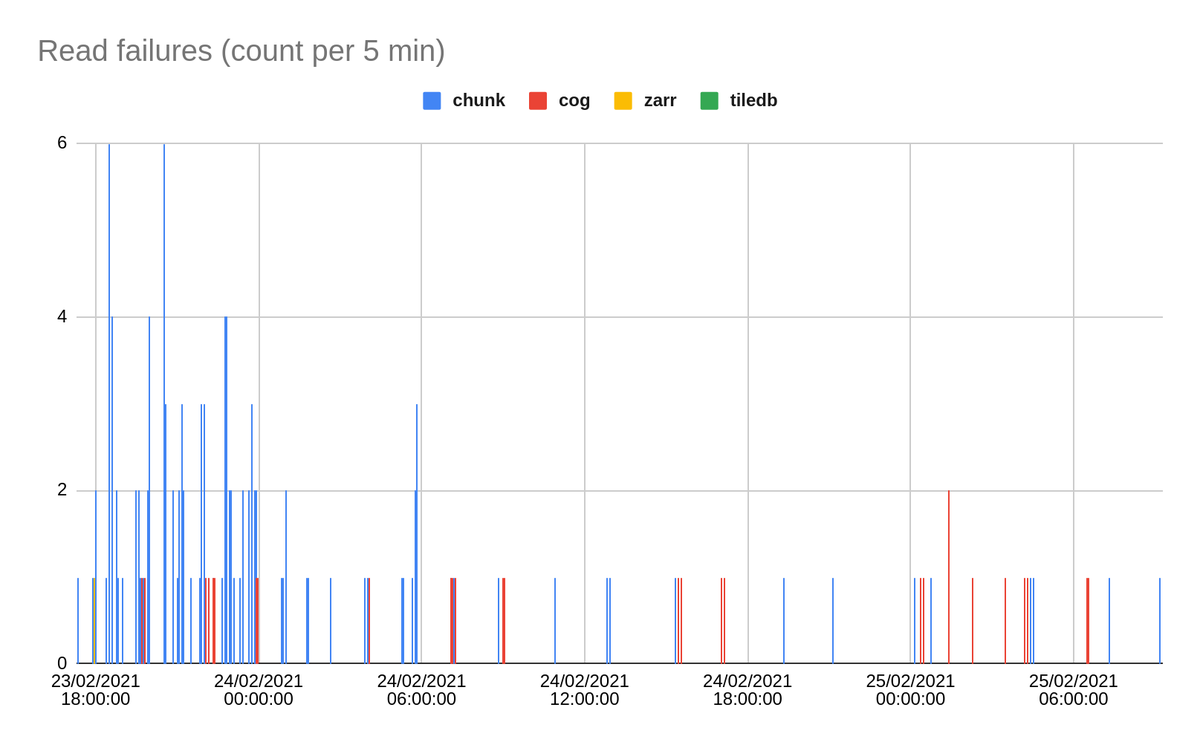

With thousands of jobs running simultaneously, we were seeing lots of GDAL read failures and sometimes also corrupted data when reads were successful.

More load = more errors

We tried to work around this by adding retries, and by reading the same data twice to check whether it was actually read correctly.

-> Performance down the drain.
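A rough sketch of what such a retry-and-read-twice workaround can look like, not our actual code. `read_fn` is a hypothetical zero-argument callable that returns the raw bytes of one read (for example a wrapped GDAL read) and may raise; `max_attempts` and `backoff_s` are made-up parameters.

```python
import hashlib
import time


def read_verified(read_fn, max_attempts=3, backoff_s=0.0):
    """Read the same data twice per attempt; accept it only if both reads agree.

    read_fn is a hypothetical callable returning bytes. It may raise on failure.
    Returns (data, attempt_number).
    """
    last_error = None
    for attempt in range(1, max_attempts + 1):
        try:
            first = read_fn()
            second = read_fn()
        except Exception as exc:  # read failure: remember it and retry
            last_error = exc
        else:
            # Compare digests of both reads; a mismatch means at least one is corrupt.
            if hashlib.sha256(first).digest() == hashlib.sha256(second).digest():
                return first, attempt
            last_error = ValueError("two reads of the same data disagree")
        time.sleep(backoff_s * attempt)  # simple linear backoff (assumption)
    raise RuntimeError(
        f"no consistent read after {max_attempts} attempts"
    ) from last_error
```

Every successful call costs at least two reads, which is where the performance goes. Two reads that are corrupted in exactly the same way would still slip through.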

We thought this might be a Google specific issue, but we could not really find anything on the matter.

Enter Vincent, one of our Twitterless engineers

He compared COG, ZARR and TileDB for performance and errors on a single S2 scene.

The test:

Read random 512x512 data for single band

Compare checksums of original scene and stored data

Log: time, checksum errors and read failures
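The test steps above could be sketched roughly like this. It is an illustration, not Vincent's actual harness: `read_reference` and `read_stored` are hypothetical callables `(col_off, row_off, size) -> bytes` for the original scene and for the stored copy (COG, ZARR or TileDB), and the choice of SHA-256 as checksum is an assumption.

```python
import hashlib
import random
import time

TILE = 512  # window size from the test description


def checksum(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def run_reads(read_reference, read_stored, width, height, n_reads, seed=None):
    """Read random TILE x TILE windows of one band and compare against the reference.

    width and height are the scene size in pixels. Returns one log record per read
    with the read time and a status: "ok", "checksum_error" or "read_failure".
    """
    rng = random.Random(seed)
    log = []
    for _ in range(n_reads):
        # Pick a random window that fits entirely inside the scene.
        col = rng.randrange(0, width - TILE + 1)
        row = rng.randrange(0, height - TILE + 1)
        expected = checksum(read_reference(col, row, TILE))

        # Time only the read of the stored data, not the checksum.
        start = time.perf_counter()
        try:
            data = read_stored(col, row, TILE)
        except Exception:
            data = None
        elapsed = time.perf_counter() - start

        if data is None:
            status = "read_failure"
        elif checksum(data) == expected:
            status = "ok"
        else:
            status = "checksum_error"
        log.append({"col": col, "row": row, "seconds": elapsed, "status": status})
    return log
```

Counting the records per status over time gives the kind of numbers shown in the result graphs.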

Then add some heat by scaling up.

15:00 - 17:30 : scale to 500 jobs (34 vm nodes)

17:30 - 20:50 : scale to 1500 jobs (100 nodes)

20:50 - end : run with 100 jobs (8 nodes)

Time is indicated in the result graphs

RESULTS

Chunks = old fashioned tifs, stored in 512x512 files

For COG, ZARR and TileDB, the single scene is stored in a single file.

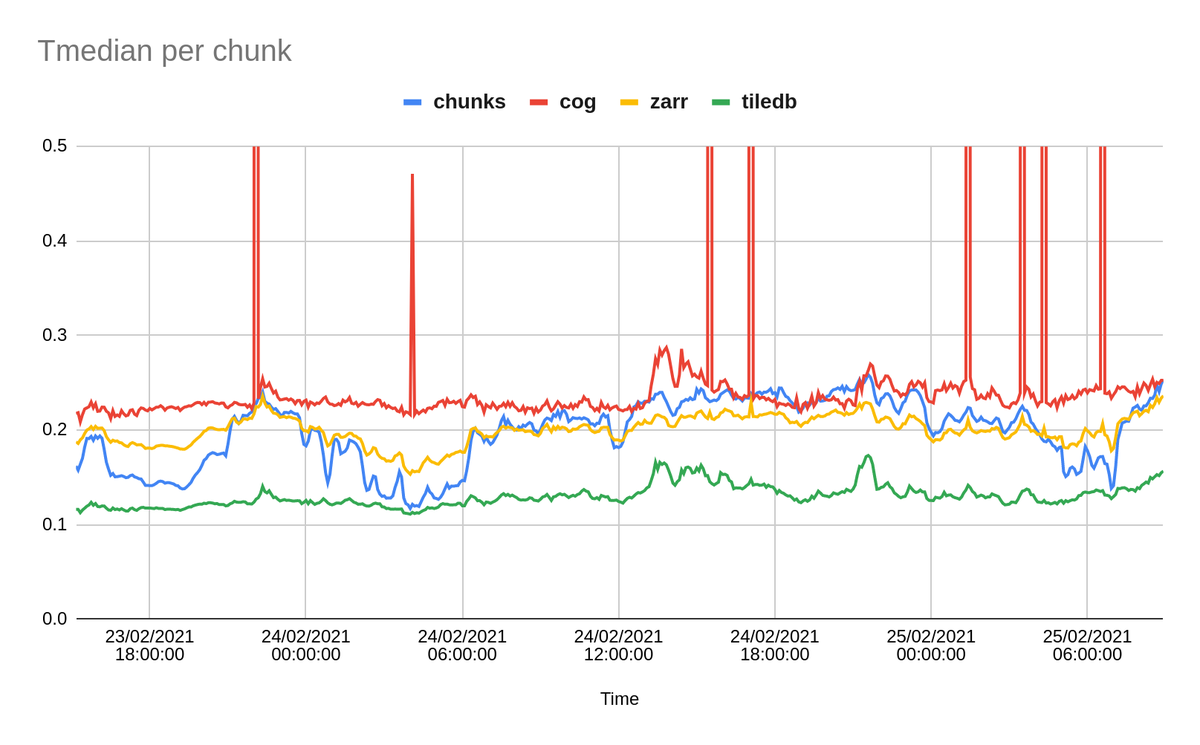

first up: READ TIME

COGS perform the worst. TileDB is both the fastest and the most stable.

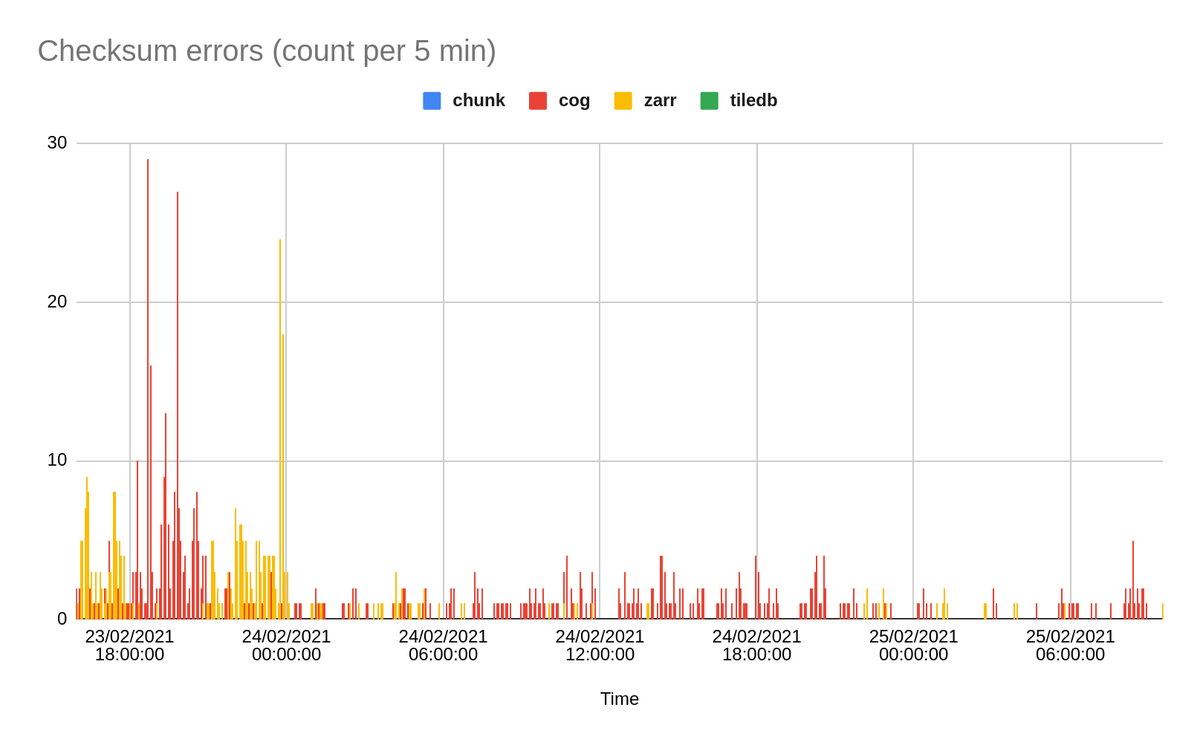

Second: CHECKSUM ERRORS

COG and ZARR both get checksum errors, and more of them under heavy load (between 17:30 and 21:00). TileDB: no errors.

In conclusion we chose TileDB, because it's the most stable, best-performing format for our situation. Additional TileDB benefits include:

* Storing n-dimensional data (so also 4D time-series data)

* Ability to do time travelling, because each write has its own timestamp!
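To make the time-travelling idea concrete, here is a toy illustration in plain Python. It is not TileDB's API or implementation; `VersionedCell` is a made-up class that only shows the concept: every write keeps its timestamp, so a read can ask for the state as of any earlier moment.

```python
import bisect


class VersionedCell:
    """Toy store for one value: every write is kept together with its timestamp."""

    def __init__(self):
        self._timestamps = []  # kept sorted
        self._values = []

    def write(self, timestamp, value):
        # Insert while keeping timestamps sorted; earlier writes are never overwritten.
        i = bisect.bisect_right(self._timestamps, timestamp)
        self._timestamps.insert(i, timestamp)
        self._values.insert(i, value)

    def read(self, at=None):
        """Return the latest value written at or before `at` (default: latest overall)."""
        if at is None:
            i = len(self._timestamps)
        else:
            i = bisect.bisect_right(self._timestamps, at)
        if i == 0:
            raise KeyError("nothing written at or before this timestamp")
        return self._values[i - 1]
```

For example, after `write(10, "v1")` and `write(20, "v2")`, a `read(at=15)` still returns the first version, while a plain `read()` returns the second.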

These results are of course quite specific to our environment, and might turn out differently when doing similar stuff on e.g. AWS, Azure etc!
