The camera-ready version of our SIGIR 2021 paper "When Fair Ranking Meets Uncertain Inference", by myself, @Ritam_Dutt and @bowlinearl, is up on arXiv: https://arxiv.org/abs/2105.02091

A thread 🧵:

The vast majority of theoretical Fair Machine Learning papers assume that the demographic information of the people in the dataset is available to the fair algorithm, which then purports to intervene to achieve some measure of fairness, depending on the use case and legal landscape.

However, in practice, this assumption often does not hold. A very recent line of work in the area points out that not only might race, gender or other demographic data be unavailable, it might also be illegal to ask your users for such data.

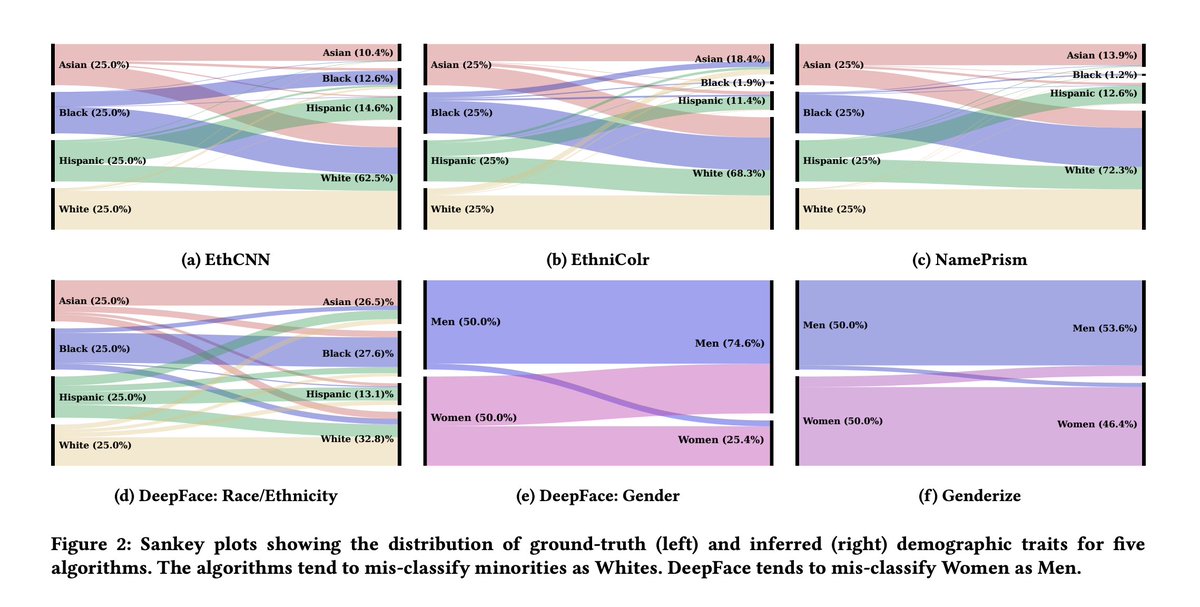

On the other hand, it is also illegal to discriminate, especially in cases like fair housing or credit lending (in the US). In practice, this means that companies are resorting to ad hoc measures to predict race and gender from names, zip codes, images, etc.

This is not even a hypothetical scenario. Despite repeated complaints about its accuracy, the BISG (Bayesian Improved Surname Geocoding) algorithm has become a de facto standard in the lending industry: https://www.autofinancenews.net/allposts/comp-reg/cfpbs-bisg-algorithm-not-designed-to-determine-race-creator-says/
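For readers who have not seen it, the core of a BISG-style proxy is a single Bayes update: a surname-based prior over groups is multiplied by how likely each group is to live in the person's area, then renormalized. Below is a minimal sketch of that update only. The function name, the group labels "A" and "B", and all probabilities are made up for illustration; a real deployment would draw these tables from Census surname and geography data.

```python
def bisg_style_posterior(p_group_given_surname, p_geo_given_group):
    """Combine a surname prior with a geography likelihood via Bayes' rule.

    Assumes surname and geography are independent given the group,
    which is the simplifying assumption behind BISG-style proxies.
    """
    unnormalized = {
        g: p_group_given_surname[g] * p_geo_given_group[g]
        for g in p_group_given_surname
    }
    total = sum(unnormalized.values())
    return {g: v / total for g, v in unnormalized.items()}


# Hypothetical toy numbers, not real Census figures.
surname_prior = {"A": 0.6, "B": 0.4}   # P(group | surname)
geo_likelihood = {"A": 0.1, "B": 0.3}  # P(lives in this area | group)

posterior = bisg_style_posterior(surname_prior, geo_likelihood)
predicted_group = max(posterior, key=posterior.get)
```

Note that the output is a probability, and anything downstream that needs a hard label has to threshold it or take the most likely group. That is exactly where mispredictions enter.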

That brings me to our paper. In this work, we wanted to empirically investigate what actually happens when you attempt to do fair ranking on a dataset by first predicting the protected attributes.

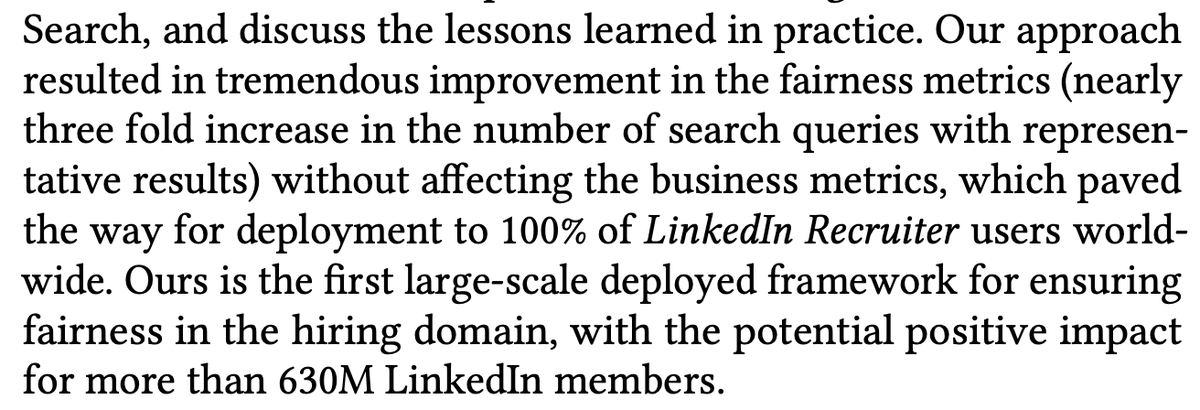

The ranking algorithm we chose is DetConstSort by Geyik et al. at LinkedIn: https://arxiv.org/pdf/1905.01989.pdf In the paper, they note: "Ours is the first large-scale deployed framework for ensuring fairness in the hiring domain."

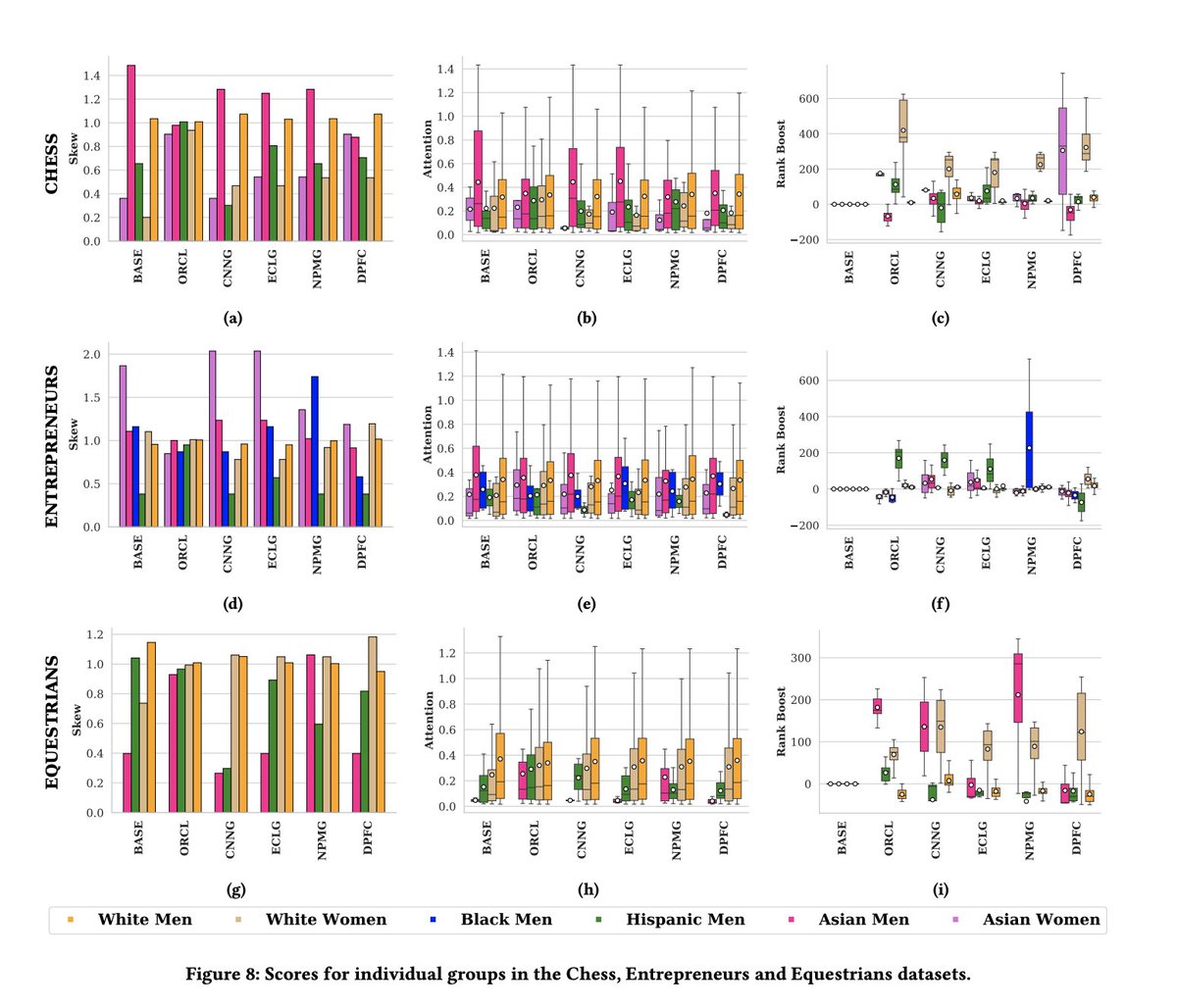

What would happen if someone were to first predict the protected attributes of a list of people and then pass that list to DetConstSort to fairly re-rank it? It turns out that the effects can be pretty insidious, depending on how inaccurate the protected attribute classifiers are.

The prediction errors of these race and gender classifiers were passed on to the ranking algorithm, causing very problematic artifacts: members of minority groups mispredicted as belonging to majority groups were pushed down in the rankings, and vice versa.
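To make the mechanism concrete, here is a toy sketch. It is not DetConstSort and not the setup from the paper: it is a deliberately simplified greedy quota re-ranker, and the candidates, scores, group labels and 50/50 targets are all hypothetical. It only shows how one wrong predicted label changes where a person lands.

```python
import math


def quota_rerank(candidates, group_of, targets):
    """Simplified greedy quota re-ranker (a stand-in, NOT DetConstSort).

    At position k, any group with fewer than floor(targets[g] * k) members
    placed so far gets priority; otherwise the best remaining score wins.
    """
    remaining = sorted(candidates, key=lambda c: -c["score"])
    ranked, counts = [], {g: 0 for g in targets}
    for k in range(1, len(candidates) + 1):
        # Groups below their minimum count that still have candidates left.
        needy = [
            g for g in targets
            if counts[g] < math.floor(targets[g] * k)
            and any(group_of(c) == g for c in remaining)
        ]
        pool = [c for c in remaining if group_of(c) in needy] if needy else remaining
        pick = pool[0]  # remaining is score-sorted, so this is the best in the pool
        remaining.remove(pick)
        ranked.append(pick)
        counts[group_of(pick)] += 1
    return [c["name"] for c in ranked]


# Hypothetical data: "d" is truly in the minority group but predicted as majority.
people = [
    {"name": "a", "score": 0.9, "true": "maj", "pred": "maj"},
    {"name": "b", "score": 0.8, "true": "maj", "pred": "maj"},
    {"name": "c", "score": 0.7, "true": "maj", "pred": "maj"},
    {"name": "d", "score": 0.6, "true": "min", "pred": "maj"},
    {"name": "e", "score": 0.5, "true": "min", "pred": "min"},
    {"name": "f", "score": 0.4, "true": "min", "pred": "min"},
]
targets = {"maj": 0.5, "min": 0.5}

score_only = [c["name"] for c in sorted(people, key=lambda c: -c["score"])]
with_true_labels = quota_rerank(people, lambda c: c["true"], targets)
with_pred_labels = quota_rerank(people, lambda c: c["pred"], targets)
```

With these toy numbers, "d" is ranked second when the true labels are used, but last when the predicted labels are used. That is lower than the fourth place "d" holds in the plain score ordering, with no intervention at all.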

In some cases, this "fair" re-ranking led to outcomes that were objectively worse for minority groups than if one didn't have fairness interventions at all. The effects were also pretty hard to predict, since each misprediction harmed everyone else that was being re-ranked.

We end the paper with some thoughts about a possible solution, akin to what @Airbnb is doing: involve humans instead of algorithmically predicting sensitive attributes, since "perceived" attributes actually dictate how people are treated in real life. https://www.airbnb.com/resources/hosting-homes/a/a-new-way-were-fighting-discrimination-on-airbnb-201

As someone who has had internship experience with fair algorithms in industry at @fiddlerlabs, I can tell you that this is currently an acute problem for data scientists at companies who really want to do better but unknowingly make things worse because of it.
