just a reminder that AHS having ~10 computer servers when you know tens of thousands of people are going to rush your website is reckless and stupid.

especially if you use archaic & outdated website technology behind the scenes.

also: the answer to this isn't privatization.

WhatsApp was doing 1 million simultaneous connections to a single server back in 2011. The very next year they were able to double it to 2m.

That's 9 years ago.

Here's the blog post announcing it:

https://blog.whatsapp.com/1-million-is-so-2011

It may seem like they used some billion-dollar supercomputer to power this, or a fleet of expensive machines, but it wasn't that fancy.

Intel(R) Xeon(R) CPU X5675 @ 3.07GHz

They used two of those.

Each one nowadays goes for ~$100 used on Amazon.

They also had 100GB of RAM.

This may seem very expensive to replicate with newer processors, but it's easily possible to get a similar level of power from a server rental provider.

This is how Amazon is making all their money nowadays. They are the largest provider of virtual computing power.

But nobody needs to feed that beast: there are other, smaller companies that offer just as much core functionality at cheaper rates.

My favourite is @digitalocean

WhatsApp had 24 cores & 100GB of RAM, so let's one-up them and do 40 virtual cores & 160GB of RAM from @digitalocean

Rental fee is $1.78 USD/hr, or about $1,200 USD per month.

After the surge is over, this kind of capacity is overkill, so it's easily possible to scale down.

It just takes a couple button presses in their control panel and a restart of the virtual machine.
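
To put rough numbers on that, here's a back-of-the-envelope sketch in Python. The $1.78/hr rate is the one quoted above; the 672-hour monthly billing cap and the three-day surge are my assumptions for illustration, so check the provider's current pricing before trusting the output.

```python
HOURLY_RATE_USD = 1.78      # from the thread: 40 virtual cores / 160GB of RAM
MONTHLY_CAP_HOURS = 672     # ASSUMPTION: hourly billing stops counting after 28 days' worth of hours


def rental_cost(hours: float,
                hourly_rate: float = HOURLY_RATE_USD,
                cap_hours: int = MONTHLY_CAP_HOURS) -> float:
    """Cost in USD of keeping the big machine running for `hours` within one month."""
    billable_hours = min(hours, cap_hours)
    return round(billable_hours * hourly_rate, 2)


if __name__ == "__main__":
    print("full month: ", rental_cost(24 * 30))
    print("3-day surge:", rental_cost(72))
```

Under those assumptions a full month lands near the $1,200 figure, while renting the big machine only for a three-day surge costs closer to $130.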

Obviously, hardware isn't the only factor here; it's also what someone decides to put on the hardware: the software that's funnelling all the 1s and 0s to the processor.

WhatsApp used a popular language for this kind of high volume called Erlang.

What is more popular nowadays (and my personal favourite) is called Go (@golang). It was invented at Google and it powers parts of YouTube.

Dozens of other big name sites & apps started using it as well, like Lyft, Twitter, Netflix, Dropbox, etc.

I can't explain why without getting way too technical, but it's just the best way to get software to handle thousands or hundreds of thousands of requests/second.

Or, like this guy found out, a million WebSocket connections at once: https://www.freecodecamp.org/news/million-websockets-and-go-cc58418460bb/
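
For a rough feel of what "lots of requests at once" means, here's a tiny sketch. It's in Python with asyncio rather than Go or Erlang, purely as a stand-in, and each "request" is just a simulated wait, so treat it as an illustration of the idea and not a benchmark. The names `handle_request` and `surge` are made up for this example.

```python
import asyncio


async def handle_request(stats: dict, delay: float) -> None:
    """Pretend to serve one request that mostly waits on the network."""
    stats["in_flight"] += 1
    stats["peak"] = max(stats["peak"], stats["in_flight"])
    await asyncio.sleep(delay)   # stand-in for waiting on a network or database call
    stats["in_flight"] -= 1
    stats["handled"] += 1


async def surge(n_requests: int, delay: float = 0.05) -> dict:
    """Start n_requests at the same time and wait for all of them to finish."""
    stats = {"in_flight": 0, "peak": 0, "handled": 0}
    await asyncio.gather(*(handle_request(stats, delay) for _ in range(n_requests)))
    return stats


if __name__ == "__main__":
    # One process, one thread, ten thousand overlapping "requests".
    print(asyncio.run(surge(10_000)))
```

The point is only that waiting requests are cheap: while one is waiting, the same process moves on to the next one, so it never needs a thread or a server per visitor.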

There is of course the connection with legacy infrastructure & data contained in those systems.

The obvious solution there is to cache or stage the data in a way that it can be accessed relentlessly from this monster of a machine, without taking down the legacy infrastructure.

It's also important not to rely on any channels of communication between the legacy infrastructure and this surge capacity system.

That doesn't mean it can't happen, it just can't happen for every user who's visiting.

e.g. does the MyAlberta Digital ID system really need to speak to old, slow, unoptimized servers at the actual Service Alberta central registry to validate the credentials?

No. That information is static and it should be cached on a server that can handle the traffic.
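
A minimal sketch of that caching idea, in Python. Everything here is hypothetical: `CachedLookup`, the one-hour expiry, and the fake `slow_lookup` function stand in for whatever the real registry call would be. The only point is that repeat lookups get answered from memory and the old servers are bothered once per record, not once per visitor.

```python
import time
from typing import Callable, Dict, Tuple


class CachedLookup:
    """Answer repeat lookups from memory; only hit the slow system on a miss."""

    def __init__(self, slow_lookup: Callable[[str], str], ttl_seconds: float = 3600.0):
        self._slow_lookup = slow_lookup   # hypothetical call into the legacy registry
        self._ttl = ttl_seconds           # ASSUMPTION: data may be up to an hour stale
        self._cache: Dict[str, Tuple[float, str]] = {}
        self.legacy_calls = 0             # how often the old servers were actually contacted

    def get(self, key: str) -> str:
        now = time.monotonic()
        hit = self._cache.get(key)
        if hit is not None and now - hit[0] < self._ttl:
            return hit[1]                 # fresh enough: served from memory
        self.legacy_calls += 1
        value = self._slow_lookup(key)
        self._cache[key] = (now, value)
        return value


if __name__ == "__main__":
    registry = CachedLookup(lambda user_id: f"record-for-{user_id}")
    for _ in range(10_000):
        registry.get("user-123")
    print("legacy calls:", registry.legacy_calls)
```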

Once this absolute unit of a virtual machine is done handling, say, a request to book someone's vaccine, then and only then should it pass over information to legacy systems.

Transfers from this god-like mercenary of a machine to the legacy systems should be sent in batches of hundreds or thousands of people to avoid crashing those systems.

It's very inexpensive to send 2MB of data once, compared to sending 10KB of data 10,000 times.
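
Here's roughly what batching looks like, as a Python sketch. `Batcher`, the batch size of 1,000, and the `send_batch` callback are all made up for illustration; in real life `send_batch` would be whatever bulk upload the legacy system accepts.

```python
from typing import Callable, List


class Batcher:
    """Collect finished bookings and hand them to the legacy system in bulk."""

    def __init__(self, send_batch: Callable[[List[dict]], None], batch_size: int = 1000):
        self._send_batch = send_batch   # hypothetical bulk upload into the legacy system
        self._batch_size = batch_size
        self._pending: List[dict] = []

    def add(self, record: dict) -> None:
        """Queue one record; send everything queued once the batch is full."""
        self._pending.append(record)
        if len(self._pending) >= self._batch_size:
            self.flush()

    def flush(self) -> None:
        """Send whatever is queued, e.g. on a timer or at shutdown."""
        if self._pending:
            self._send_batch(self._pending)
            self._pending = []


if __name__ == "__main__":
    sent_sizes = []
    batcher = Batcher(lambda batch: sent_sizes.append(len(batch)))
    for booking_id in range(2500):
        batcher.add({"booking_id": booking_id})
    batcher.flush()
    print(sent_sizes)
```

In this example 2,500 bookings reach the old system as three transfers, not 2,500 separate ones.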

The last thing I want to add to this rant nobody asked for is, it's actually a bad idea to put all your eggs in one basket.

It's possible and much better to split up the system into dozens of smaller pieces.

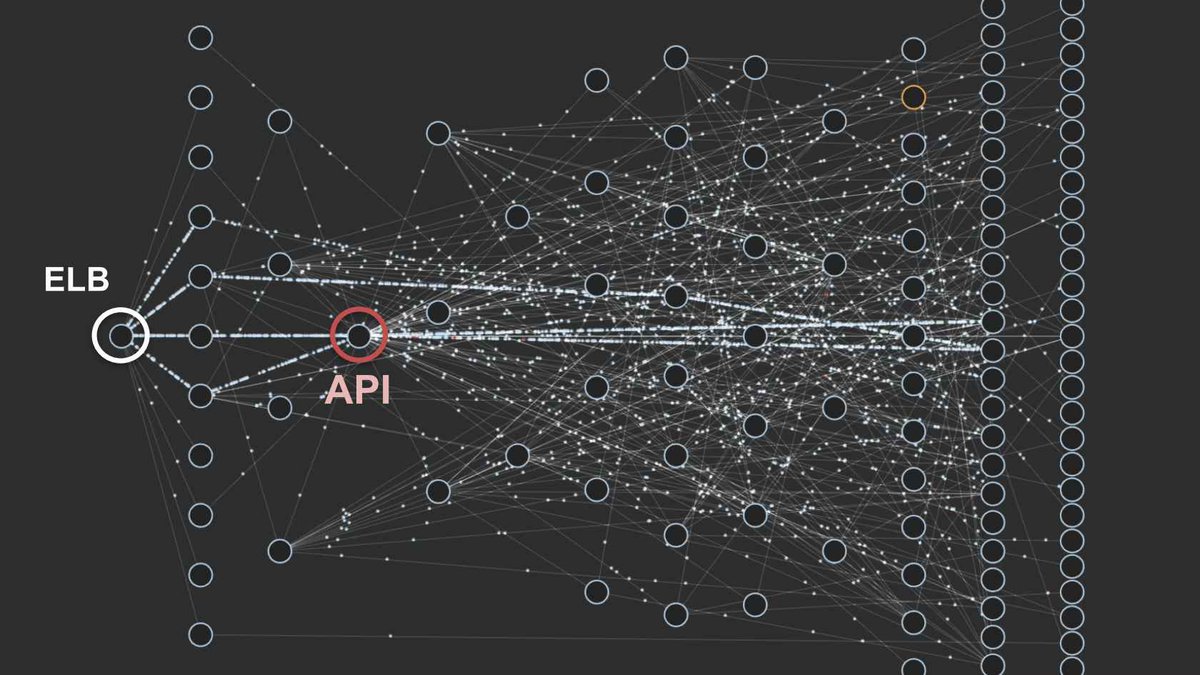

Just look at a top-level view of Netflix's service. This is an actual picture from their monitoring system.

There's no single point of failure.

They actually created a tool called "Chaos Monkey" that purposely kills parts of their system just to test how robust and reliable each component is.

And even more important, how the other remaining systems respond to navigate around the problematic server.
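
A toy version of that idea, as a Python sketch. This is not how Netflix's tool works internally; `Replica`, `route`, and `unleash_monkey` are names I made up to show the principle: kill one copy at random and check that requests still get answered by the survivors.

```python
import random
from typing import List


class Replica:
    """One copy of a service that can be switched off to simulate a failure."""

    def __init__(self, name: str):
        self.name = name
        self.alive = True

    def handle(self, request: str) -> str:
        if not self.alive:
            raise ConnectionError(f"{self.name} is down")
        return f"{self.name} handled {request}"


def route(replicas: List[Replica], request: str) -> str:
    """Try each copy in turn and skip any that fail."""
    for replica in replicas:
        try:
            return replica.handle(request)
        except ConnectionError:
            continue
    raise RuntimeError("every replica is down")


def unleash_monkey(replicas: List[Replica], rng: random.Random) -> Replica:
    """Kill one randomly chosen copy, a much simplified chaos test."""
    victim = rng.choice(replicas)
    victim.alive = False
    return victim


if __name__ == "__main__":
    pool = [Replica(f"booking-{i}") for i in range(3)]
    victim = unleash_monkey(pool, random.Random())
    print("killed:", victim.name)
    print(route(pool, "book-vaccine"))
```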

The old-fashioned way is to keep things in a monolithic system, and maybe have a few auxiliary machines to help with load.

This is how slow-moving gov't and big companies still do things. Old. Gross. Slow. Prone to failure. Monolithic.

What the world needs is more microservices.

As cool as it is knowing I will always have access to cat videos and discount items from Amazon, it would be great if people could have this same level of reliability from critically important things like applying for financial benefits and booking their vaccine appointment.

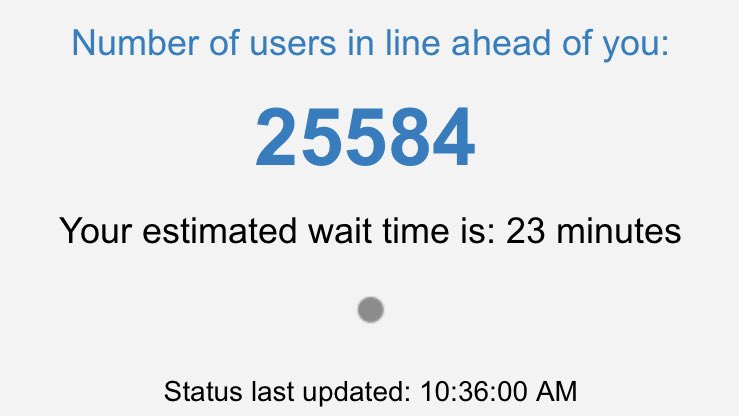

We have the technology and systems available, so we don't need a virtual line.

But do we actually have the will in government to fix this?

Because this is embarrassing:

it's dropped a bit since then:

queue is empty at the moment

link goes straight to taking in information

queue is still empty

I didn't get any downtime alerts on my monitoring tool, so it seems like today was a success for AHS staying online

someone asked me where I got ~10 servers from, and it was when Shandro was saying they added additional network capacity during the crash in Feb.

I'm presuming they added a few more servers since then.

. @AHS_media, Shandro gave you a blank cheque to provide infrastructure to ensure no delays & to ensure no downtime.

Thanks for figuring out the downtime part for today, but a queue to me still looks like a symptom of a bigger problem.

We can do better, so why not?
