1/ My notes from #applyconf ✍️ It covers:

- Feature Stores

- Evolution of MLOps

- Scaling ML Platforms

- Novel Techniques

- Impactful Internal Projects

Thanks @TectonAI and partners for organizing this excellent ML and #DataEngineering event. Enjoy! 👇 http://jameskle.com/writes/tecton-apply2021

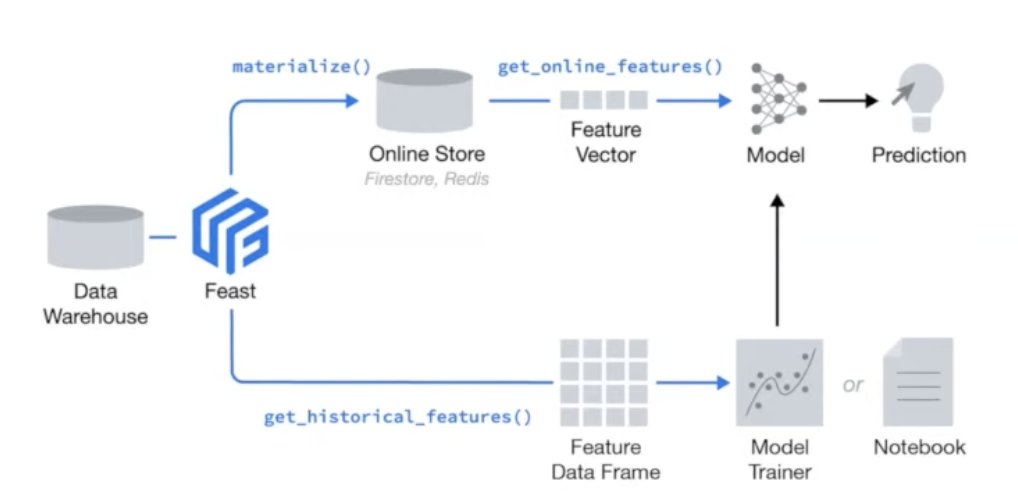

2/ @willpienaar on @feast_dev 0.10:

It is the fastest way to serve features in production:

- Zero configuration

- Local mode

- No infrastructure

- Extensible
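
To make "zero configuration" and "local mode" concrete, here is a minimal stdlib sketch of what a local online feature store does. It materializes the latest feature value per entity into an in-process store, then looks values up at serving time. This is not Feast's API: `LocalOnlineStore`, `materialize`, `get_online`, and the driver data are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass, field
from datetime import datetime
from typing import Any, Dict, List, Tuple


@dataclass
class LocalOnlineStore:
    """Hypothetical in-process online store: no servers, no config files."""
    # entity_id -> {feature_name: (event_time, value)}
    _rows: Dict[str, Dict[str, Tuple[datetime, Any]]] = field(default_factory=dict)

    def materialize(self, records: List[dict]) -> None:
        """Load offline records, keeping only the latest value per entity/feature."""
        for rec in records:
            row = self._rows.setdefault(rec["entity_id"], {})
            for name, value in rec["features"].items():
                current = row.get(name)
                if current is None or rec["event_time"] >= current[0]:
                    row[name] = (rec["event_time"], value)

    def get_online(self, entity_id: str, features: List[str]) -> Dict[str, Any]:
        """Serving-time lookup; features that were never materialized come back as None."""
        row = self._rows.get(entity_id, {})
        return {name: (row[name][1] if name in row else None) for name in features}


store = LocalOnlineStore()
store.materialize([
    {"entity_id": "driver_1", "event_time": datetime(2021, 4, 1), "features": {"trips_today": 3}},
    {"entity_id": "driver_1", "event_time": datetime(2021, 4, 2), "features": {"trips_today": 7}},
])
print(store.get_online("driver_1", ["trips_today", "rating"]))
# {'trips_today': 7, 'rating': None}
```

The whole "deployment" is a Python object, which is the point of a local mode. A real setup would swap the dict for a managed online store without changing the lookup call sites.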

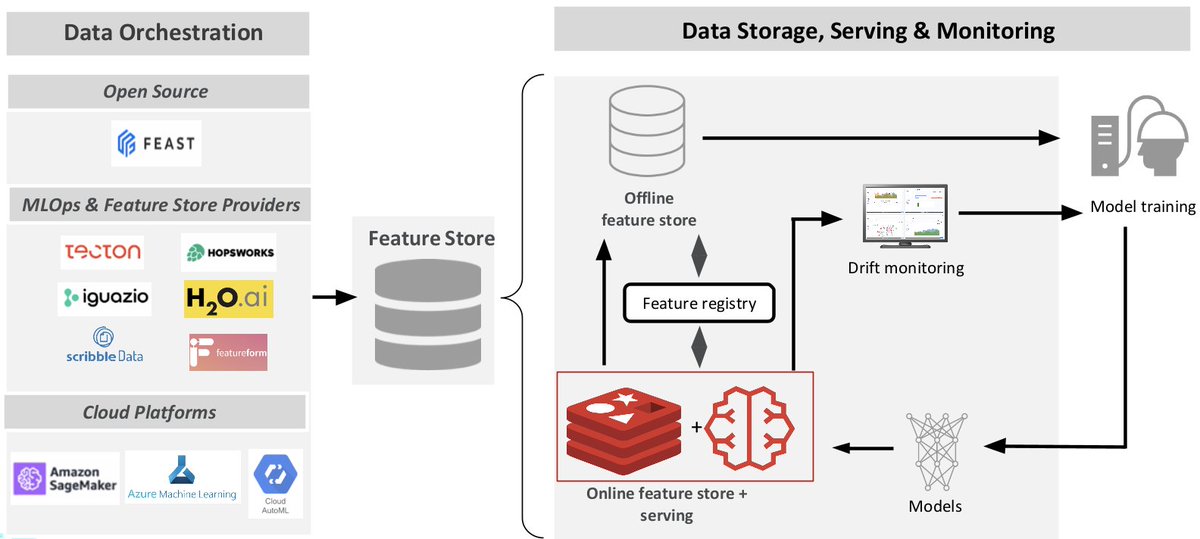

3/ @taimurrashid on @RedisLabs as an online feature store:

As an in-memory open-source database, Redis supports a variety of high-performance operational, analytical, and hybrid use cases. It can also serve as the online feature store that powers ML models, or as an AI serving and monitoring platform.
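
A common pattern for using Redis as an online feature store is to keep one hash per entity key and expire stale entries with a TTL. The sketch below mimics that layout with a plain dict so it runs without a Redis server. The `FakeRedisHashes` class, the `feature:{entity}:{id}` key format, and the TTL value are assumptions for illustration. In a real deployment the methods would correspond roughly to the Redis commands `HSET`, `EXPIRE`, and `HMGET`.

```python
import time
from typing import Callable, Dict, List, Optional


class FakeRedisHashes:
    """Dict-backed stand-in for Redis hashes with per-key expiry (illustration only)."""

    def __init__(self, clock: Callable[[], float] = time.monotonic) -> None:
        self._data: Dict[str, Dict[str, str]] = {}
        self._expires_at: Dict[str, float] = {}
        self._clock = clock

    def _evict_if_expired(self, key: str) -> None:
        # Expired keys are dropped lazily, the next time they are touched.
        deadline = self._expires_at.get(key)
        if deadline is not None and self._clock() >= deadline:
            self._data.pop(key, None)
            self._expires_at.pop(key, None)

    def hset(self, key: str, mapping: Dict[str, str]) -> None:
        """Roughly HSET key field value [field value ...]."""
        self._evict_if_expired(key)
        self._data.setdefault(key, {}).update(mapping)

    def expire(self, key: str, seconds: float) -> None:
        """Roughly EXPIRE key seconds; a missing key is left alone."""
        self._evict_if_expired(key)
        if key in self._data:
            self._expires_at[key] = self._clock() + seconds

    def hmget(self, key: str, fields: List[str]) -> List[Optional[str]]:
        """Roughly HMGET key field [field ...]; missing fields come back as None."""
        self._evict_if_expired(key)
        row = self._data.get(key, {})
        return [row.get(f) for f in fields]


def feature_key(entity: str, entity_id: str) -> str:
    # Hypothetical key scheme: one hash per entity instance.
    return f"feature:{entity}:{entity_id}"


now = [0.0]  # controllable clock so the TTL can be shown without sleeping
r = FakeRedisHashes(clock=lambda: now[0])
key = feature_key("user", "42")
r.hset(key, {"clicks_1h": "17", "avg_basket": "23.5"})
r.expire(key, 3600)
print(r.hmget(key, ["clicks_1h", "avg_basket", "missing"]))  # ['17', '23.5', None]
now[0] = 3601.0
print(r.hmget(key, ["clicks_1h"]))  # [None]
```

A single hash per entity means one round trip fetches every feature a model needs. The TTL keeps features that have stopped updating from being served indefinitely.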

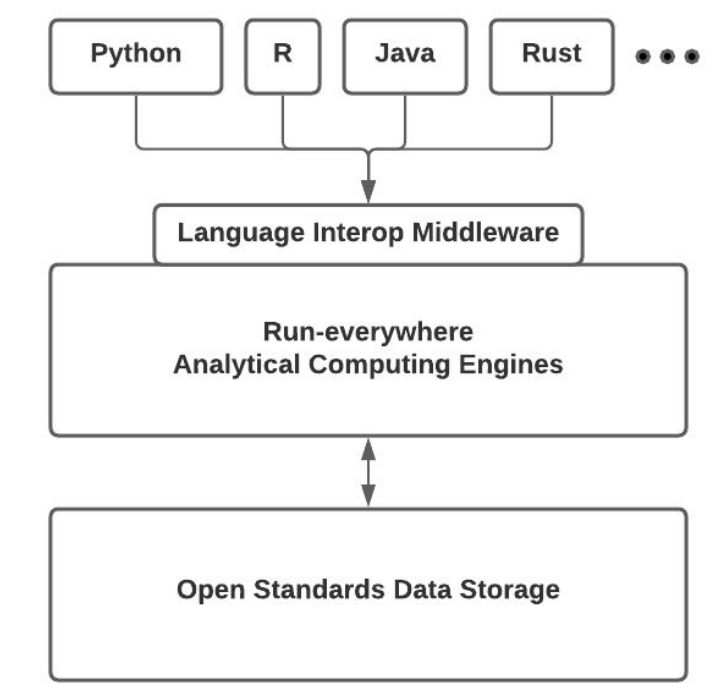

4/ @wesmckinn on his vision of a #computing utopia:

- Programming languages are treated as first-class citizens

- Compute engines become more portable and usable

- Data is accessed efficiently and productively wherever you need it

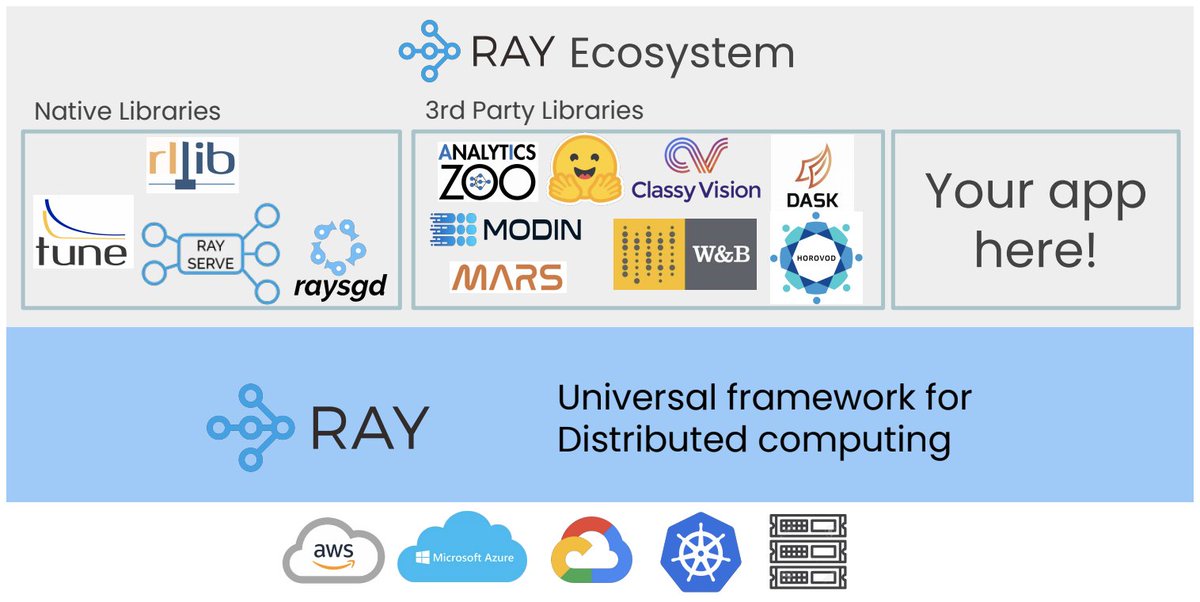

5/ @waleedk on 3rd-generation production ML architecture:

- Complete programmability without any pipeline

- Programming language as the interface

- Focus on libraries and open up the power of ML to more users

6/ @chipro on #OnlineLearning in 🇨🇳 vs. 🇺🇸:

- Chinese companies are further along in adopting this approach

- Chinese companies are younger, so they have less legacy infrastructure holding them back from adopting new ways of doing things

- China has a bigger national effort to win the AI race
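
For readers new to the term: online learning means updating a model incrementally as new examples stream in, instead of retraining from scratch on a fixed batch. Below is a minimal sketch, assuming a logistic-regression model trained with plain stochastic gradient descent on one example at a time. The learning rate, feature name, and toy stream are arbitrary choices for illustration.

```python
import math
from typing import Dict


class OnlineLogisticRegression:
    """Logistic regression updated one example at a time with SGD."""

    def __init__(self, lr: float = 0.1) -> None:
        self.lr = lr
        self.weights: Dict[str, float] = {}
        self.bias = 0.0

    def predict_proba(self, x: Dict[str, float]) -> float:
        z = self.bias + sum(self.weights.get(k, 0.0) * v for k, v in x.items())
        return 1.0 / (1.0 + math.exp(-z))

    def update(self, x: Dict[str, float], y: int) -> None:
        # Gradient of log loss w.r.t. the logit is (p - y); apply it immediately, no batch.
        error = self.predict_proba(x) - y
        self.bias -= self.lr * error
        for k, v in x.items():
            self.weights[k] = self.weights.get(k, 0.0) - self.lr * error * v


model = OnlineLogisticRegression(lr=0.5)
stream = [({"clicked_similar": 1.0}, 1), ({"clicked_similar": 0.0}, 0)] * 50
for features, label in stream:
    model.update(features, label)  # the model changes as each event arrives
print(round(model.predict_proba({"clicked_similar": 1.0}), 2))
```

In production the same `update` call would sit behind a stream consumer. The engineering work is mostly in getting fresh labels and features to it quickly.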

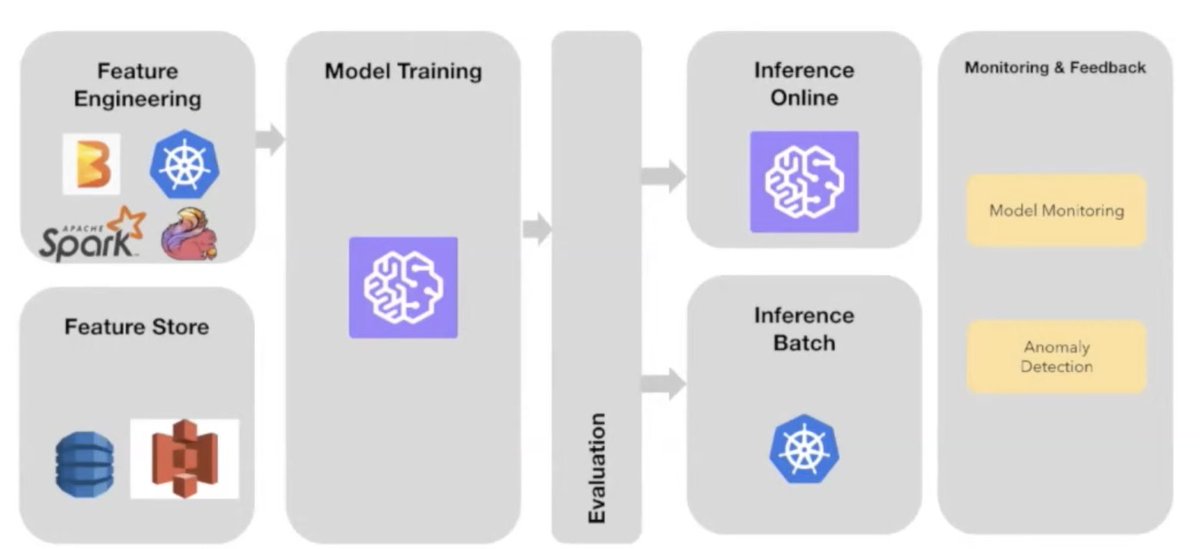

7/ @DoorDashEng on tactics to scale an ML platform:

- Isolate use cases whenever possible

- Reserve time to work on scaling up rather than scaling out

- Write down infrastructure dependencies and their implications

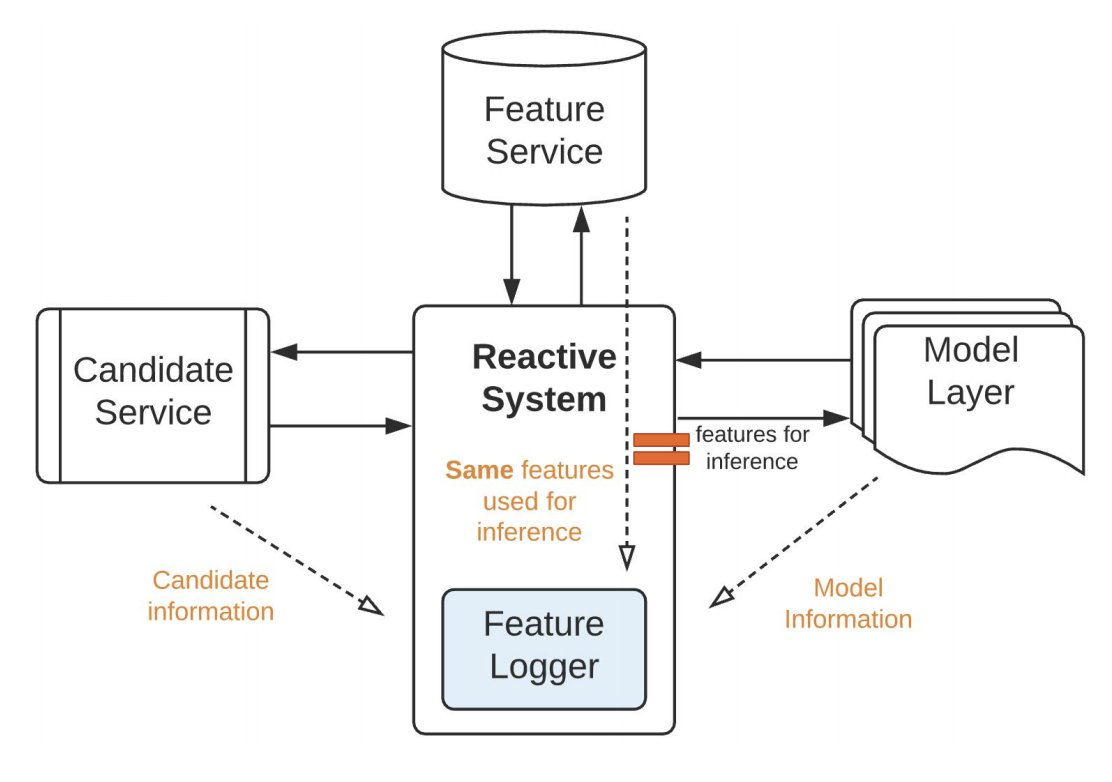

8/ @codeascraft on developing a real-time ML pipeline:

- Use real-time feature logging to capture in-session/trending activities

- Build a typed unified feature store to share features across models from many domains

- Serve features at scale to power in-house reactive systems
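
Here is one way to picture "typed" features shared across models. Each feature is registered once with a name and a declared type, and values are validated on write, so every consuming model sees the same contract. The sketch pairs that with a sliding-window counter for an in-session signal. `FeatureSpec`, `FeatureRegistry`, `SessionCounter`, and the 30-minute window are hypothetical, not Etsy's implementation.

```python
from collections import deque
from dataclasses import dataclass
from typing import Any, Deque, Dict


@dataclass(frozen=True)
class FeatureSpec:
    name: str
    dtype: type
    description: str = ""


class FeatureRegistry:
    """Single place where feature names and types are declared and checked."""

    def __init__(self) -> None:
        self._specs: Dict[str, FeatureSpec] = {}
        self._values: Dict[str, Dict[str, Any]] = {}

    def register(self, spec: FeatureSpec) -> None:
        if spec.name in self._specs:
            raise ValueError(f"feature {spec.name!r} already registered")
        self._specs[spec.name] = spec

    def write(self, entity_id: str, name: str, value: Any) -> None:
        spec = self._specs[name]  # KeyError for unregistered features
        # Note: isinstance(True, int) is True, so bools pass an int check.
        if not isinstance(value, spec.dtype):
            raise TypeError(f"{name} expects {spec.dtype.__name__}, got {type(value).__name__}")
        self._values.setdefault(entity_id, {})[name] = value

    def read(self, entity_id: str, name: str) -> Any:
        return self._values.get(entity_id, {}).get(name)


class SessionCounter:
    """Counts events in a trailing time window (e.g. views in the current session)."""

    def __init__(self, window_seconds: float) -> None:
        self.window = window_seconds
        self._times: Deque[float] = deque()

    def log(self, ts: float) -> int:
        self._times.append(ts)
        while self._times and self._times[0] <= ts - self.window:
            self._times.popleft()  # drop events that fell out of the window
        return len(self._times)


registry = FeatureRegistry()
registry.register(FeatureSpec("session_views_30m", int, "listing views in the last 30 minutes"))
views = SessionCounter(window_seconds=30 * 60)
for ts in (0, 60, 120, 2000):  # event timestamps in seconds
    registry.write("user_42", "session_views_30m", views.log(ts))
print(registry.read("user_42", "session_views_30m"))  # 1
```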

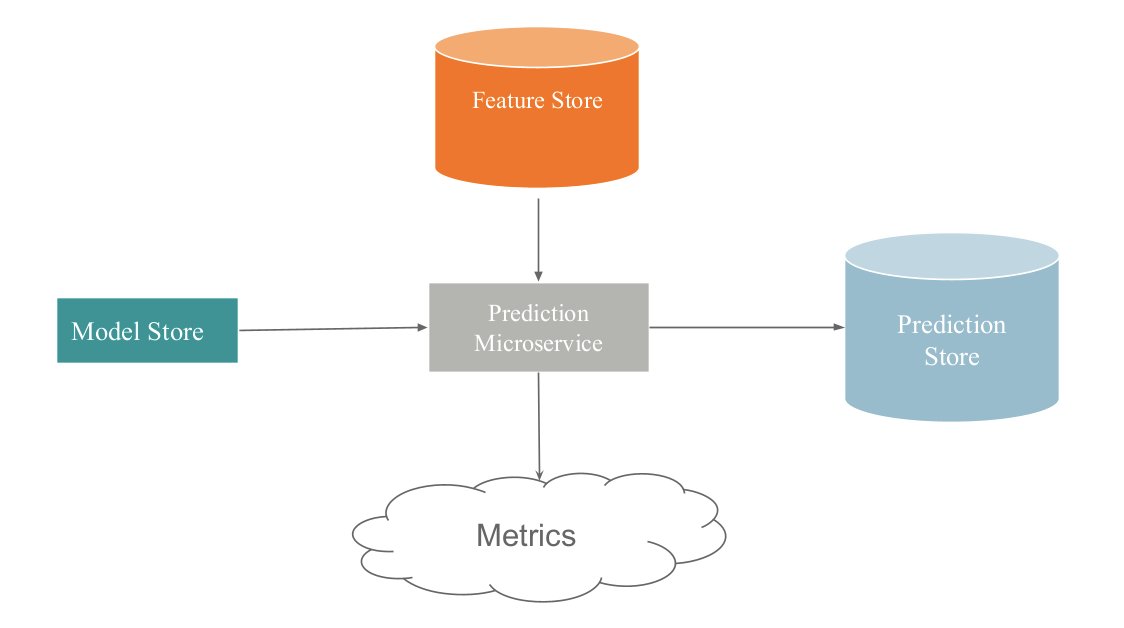

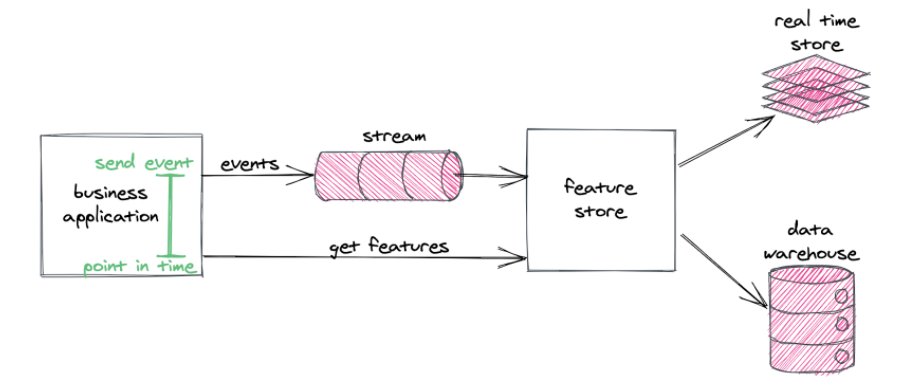

9/ @IntuitDev on building a real-time personalization pipeline:

- A featurization pipeline that computes features from streaming data and writes them to online storage for inference

- A model inference pipeline for deployment and hosting, feature fetching from the feature store, and model monitoring
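
The split between the two pipelines can be sketched as two functions that share only the online store. The featurization side folds streaming events into per-user aggregates. The inference side fetches those features, scores them, and records the prediction for monitoring. Everything here (event fields, the `score` rule, the in-memory store and log) is a hypothetical stand-in, not Intuit's system.

```python
from collections import defaultdict
from typing import Dict, Iterable, List

# Shared between the two pipelines: entity_id -> feature dict (stand-in for an online store).
online_store: Dict[str, Dict[str, float]] = defaultdict(dict)
# Predictions kept for model monitoring (stand-in for a monitoring sink).
prediction_log: List[dict] = []


def featurization_pipeline(events: Iterable[dict]) -> None:
    """Consume streaming events and keep running aggregates in the online store."""
    for event in events:
        feats = online_store[event["user_id"]]
        feats["event_count"] = feats.get("event_count", 0.0) + 1
        feats["total_amount"] = feats.get("total_amount", 0.0) + event["amount"]


def score(features: Dict[str, float]) -> float:
    # Hypothetical model: average transaction amount scaled to [0, 1].
    count = features.get("event_count", 0.0)
    avg = features.get("total_amount", 0.0) / count if count else 0.0
    return min(avg / 100.0, 1.0)


def inference_pipeline(user_id: str) -> float:
    """Fetch features from the online store, score, and log the prediction for monitoring."""
    features = dict(online_store.get(user_id, {}))
    prediction = score(features)
    prediction_log.append({"user_id": user_id, "features": features, "prediction": prediction})
    return prediction


featurization_pipeline([
    {"user_id": "u1", "amount": 40.0},
    {"user_id": "u1", "amount": 80.0},
])
print(inference_pipeline("u1"))  # 0.6
```

Because the only contract between the two sides is the store's keys and feature names, each pipeline can be deployed and scaled independently.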

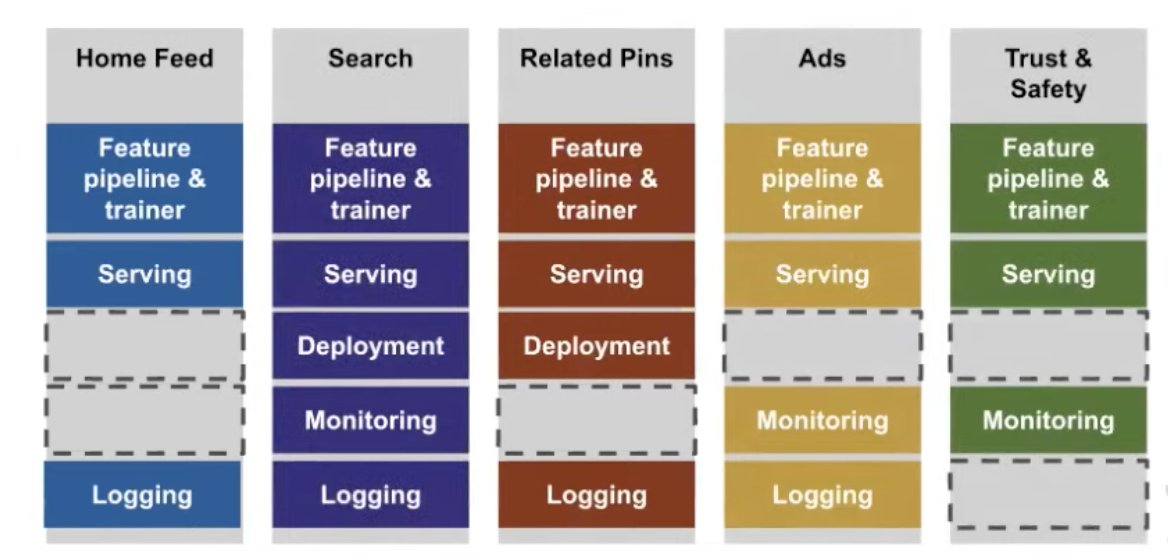

10/ @PinterestEng on the unification of its ML platform:

- Figure out a technical path to establish a foundation for platform standardization and code migration

- Identify bottom-up incentives

- Mix in top-down alignment

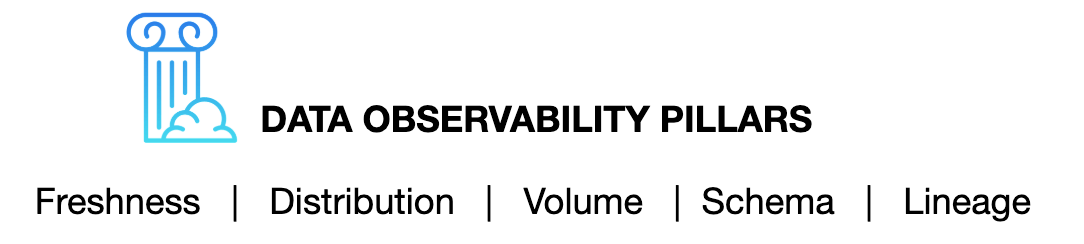

11/ @BM_DataDowntime on #DataObservability in practice:

- Set baseline expectations about your data

- Monitor for anomalies across the five pillars (freshness, distribution, volume, schema, and lineage)

- Collect rich metadata about your most critical data assets

- Map lineage between upstream and downstream dependencies
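
As a rough illustration of "set baselines, then monitor for anomalies", here are a volume check and a freshness check against a table's history. The z-score threshold of 3, the 24-hour freshness limit, and the function names are assumptions for the sketch, not a prescribed method.

```python
import statistics
from datetime import datetime, timedelta
from typing import List


def volume_anomaly(history: List[int], today: int, z_threshold: float = 3.0) -> bool:
    """Flag today's row count if it is more than z_threshold std devs from the baseline."""
    mean = statistics.mean(history)
    stdev = statistics.stdev(history)  # sample std dev; needs at least two points
    if stdev == 0:
        return today != mean
    return abs(today - mean) / stdev > z_threshold


def is_stale(last_updated: datetime, now: datetime, max_age: timedelta = timedelta(hours=24)) -> bool:
    """Freshness check: has the table gone longer than max_age without an update?"""
    return now - last_updated > max_age


daily_rows = [1000, 1020, 990, 1010, 1005, 995, 1015]  # baseline: last 7 days
print(volume_anomaly(daily_rows, today=1008))  # False
print(volume_anomaly(daily_rows, today=400))   # True
print(is_stale(datetime(2021, 4, 20, 6), now=datetime(2021, 4, 21, 12)))  # True
```

Schema and distribution checks follow the same shape: record what "normal" looks like, then compare each new load against it.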

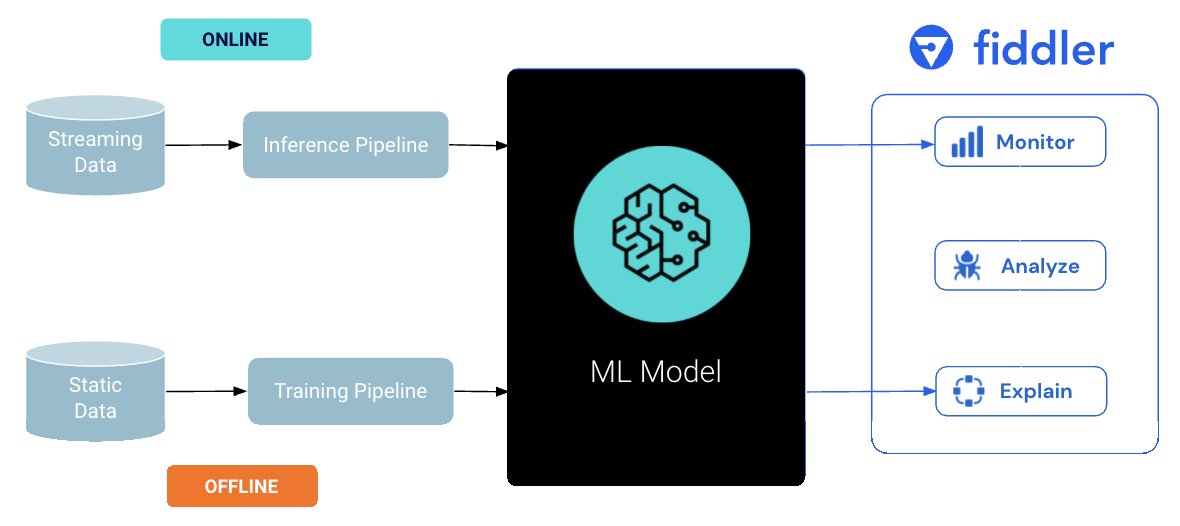

12/ @krishnagade on centralized model performance management:

Model performance management (MPM) reduces the operational risks of AI/ML by addressing performance degradation, inadvertent bias, data quality and other undetected issues, alternative performance indicators, and, most importantly, black-box models.
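
Performance degradation is often caught before labels arrive by watching for input drift. One common metric is the population stability index, PSI = Σ (aᵢ − eᵢ) · ln(aᵢ / eᵢ), computed over binned proportions. The sketch below implements it. The bins, the epsilon guard, and the 0.2 alert threshold (a widely used rule of thumb) are assumptions, not part of the talk.

```python
import math
from typing import Sequence


def psi(expected: Sequence[float], actual: Sequence[float], eps: float = 1e-6) -> float:
    """Population stability index between two binned distributions (proportions summing to 1)."""
    if len(expected) != len(actual):
        raise ValueError("distributions must have the same number of bins")
    total = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)  # avoid log(0) and division by zero for empty bins
        total += (a - e) * math.log(a / e)
    return total


training = [0.25, 0.25, 0.25, 0.25]    # feature distribution at training time
production = [0.10, 0.20, 0.30, 0.40]  # same feature, observed in production
score = psi(training, production)
print(round(score, 3))  # 0.228
if score > 0.2:
    print("significant drift: investigate before trusting model performance")
```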

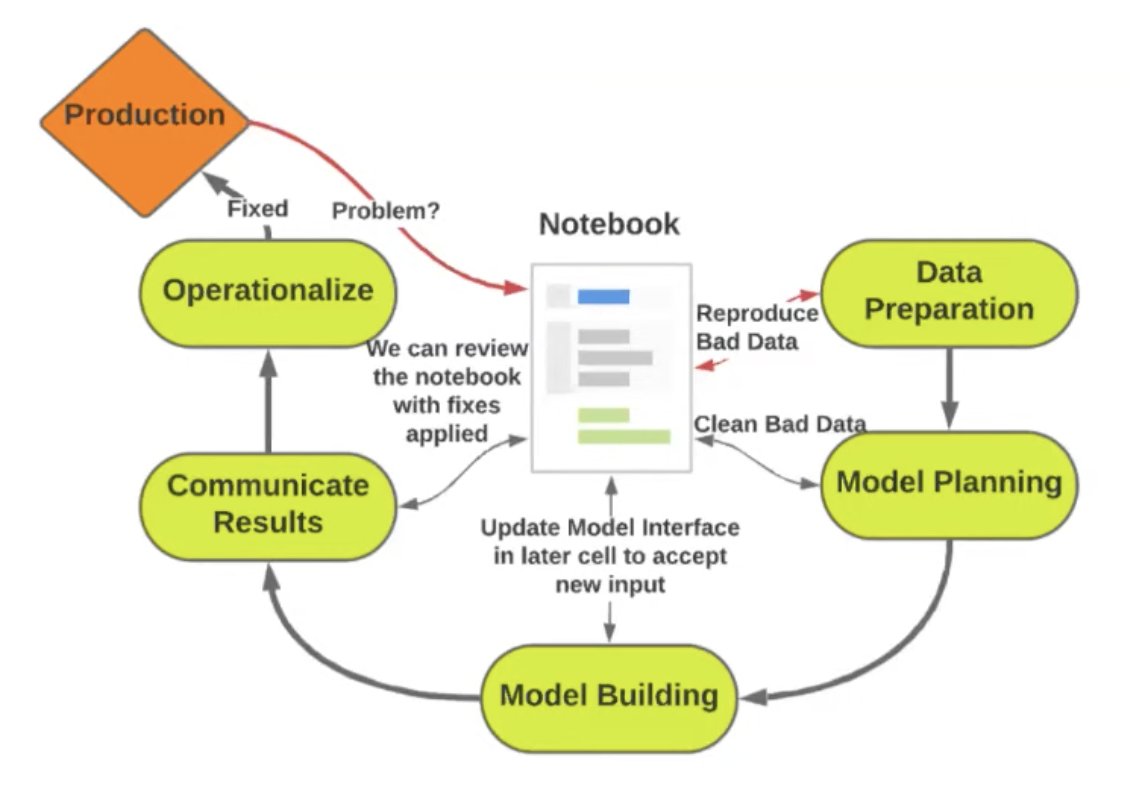

13/ @MichelleUfford on productionalizing data projects:

In the modern data lifecycle, the friction of moving between steps has become a bottleneck. A unifying tool (like notebooks) serves as a common interface, ensuring that what runs in prod closely matches what ran in dev.

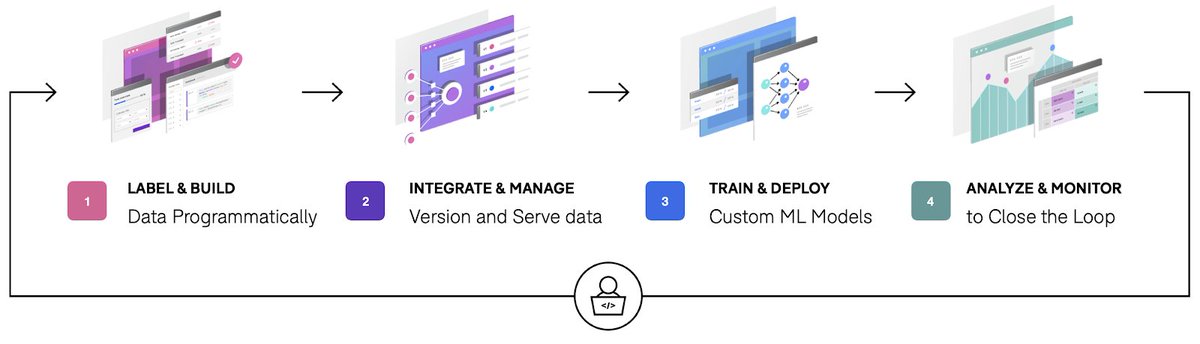

14/ @ajratner on programmatic labeling:

Rather than labeling data by hand, programmatic labeling lets subject matter experts (users with domain knowledge and a clear understanding of the problem) label thousands of data points in minutes by writing labeling functions.
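
Here is a minimal sketch of the idea. Experts encode heuristics as labeling functions that vote on each example or abstain, and the votes are combined into a label. Real systems such as Snorkel learn how much to trust each function with a label model. The plain majority vote below is a deliberately simpler stand-in, and the spam heuristics are hypothetical.

```python
from collections import Counter
from typing import Callable, List, Optional

ABSTAIN, HAM, SPAM = -1, 0, 1


def lf_contains_link(text: str) -> int:
    return SPAM if "http://" in text or "https://" in text else ABSTAIN


def lf_mentions_prize(text: str) -> int:
    return SPAM if "prize" in text.lower() else ABSTAIN


def lf_short_reply(text: str) -> int:
    return HAM if len(text.split()) <= 4 else ABSTAIN


LABELING_FUNCTIONS: List[Callable[[str], int]] = [lf_contains_link, lf_mentions_prize, lf_short_reply]


def majority_label(text: str) -> Optional[int]:
    """Combine non-abstaining votes; return None when no function fires or the vote ties."""
    votes = Counter(v for v in (lf(text) for lf in LABELING_FUNCTIONS) if v != ABSTAIN)
    if not votes:
        return None
    (top, top_n), *rest = votes.most_common()
    if rest and rest[0][1] == top_n:
        return None
    return top


unlabeled = [
    "Claim your prize now at https://example.com",
    "thanks, see you soon",
    "The quarterly report is attached for review by the team",
]
print([majority_label(t) for t in unlabeled])  # [1, 0, None]
```

Each function is a few lines of domain knowledge, yet it labels every example it matches. This is where the "thousands of data points in minutes" leverage comes from.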

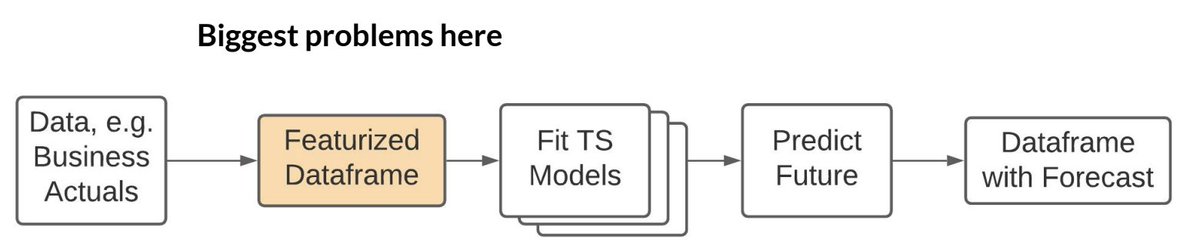

15/ @stefkrawczyk on creating a micro-framework to solve a featurization pain point at @stitchfix_algo:

- Unit testing is standardized

- Documentation is easy and natural

- Visualization is effortless with a DAG

- Debugging is simpler

- Data scientists can focus on what matters
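
The core trick behind this style of micro-framework fits in a few lines. Each feature is a plain function whose parameter names refer to other features or inputs, so the functions themselves define a DAG that can be resolved, unit tested, and visualized. This is a hypothetical illustration of the idea, not the Stitch Fix framework's API. `compute`, `dependencies`, and the feature names are made up.

```python
import inspect
from typing import Any, Callable, Dict, List


# Each function is one feature; its parameter names declare its dependencies.
def spend_per_order(total_spend: float, order_count: int) -> float:
    """Average spend per order."""
    return total_spend / order_count if order_count else 0.0


def is_high_value(spend_per_order: float) -> bool:
    """Flag clients whose average order exceeds a hypothetical threshold of 100."""
    return spend_per_order > 100.0


FEATURES: Dict[str, Callable[..., Any]] = {f.__name__: f for f in (spend_per_order, is_high_value)}


def dependencies(name: str) -> List[str]:
    """DAG edges come straight from the function signature."""
    return list(inspect.signature(FEATURES[name]).parameters)


def compute(outputs: List[str], inputs: Dict[str, Any]) -> Dict[str, Any]:
    """Resolve requested features depth-first with memoization (assumes the graph is acyclic)."""
    cache: Dict[str, Any] = dict(inputs)

    def resolve(name: str) -> Any:
        if name not in cache:
            if name not in FEATURES:
                raise KeyError(f"no input or feature named {name!r}")
            args = {dep: resolve(dep) for dep in dependencies(name)}
            cache[name] = FEATURES[name](**args)
        return cache[name]

    return {name: resolve(name) for name in outputs}


print(compute(["spend_per_order", "is_high_value"], {"total_spend": 450.0, "order_count": 3}))
# {'spend_per_order': 150.0, 'is_high_value': True}
```

Because each feature is an ordinary function, `is_high_value(150.0)` can be unit tested directly. Its docstring doubles as documentation, and `dependencies()` yields the edges needed to draw the DAG.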

16/ @orrshilon on using point-in-time correctness for online inference:

- Monitor real-time feature service-level indicators

- Communicate internally with the platform team and externally with stakeholders

- Create guarantees for legacy data backfills by manually testing and backfilling per point in time
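
The point-in-time idea behind these steps works as follows. When building training rows or backfilling, each label should see only the feature value that was known at the label's timestamp, never a later one. That way, training matches what online inference actually saw. Here is a minimal stdlib sketch of such an as-of lookup with hypothetical data. Production systems do this as a point-in-time join inside the feature store or warehouse.

```python
import bisect
from datetime import datetime
from typing import Dict, List, Optional, Tuple

# Feature history per entity: (timestamp, value) pairs sorted by timestamp.
FeatureHistory = Dict[str, List[Tuple[datetime, float]]]


def as_of(history: FeatureHistory, entity_id: str, ts: datetime) -> Optional[float]:
    """Return the latest feature value with timestamp <= ts (no leakage from the future)."""
    rows = history.get(entity_id, [])
    times = [t for t, _ in rows]
    i = bisect.bisect_right(times, ts)
    return rows[i - 1][1] if i else None


def point_in_time_join(
    labels: List[Tuple[str, datetime, int]], history: FeatureHistory
) -> List[Tuple[str, datetime, Optional[float], int]]:
    """Attach to each (entity, event_time, label) the feature value known at event_time."""
    return [(entity, ts, as_of(history, entity, ts), label) for entity, ts, label in labels]


history: FeatureHistory = {
    "acct_1": [(datetime(2021, 4, 1), 0.2), (datetime(2021, 4, 10), 0.9)],
}
labels = [
    ("acct_1", datetime(2021, 3, 30), 0),  # before any feature value existed
    ("acct_1", datetime(2021, 4, 5), 0),   # should see 0.2, not the later 0.9
    ("acct_1", datetime(2021, 4, 10), 1),  # a value written at the same instant is visible
]
for row in point_in_time_join(labels, history):
    print(row[2])
```

A naive join on entity alone would give the April 5 row the value 0.9. That is exactly the kind of future leakage that makes offline metrics look better than online behavior.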

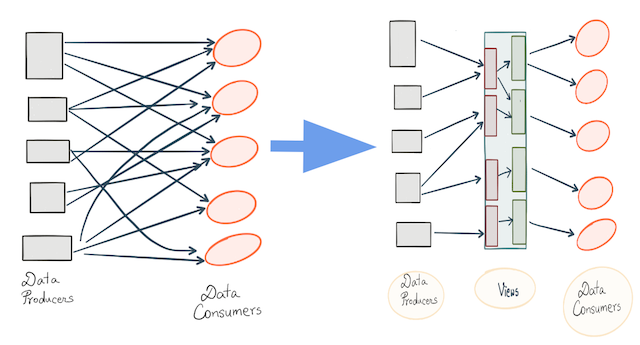

17/ @cwsteinbach on expressing datasets as code:

- Enables seamless movement between physical and virtual datasets

- Reuses code

- Manages dependencies

- Stays format- and storage-agnostic

- Decouples data producers from data consumers
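
One way to read "datasets as code" is this: a dataset is a named definition whose consumers ask for it by name. Whether it is computed on read (virtual) or materialized (physical) is a switch on the producer side. Here is a hypothetical sketch of that decoupling. `Dataset`, `Catalog`, and the example tables are made up for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional

Rows = List[dict]


@dataclass
class Dataset:
    name: str
    build: Callable[["Catalog"], Rows]  # logic expressed as code; may read other datasets
    materialized: Optional[Rows] = None  # None means virtual: computed on read


@dataclass
class Catalog:
    datasets: Dict[str, Dataset] = field(default_factory=dict)

    def define(self, ds: Dataset) -> None:
        self.datasets[ds.name] = ds

    def read(self, name: str) -> Rows:
        """Consumers read by name; they do not know whether the dataset is virtual or physical."""
        ds = self.datasets[name]
        return ds.materialized if ds.materialized is not None else ds.build(self)

    def materialize(self, name: str) -> None:
        """Producer-side switch from virtual to physical; consumer code is unchanged."""
        ds = self.datasets[name]
        ds.materialized = ds.build(self)


catalog = Catalog()
catalog.define(Dataset("page_views", lambda c: [{"member": "a", "views": 3}, {"member": "b", "views": 0}]))
# Dependencies are expressed in code: active_members reads page_views through the catalog.
catalog.define(Dataset("active_members", lambda c: [r for r in c.read("page_views") if r["views"] > 0]))

print(catalog.read("active_members"))  # virtual: computed from page_views on read
catalog.materialize("active_members")
print(catalog.read("active_members"))  # physical: served from the stored copy
```

The sketch skips refreshing materialized copies and storage formats. Those are the parts a real system handles behind the same `read` interface.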

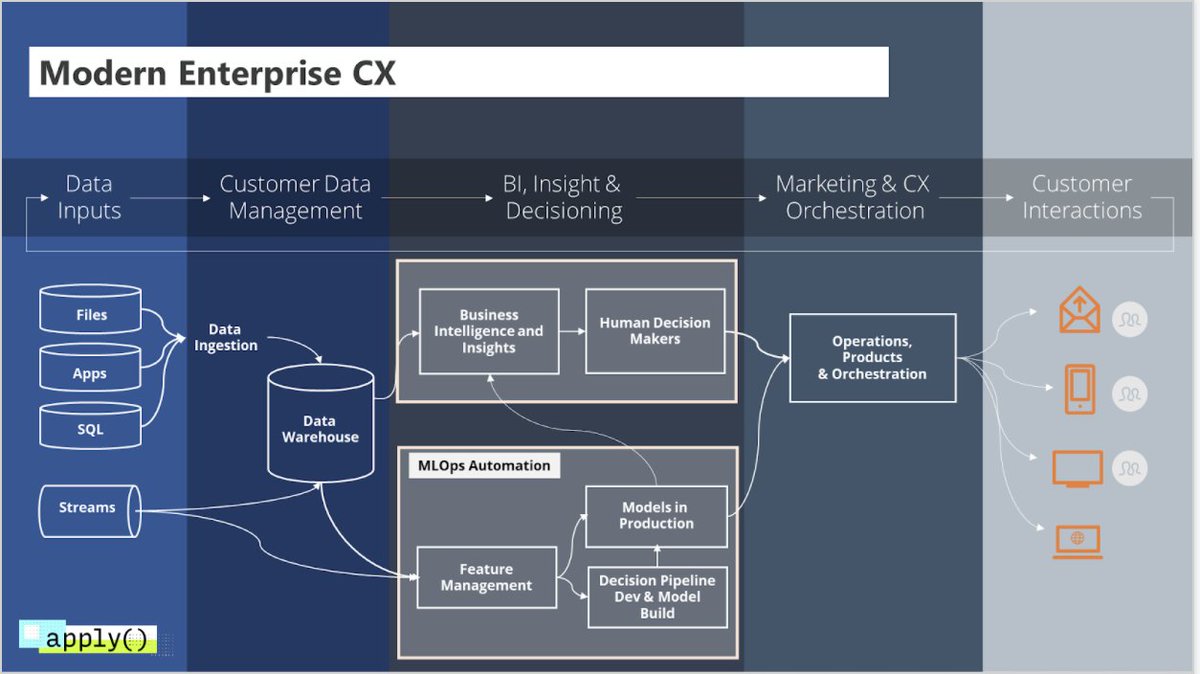

18/ @DeloitteDigital on developing a CX platform:

- Enforce harmony between technology/techniques/talent

- Be seamless across the end-to-end pipeline, from data to engagement

- Use AI that is integrated, powerful, and designed for marketers

- Empower analytics with built-in insights and measurement

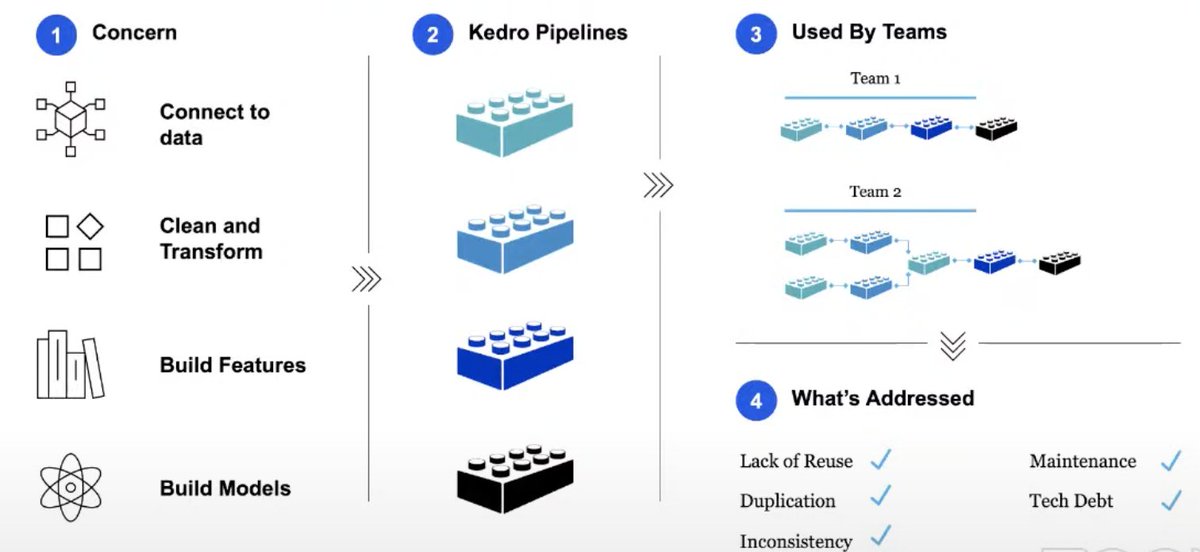

19/ @quantumblack on reusable ML:

Kedro sets a foundation for creating repeatable data science (DS) code, eases the transition from dev to prod, and applies software engineering (SE) concepts to DS code. It is the scaffolding that helps us develop data/ML pipelines that can be deployed.
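
The scaffolding idea can be sketched without Kedro itself. Pipeline steps are pure functions wired together only through named datasets in a catalog, so the same code can run in dev and prod against a different catalog. This is a hypothetical stdlib illustration of that pattern, not Kedro's API; Kedro provides its own `node`, `Pipeline`, and `DataCatalog` abstractions. `Step`, `run`, and the dataset names are made up.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List


@dataclass
class Step:
    func: Callable[..., Any]
    inputs: List[str]  # catalog entries the step reads
    output: str        # catalog entry the step writes


def run(steps: List[Step], catalog: Dict[str, Any]) -> Dict[str, Any]:
    """Run steps in dependency order, resolved from their declared inputs and outputs."""
    catalog = dict(catalog)  # don't mutate the caller's catalog
    pending = list(steps)
    while pending:
        ready = [s for s in pending if all(name in catalog for name in s.inputs)]
        if not ready:
            missing = {n for s in pending for n in s.inputs if n not in catalog}
            raise RuntimeError(f"unsatisfiable inputs: {sorted(missing)}")
        for step in ready:
            catalog[step.output] = step.func(*(catalog[name] for name in step.inputs))
            pending.remove(step)
    return catalog


def clean(raw: List[float]) -> List[float]:
    return [x for x in raw if x >= 0]


def mean(values: List[float]) -> float:
    return sum(values) / len(values)


# Steps may be listed in any order; the runner works out that clean must run first.
pipeline_steps = [Step(mean, ["clean_data"], "avg"), Step(clean, ["raw_data"], "clean_data")]
result = run(pipeline_steps, {"raw_data": [4.0, -1.0, 2.0]})
print(result["avg"])  # 3.0
```

Since `clean` and `mean` never touch files or connections, they can be unit tested in isolation. Moving to prod means changing what the catalog points at, not the functions.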

20/ If you are a practitioner, investor, or operator excited about best practices for development patterns, tooling, and emerging architectures for building and managing production ML applications, please reach out to trade notes and tell me more! 🙏

DM is open 📩