People have been making the (valid) point that climate variability still exists even after we reach net-zero carbon emissions. This can also be turned around: how close to net-zero do you need to get to no longer be able to detect temperature trends?

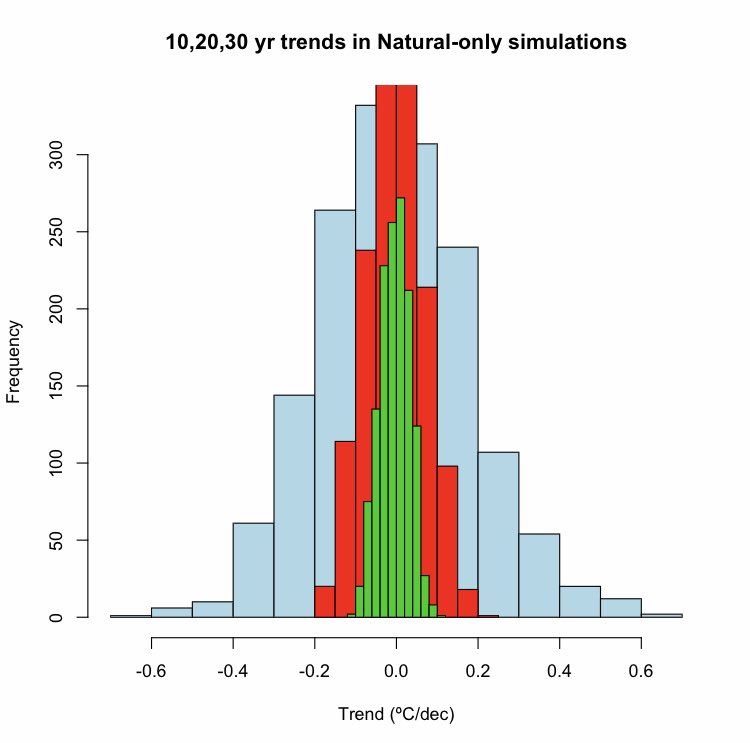

An estimate of natural variability over different periods can be derived from natural-forcing-only historical runs (which include volcanoes, solar etc). The 95% confidence intervals on the spread of trends are 0.35, 0.14 and 0.07°C/dec for 10, 20 and 30 yr trends respectively.

Roughly, the current rate of temperature rise (~0.2°C/dec) corresponds to 10 GtC/yr of emissions. This is easily detectable in 20 yr trends (but not over a 10 yr period). But if we reduce emissions by 90%, the expected trend would be ~0.02°C/dec, which is not detectable even over 30 yrs!
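To make the arithmetic concrete, here is a minimal sketch using only the numbers quoted above. It rests on two assumptions that are mine rather than anything derived here: the forced trend scales linearly with emissions, and a trend counts as "detectable" when it exceeds the quoted 95% natural-variability figure for that period. The function names are purely illustrative.

```python
# Figures quoted in the thread.
CURRENT_EMISSIONS_GTC = 10.0   # GtC/yr
CURRENT_TREND = 0.2            # degC/decade
# 95% spread of naturally forced trends (degC/decade), keyed by trend length in years.
NATURAL_SPREAD = {10: 0.35, 20: 0.14, 30: 0.07}


def expected_trend(emissions_gtc):
    """Forced trend in degC/decade, ASSUMING linear scaling with emissions."""
    return CURRENT_TREND * emissions_gtc / CURRENT_EMISSIONS_GTC


def detectable_periods(emissions_gtc):
    """Trend lengths (years) for which the expected trend exceeds the natural spread."""
    trend = expected_trend(emissions_gtc)
    return [years for years, spread in sorted(NATURAL_SPREAD.items()) if trend > spread]


def threshold_emissions(years):
    """Emissions (GtC/yr) at which the expected trend equals the natural spread."""
    return NATURAL_SPREAD[years] / CURRENT_TREND * CURRENT_EMISSIONS_GTC


if __name__ == "__main__":
    for emissions in (10.0, 5.0, 1.0):
        print(emissions, "GtC/yr ->", round(expected_trend(emissions), 3),
              "degC/dec, detectable over:", detectable_periods(emissions))
    for years in sorted(NATURAL_SPREAD):
        print(years, "yr threshold:", round(threshold_emissions(years), 2), "GtC/yr")
```

Under these assumptions, the 30 yr trend stops being detectable once emissions fall below roughly a third of today's rate. That is only a back-of-the-envelope bound, since non-CO2 forcings and the carbon cycle response are ignored.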

Note that because of natural variability and basic accounting uncertainty in the carbon cycle, we will not know exactly how close we are to net-zero (is accuracy within 0.5 GtC/yr achievable?). The annual variability in the atmospheric CO2 concentration is ~0.3 ppm.
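For a sense of scale, the ppm figure can be put in the same units as the emissions accuracy. This sketch assumes the standard conversion of about 2.124 GtC per ppm of atmospheric CO2, which is not stated in the thread. The result is a change in the atmospheric carbon burden, not an emissions number, so the comparison is only indicative.

```python
# ASSUMPTION: standard conversion factor, ~2.124 GtC of carbon per ppm of atmospheric CO2.
GTC_PER_PPM = 2.124


def ppm_to_gtc(ppm):
    """Convert a change in atmospheric CO2 concentration (ppm) to carbon mass (GtC)."""
    return ppm * GTC_PER_PPM


if __name__ == "__main__":
    annual_variability_ppm = 0.3  # figure quoted in the thread
    print(round(ppm_to_gtc(annual_variability_ppm), 2), "GtC")
```

So the year-to-year noise in atmospheric CO2 is about the same size as the 0.5 GtC/yr accuracy being asked about.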

I don’t have a fully worked answer to the original question, but the point is that once the bulk of emission cuts are achieved, the small residuals, the non-CO2 forcings and inherent natural variability will all be much more important for decadal temperature trends.

I can easily foresee a great deal of scientific debate about the relative importance of all these (uncertain) terms that won’t be that relevant to anything practical. To be clear, that is a much better situation than the one we have today!
