I've found the root cause of the problem in @what3words and can now demonstrate it.

Let's pick a square in a UK urban area.

///could.fines.tolls https://what3words.com/could.fines.tolls

With the patent and API, we can now determine the intermediate figures:

i, j, k (the indices into the word list):

655, 1505, 1660

m (the integer form of i, j, k, obtained by scrambling n):

4580900986

n (the unique sequential number that identifies a cell):

404906746

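A minimal Python sketch of how i, j, k could map to m, assuming a "cube shell" ordering: every triple whose largest index is below l comes first, then the shell whose largest index is exactly l, split into three regions (i largest, then j largest, then k largest). With k = 1660 as the largest index, this reproduces 655, 1505, 1660 to 4580900986 exactly. The layouts inside the i-largest and j-largest regions are assumptions that this one example does not pin down, and the function names are hypothetical.

```python
def encode(i: int, j: int, k: int) -> int:
    """Map a word-index triple (i, j, k) to m, assuming a cube-shell ordering."""
    l = max(i, j, k)
    m = l ** 3  # every triple whose largest index is below l comes first
    if i == l:
        # Region 1 (assumed layout): i is largest, j and k each run 0..l
        return m + j * (l + 1) + k
    m += (l + 1) ** 2
    if j == l:
        # Region 2 (assumed layout): j is largest, i < l, k runs 0..l
        return m + i * (l + 1) + k
    m += l * (l + 1)
    # Region 3: k is largest, i < l and j < l (the could.fines.tolls case)
    return m + i * l + j


def icbrt(x: int) -> int:
    """Largest integer l with l**3 <= x (float estimate, then corrected)."""
    l = round(x ** (1 / 3))
    while l ** 3 > x:
        l -= 1
    while (l + 1) ** 3 <= x:
        l += 1
    return l


def decode(m: int) -> tuple[int, int, int]:
    """Inverse of encode(): recover (i, j, k) from m."""
    l = icbrt(m)
    r = m - l ** 3
    if r < (l + 1) ** 2:
        return l, r // (l + 1), r % (l + 1)
    r -= (l + 1) ** 2
    if r < l * (l + 1):
        return r // (l + 1), l, r % (l + 1)
    r -= l * (l + 1)
    return r // l, r % l, l


print(encode(655, 1505, 1660))  # 4580900986
print(decode(4580900986))       # (655, 1505, 1660)
```

Each shell l holds (l + 1)^3 - l^3 triples, so the ordering is a bijection between [0, 2500)^3 and [0, 2500^3).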
I've analysed the modulo multiplication used to scramble n into m. It displays periodicity.

If you move a fixed number of cells away in n from the cell you are testing, you land in another region where the n-to-m conversions move in step with each other, just slightly apart.

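A sketch of the scrambling step, assuming m = (A × n) mod 2500^3. The multiplier A = 9,401,181,441 is inferred from the figures in this thread, not taken from the patent: going from n = 10001 to 10002 raises m by 9,401,181,441, going from 10000 to 10001 lowers it by 6,223,818,559, and those two steps only agree modulo 15,625,000,000, which is 2500^3. The same A maps 404906746 to 4580900986. Because the step is linear, m(n + D) - m(n) is A × D mod 2500^3 whatever n is, which is the periodicity described above.

```python
WORDS = 2500               # size of the short urban word list
M = WORDS ** 3             # 15,625,000,000 possible triples
A = 9_401_181_441          # multiplier inferred from this thread (hypothetical)


def scramble(n: int) -> int:
    """Assumed n -> m step: modular multiplication by A."""
    return (A * n) % M


print(scramble(404_906_746))  # 4580900986

# The m-shift between two cells D apart in n is A*D mod M, independent of n.
D = 4_159_793
print((A * D) % M)  # 1713

for n in (10_000, 10_001, 10_002):
    m1, m2 = scramble(n), scramble(n + D)
    print(n, n + D, m1, m2, (m2 - m1) % M)
```

A is odd and not a multiple of 5, so it is invertible modulo 2500^3 and every n gets a distinct m.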
So n=10000 and n=4169793 will have m of:

11814410000

11814411713 (1713 higher)

Which results in the words:

///blaze.custom.nest

///blaze.custom.faster

Two words in common.

n=10001 and n=4169794 (adding 1 to n generally means "move up one cell")

5590591441

5590593154 (1713 higher)

///spend.means.issues

///glass.young.issues

n=10002 and n=4169795

14991772882

14991774595 (1713 higher)

///party.hers.wiping

///party.butter.wiping

Two words in common.

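Decoding both m values of each pair shows which word positions survive the shift. This sketch combines the inferred multiplier with the assumed cube-shell ordering (both hypothetical reconstructions, restated so the block runs on its own) and takes i, j, k as the first, second and third word.

```python
M = 2500 ** 3
A = 9_401_181_441  # multiplier inferred from this thread (hypothetical)
D = 4_159_793      # offset between the two regions being compared


def scramble(n: int) -> int:
    """Assumed n -> m step: modular multiplication by A."""
    return (A * n) % M


def icbrt(x: int) -> int:
    """Largest integer l with l**3 <= x."""
    l = round(x ** (1 / 3))
    while l ** 3 > x:
        l -= 1
    while (l + 1) ** 3 <= x:
        l += 1
    return l


def decode(m: int) -> tuple[int, int, int]:
    """Assumed cube-shell ordering: m -> (i, j, k)."""
    l = icbrt(m)
    r = m - l ** 3
    if r < (l + 1) ** 2:            # i is the largest index
        return l, r // (l + 1), r % (l + 1)
    r -= (l + 1) ** 2
    if r < l * (l + 1):             # j is the largest index
        return r // (l + 1), l, r % (l + 1)
    r -= l * (l + 1)                # k is the largest index
    return r // l, r % l, l


def shared_positions(n1: int, n2: int) -> int:
    """How many of the three word positions two cells have in common."""
    a, b = decode(scramble(n1)), decode(scramble(n2))
    return sum(x == y for x, y in zip(a, b))


for n in (10_000, 10_001, 10_002):
    print(decode(scramble(n)), decode(scramble(n + D)), shared_positions(n, n + D))
# shared positions: 2, 1, 2
```

A shift of 1713 is smaller than the shell size l in each case, so it normally only moves the lowest-order index and leaves the other two words alone. For n=10001 the shift carries over into i, which is why spend.means.issues and glass.young.issues share only one word.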
This also works if you move sideways, to an extent.

The point is, we have a mathematical relationship between two areas where there is a *much* higher chance that two of the words are the same for a given cell.

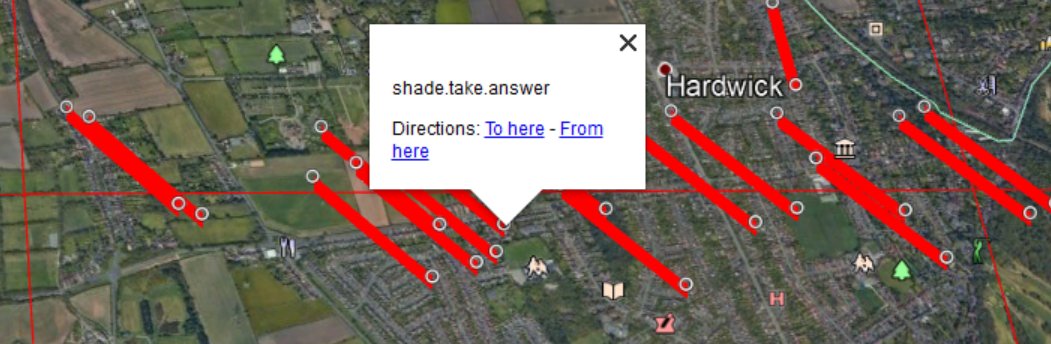

Back to our square:

///could.fines.tolls

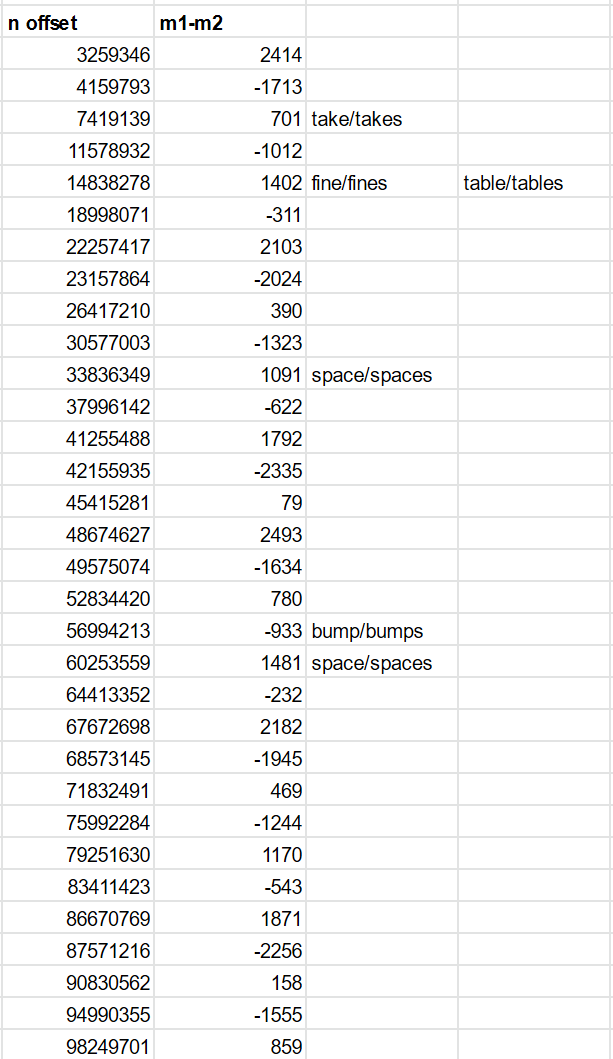

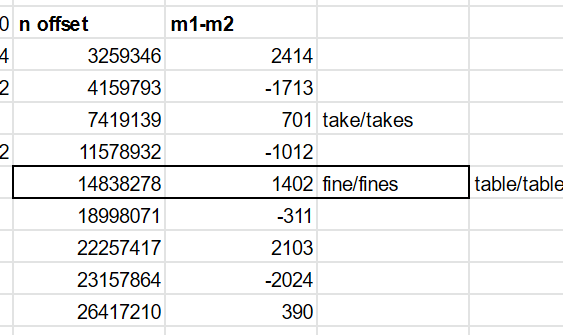

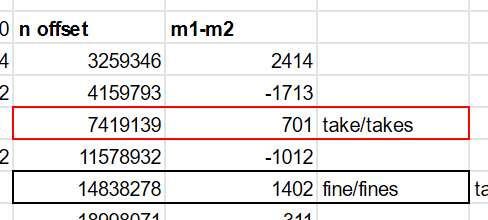

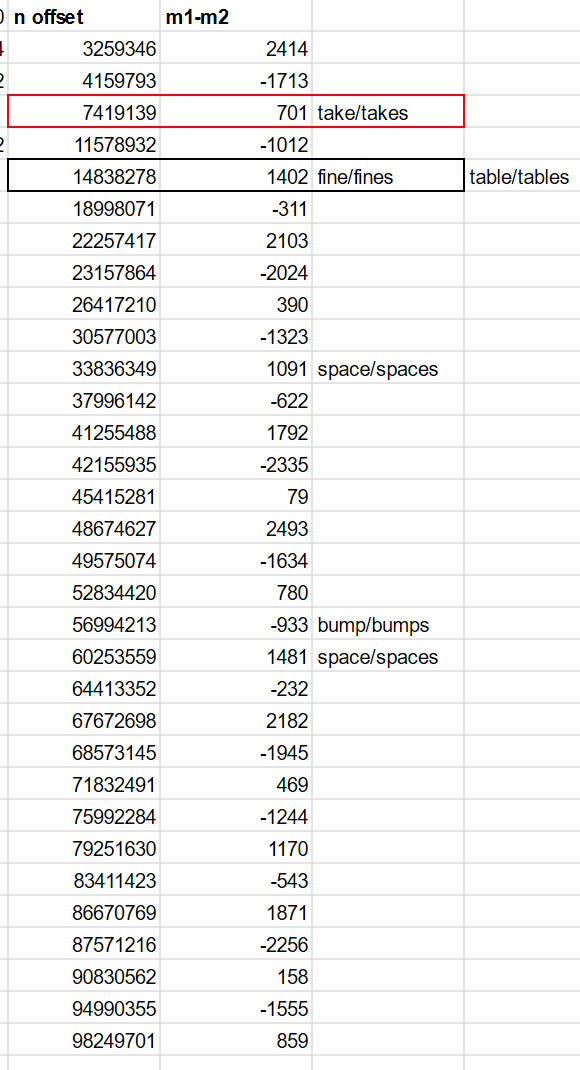

I wrote some Python to look into these area offsets.

You can see in the "n" column that the pairs

could.*.tolls

think.*.tolls

are really common!

You even have a plural pair there with could.fine.tolls.

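A brute-force sketch of one way to hunt for such offsets, assuming the same inferred multiplier A: step d upward, keep A × d mod 2500^3 as a running remainder, and record every d whose shift in m falls within a small window around zero. The function name and window size are illustrative; this is not the script behind the screenshots.

```python
M = 2500 ** 3
A = 9_401_181_441  # multiplier inferred from this thread (hypothetical)


def close_offsets(limit: int, window: int) -> list[tuple[int, int]]:
    """Every offset d in 1..limit-1 whose m-shift (A*d mod M) lies within
    +/- window of zero, returned as (d, signed_shift) pairs."""
    hits = []
    r = 0  # running value of (A * d) % M
    for d in range(1, limit):
        r += A
        if r >= M:
            r -= M
        if r <= window:
            hits.append((d, r))
        elif r >= M - window:
            hits.append((d, r - M))
    return hits


hits = close_offsets(4_200_000, 2_000)
for d, shift in hits:
    print(d, shift)
```

Offsets with a tiny shift are the ones where most cells keep two of their three words, so a short list like this is a natural starting point for the kind of comparison shown in the screenshots.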
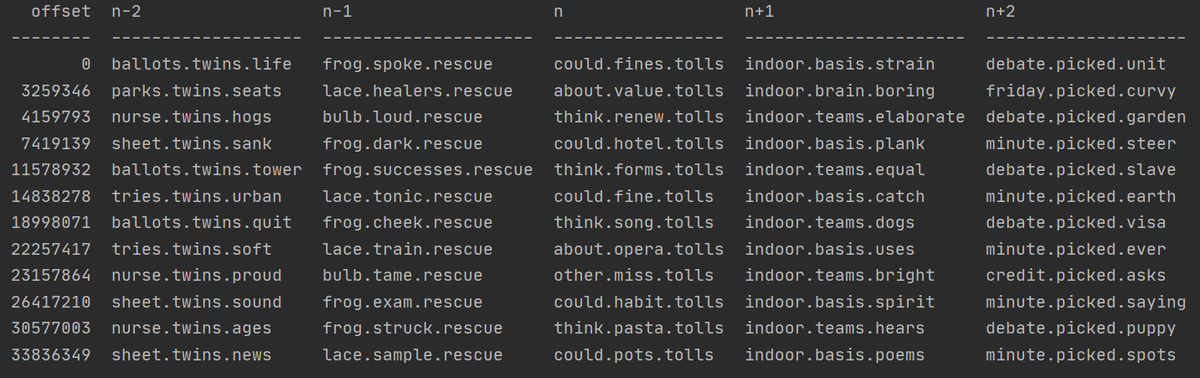

n-2:

parks.twins.*

nurse.twins.*

n-1:

frog.*.rescue

lace.*.rescue

n+1:

indoor.basis.*

indoor.teams.*

n+2:

minute.picked.*

debate.picked.*

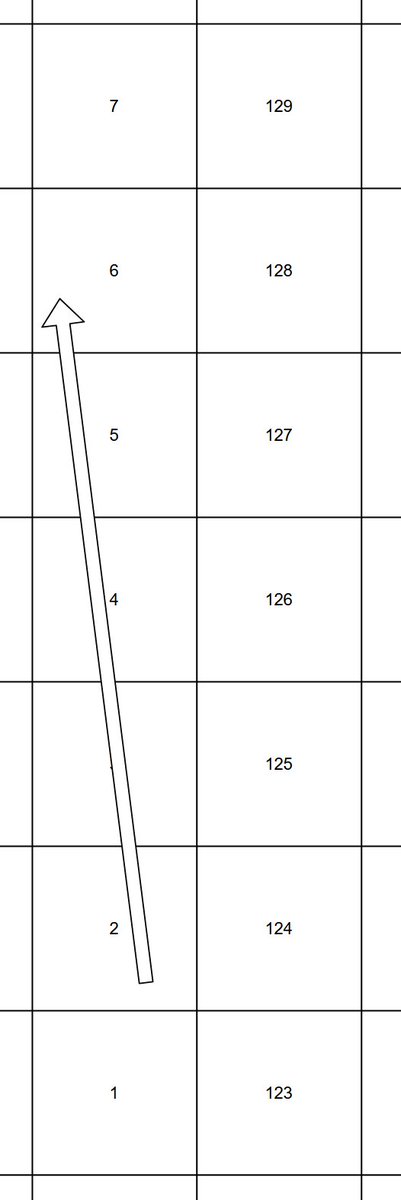

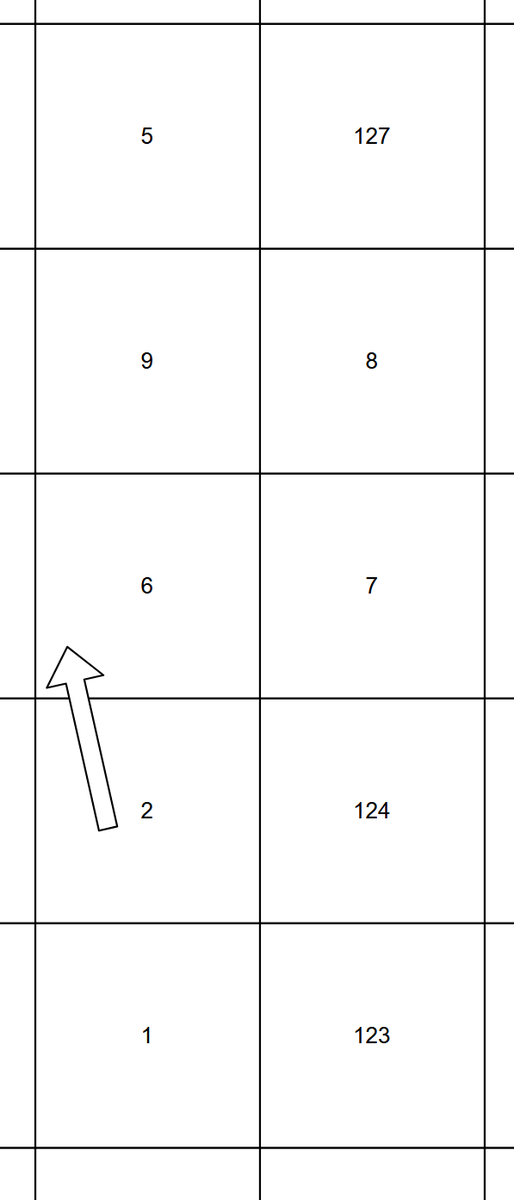

The problem here is that the n offsets at which we see this issue are quite small.

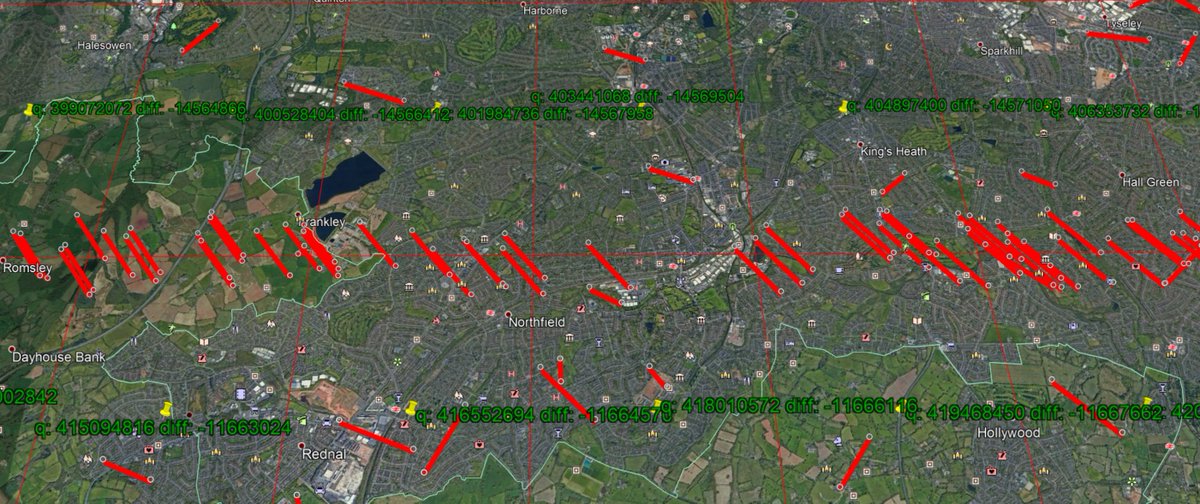

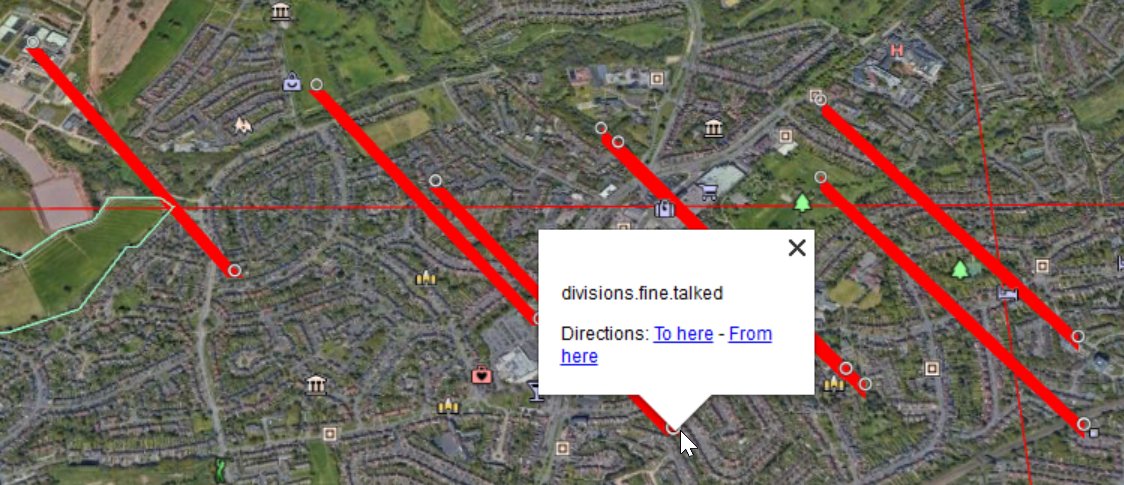

Each large cell holds a certain number of n cells. We start seeing these interesting offsets within three large cells' distance.

There are none under two cells away, though, so why are we seeing far shorter distances?

It's because of q, the lookup table that places lower-numbered cells in higher-population-density areas.

So adjacent cells can have very different values of n.

When this happens, you get cells with these offset areas *very* closely matched.

We can see that the row above the banding has a "q" (the "n" value shown at the lower left) that is approximately 14,560,000 lower than the cell below.

Now look back at the offsets I found, and at the difference between m1 and m2: 1402. That corresponds to the difference between the word pair fine/fines.

And there we go again.

The slight difference in the offsets is explained by the values being at the top of one cell and bottom of another, along with the lateral shift.

I think this demonstrates why the system has these plural pairs. The words being plurals are *pure* chance.

As you can see, I've only identified a few obvious ones. But, as expected, these are the most common middle words which are in closely spaced pairs.

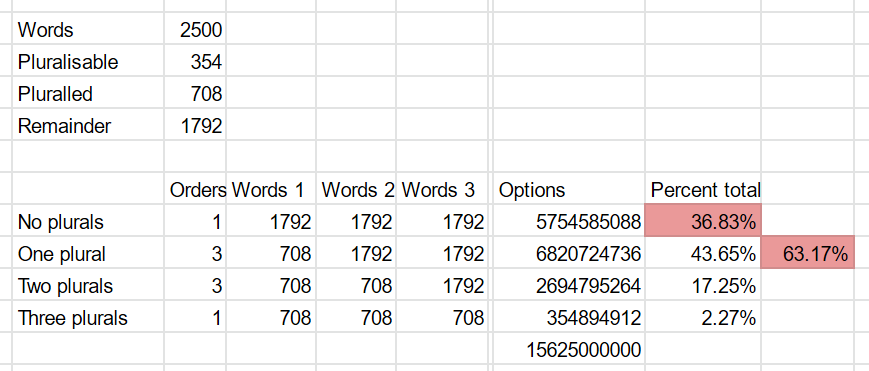

The last piece of the puzzle is the impact of trying to make the system use shorter words in these urban areas.

The dictionary is limited to 2500 words, not the full 40000.

That's clearly going to increase the chance that you hit on the same word in two cells anyway!

But the bigger issue is the impact of the plurals.

There are 354 words out of the 2500 that can have an s added to become another word in that list.

That means 708 words are confusable, leaving only 1792 that aren't.

We do the maths on this, and over 63% of available word combinations contain one of these words that can be confused!
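The 63% figure follows from a short calculation, assuming each of the three words is equally likely to be any of the 2,500 and that the 354 pairs do not overlap (so 2 × 354 = 708 distinct confusable words). An address avoids every confusable word with probability (1792/2500)^3, roughly 0.368.

```python
LIST_SIZE = 2500
PLURAL_PAIRS = 354              # words that become another list word by adding "s"
confusable = 2 * PLURAL_PAIRS   # both members of each pair, assuming no overlaps
safe = LIST_SIZE - confusable   # words with no plural partner in the list

# Probability that all three (independent, uniform) words avoid the confusable set
p_all_safe = (safe / LIST_SIZE) ** 3
p_any_confusable = 1 - p_all_safe
print(f"{p_any_confusable:.1%}")  # 63.2%
```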

This isn't even accounting for the homophones.

And they haven't been removed: once/wants is in the list!

All told, there are many flaws in how this system has been implemented.

It's simply not true that homophones have been removed, or that mistakes result in locations that are far away.

That's been shown experimentally, and now by analysing the algorithm.

I am not sure how this passed any inspection of any depth.

If I hadn't had to work out how it works from the patent, it would have been quicker.

And I'm sure smart crypto/maths people would have been quicker still.

In b4 w3w fanboys say that you'd only find these issues if you had too much time on your hands. https://twitter.com/Silverha1de/status/1384423675307479041