#1. Recurrent Neural Networks: Understanding Long Short-Term Memory (LSTM) Networks {A self-study thread}

#WhoKnowsHowManyDaysOfThreads

Before jumping into the topic's core (the meat and potatoes), let us take a brief look at RNNs.

Human memory is persistent: we never start thinking from scratch, without any basis of experience, when solving a problem, no matter how trivial it may be.

Traditional neural networks lack this persistence, which is considered one of their major shortcomings, and RNNs address this very issue. They act as a memory for the network as it works through the problem at hand, with each decision influenced by the previous ones.

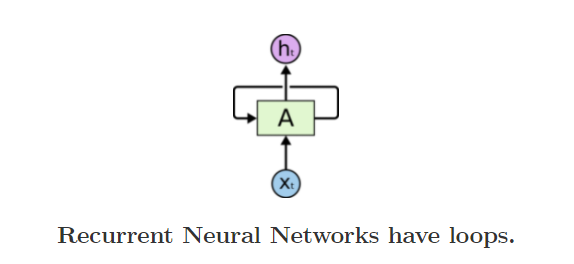

RNNs have loops that allow information to persist.

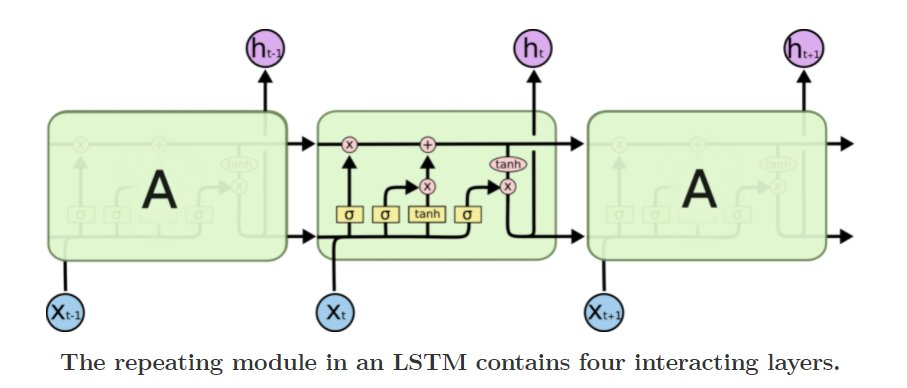

In the diagram below, a chunk of neural network, `A`, looks at some input `x_t` and outputs a value `h_t`. A loop allows information to be passed from one step of the network to the next.

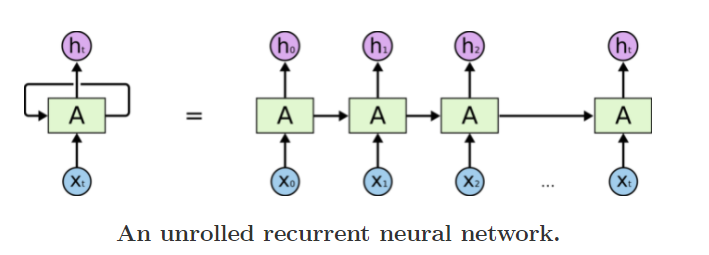

Unrolling this loop shows that an RNN is just multiple copies of the same network, each passing a message (its memory) to its successor. This chain-like structure means RNNs are closely tied to sequential data, which makes them the natural neural network architecture for such data.
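To make the "multiple copies of the same network" idea concrete, here is a minimal plain-Python sketch of a vanilla RNN unrolled over a short sequence. It assumes a scalar input, a scalar hidden state and the common update `h_t = tanh(w_x * x_t + w_h * h_{t-1} + b)`; the weight values are made up for illustration, not trained.

```python
import math

def rnn_unrolled(xs, w_x=0.5, w_h=0.8, b=0.0, h0=0.0):
    """Run the same (hypothetical, untrained) RNN cell over every element of xs.

    The same weights are reused at every step, like the copies of A in the
    unrolled diagram; only the hidden state h is passed forward.
    """
    h = h0
    hs = []
    for x in xs:
        # One pass through the loop: mix the current input with the previous hidden state.
        h = math.tanh(w_x * x + w_h * h + b)
        hs.append(h)
    return hs

hs = rnn_unrolled([1.0, 0.0, 0.0])
print(hs)
```

The input is only non-zero at the first step, yet `h` stays non-zero afterwards: the loop carries that first input forward.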

RNNs have been applied to various problems like speech recognition, image captioning, language modeling, translation, etc. Essential to most of these successes is the use of LSTMs, which are behind almost all of the impressive results achieved with RNNs.

The Problem of Long-Term Dependencies:

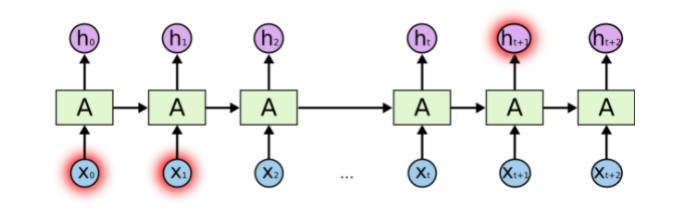

One appeal of RNNs is that they add a memory component to a neural network. It is pretty amazing that an RNN can connect previous information to the present task, but the question is: to what extent?

This is where we start seeing the problem with RNNs.

If the gap between the information we are presently trying to predict and the previous information needed to give context to the prediction is small, RNNs can learn to use the past information.

For example, in "the CLOUDS are in the ___", the blank should be SKY. There is only one subject, "clouds", and we do not need any further context to predict the word. However, in a sentence such as

"I GREW up in FRANCE... I SPEAK FRENCH"

we can see the context changing from GREW to SPEAK, so in order to predict FRENCH, the RNN has to travel all the way back to the previous sentence to get the context of FRANCE. As sentences get longer, the gap between a prediction and the information it depends on can become very large, and as that gap grows, plain RNNs become unable to learn to connect the information. This is the problem of short-term memory.
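One standard explanation for this (it is not spelled out in the thread itself) is the vanishing gradient problem. When an RNN is trained with backpropagation through time, the gradient linking step `t` back to step 0 is a product of one factor per step; for a scalar RNN `h_t = tanh(w_x * x_t + w_h * h_{t-1} + b)` each factor is `w_h * (1 - h_t**2)`. If those factors are below 1, the product shrinks geometrically with the gap. A minimal sketch, assuming an illustrative recurrent weight `w_h = 0.8` and a constant input:

```python
import math

def grad_wrt_initial_state(steps, w_x=0.5, w_h=0.8, b=0.0, x=1.0, h0=0.0):
    """Return d h_steps / d h_0 for a scalar tanh RNN fed a constant input x.

    The weights are hypothetical; the point is how the product of per-step
    factors behaves as the number of steps (the "gap") grows.
    """
    h = h0
    grad = 1.0
    for _ in range(steps):
        h = math.tanh(w_x * x + w_h * h + b)
        # Chain rule: d tanh(u)/du = 1 - tanh(u)^2, and du/dh_prev = w_h.
        grad *= w_h * (1.0 - h * h)
    return grad

for gap in (1, 5, 20, 50):
    print(gap, grad_wrt_initial_state(gap))
```

With these made-up weights every factor is at most 0.8, so the gradient across a 50-step gap is below 0.8**50, on the order of 1e-5, which leaves very little signal for learning the dependency.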

This is the very problem that LSTM networks are designed to handle.

They are capable of learning long-term dependencies thanks to the introduction of the cell state.

Remembering information for a long period of time is basically what LSTMs do.

The Core Idea Behind LSTMs:

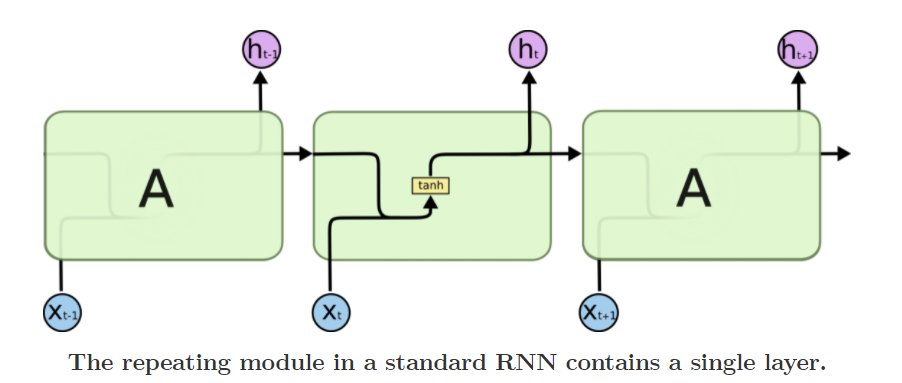

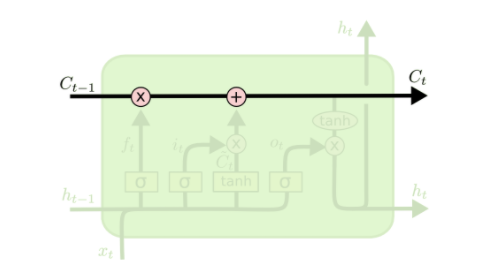

As said earlier, the key to LSTMs is the cell state, which is like a conveyor belt that carries information through the entire network. This is where long-term information is stored and discarded.

LSTMs add or remove information from the cell state through GATES, each made of a sigmoid neural net layer and a pointwise multiplication operation.

The sigmoid layer outputs numbers between 0 and 1 describing how much of each component should be let through to the cell state: 0 means "let nothing through" and 1 means "let everything through".
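A gate, in other words, is just "sigmoid, then multiply". A minimal sketch, assuming hypothetical pre-activation scores `scores` (in an LSTM these would come from a learned layer that looks at the previous output and the current input):

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def apply_gate(scores, values):
    """Squash scores into (0, 1) with a sigmoid, then multiply pointwise with values."""
    gate = [sigmoid(s) for s in scores]
    return [g * v for g, v in zip(gate, values)]

# Large negative score: almost nothing passes. Zero: half passes. Large positive: almost all passes.
out = apply_gate([-10.0, 0.0, 10.0], [2.0, 2.0, 2.0])
print(out)  # roughly [0.0001, 1.0, 1.9999]
```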

STEP-BY-STEP LSTM with the multi-subject example:

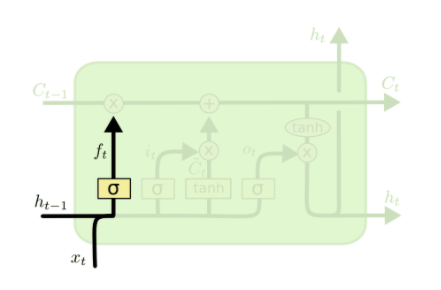

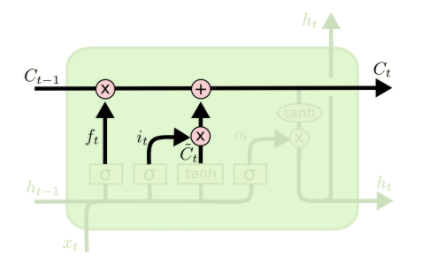

First, we decide what information to throw away from the cell state. This decision is made by a sigmoid layer called the forget gate layer.

It looks at both the present input and the previous output, and outputs a number between 0 and 1 for each number in the cell state: 1 means "keep this entirely" and 0 means "get rid of this entirely".
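In the notation of the colah.github.io post this thread follows, the forget gate is `f_t = sigmoid(W_f . [h_{t-1}, x_t] + b_f)`, where `[h_{t-1}, x_t]` is the previous output concatenated with the current input, and the old cell state is then multiplied element-wise by `f_t`. A minimal plain-Python sketch; the sizes and the weights `W_f`, `b_f` are hypothetical stand-ins for learned parameters:

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forget_gate(W_f, b_f, h_prev, x_t):
    """f_t = sigmoid(W_f . [h_prev, x_t] + b_f): one value in (0, 1) per cell-state entry."""
    concat = h_prev + x_t  # list concatenation, i.e. [h_{t-1}, x_t]
    return [sigmoid(sum(w * v for w, v in zip(row, concat)) + b)
            for row, b in zip(W_f, b_f)]

# Hypothetical sizes: hidden/cell state of 2, input of 1, so each row has 3 weights.
W_f = [[0.0, 0.0, -5.0],   # row 0: a strong input pushes this entry toward "forget"
       [0.0, 0.0, 5.0]]    # row 1: a strong input pushes this entry toward "keep"
b_f = [0.0, 0.0]
f_t = forget_gate(W_f, b_f, h_prev=[0.0, 0.0], x_t=[1.0])

C_prev = [3.0, 3.0]
print([f * c for f, c in zip(f_t, C_prev)])  # first entry mostly erased, second mostly kept
```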

For example, in "I LIVE in FRANCE... I SPEAK FRENCH":

The cell state first holds LIVE, followed by FRANCE. The forget gate then has to drop the previous context, LIVE, so that it can be replaced with SPEAK, while FRANCE is kept so that we can use it to predict FRENCH.

Not entirely sure about the example, so correct me if I'm wrong.

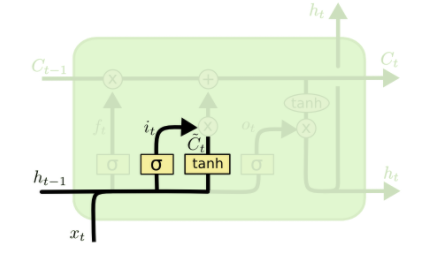

Next, we decide what new information we are going to store in the cell state.

This has two parts:

First, a sigmoid layer called the input gate layer decides which values to update. Second, a tanh layer creates a vector of new candidate values that could be added to the cell state.
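The two parts map onto `i_t = sigmoid(W_i . [h_{t-1}, x_t] + b_i)` for the input gate and `C~_t = tanh(W_C . [h_{t-1}, x_t] + b_C)` for the candidate values (again following the colah.github.io notation). A minimal sketch with hypothetical weights, using plain lists instead of a tensor library:

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def affine(W, b, v):
    """Compute W . v + b for a list-of-rows matrix W."""
    return [sum(w * x for w, x in zip(row, v)) + bi for row, bi in zip(W, b)]

def input_and_candidate(W_i, b_i, W_C, b_C, h_prev, x_t):
    concat = h_prev + x_t                                        # [h_{t-1}, x_t]
    i_t = [sigmoid(z) for z in affine(W_i, b_i, concat)]         # how much to write, in (0, 1)
    C_tilde = [math.tanh(z) for z in affine(W_C, b_C, concat)]   # what to write, in (-1, 1)
    return i_t, C_tilde

# Hypothetical weights: hidden size 2, input size 1.
i_t, C_tilde = input_and_candidate(
    W_i=[[0.0, 0.0, 4.0], [0.0, 0.0, -4.0]], b_i=[0.0, 0.0],
    W_C=[[0.0, 0.0, 1.0], [0.0, 0.0, 1.0]], b_C=[0.0, 0.0],
    h_prev=[0.0, 0.0], x_t=[1.0],
)
print(i_t, C_tilde)  # entry 0 is open for writing, entry 1 is mostly closed
```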

For example, we would want to add the new context, SPEAK, to the cell state to replace the old one we are forgetting, LIVE.

Now we actually update the old cell state into the new cell state with the new subject context.

This gets a little mathematical and I don't want to get into it here, but basically, it drops the information about the old subject and adds the new information, as we decided.
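For anyone who does want the math, the update is a single element-wise line, `C_t = f_t * C_{t-1} + i_t * C~_t`: the old state scaled by the forget gate, plus the new candidates scaled by the input gate. A minimal sketch with hypothetical gate values chosen at the extremes 0 and 1 so the effect is easy to see:

```python
def update_cell_state(f_t, C_prev, i_t, C_tilde):
    """C_t = f_t * C_{t-1} + i_t * C~_t, computed element-wise."""
    return [f * c + i * ct for f, c, i, ct in zip(f_t, C_prev, i_t, C_tilde)]

# Hypothetical gate values: entry 0 forgets its old value and writes the new candidate,
# entry 1 keeps its old value and ignores the candidate.
C_t = update_cell_state(f_t=[0.0, 1.0], C_prev=[5.0, 5.0],
                        i_t=[1.0, 0.0], C_tilde=[-0.5, 0.9])
print(C_t)  # [-0.5, 5.0]
```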

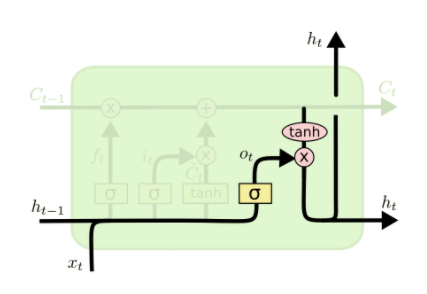

Finally, we decide what we are going to output. This output will be based on our cell state, but will be a filtered version of it. Again, there's math here, which is not all that important to understand, but basically, a sigmoid layer decides which parts of the cell state we want to output.

Then we put the cell state through a tanh activation function (to push the values to between -1 and 1) and multiply it by the output of the sigmoid gate, so that we only output the parts we decided to.
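In the same colah.github.io notation, this step is `o_t = sigmoid(W_o . [h_{t-1}, x_t] + b_o)` followed by `h_t = o_t * tanh(C_t)`. A minimal sketch with hypothetical weights `W_o`, `b_o`:

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def output_step(W_o, b_o, h_prev, x_t, C_t):
    """o_t = sigmoid(W_o . [h_prev, x_t] + b_o);  h_t = o_t * tanh(C_t)."""
    concat = h_prev + x_t
    o_t = [sigmoid(sum(w * v for w, v in zip(row, concat)) + b)
           for row, b in zip(W_o, b_o)]
    # tanh squashes the cell state into (-1, 1); the gate then filters it.
    return [o * math.tanh(c) for o, c in zip(o_t, C_t)]

h_t = output_step(W_o=[[0.0, 0.0, -6.0], [0.0, 0.0, 6.0]], b_o=[0.0, 0.0],
                  h_prev=[0.0, 0.0], x_t=[1.0], C_t=[5.0, 5.0])
print(h_t)  # entry 0 is mostly hidden, entry 1 is close to tanh(5)
```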

For example, it might output whether the verb relating to the subject is singular or plural, so that we can handle the grammar properly.
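Putting the steps together, one LSTM time step can be sketched as below, following the standard equations from the colah.github.io post. This is a readable plain-Python sketch, not an efficient or trained implementation; the `params` dictionary layout and all weight values are hypothetical.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def affine(W, b, v):
    """W . v + b for a list-of-rows matrix W."""
    return [sum(w * x for w, x in zip(row, v)) + bi for row, bi in zip(W, b)]

def lstm_step(params, h_prev, C_prev, x_t):
    """One LSTM time step; returns (h_t, C_t)."""
    concat = h_prev + x_t                                                       # [h_{t-1}, x_t]
    f = [sigmoid(z) for z in affine(params["W_f"], params["b_f"], concat)]      # forget gate
    i = [sigmoid(z) for z in affine(params["W_i"], params["b_i"], concat)]      # input gate
    c_hat = [math.tanh(z) for z in affine(params["W_C"], params["b_C"], concat)]  # candidates
    o = [sigmoid(z) for z in affine(params["W_o"], params["b_o"], concat)]      # output gate
    C_t = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, C_prev, i, c_hat)]
    h_t = [ok * math.tanh(ck) for ok, ck in zip(o, C_t)]
    return h_t, C_t

def run_lstm(params, xs, hidden_size):
    """Feed a sequence of input vectors through the same LSTM cell."""
    h = [0.0] * hidden_size
    C = [0.0] * hidden_size
    outputs = []
    for x_t in xs:
        h, C = lstm_step(params, h, C, x_t)
        outputs.append(h)
    return outputs, C

# Hypothetical parameters: hidden size 1, input size 1 (each row has 2 weights: [h, x]).
params = {
    "W_f": [[0.0, 0.0]], "b_f": [5.0],    # forget gate biased toward "keep"
    "W_i": [[0.0, 10.0]], "b_i": [-5.0],  # write only when the input is large
    "W_C": [[0.0, 1.0]], "b_C": [0.0],
    "W_o": [[0.0, 0.0]], "b_o": [5.0],
}
outputs, C = run_lstm(params, [[1.0], [0.0], [0.0], [0.0]], hidden_size=1)
print(C)  # the value written at step 1 is still largely present after three quiet steps
```

Because the forget gate in this toy setup stays close to 1 and the input gate only opens for a large input, the value written at the first step is carried across the following steps almost unchanged, which is the conveyor-belt behavior of the cell state.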

That's the general idea of how an LSTM works and carries information through its long short-term memory structure. This thread is essentially word for word from https://colah.github.io/posts/2015-08-Understanding-LSTMs/. I just wanted to refresh my knowledge of this from a week back. Keep sharing :3
