This leaked internal Facebook report on its content moderation failures (and qualified successes) leading up to the Jan. 6 riot makes for a fascinating, concerning, and also just plain ~weird~ read. https://www.buzzfeednews.com/article/ryanmac/full-facebook-stop-the-steal-internal-report

Facebook at this point has whole teams and task forces full of Very Serious People devoted to monitoring the site for bad guys. They've developed a CIA-worthy lexicon of jargon and acronyms to diagnose and classify the different types of bad guys and intel techniques.

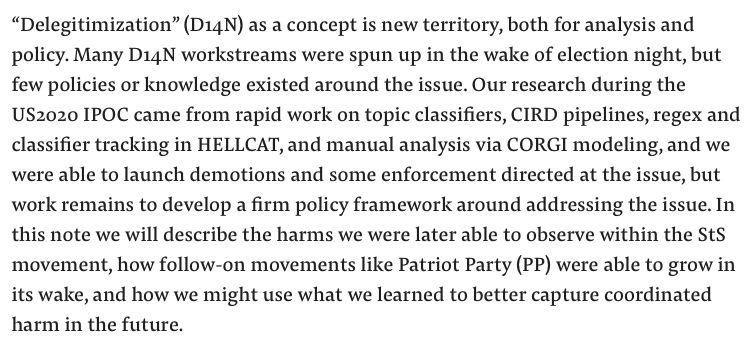

It's clear some folks at FB are putting real effort into making the site non-democracy-destroying. Yet all of their topic classifiers, CIRD pipelines, regex and classifier tracking in HELLCAT, and manual analysis via CORGI modeling are no match for the site's underlying dynamics.

I know it's a cliche at this point, but remind yourself that this was a site that an undergrad started for college kids to check each other out and post gossipy wall posts on each other's profiles, and then read this paragraph again. https://www.buzzfeednews.com/article/ryanmac/full-facebook-stop-the-steal-internal-report

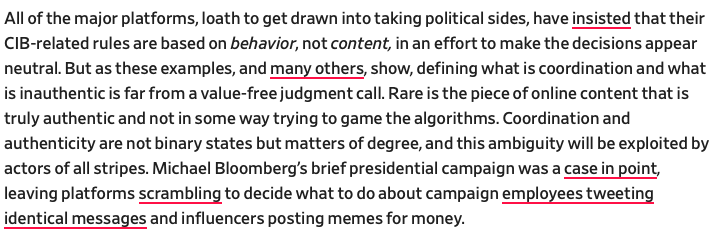

Who could have guessed that "coordinated inauthentic behavior" would prove an impoverished and dangerously inadequate framework for addressing the threats posed by online movements to democratic societies?

Narrator: Lots of people guessed that. https://twitter.com/katestarbird/status/1386817261109792770

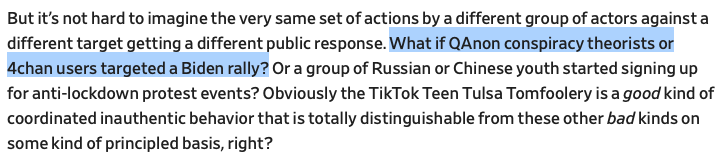

I know "coordinated inauthentic behavior" is an easy target, but it's emblematic of FB's failures in this realm. It's a tortured attempt to define online harm in an objective/apolitical way, to avoid value judgments. And *that's* how you miss something like the Jan. 6 riots.

Here's @evelyndouek in July 2020 on the weirdly uncritical acceptance of FB's awkward, squishy "coordinated inauthentic behavior" frame, and why that might turn out to be a problem: @ https://slate.com/technolog...

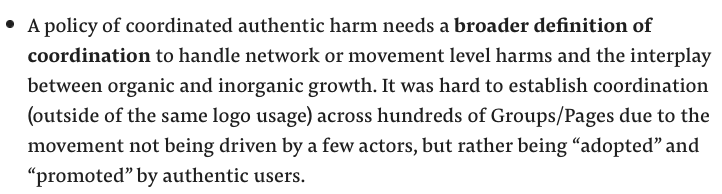

FB's internal report recommended a "broader definition of coordination," which feels like rearranging the stars in the Ptolemaic system. Until Facebook is willing to stake out some values more substantive than "authenticity," it's always going to be fighting the previous battle.

But the deeper, underlying problem is one that simply can't be addressed by teams of Facebook researcher/spooks with names like the Disaggregating Harmful Networks Taskforce wrestling through updated Adversarial Harmful Networks policies. (I swear I'm not making these up.)

The underlying problem with Facebook is its own basic premise: that building automated global networks to instantly connect vast numbers of people around whatever turns out to best push their buttons would somehow be an inherent good for society, and not a fast-motion trainwreck.

If I still worked at @ozm i'd 100% have @dlberes slacking me exasperatedly rn to make this into a post instead of a convoluted twitter thread.

I'm sure this is true, and tbf the report does nudge in the direction of construing "harm" more broadly than in objective behavioral terms. My point is that the scope of work for these teams is always constrained to take the underlying model as a given. https://twitter.com/Flogabray/status/1386871638692700160
