This is an open peer review of the 3rd version of the #SystematicReview and #MetaAnalysis by @HannaMOllila, @liisatlaine and @jukka_koskela on the effectiveness of #facemasks for preventing #COVID19 infection: https://www.medrxiv.org/content/10.1101/2020.07.31.20166116v3 1/36

First of all, I have to admit that the review has genuinely improved over time. However, there are still a number of serious concerns with it. I will try and elucidate point-by-point. 2/36

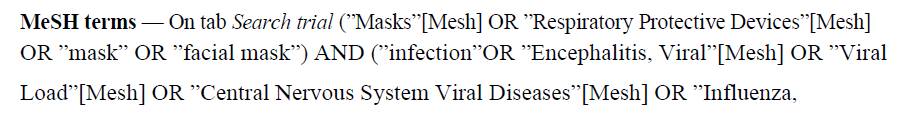

The PubMed systematic search strategy is the easiest to evaluate. It is very short. The terms covering #facemasks are the most important and thus ought to be exhaustive. In my expert opinion they are not and so this search lacks face validity. 3/36

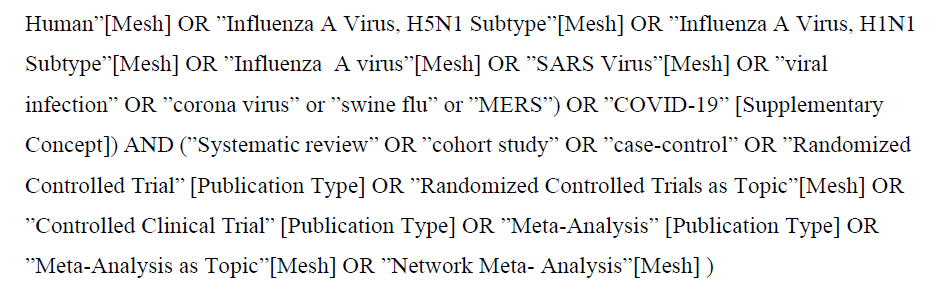

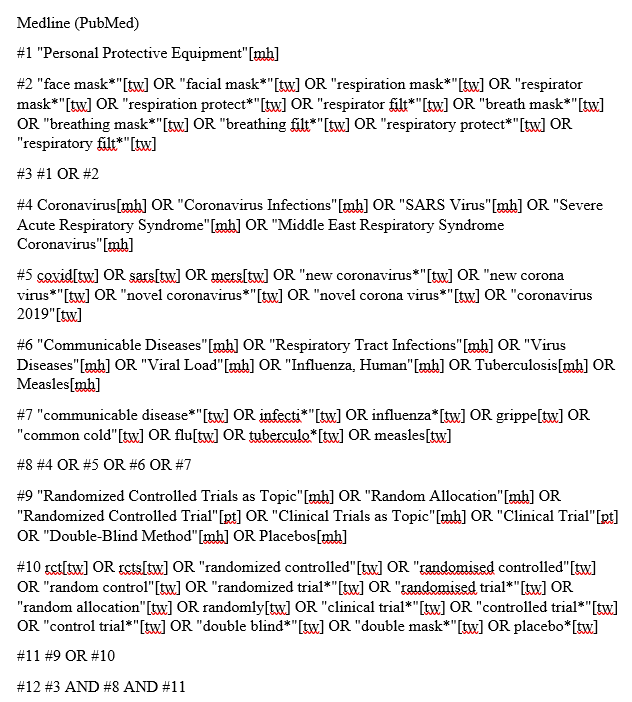

I asked Heikki Laitinen, my information specialist friend from @UniEastFinland to make a better one for a course I teach on #SystematicReview methods. It looks like this. 4/36

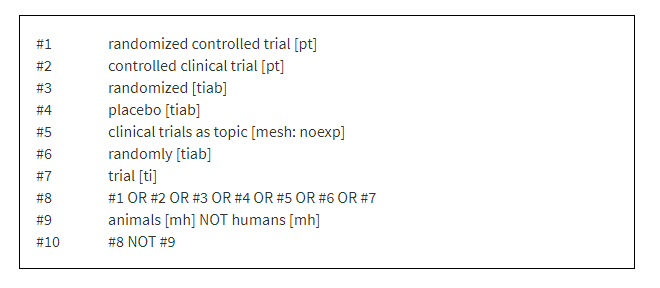

@HannaMOllila et al. use mostly just MeSH terms. They do not employ an #RCT filter, such as the @cochranecollab sensitivity- and precision-maximizing version available here: https://training.cochrane.org/handbook/current/chapter-04-technical-supplement-searching-and-selecting-studies#section-3-6-1 5/36

The thing with a #SystematicReview is that if the search is suspicious then everything that builds on it (i.e. the whole review) is equally suspicious. Results and conclusions become very doubtful. So, at this point things are not looking good. 6/36

However, there are clear improvements! Such as a detailed table of included studies, and the naming of authors who conducted data extraction and risk of bias assessment. It’s also great to see how authors judged overall RoB per study. This is transparent! 7/36

Unfortunately, the authors continue the RoB section with a very curious sentence. I’ve read this a dozen times and I have no idea what they mean. Who is prioritizing categories of what here?!? And yes, any source of bias can be problematic but that is rather self-evident. 8/36

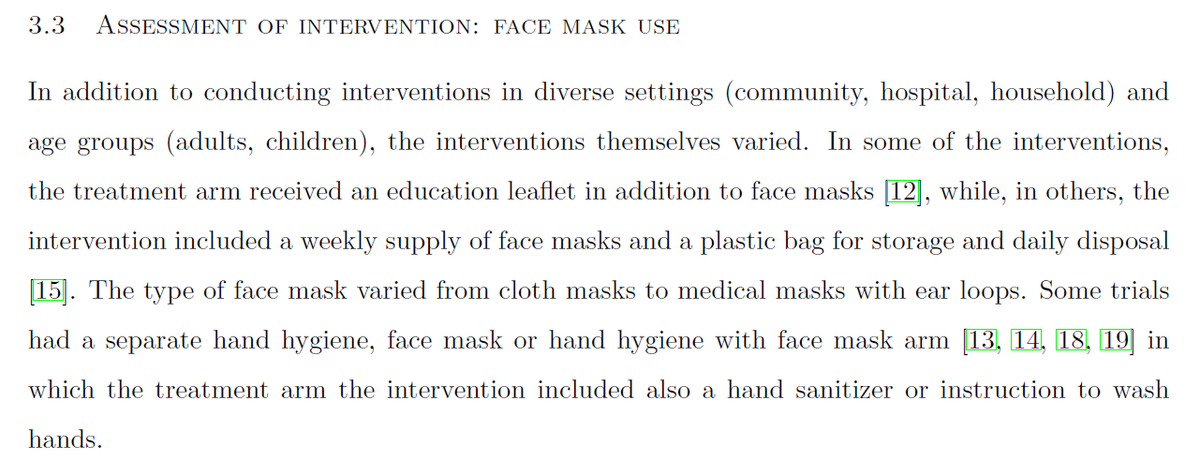

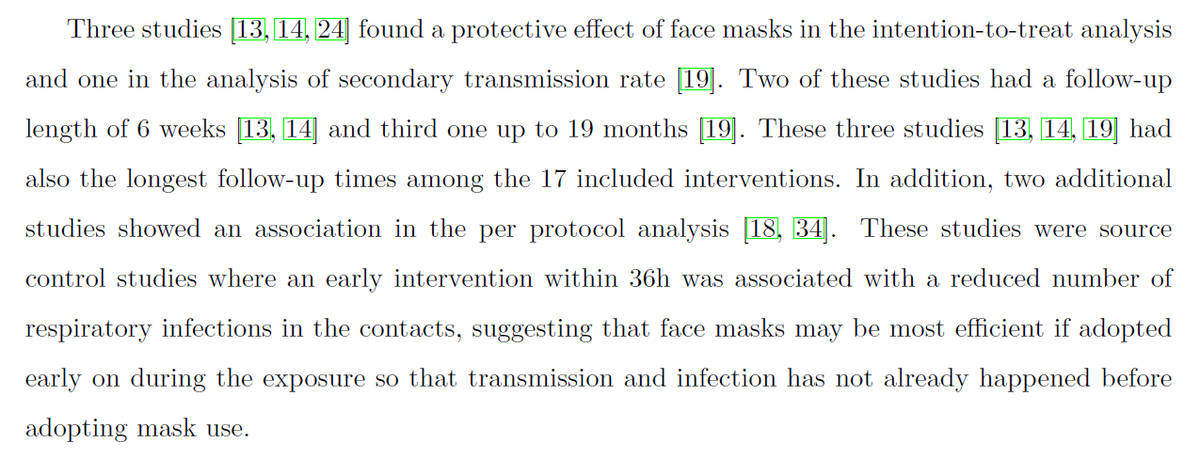

In the Results things get interesting. The authors describe the included studies’ #facemask interventions. This would be great if only it led to a differentiation of what interventions are actually similar enough to combine in meta-analysis. Spoiler alert: it doesn’t. 9/36

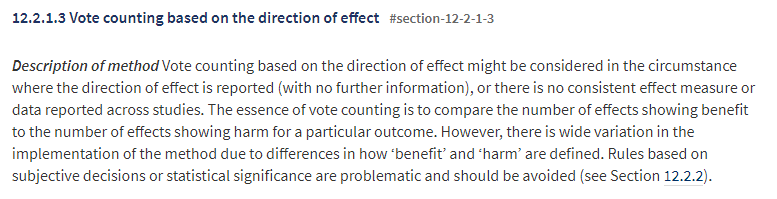

Next, the authors mix effects of interventions with their risk of bias assessment results and things get a little out of control. They decided to do vote counting. This means counting studies with pos, neg and inconclusive findings. This is wrong on so many levels. 10/36

The main problems with vote counting are that it provides no information on the magnitude of effects and it ignores differences in the relative sizes of the studies. It also artificially homogenizes the studies by largely ignoring PICO differences. https://training.cochrane.org/handbook/current/chapter-12 11/36
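To make the contrast concrete, here is a minimal sketch with invented numbers. The three trials, their log risk ratios and their standard errors are hypothetical and are not taken from the review. It compares a vote count with a fixed-effect inverse-variance pooled estimate, which is one common alternative and not necessarily the model the review authors used.

```python
import math

# Hypothetical trials: (name, log risk ratio, standard error).
# These numbers are invented for illustration only.
TRIALS = [
    ("small trial A", -0.40, 0.50),
    ("small trial B", 0.30, 0.45),
    ("large trial C", -0.20, 0.08),
]


def vote_count(trials, z=1.96):
    """Classify each trial by whether its 95% CI excludes no effect (log RR = 0)."""
    votes = {"favours": 0, "against": 0, "inconclusive": 0}
    for _name, estimate, se in trials:
        lower, upper = estimate - z * se, estimate + z * se
        if upper < 0:
            votes["favours"] += 1
        elif lower > 0:
            votes["against"] += 1
        else:
            votes["inconclusive"] += 1
    return votes


def pool_fixed_effect(trials, z=1.96):
    """Inverse-variance weighted mean of the log risk ratios with a 95% CI."""
    weights = [1.0 / se ** 2 for _name, _estimate, se in trials]
    total = sum(weights)
    pooled = sum(w * est for w, (_n, est, _se) in zip(weights, trials)) / total
    pooled_se = math.sqrt(1.0 / total)
    return pooled, pooled - z * pooled_se, pooled + z * pooled_se


if __name__ == "__main__":
    print(vote_count(TRIALS))
    pooled, lower, upper = pool_fixed_effect(TRIALS)
    print(f"pooled RR {math.exp(pooled):.2f} "
          f"(95% CI {math.exp(lower):.2f} to {math.exp(upper):.2f})")
```

With these made-up inputs the vote count reads as one favourable and two inconclusive trials, while the pooled estimate is dominated by the large trial and comes with an effect size and a confidence interval. Neither approach fixes the apples-and-oranges problem: pooling only makes sense once the PICO question says the studies are similar enough to combine.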

What follows is a slightly wobbly account of the authors’ RoB assessment results. The authors highlight non-compliance as the main problem, i.e. participants not using masks as they were supposed to (or using when not supposed to). 12/36

It is a shame that the authors did not use the @cochranecollab RoB2 tool because it is directly equipped to deal with bias due to deviations from intended interventions. With RoB1 this is forced and awkward. The Handbook explains the details: https://training.cochrane.org/handbook/current/chapter-08#section-8-4 13/36

Next the authors blurt out an oddly separate and out-of-place statement as a paragraph heading. I cannot fathom what the authors thought would be informative here. You’re supposed to say which trials and what details! 14/36

Section 3.6 rolls out the actual numerical effectiveness results. One does not need to be a statistician (but it helps) to understand that the authors first lump everything together and then try in a number of ways to tease out different results from the data. 15/36

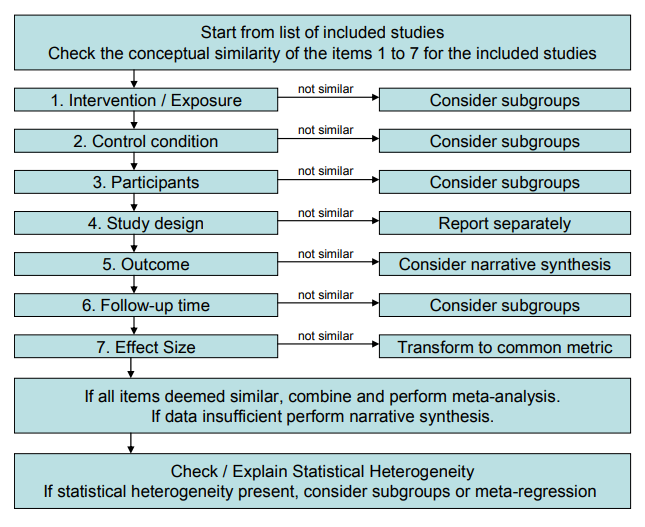

All this may seem awfully convincing for those not initiated into the secrets of #SystematicReview. But this is NOT the way we do analysis in @cochranecollab. The PICO question determines what is actually similar enough to combine. This review combines apples and oranges. 16/36

Egregious self-promotion alert! My colleagues and I did a methods paper almost 10 years ago recommending how to synthesize results of intervention studies. https://www.sjweh.fi/show_abstract.php?abstract_id=3201 Reading this might have helped. 17/36

I also recommend checking out the #systematicreview by @jeremyphoward et al: https://www.pnas.org/content/118/4/e2014564118 and the evaluation I did of the same: https://twitter.com/MrJaniR/status/1349605357228855297 There it is crystal clear that the authors understand the intervention and how it’s supposed to work. 18/36

Here though, it seems like the authors don’t actually care. A mask is just a mask, right? Well, I at least disagree strongly. It should be pretty clear by now that surgical masks, cloth masks and tightly fitting FFP2/FFP3 masks are distinctly different. 19/36

This is how the authors justify the need for using adjusted data instead of raw data in their methods section. I just don’t get this. Randomisation should even out all of this. Thankfully @JesperKivela can explain so much better than me what is going on here. 20/36

After the Results we come to the bit about making sense of them. The authors rightly title this section as Quality of evidence. Unfortunately, this is probably the biggest let-down of the whole #SystematicReview due to the lack of #GRADE. 21/36

For those not in the know, #GRADE is a system that helps authors of a #SystematicReview interpret their findings and draw conclusions by judging five things: risk of bias, imprecision, indirectness and inconsistency of the results, and publication bias. https://www.gradeworkinggroup.org/ 22/36
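As a rough illustration of how the five judgements combine into one certainty rating, here is a simplified sketch. It assumes evidence from randomised trials, which starts at high certainty, and it treats downgrading as plain arithmetic. Real #GRADE assessments are structured judgements, not a formula, and the function name and example inputs below are hypothetical.

```python
# Certainty levels from lowest to highest, as used in GRADE.
LEVELS = ["very low", "low", "moderate", "high"]

# The five downgrading domains named in the text above.
DOMAINS = (
    "risk of bias",
    "imprecision",
    "indirectness",
    "inconsistency",
    "publication bias",
)


def grade_certainty(downgrades, start="high"):
    """Return the certainty level after applying per-domain downgrades.

    `downgrades` maps a domain name to 0, 1 or 2 levels (hypothetical input).
    Domains that are not listed count as 0 (no serious concern).
    """
    unknown = set(downgrades) - set(DOMAINS)
    if unknown:
        raise ValueError(f"unknown domains: {sorted(unknown)}")
    if any(steps not in (0, 1, 2) for steps in downgrades.values()):
        raise ValueError("each domain can be downgraded by 0, 1 or 2 levels")
    index = LEVELS.index(start) - sum(downgrades.values())
    return LEVELS[max(index, 0)]  # certainty cannot drop below "very low"


if __name__ == "__main__":
    # Invented example: serious concerns in two domains.
    print(grade_certainty({"risk of bias": 1, "imprecision": 1}))
```

The point of the sketch is only that the domains feed into a single stated level of certainty, which is what a reader needs in order to judge how much to trust a pooled effect.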

Based on the abstract, the authors have considered (maybe) 3/5 of the #GRADE dimensions. Between-study heterogeneity (leading to wide confidence intervals) refers to imprecision, compliance bias to risk of bias and differences by environmental settings to indirectness. 23/36

However, because they do not use #GRADE, the authors cannot bring these diverse observations together to say how certain we can be that the effects they have uncovered are true. There is just an unjustified remark about findings supporting #Facemask use. 24/36

Now that we’ve gone over what is in the review, it is worth also noting what is NOT there and that is any form of explanation or justification of the changes the authors made in their plans following the publication of their protocol. 25/36

As I have already done this, I refer back to my detailed examination of the protocol on which this review is (supposedly) based: https://twitter.com/MrJaniR/status/1334775050923290624 26/36

Also, I cannot be bothered to compare this version of the review with the promises the authors made in their protocol because I already made this comparison with the 2nd version of the review: https://twitter.com/MrJaniR/status/1335855385840848896 27/36

I reiterate that the point of the protocol is not just to exist and enable ticking the PRISMA checklist. It is a set of promises and the expectation is that the review fulfils them. If not, then we need an exhaustive and acceptable explanation why. https://training.cochrane.org/handbook/current/chapter-01#section-1-5 28/36

As a side note, I previously urged the authors to explain how their review would improve upon the current gold standard that is the @cochranecollab review by Jefferson et al.: https://twitter.com/MrJaniR/status/1334776262905253888 This would nicely justify their review. 29/36

Sadly, they dodged the opportunity and made a frivolous reference to AN OUTDATED VERSION of a superior review. This was published in BMJ in 2007, whereas the @cochranecollab version is from 2020. 30/36

Pro tip: If you want to insult a fellow researcher then ignore the obvious and important reason for referring to their work (such as explaining how you intend to improve on their work) and make a self-serving tactical reference like this. 31/36

Seriously, if the authors just made a mistake by referring to the review by Jefferson et al. published in the @BMJ_latest in 2007 https://www.bmj.com/content/early/2006/12/31/bmj.39393.510347.BE then they can correct it by first reading and then referring to the updated @cochranecollab review by the same authors. 32/36

All in all, I would reject this manuscript because of: 1) sub-par systematic search, 2) unjustified lumping of everything in meta-analysis, 3) no #GRADE, 4) no explanation of deviations from protocol. This also means that I don’t trust the review’s results or conclusions. 33/36

If you want the most reliable evidence on the effectiveness of #facemasks (and other relevant interventions) on reducing the spread of respiratory viruses then refer to the 2020 @cochranecollab review by Tom Jefferson et al.: https://www.cochranelibrary.com/cdsr/doi/10.1002/14651858.CD006207.pub5/full 34/36

To anyone questioning my qualifications in judging a #SystematicReview I say I was the Managing Editor of @CochraneWork in 2010-2019. So, I’ve done this a lot. I’m also an author on a very relevant published @cochranecollab review. 35/36 https://www.cochranelibrary.com/cdsr/doi/10.1002/14651858.CD011621.pub5/full

Finally, I should probably stress that my personal opinion of whether we should use masks or not is entirely irrelevant and uninteresting. Believe the evidence and not me. I also harbor no ill will towards @HannaMOllila et al. and wish them success with their work. 36/36
