(1/n) Saw this letter in @NEJM…also covered by journalists. Concurrent vs non-concurrent controls is a complex issue in platform trials. This is a tweetorial on these issues, and a comment on the letter. https://www.nejm.org/doi/full/10.1056/NEJMc2102446?af=R&rss=currentIssue

(2/n) Most importantly, the letter addresses the @remap_cap results for tocilizumab and sarilumab. Both the concurrent and non-concurrent analyses are reported. They agree. This is a non-issue for these particular therapies. https://www.medpagetoday.com/infectiousdisease/covid19/92203

(3/n) Why does this issue even exist? In any trial you can have time trends in the data. Patients may survive or function or feel at a lower (or higher) level at the beginning of the study than at the end. You could have oscillations during the trial, etc.

(4/n) For “standard” designs with equal randomization over time, time trends cancel out of standard estimates. You have an equal number of control and treatment patients in the first month, the second month, etc. The time effects just subtract out when you estimate.
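
A quick sketch of that cancellation, assuming a simple additive model (the symbols are only for illustration): outcomes in month $t$ have mean $\mu + \gamma_t$ on control and $\mu + \tau + \gamma_t$ on treatment, and each arm enrolls the same $n_t$ patients in month $t$, with $N = \sum_t n_t$ per arm. Then

$$
E\left[\bar{Y}_{\text{trt}} - \bar{Y}_{\text{ctrl}}\right]
= \sum_{t} \frac{n_t}{N}\,(\mu + \tau + \gamma_t) - \sum_{t} \frac{n_t}{N}\,(\mu + \gamma_t)
= \tau .
$$

Both arms put the same weight $n_t/N$ on each month, so every $\gamma_t$ drops out. Once the weights differ between arms, they no longer do.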

(5/n) Many adaptive trials change randomization over time. This is a big deal with time trends. If one arm enrolled more when survival was high, and another enrolled more when survival was low, you can get wildly biased estimates of the treatment effect.

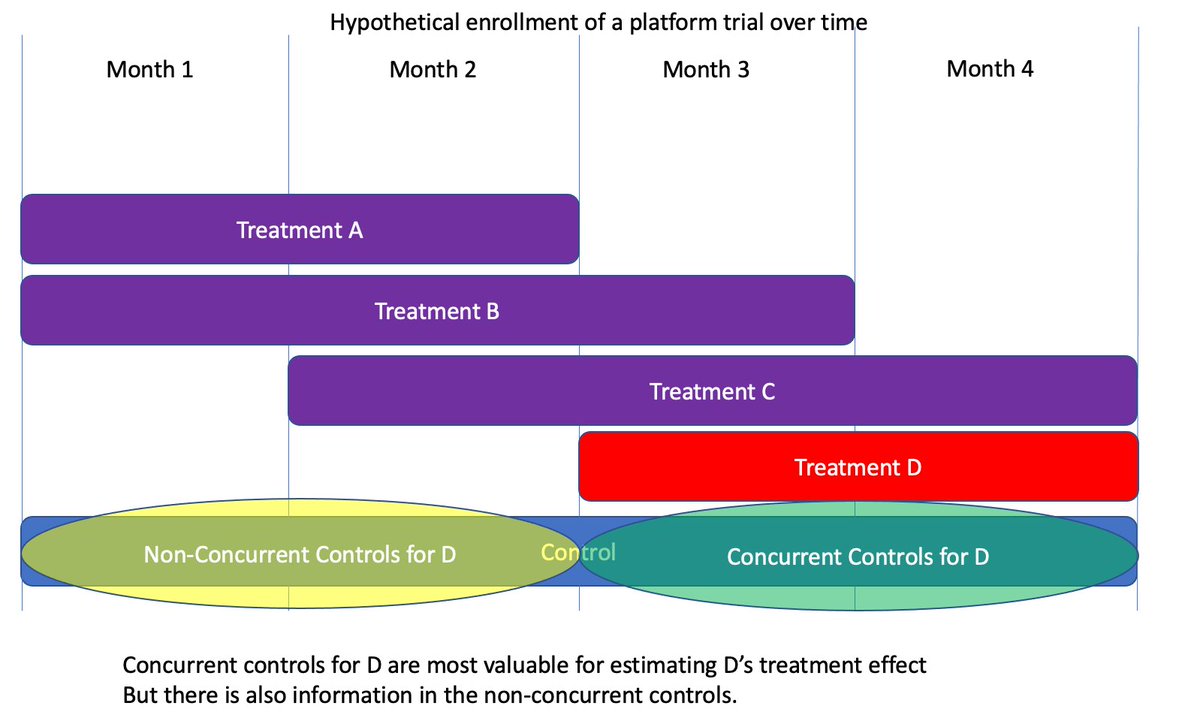

(6/n) This occurs in a platform when control has been enrolling the whole time and it is compared to a late-arriving treatment. If you naively compare all the subjects, the late arrival wasn’t affected by early trial trends, while control was. You get biases.
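
To put rough numbers on that, here is a minimal sketch that computes the expected naive difference in means when control enrolls throughout and a treatment arrives late. The month effects, sample sizes, and true effect are all made up for illustration, and the additive model (outcome = month effect + arm effect) is an assumption.

```python
def expected_mean(n_by_month, month_effects, arm_effect=0.0):
    """Expected arm mean when outcome = month effect + arm effect."""
    total = sum(n_by_month)
    weighted = sum(n * (m + arm_effect) for n, m in zip(n_by_month, month_effects))
    return weighted / total


# Hypothetical numbers, chosen only for illustration.
month_effects = [0.0, 1.0, 2.0, 3.0]  # outcomes drift upward over four months
true_effect = 0.5                     # true benefit of the late-arriving arm
n_control = [50, 50, 50, 50]          # control enrolls in every month
n_late = [0, 0, 50, 50]               # late arm enrolls only in months 3-4

naive_difference = (
    expected_mean(n_late, month_effects, true_effect)
    - expected_mean(n_control, month_effects)
)
bias = naive_difference - true_effect

print(f"naive difference = {naive_difference}, bias = {bias}")
# prints: naive difference = 1.5, bias = 1.0
```

In this toy setup the naive comparison reports an effect three times the true one, purely because the late arm never saw the low early months.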

(7/n) As Dodd et al note, these biases can get quite large. If you ignore them, you get bad estimates and poorly behaved statistical tests.

Major platform trials do not ignore them.

(8/n) I think there is general agreement up to here. Now what to do about it? In some trials people consider time trends to be unlikely and don’t adjust. COVID is clearly a situation where we worry about time trends.

(9/n) One option is to just ignore everything but concurrent controls. If control is always randomizing and treatment D only enrolls August-December, then we compare treatment D only to the controls enrolled at the same time (August-December).

(10/n) If randomization is constant during the time of the concurrent controls, simple estimates avoid bias (the “cancellation” discussion from above). If you also have response adaptive randomization, you have to do more even with concurrent controls.
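
A minimal sketch of the concurrent-control restriction, using made-up noise-free records. The 0.1-per-month drift, the true effect of 0.5, and the record layout are assumptions for illustration; randomization is constant (one patient per arm per month) while D is open.

```python
from statistics import mean

# Hypothetical noise-free records: (arm, enrollment month 1-12, outcome).
# Outcomes drift by 0.1 per month; arm D adds a true effect of 0.5.
TRUE_EFFECT = 0.5
records = []
for month in range(1, 13):
    records.append(("Ctrl", month, 0.1 * month))
    if 8 <= month <= 12:  # D enrolls August-December only
        records.append(("D", month, 0.1 * month + TRUE_EFFECT))


def difference_in_means(rows):
    """Mean outcome on D minus mean outcome on control."""
    d = mean(y for arm, _, y in rows if arm == "D")
    c = mean(y for arm, _, y in rows if arm == "Ctrl")
    return d - c


# Keep only patients enrolled in a month when D was also enrolling.
d_months = {month for arm, month, _ in records if arm == "D"}
concurrent = [row for row in records if row[1] in d_months]

all_controls_estimate = difference_in_means(records)
concurrent_estimate = difference_in_means(concurrent)

print(f"all controls: {all_controls_estimate:.2f}")      # biased by the drift
print(f"concurrent only: {concurrent_estimate:.2f}")     # recovers the true effect
```

The price is visible in the record counts: seven of the twelve control months are simply dropped.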

(11/n) The disadvantage with concurrent controls is that you throw away a lot of data. Can we make use of that information without running into biases? Yes, but we do have to be smart about it.

(12/n) Without any other arms in the trial, the non-concurrent controls would indeed be worthless. They would be hopelessly confounded with the time effects. Without some strong assumptions, your best option is to ignore them. Platforms are different....

(13/n) Most platforms have other arms. In the figure below we evaluate arms monthly, stopping some old arms and starting new ones each month.

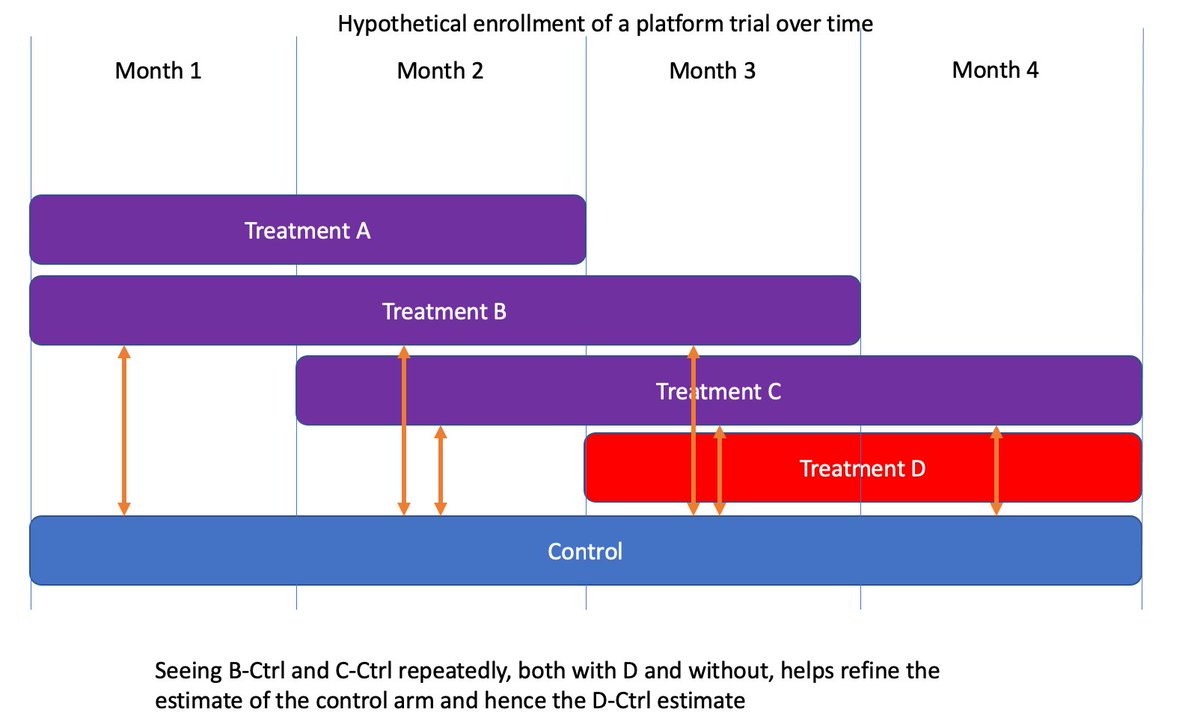

(14/n) If there are time effects, then the arms are moving up and down from month 1 to month 4. You can model that by putting in an effect for month in your analysis.

Y = Intercept + Arm Effects + Month effects + error
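
Written out a bit more formally, with $a(i)$ the arm and $m(i)$ the enrollment month of patient $i$ (the notation and the reference-level constraints are my choice for illustration, one common way to make the model identifiable):

$$
Y_i = \alpha + \beta_{a(i)} + \gamma_{m(i)} + \varepsilon_i,
\qquad \beta_{\text{Ctrl}} = 0,\quad \gamma_{1} = 0 .
$$

Here $\beta_D$ is the effect of arm D versus control, and the $\gamma$ terms soak up whatever the months are doing.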

(15/n) If I want to estimate the treatment effect for arm D versus control, months 1-2 are not as valuable as the concurrent month 3-4 data, but have some value. You can plug this into your favorite regression software and it will pick an estimator which uses months 1 and 2.

(16/n) I’m omitting the math here, but happy to follow up if people want to see it. In a standard trial we get “easy and automatic” cancellation of time effects with the standard difference in means. Here it's more complex, but we STILL cancel them out. Hence no time biases.

(17/n) How much does this gain over using only concurrent controls? It depends on the sample sizes and the amount of overlap between all the arms. In the example above you reduce the variance by 14%, without introducing any biases. Why throw away free information?
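
Here is a minimal sketch of that comparison for a made-up platform. The enrollment pattern, cell sizes, and effects below are hypothetical and are not the design in the figure, so the printed reduction will not be the 14% quoted above. It fits the arm + month model by least squares on noise-free cell means (to check the time trend cancels) and compares the variance of the D estimate with the concurrent-only difference in means.

```python
def solve(a, b):
    """Solve a x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [x - factor * p for x, p in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


# Hypothetical platform: months in which each arm enrolls (assumed).
ENROLLMENT = {"Ctrl": [1, 2, 3, 4], "A": [1, 2], "B": [1, 2, 3],
              "C": [2, 3, 4], "D": [3, 4]}
N_PER_CELL = 25  # patients per arm per month (assumed)
ARM_EFFECT = {"Ctrl": 0.0, "A": 0.2, "B": -0.1, "C": 0.3, "D": 0.5}  # assumed truths
MONTH_EFFECT = {1: 0.0, 2: 1.0, 3: -0.5, 4: 2.0}  # a jagged time trend (assumed)

ARMS = ["A", "B", "C", "D"]  # Ctrl is the reference arm
MONTHS = [2, 3, 4]           # month 1 is the reference month


def design_row(arm, month):
    """Intercept, arm dummies, month dummies."""
    return [1.0] + [float(arm == a) for a in ARMS] + [float(month == m) for m in MONTHS]


cells = [(arm, month) for arm, months in ENROLLMENT.items() for month in months]
rows = [design_row(arm, month) for arm, month in cells]
means = [ARM_EFFECT[arm] + MONTH_EFFECT[month] for arm, month in cells]

# Normal equations, with each cell weighted by its sample size.
p = len(rows[0])
xtx = [[N_PER_CELL * sum(r[i] * r[j] for r in rows) for j in range(p)] for i in range(p)]
xty = [N_PER_CELL * sum(r[i] * y for r, y in zip(rows, means)) for i in range(p)]

d_index = 1 + ARMS.index("D")
d_hat = solve(xtx, xty)[d_index]

# Variance of the D estimate is sigma^2 times the D diagonal entry of (X'X)^-1.
unit = [float(i == d_index) for i in range(p)]
var_full = solve(xtx, unit)[d_index]

# Concurrent-only difference in means: sigma^2 * (1/n_D + 1/n_Ctrl) over D's months.
n_concurrent = N_PER_CELL * len(ENROLLMENT["D"])
var_concurrent = 1.0 / n_concurrent + 1.0 / n_concurrent

print(f"estimated D effect: {d_hat:.3f} (truth {ARM_EFFECT['D']})")
print(f"variance reduction vs concurrent-only: {1 - var_full / var_concurrent:.1%}")
```

The D estimate comes back at its true value despite the jagged month effects, and the full-model variance is smaller than the concurrent-only one. How much smaller depends entirely on the overlap pattern you feed in.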

(18/n) Additionally, these models naturally discount control information the farther back in time it goes. As there are fewer overlapping arms, the earlier controls are gradually ignored in favor of later ones, as you would intuitively expect.

(19/n) Why is there information in these prior months? It’s the overlap. In months 3-4 we see D-Ctrl directly, but we also see how Ctrl relates to arms B and C while D is enrolling. We also see those same B-Ctrl and C-Ctrl contrasts in months 1-2, so they refine the control estimate.

(20/n) There are other models. The one above just assumes unconstrained time effects in each month. In many situations you may expect a smooth change over time rather than jagged up/down motions. In those cases (including @remap_cap) people will apply smoothers over time.

(21/n) Finally, some comments on the letter itself… I would have enjoyed a nuanced discussion of the modeling. Instead, most of the letter is an extreme example of something that is NOT done in these platform trials, with only a couple of sentences on what is done.

(22/n) Suppose you wrote a paper on a complex surgical technique. A letter comes in “Surgeries are bad if you stab the patient repeatedly with the scalpel. Apparently the authors did something different, but you never know whether those things work….”

(23/n) On the modeling actually done, the letter restricts to two sentences.

“The first is that the more modeling conducted, the less efficient the design is in terms of required sample sizes”.

We model all the time (covariate adjustments, etc.), exactly for efficiency gains.

(24/24) Second

“More importantly, one never knows whether the modeling has successfully eliminated all potential bias”.

It’s hard to argue against so broad a statement, but there needs to be a concrete concern. In the time trends and analysis described above, the bias is provably 0.
