Studies using list randomization to measure #VAW in the last few years have 📈

Inspired by recent papers, a 🧵 of some notables + what I've learned from reading them!

[Throw-back to blog summarizing early work on @UNICEFInnocenti w/ @TiaPalermo 👇🏽]

1/n https://blogs.unicef.org/evidence-for-action/measuring-taboo-topics-list-randomization-for-research-on-gender-based-violence/

Why use a list to measure violence instead of [or in addition to] direct measures? I've seen two main reasons:

1) if you cannot meet the ethical req for asking direct measures

2) if you're worried abt under-reporting [esp if your intervention might increase reporting]

2/n

Lists were used during COVID-19 when ethical risks were too high to ask questions directly

Most recently @yloxford included these in Peru & India asking youth about family violence - finding increases - 🧵 on #Peru 🇵🇪 findings by @MartaFavara 👇🏽

3/n https://twitter.com/MartaFavara/status/1383771454592872461

. @ebert_cara & @jisteinert also use lists to collect measures of severe physical & sexual violence against women & children within an online survey - motivated by ethical concerns 👇🏽

4/n https://twitter.com/a_peterman/status/1374027878065606658

Others used lists as robustness checks on direct measures in IEs

In Bolivia & Ethiopia lists confirm impacts on direct reports of violence against girls & IPV, respectively

Bolivia @diegoubfal @selimgulesci: http://documents1.worldbank.org/curated/en/498221613504523709/pdf/Can-Youth-Empowerment-Programs-Reduce-Violence-against-Girls-during-the-COVID-19-Pandemic.pdf

Ethiopia @kotsadam: https://www.cesifo.org/DocDL/cesifo1_wp8108.pdf

What do we learn methodologically from list studies?

First, most evidence points to under-reporting in standard surveys - including for IPV

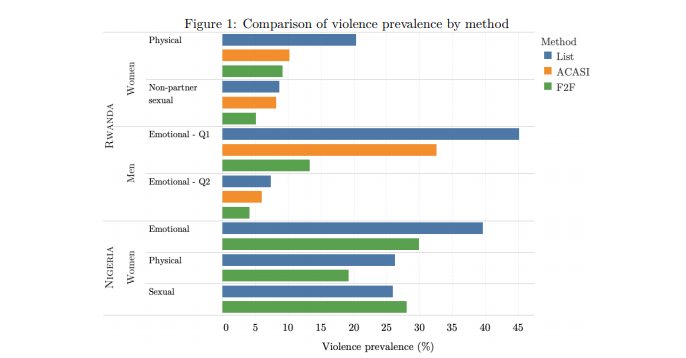

Nice paper by @ccullen_1 shows lists generally result in ⬆️ prevalence in #Nigeria & #Rwanda

https://openknowledge.worldbank.org/handle/10986/33876

6/n

Similarly @Aure_Lepine et al. find direct surveys underestimate IPV by 16–20 pps as compared to list randomization measures in Burkina Faso 🇧🇫 in @socscimed

https://www.sciencedirect.com/science/article/pii/S0277953620305451

7/n
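
[Aside for anyone new to the method: comparisons like the ones above rest on a simple difference in means. One random half of the sample counts how many of a set of non-sensitive "control" statements are true for them; the other half gets the same statements plus the one sensitive item. A minimal Python sketch, assuming a single list with simple random assignment. The function name and the counts are made up for illustration and are not from any study in this thread.]

```python
import math
from statistics import mean, variance


def list_estimate(control_counts, treatment_counts):
    """Difference-in-means prevalence estimate for a single list experiment.

    control_counts: items endorsed by respondents who saw only control items.
    treatment_counts: items endorsed by respondents who saw the same control
        items plus the one sensitive item.
    Returns (estimated prevalence, standard error).
    """
    est = mean(treatment_counts) - mean(control_counts)
    # Unequal-variance standard error for a difference in two sample means
    se = math.sqrt(
        variance(treatment_counts) / len(treatment_counts)
        + variance(control_counts) / len(control_counts)
    )
    return est, se


# Hypothetical counts, for illustration only
control = [1, 2, 2, 3, 1, 2, 2, 1]
treatment = [2, 2, 3, 3, 1, 3, 2, 2]
est, se = list_estimate(control, treatment)
print(f"estimated prevalence: {est:.2f} (SE {se:.2f})")
```

[The gap between this estimate and the share saying "yes" to the direct question is the under-reporting estimate quoted in pps.]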

But, not always!

@taitarasu & @VeronicaFrisan1 compare item by item for IPV in the DHS vs list methods in Peru - so clearly these gaps vary by context, population, including levels of social norms & stigmatization around violence

8/n https://twitter.com/a_peterman/status/1363883786576949248

This work can help us understand IPV in numerous ways, including [but not limited to]:

1) understanding biases in reported data & what characteristics are linked to under-reporting

2) providing robustness checks & alternative analyses for IEs

A useful tool in the toolbox.

9/n

But, their design & implementation can be tricky

@EconCath et al's new paper provides a nice summary of technical issues related to "control" item selection, including:

* Avoid high (low) prev control items

* Include neg corr control items

10/n

https://www.sciencedirect.com/science/article/pii/S2352827321000677

This often requires piloting control items to figure out what the prevalence is (likely) to be in the population & how they are correlated with each other to avoid ceiling & floor effects

In addition, to avoid contrast effects - control items should not "stand out"

11/n
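
[A rough sketch of the kind of pilot check this implies, in Python. It assumes you have pilot responses to the control-only list, and it simply reports how many respondents endorse none or all of the control items, since those respondents would reveal their answer to the sensitive item once it is added. The function name, the 4-item list, and the pilot counts are hypothetical.]

```python
def ceiling_floor_shares(pilot_counts, n_control_items):
    """Share of pilot respondents at the floor (0 items endorsed) and at the
    ceiling (all control items endorsed) of a control-only list."""
    n = len(pilot_counts)
    floor_share = sum(c == 0 for c in pilot_counts) / n
    ceiling_share = sum(c == n_control_items for c in pilot_counts) / n
    return floor_share, ceiling_share


# Hypothetical pilot data: items endorsed out of 4 control items
pilot = [0, 1, 2, 2, 4, 1, 3, 2, 4, 2]
floor_share, ceiling_share = ceiling_floor_shares(pilot, n_control_items=4)
print(f"floor: {floor_share:.0%}, ceiling: {ceiling_share:.0%}")
```

[Large shares at either end suggest swapping in control items with more moderate prevalence, or pairing items that are negatively correlated.]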

This is no easy task when the sensitive item is violence-related

Another issue relates to the efficiency of list estimates [SEs can be large!]

@Aure_Lepine et al.'s paper shows using 'double lists' [vs single] ⬆️ precision of estimates by 40%

https://www.sciencedirect.com/science/article/pii/S0277953620305451

12/n
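
[For intuition, a sketch of one common way a double list is set up and combined. This is my assumption about the general design, not necessarily the exact estimator in the paper. Everyone answers two lists, A and B, with different control items; group 1 gets the sensitive item added to list A, group 2 gets it added to list B. Each list gives its own difference-in-means estimate and the two are averaged. Function name and counts are hypothetical.]

```python
from statistics import mean


def double_list_estimate(g1_list_a, g1_list_b, g2_list_a, g2_list_b):
    """Average of the two single-list estimates in a double-list design.

    Group 1 saw list A with the sensitive item and list B without it;
    group 2 saw list A without it and list B with it.
    """
    est_a = mean(g1_list_a) - mean(g2_list_a)  # list A: group 1 is "treated"
    est_b = mean(g2_list_b) - mean(g1_list_b)  # list B: group 2 is "treated"
    return (est_a + est_b) / 2, est_a, est_b


# Hypothetical counts, for illustration only
combined, est_a, est_b = double_list_estimate(
    g1_list_a=[2, 3, 2, 3],
    g1_list_b=[1, 2, 1, 2],
    g2_list_a=[2, 2, 2, 2],
    g2_list_b=[2, 2, 1, 2],
)
print(f"list A: {est_a:.3f}, list B: {est_b:.3f}, combined: {combined:.3f}")
```

[Because every respondent contributes a sensitive-item list, the averaged estimate generally uses the sample more efficiently than a single list of the same size.]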

This is particularly an issue when using lists in an IE

Another drawback is the logistical limitation in terms of indicator choice --> A list can only contain 1 violence question

Yet, the typical IPV gold standard combines many different behavioral measures

13/n

This makes comparability hard, as clearly a few list indicators do not equate to gold standard aggregates (even if they are under-reported!)

Also, some ethical questions left "hanging" --> do methods like lists [alone] mean no recommended violence protocols are needed?

14/n

Obviously lots to learn for violence community on lists [& other methods to encourage safe disclosure] - which I find super exciting!

Please add any of your fav list studies for violence that I've missed. . .

end/
