What happens when we take a transformer pretrained on language data and fine-tune it on image data? And what happens if those weights are randomly initialized instead? This paper addresses both cases. A summary thread 👇


paper: https://arxiv.org/abs/2103.05247 #MachineLearning #TransferLearning #GPT2


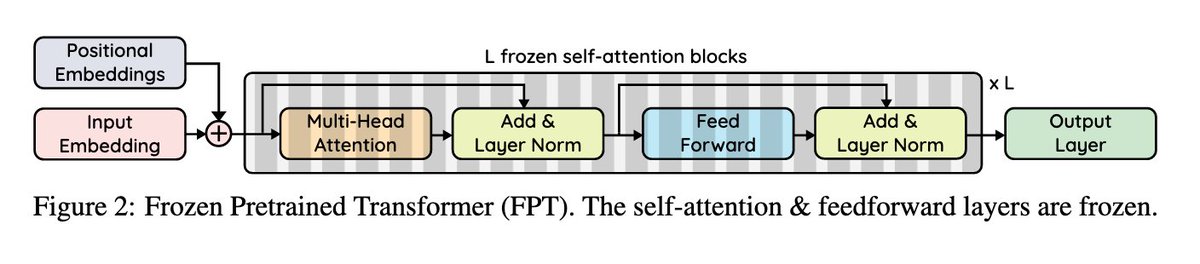

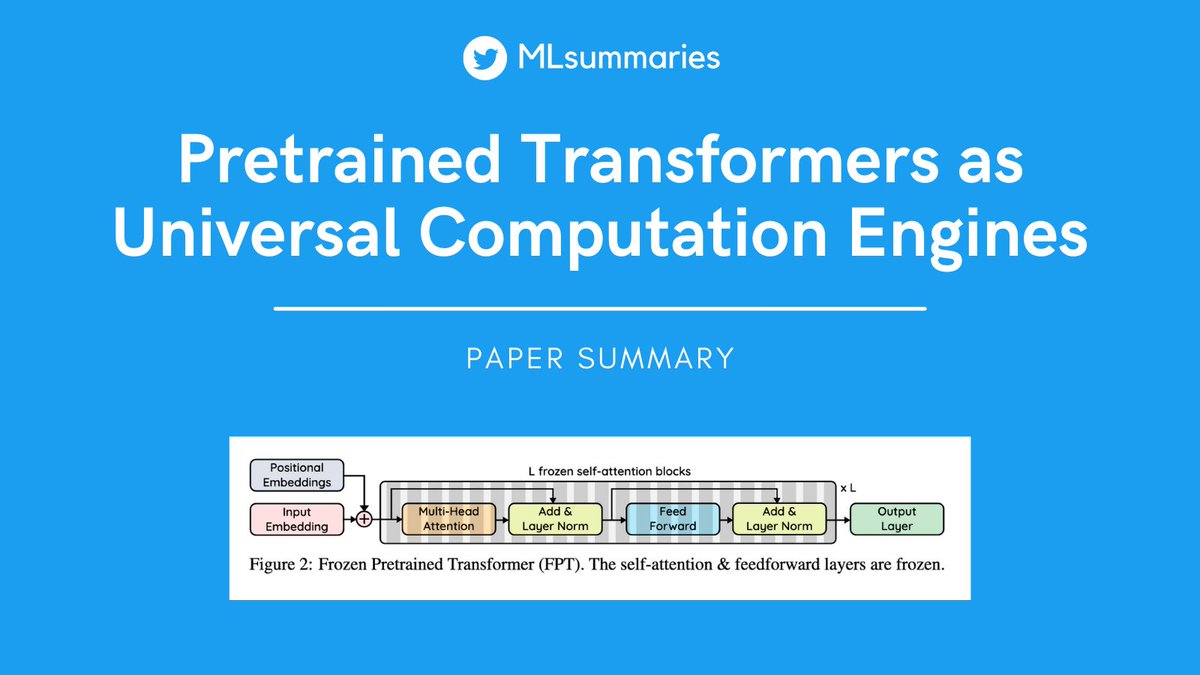

The authors take a pretrained GPT-2 and freeze the transformer layers. Only the input layer, the positional encodings, the output layer, and the layer-norm parameters are fine-tuned (the layer-norm part is not properly shown in the figure!). The model is called Frozen Pretrained Transformer (FPT).
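To make the freezing scheme concrete, here is a minimal sketch of how one might pick the fine-tuned parameters by name. It assumes Hugging Face style GPT-2 parameter names (`wpe` for positional embeddings, `ln_1`, `ln_2`, `ln_f` for layer norms); the helpers `is_finetuned` and `freeze_for_fpt` are hypothetical names for illustration and are not taken from the authors' code. The new input and output layers are assumed to be separate modules outside the transformer, which simply stay trainable.

```python
from typing import Any, Iterable, List, Tuple


def is_finetuned(param_name: str) -> bool:
    """Return True if a GPT-2 parameter should stay trainable in an FPT-style setup.

    Assumption: names follow the Hugging Face GPT-2 layout, e.g.
    "h.0.ln_1.weight", "wpe.weight", "h.0.attn.c_attn.weight".
    """
    parts = param_name.lower().split(".")
    is_layer_norm = any(part.startswith("ln_") for part in parts)
    is_positional = "wpe" in parts
    return is_layer_norm or is_positional


def freeze_for_fpt(named_params: Iterable[Tuple[str, Any]]) -> List[str]:
    """Set requires_grad on each parameter and return the names left trainable.

    Works with any object exposing a `requires_grad` attribute, such as the
    pairs yielded by a PyTorch module's named_parameters().
    """
    trainable = []
    for name, param in named_params:
        param.requires_grad = is_finetuned(name)
        if param.requires_grad:
            trainable.append(name)
    return trainable
```

With PyTorch, this would be called as `freeze_for_fpt(gpt2.named_parameters())`, where `gpt2` is a loaded GPT-2 model. Self-attention and feedforward weights end up frozen, and only the layer norms and positional embeddings inside the transformer receive gradients.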

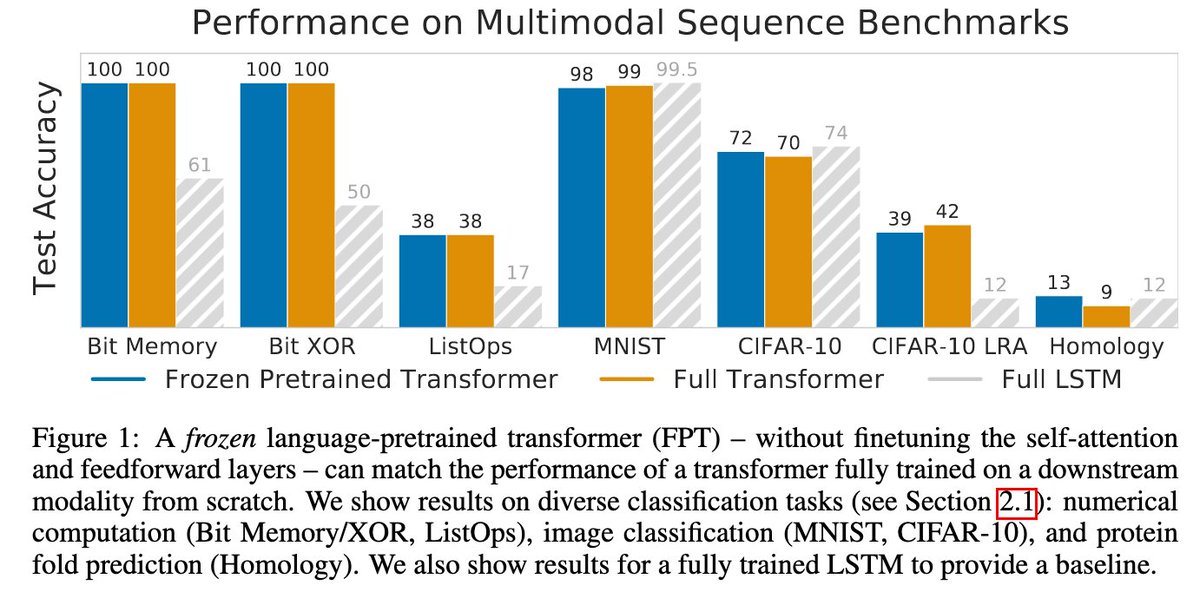

The authors show that FPT performs as well as a Full transformer (fully trained on the dataset) on many tasks. But it is not really an apples-to-apples comparison, because FPT has 12 transformer layers while the Full transformer has only 3 such layers in the CIFAR-10 case.

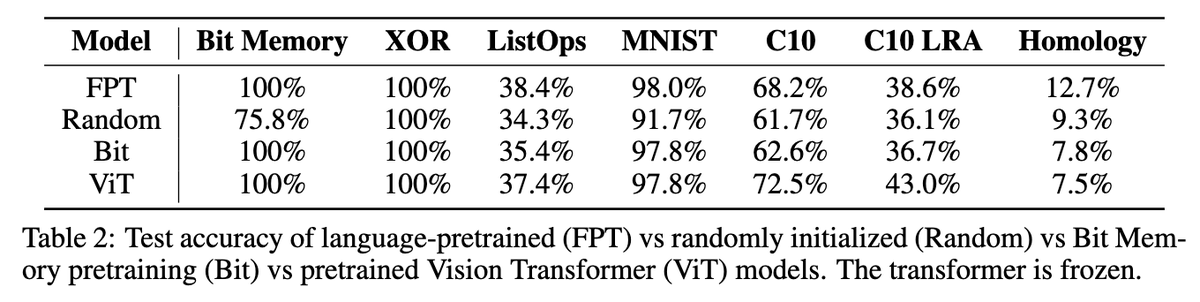

As we can see, the model with randomly initialized weights is also doing well. This table honestly sets back the authors' claim a bit, since the difference in accuracy is small. And, as one would intuitively expect, the pretrained vision transformer does better on image data (except MNIST).

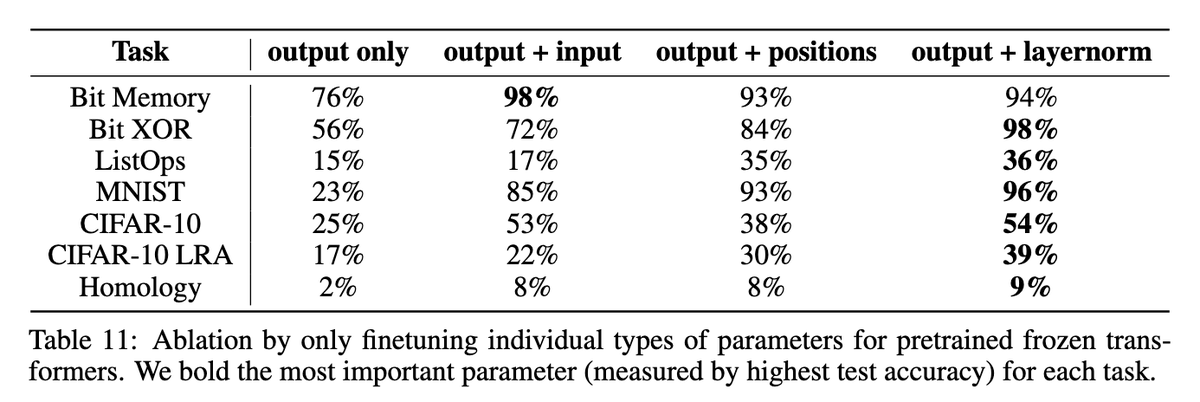

These results show ablations of the FPT and the Random Frozen transformer, respectively. In both cases, the impact of layer norm is significant.

Find the code implementations and the official blog of the paper here.

code: https://github.com/kzl/universal-computation

official blog: https://bair.berkeley.edu/blog/2021/03/23/universal-computation/


This paper summary is contributed by @gowthami_s. If you liked this thread, don't forget to retweet! 😇


Read the full summary of the paper here - https://medium.com/ml-summaries/pretrained-transformers-as-universal-computation-engines-paper-summary-4079b2ae45b0

