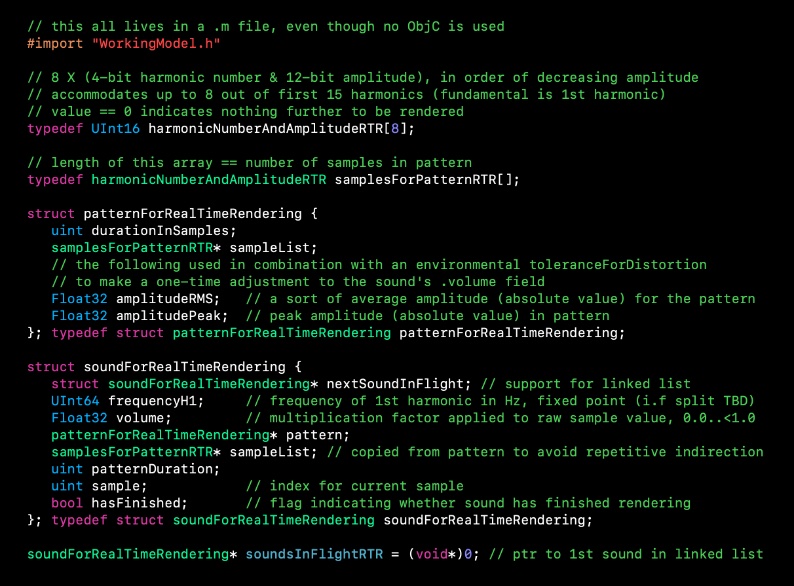

After removing inessentials and considerable refactoring

switching to fixed-point for pitch and phase

but not for volume (not yet anyway)
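
A minimal sketch of what fixed-point phase can look like, assuming an unsigned 32-bit phase where the full integer range spans one cycle. The thread does not state the actual format, so the Q0.32 layout and the names `phase_t`, `phase_increment`, `phase_advance` and `phase_table_index` are all hypothetical:

```c
#include <assert.h>
#include <stdint.h>

/* Assumed format: Q0.32, i.e. the whole uint32_t range is one cycle,
   so integer wrap-around at 2^32 doubles as the phase wrap. */
typedef uint32_t phase_t;

/* Per-sample phase increment for a pitch given in Hz (hypothetical helper). */
phase_t phase_increment(double freq_hz, double sample_rate_hz) {
    assert(freq_hz >= 0.0 && freq_hz < sample_rate_hz / 2.0);
    return (phase_t)(freq_hz / sample_rate_hz * 4294967296.0);
}

/* Advance by one sample; unsigned overflow wraps modulo 2^32 by definition. */
phase_t phase_advance(phase_t phase, phase_t increment) {
    return phase + increment;
}

/* Top `bits` bits of the phase, usable as an index into a 2^bits-entry table. */
uint32_t phase_table_index(phase_t phase, unsigned bits) {
    assert(bits >= 1 && bits <= 31);
    return phase >> (32u - bits);
}
```

In this layout the pitch is carried entirely by the increment, and no explicit range check is needed when the phase wraps.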

As this stands,

those 12-bit amplitudes,

will need to be converted to floats

in order to be useful.
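
The conversion could be as small as the sketch below. It assumes the 12-bit amplitudes are unsigned values in 0..4095 stored in `uint16_t`, which the thread does not confirm; `amp12_to_float` and `amp12_row_to_float` are made-up names:

```c
#include <assert.h>
#include <stdint.h>

/* Assumption: unsigned 12-bit amplitudes, 0..4095, held in uint16_t. */
#define AMP12_MAX 4095u

/* Map one 12-bit amplitude onto the float range 0.0..1.0. */
float amp12_to_float(uint16_t amp12) {
    assert(amp12 <= AMP12_MAX);
    return (float)amp12 / (float)AMP12_MAX;
}

/* Convert a whole row of harmonic amplitudes in one pass. */
void amp12_row_to_float(const uint16_t *src, float *dst, int count) {
    for (int i = 0; i < count; i++) {
        dst[i] = amp12_to_float(src[i]);
    }
}
```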

Also, as it stands, I've left out pitch-bending.

Admittedly this was inadvertent,

but I think I'll leave it out of the pattern

for the time being.

I don't want to complicate

samplesForPatternRTR[]

or the code that makes use of it,

and I have a different idea...

As previously mentioned,

one way of handling pitch-bending

is to add a second array pointer

to the pattern struct,

also copying that to the sound struct,

and use the same index for both.
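
As a rough illustration of that two-pointer layout: the thread does not show its structs, so `Pattern`, `Sound`, their fields, and the helpers below are hypothetical, and the amplitude array is simplified to one value per step.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical pattern: two parallel arrays of the same length. */
typedef struct {
    const uint16_t *harmonicAmps; /* 12-bit amplitudes, one per step here */
    const int32_t  *pitchBend;    /* fixed-point pitch offsets; may be NULL */
    size_t          length;       /* number of steps in both arrays */
} Pattern;

/* Hypothetical sound: copies both pointers and walks them with one index. */
typedef struct {
    const uint16_t *harmonicAmps;
    const int32_t  *pitchBend;
    size_t          length;
    size_t          index;
} Sound;

Sound sound_start(const Pattern *p) {
    assert(p != NULL && p->harmonicAmps != NULL);
    Sound s = { p->harmonicAmps, p->pitchBend, p->length, 0 };
    return s;
}

/* Fetch the current amplitude and bend, then advance; returns 0 at the end. */
int sound_step(Sound *s, uint16_t *amp, int32_t *bend) {
    if (s->index >= s->length) return 0;
    *amp  = s->harmonicAmps[s->index];
    *bend = s->pitchBend ? s->pitchBend[s->index] : 0;
    s->index++;
    return 1;
}
```

Because both arrays share one index, any amplitude pattern can be paired with any bend pattern of equal length.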

In principle, this would allow

mix/matching harmonic amplitude patterns

and pitch-bending patterns of the same length,

but it still doesn't make provision for

the interactivity I'm after.

Also, that interactivity really should extend to

the net amplitude of the sound as a whole,

not just the pitch.

A different approach is called for...

Pitch and amplitude variation

not resulting from harmonic interactions

constitute a distinct pattern,

brought to the instrument by the performer.

They need to be incorporated into the sound

in real time,

and also recorded for later reproduction.
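
One way to picture such a performer pattern is a stream of control frames that is applied as it arrives and also appended to a take for later playback. Everything in this sketch is an assumption (the `ControlFrame` fields, the fixed `TAKE_CAPACITY`, the hold-last-frame behaviour); the thread does not describe its actual design:

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical performer input for one control step. */
typedef struct {
    int32_t pitchOffset; /* fixed-point pitch offset, left to the render code */
    float   gain;        /* net amplitude of the sound as a whole */
} ControlFrame;

#define TAKE_CAPACITY 1024

/* A recorded performance: frames in the order they arrived. */
typedef struct {
    ControlFrame frames[TAKE_CAPACITY];
    size_t       count;
} ControlTake;

/* Record one live frame; returns 0 once the take is full. */
int take_record(ControlTake *take, ControlFrame frame) {
    if (take->count >= TAKE_CAPACITY) return 0;
    take->frames[take->count++] = frame;
    return 1;
}

/* Play back frame i; past the end, hold the last frame (neutral if empty). */
ControlFrame take_frame_at(const ControlTake *take, size_t i) {
    ControlFrame neutral = { 0, 1.0f };
    if (take->count == 0) return neutral;
    if (i >= take->count) i = take->count - 1;
    return take->frames[i];
}

/* Apply the performer's net amplitude to one rendered sample. */
float control_apply_gain(float sample, ControlFrame frame) {
    return sample * frame.gain;
}
```

The same `ControlFrame` can feed the renderer whether it comes from live input or from `take_frame_at`, which is what makes the performance reproducible.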

And my earlier concern about

(my default approach to)

pitch-bending resulting in artifacts

is not at issue here.

That's an implementation detail,

or, perhaps, more than a detail,

but it can only be addressed

in the render algorithm.

Something else for the render algorithm...

Equalization...

Sure, you can pipe the output of a source node

through an equalizer node,

but this being generative sound,

using explicit frequencies,

you can also apply

frequency-dependent amplitude modification

in the source node.
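
Since every harmonic's frequency is known up front, equalization in the source can reduce to one extra multiply per harmonic. A sketch under assumed names: the `EqBand` table, its band edges, and both functions are illustrative only and not taken from the thread.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical EQ curve: bands sorted by ascending upper edge. */
typedef struct {
    float upperHz; /* band covers frequencies below this edge */
    float gain;    /* linear gain applied within the band */
} EqBand;

/* Gain for one frequency; anything above the last edge uses the last band. */
float eq_gain_for(const EqBand *bands, size_t bandCount, float freqHz) {
    assert(bandCount > 0);
    for (size_t i = 0; i < bandCount; i++) {
        if (freqHz < bands[i].upperHz) return bands[i].gain;
    }
    return bands[bandCount - 1].gain;
}

/* Scale each harmonic's amplitude by the EQ gain at that harmonic's frequency. */
void eq_apply_to_harmonics(const EqBand *bands, size_t bandCount,
                           float fundamentalHz,
                           const float *amps, float *out, size_t harmonicCount) {
    for (size_t h = 0; h < harmonicCount; h++) {
        float freqHz = fundamentalHz * (float)(h + 1);
        out[h] = amps[h] * eq_gain_for(bands, bandCount, freqHz);
    }
}
```

Kept as a separate table, the EQ curve stays independent of the harmonic amplitudes and can be swapped without touching them.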

...I can practically feel

the synaptic connections

being formed... ;-)

Moreover, that

"frequency-dependent amplitude modification"

constitutes yet another distinct pattern,

to be mix/matched with

the pattern of harmonic amplitudes.

All these patterns

mean even more pressure on memory access,

necessitating even more emphasis

on efficiency.

I'm in my element! ;-)

Recapping...

The voice of the instrument:

• distribution of energy (amplitude) through harmonics

• frequency-dependent amplitude modification

What the performer contributes (recordable):

• sound events

• real-time variation in pitch and amplitude

Strictly speaking, the performer also contributes real-time variation in the duration of sound events, and potentially also in the distribution of energy through harmonics, but incorporating these is more complication than I'm willing to undertake for the time being.

That said, having recognized this,

these factors will continue to gnaw at me

until I figure out

some way of making provision

for their future incorporation,

but that's just me...
