There’s less than a week until Europe closes submissions on a consultation that could see Internet platforms being required to monitor ALL your emails, chats, and photos (even if they’re encrypted) using biased and unreliable AI algorithms. Here’s how to stop it! 🧵 RT #chatcontrol

First, share this link with everyone you know from Europe and tell them that it is important for them to respond, and that they must do so before Thursday. You don’t have to answer all the questions, and this thread will give you tips that make it easy. https://ec.europa.eu/info/law/better-regulation/have-your-say/initiatives/12726-Child-sexual-abuse-online-detection-removal-and-reporting-/public-consultation

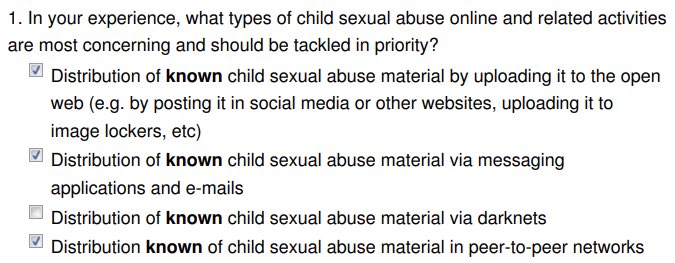

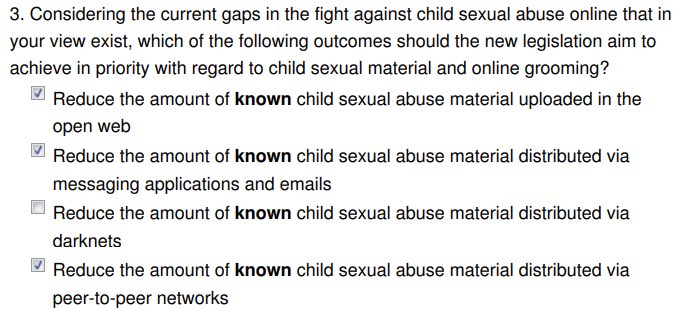

Q1: Check to say that tackling KNOWN child abuse images should be prioritized. The technical solutions that exist to eliminate child abuse images at scale only work on those that are already known. AI algorithms that claim to work on new images or grooming are flawed and biased.

Q3: Answer similarly. Also check “Enable a swift takedown of CSAM after reporting” (not shown in the screenshot). Why aren’t we including distribution of CSAM via darknets as a priority? Because the tools to tackle it don’t exist yet, so it makes no sense to require this in the law.

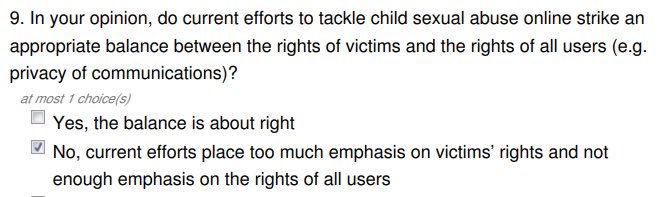

Skipping ahead to Q9: this is a leading question, with no good answer options. Just check one of the first two boxes or “No opinion.” If you leave a comment, say that you don’t agree that there is a conflict between victims’ rights and the rights of all users—because there isn’t.

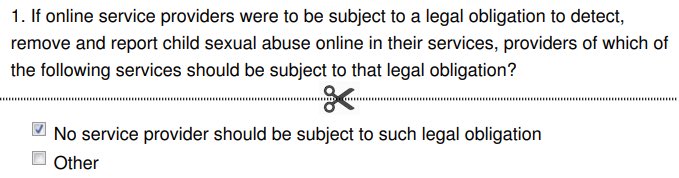

Numbering now restarts (confusing!). The next Q1 asks if platforms should be required by law to scan for child sexual abuse online (potentially using AI and circumventing encryption). We do want voluntary scanning to continue, but not like that—so we have to answer no here.

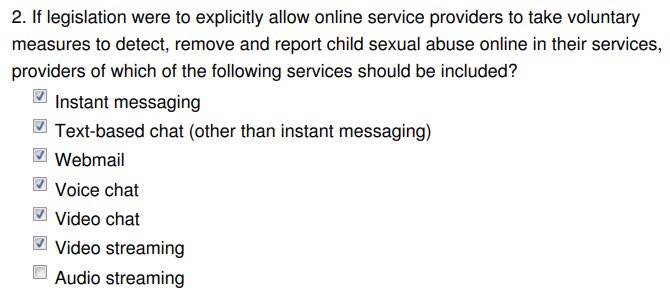

The next Q2 asks if they should be allowed to voluntarily scan for child sexual abuse. This seems like a no-brainer, but it does require a derogation from privacy law. You can check yes to all, or omit audio streaming and online gaming (to avoid fictional content being included).

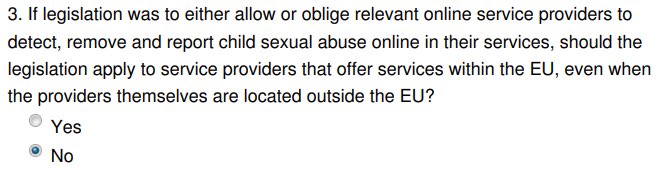

The following Q3 asks if Europe’s laws should apply to service providers from outside Europe. The problem with this is that other countries have their own CSAM reporting regimes, such as the NCMEC reporting regime in the USA. So until we know which regime is better, answer no.

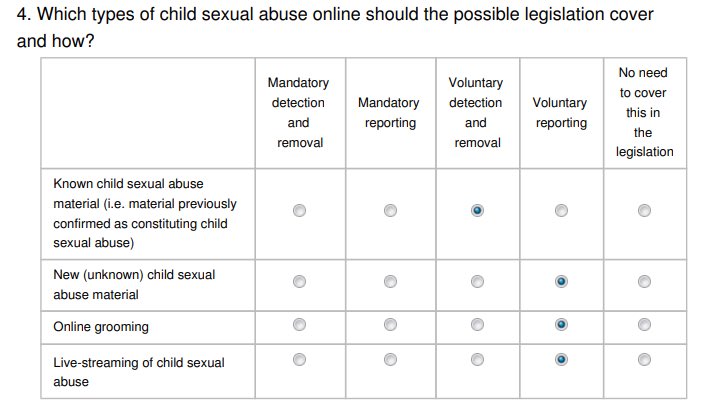

The next Q4 is important but confusing. Platforms can already remove whatever content they like, and the law wouldn’t change that. But we don’t want to over-incentivize removal of content that hasn’t already been identified as CSAM, so the screenshot shows the best way to answer.

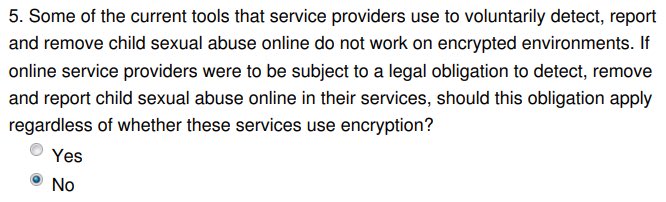

If you only answer one question, answer this Q5. Should platforms be required to bypass or weaken end-to-end encryption, so that they can scan all your private communications? Absolutely NOT. Doing this would harm children and adults alike. There are better ways to tackle abuse.

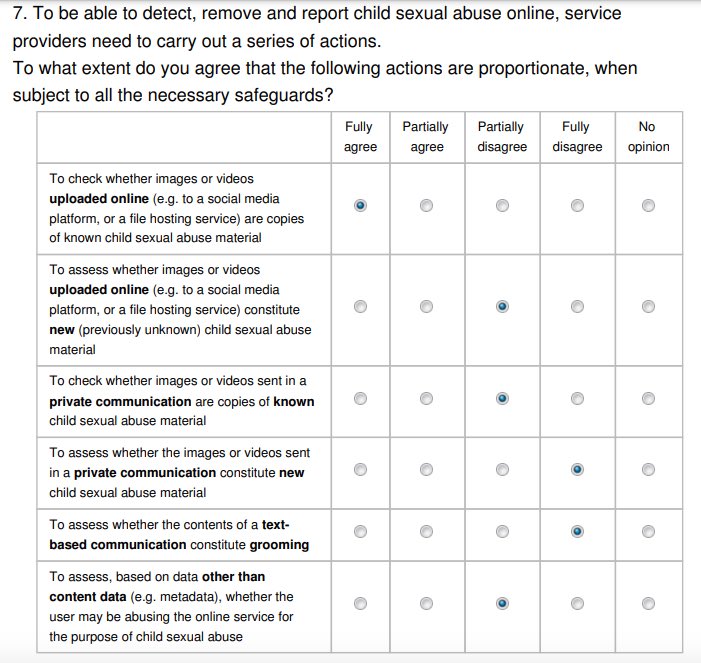

Q7: Remember that automatic CSAM detection tools only work reliably on known images. So mark the first checkbox “Fully agree” to agree that the use of these tools, with safeguards, is proportionate. Answer “Fully disagree” to scanning private communications and text messages.

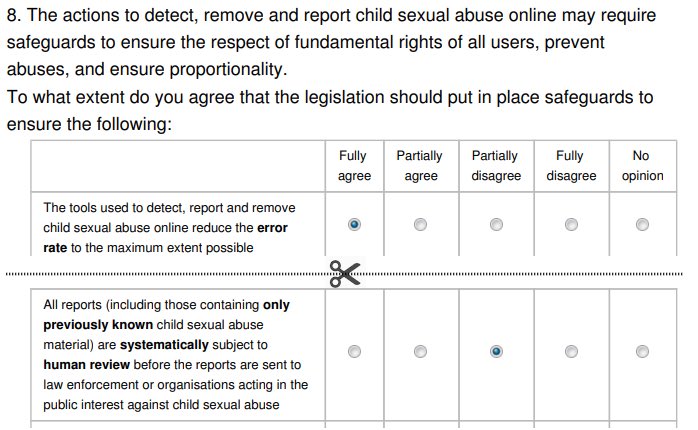

Q8 on safeguards: the more, the better, right? Generally yes, though we have answered that there probably doesn’t need to be further human review of images previously identified as CSAM. This can be traumatizing for the reviewers. Otherwise, you can agree to all of these.

There’s lots more, but this thread is already long and those are the most crucial questions to answer. Check out how @ProstasiaInc answered the remaining questions on transparency, reporting, law enforcement cooperation, survivor services, and prevention: https://prostasia.org/wp-content/uploads/2021/03/Contributionbd4f6eae-9e43-44e3-ae11-839c9ac7699d.pdf

Also read more about how representatives from government, industry, academia, and civil society are approaching the consultation in the summary of @ProstasiaInc’s recent webinar on the topic, published in our latest newsletter: https://prostasia.org/newsletter/?email_id=88