(1/n) Social science research often relies on scans of documents such as statistical tables, newspapers, firm-level reports, etc. #EconTwitter

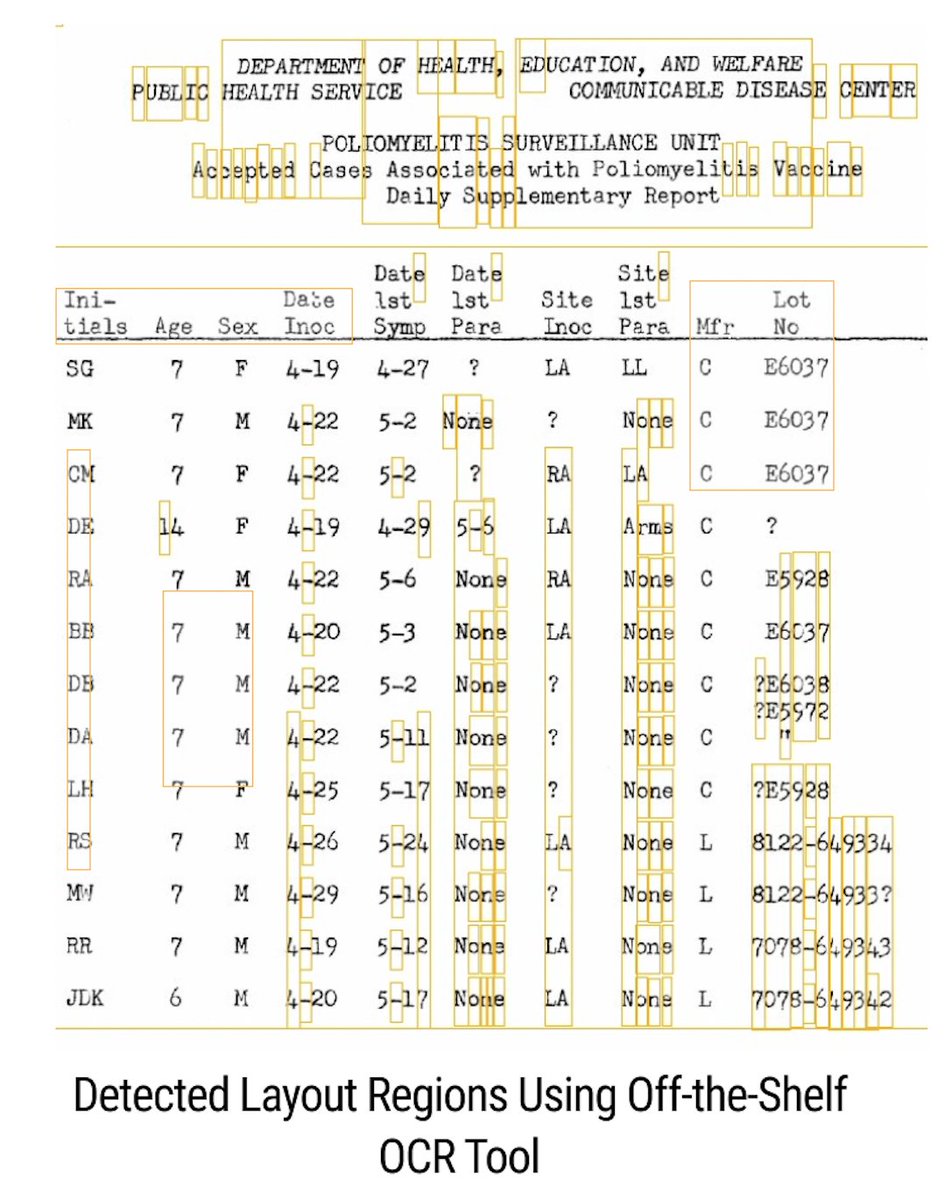

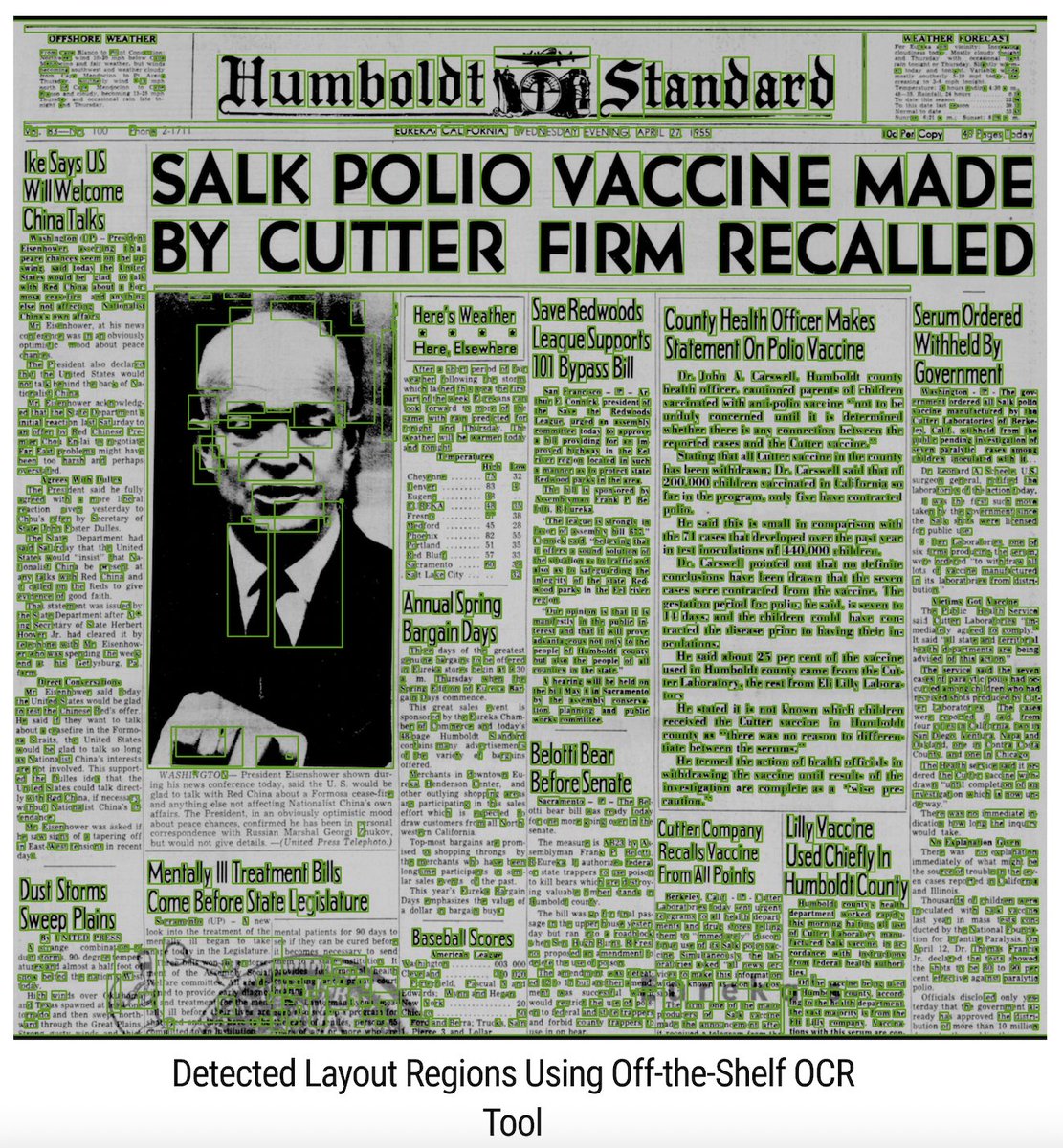

(2/n) Unfortunately, OCR often fails to detect layouts in such documents. These figures show off-the-shelf OCRed bounding boxes. Much of the text is not detected, and some is detected twice or scrambled. The OCR cannot distinguish different text types, i.e. headlines v. captions v. articles.

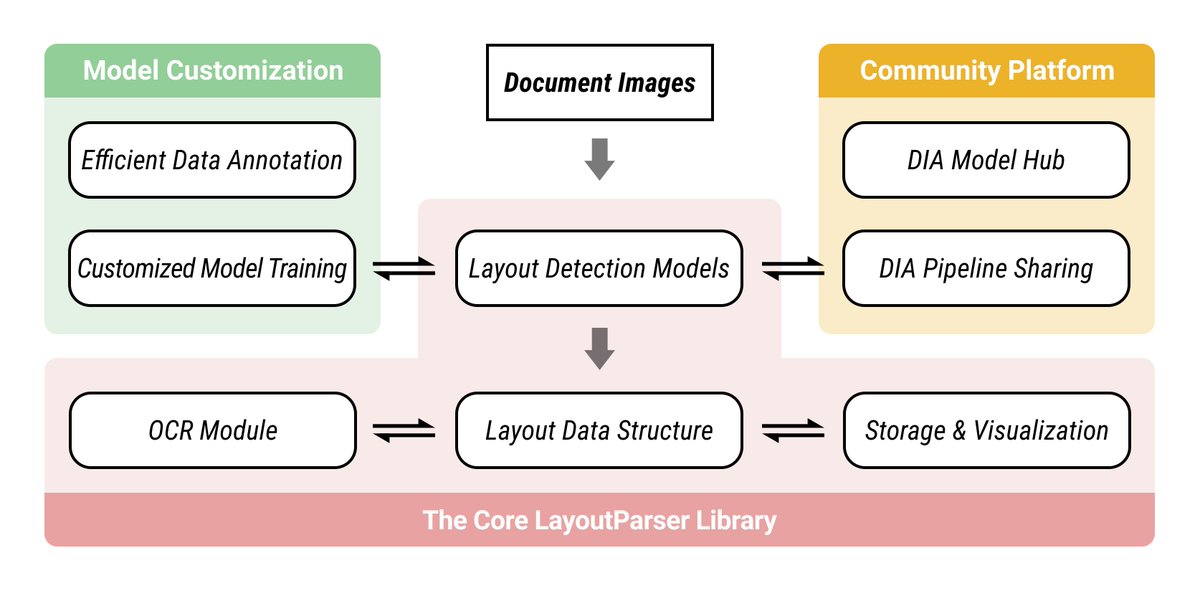

(3/n) We are releasing an open-source deep-learning powered library, Layout Parser, that provides a variety of tools for automatically processing document image data at scale.

Webpage: https://layout-parser.github.io/

Arxiv: https://arxiv.org/abs/2103.15348

Github: https://github.com/Layout-Parser/layout-parser

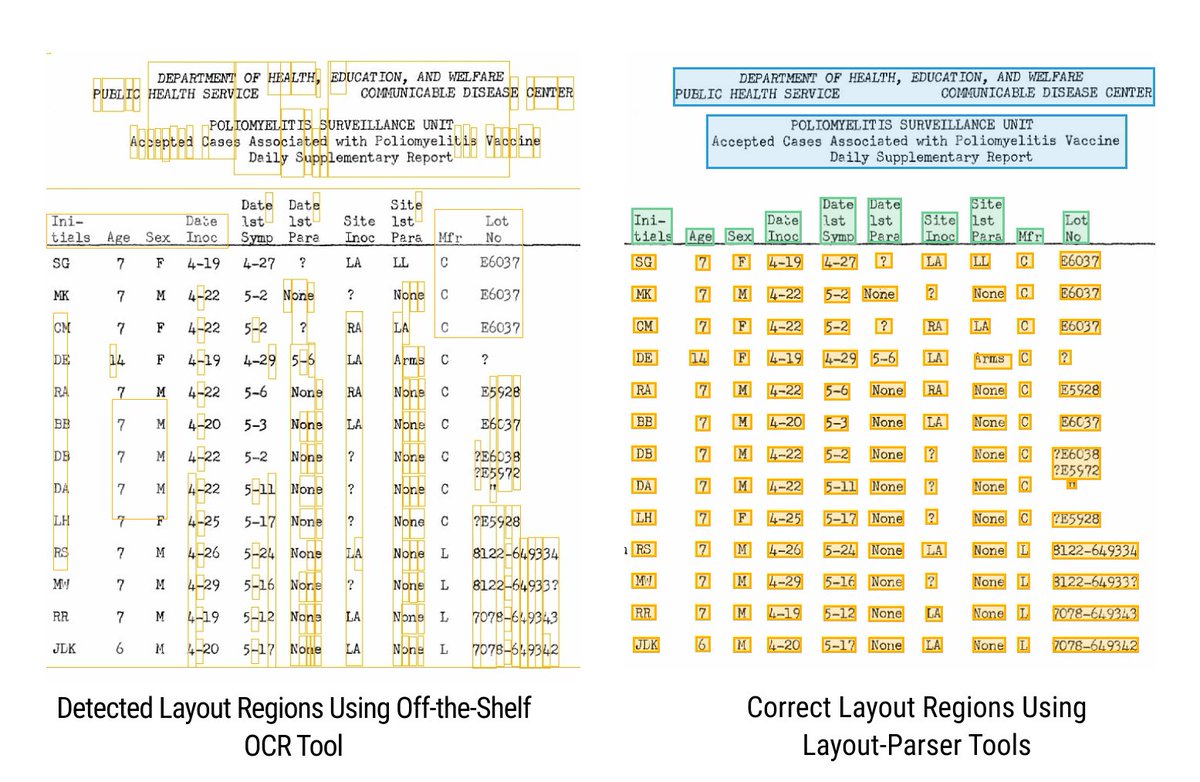

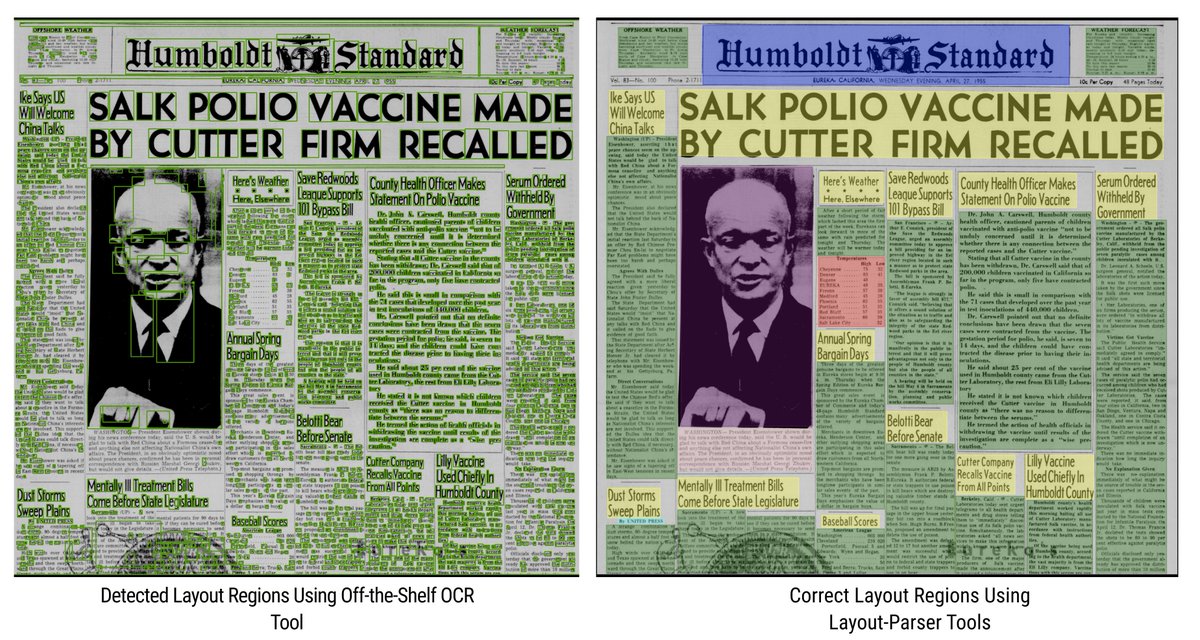

(4/n) Contrast the off-the-shelf OCR with the layout detection results we achieve through Layout Parser’s deep learning powered pipelines.

(6/n) Layout Parser is not just for English. Here’s another example: a complex historical table from Japan.

(8/n) Layout Parser currently has some pre-trained models, and the pipelines for the above examples will be integrated when finalized. We are working to expand the types of documents it can process off-the-shelf.

(9/n) With Layout Parser, you can train your own customized DL-based layout models. Because our pre-trained model zoo is currently small, right now Layout Parser is mostly useful for designing your own customized models

(10/n) Don’t have labeled data? Layout Parser incorporates a data annotation toolkit that makes it more efficient to create labeled data.

(11/n) Amongst its varied functionalities is a perturbation-based scoring method to select the most informative samples to label: https://arxiv.org/abs/2010.01762
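To give a feel for what perturbation-based scoring means, here is a toy sketch of the general idea, not the method from the linked paper and not Layout Parser's code. It assumes a hypothetical `predict` function that returns one box per sample and a hypothetical `perturb` function that jitters the input; samples whose predictions move the most under small perturbations are treated as the most informative to label.

```python
import random
from typing import Callable, List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def perturbation_score(sample, predict: Callable, perturb: Callable,
                       rng: random.Random, n_trials: int = 5) -> float:
    """Mean (1 - IoU) between the original prediction and perturbed ones."""
    base = predict(sample)
    drops = [1.0 - iou(base, predict(perturb(sample, rng))) for _ in range(n_trials)]
    return sum(drops) / len(drops)


def select_most_informative(samples: List, predict: Callable, perturb: Callable,
                            k: int, seed: int = 0) -> List[int]:
    """Return indices of the k samples with the least stable predictions."""
    rng = random.Random(seed)
    scored = [(perturbation_score(s, predict, perturb, rng), i)
              for i, s in enumerate(samples)]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [i for _, i in scored[:k]]


# Toy stand-ins (assumptions for illustration only): a sample is
# (position, sensitivity), and the "model" shifts its box by position * sensitivity.
def toy_predict(sample) -> Box:
    x, sensitivity = sample
    return (x * sensitivity, 0.0, x * sensitivity + 10.0, 10.0)


def toy_perturb(sample, rng: random.Random):
    x, sensitivity = sample
    return (x + rng.uniform(-1.0, 1.0), sensitivity)


samples = [(3.0, 0.0), (3.0, 5.0)]  # the second sample is unstable under jitter
picked = select_most_informative(samples, toy_predict, toy_perturb, k=1)
```

In a real annotation loop the selected samples would go to a human labeler first, since a stable prediction is less likely to need correction.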

(12/n) Layout Parser builds wrappers to call OCR engines and comes with a DL-based CNN-RNN

(13/n) Layout Parser provides a flexible output structure to facilitate diverse downstream analyses.
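As a rough picture of why a structured output helps downstream work, here is a minimal standard-library sketch. The `Block` class and helper names are hypothetical and are not Layout Parser's own data structures; the point is only that once each detected region carries coordinates, a type, and text, filtering, ordering, and exporting become one-liners.

```python
from dataclasses import dataclass, asdict
from typing import Dict, List


@dataclass
class Block:
    """One detected region: pixel coordinates, a layout type, and OCRed text."""
    x1: float
    y1: float
    x2: float
    y2: float
    kind: str        # e.g. "headline", "caption", "article"
    text: str = ""


def filter_by_kind(blocks: List[Block], kind: str) -> List[Block]:
    """Keep only blocks of one layout type."""
    return [b for b in blocks if b.kind == kind]


def reading_order(blocks: List[Block]) -> List[Block]:
    """Naive ordering: top to bottom, then left to right."""
    return sorted(blocks, key=lambda b: (b.y1, b.x1))


def to_rows(blocks: List[Block]) -> List[Dict]:
    """Flatten blocks into dicts, ready for a CSV writer or a data frame."""
    return [asdict(b) for b in blocks]


blocks = [
    Block(50, 300, 400, 600, "article", "Body text"),
    Block(50, 100, 400, 150, "headline", "Big news"),
    Block(420, 300, 700, 340, "caption", "A photo"),
]

ordered = reading_order(blocks)
headlines = filter_by_kind(blocks, "headline")
rows = to_rows(ordered)
```

The naive ordering here would not handle multi-column pages; it is only meant to show the shape of the data.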

(14/n) Layout Parser is implemented with simple APIs and can perform off-the-shelf layout analysis with four lines of Python code
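For reference, a sketch of what those few lines can look like, wrapped in a function so nothing is downloaded on import. It assumes `layoutparser`, Detectron2, and OpenCV are installed, and it uses a PubLayNet model config from the public model zoo; the image path is a placeholder, and exact names may differ across library versions.

```python
# Model zoo identifier and label map for a PubLayNet-trained detector
# (assumed to be available in the installed layoutparser version).
MODEL_CONFIG = "lp://PubLayNet/faster_rcnn_R_50_FPN_3x/config"
LABEL_MAP = {0: "Text", 1: "Title", 2: "List", 3: "Table", 4: "Figure"}


def detect_layout(image_path: str):
    """Load an image, run a pre-trained layout model, return the detected layout."""
    # Imported lazily so this file can be loaded without the heavy dependencies.
    import cv2
    import layoutparser as lp

    image = cv2.imread(image_path)      # OpenCV loads images as BGR
    image = image[..., ::-1]            # convert to RGB for the model
    model = lp.Detectron2LayoutModel(MODEL_CONFIG, label_map=LABEL_MAP)
    return model.detect(image)          # a collection of typed layout blocks


if __name__ == "__main__":
    # "page.png" is a placeholder path; replace it with a real scan.
    layout = detect_layout("page.png")
    for block in layout:
        print(block.type, block.coordinates)
```

The first call downloads the model weights, so it needs a network connection and can take a while.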

(15/n) No background in deep learning? I’m teaching a new course this semester on deep learning for data curation at scale. I’ll be putting the course material into a public knowledgebase. I’ll post here when this is released (sometime in the next 1-2 months).

(16/n) We hope to make substantial innovations. With more resources we can expand the pre-trained model zoo significantly. Ultimately, we hope to convert the library into a user-friendly online platform that can be used by anyone, regardless of Python literacy or hardware.

(17/n) Building this takes a ton of work and financial resources. We’ve been invited to the final round of a large grant competition that would significantly expand Layout Parser, but we need to show there is demand for this from the social science community.

(18/n) If Layout-Parser seems relevant to your work, please consider taking less than a minute to visit our website: https://layout-parser.github.io/ . If you are on Github, take two seconds to star our repo: https://github.com/Layout-Parser/layout-parser . This will help us demonstrate crucial community support.

(19/n) Layout Parser contributors: @_shannon_shen, @ruochenxD, @MelissaLDell, @lee_bcg, @J_S_Carlson, Weining Li. Currently working with @qlquanle, @pquerubo, @LeanderHeldring, @krishna_econ, Sahar Parsa, and awesome RAs on additional models that will be added when complete.
