Thread on the challenges posed by the lack of large, good-quality multi-site datasets, and the promise of transfer learning and ensemble models, spurred by our new paper: "A Machine Learning perspective on the emotional content of Parkinsonian speech" (https://doi.org/10.1016/j.artmed.2021.102061) 1/n

led by the valiant K Sechidis in partnership with Roche. Finding generalizable markers of neuropsychiatric conditions is difficult. The conditions are highly heterogeneous, and large good-quality datasets are scarce, 2/n

due to costs/complexity in building them and necessary concerns in sharing them. This makes it difficult to build better theories of the mechanisms underlying the markers and to rely on the more promising and increasingly complex machine learning techniques. 3/n

This is true at least in the field I know best, acoustic markers of neuropsychiatric conditions: published work struggles with limited sample sizes and heterogeneity (reviews: https://doi.org/10.1002/aur.1678 & https://doi.org/10.1101/583815 & https://doi.org/10.1044/2020_jslhr-19-00241). 4/n

With these samples, advanced models (e.g. deep learning models independently inferring the most relevant features in the data) cannot be properly applied (overfitting goes brrrr). 5/n

More crucially, the generalisability of any inference to the larger clinical population is dubious and never properly tested. Mind you, most papers do use cross-validation, but that puts the representativeness of your limited sample on a pedestal. And it simply doesn't hold 6/n

(much much frustrating work in the pipeline on this). In this project we took a step back, re-read the clinical descriptions of patients with Parkinson's, talked to clinicians (tho' not enough to patients, no excuses here), and focused on the perceived sadness of the patients. 7/n

If clinicians perceive sadness, and do so in a way that is related to specific maybe we could train models on the more easily accessible emotional speech datasets, before transferring them to Parkinsonian speech. 8/n

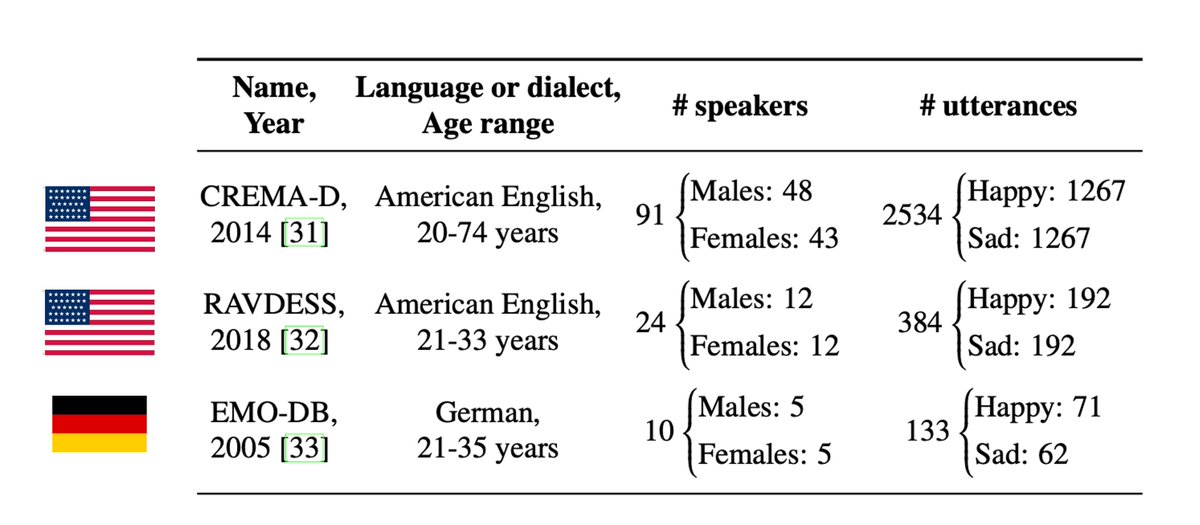

Further, emotional speech datasets of varying quality are available in multiple languages (see 3 large ones in the pic, but more are available), allowing us to assess the generalisability of the training and start building potentially multilingual algorithms. 9/n

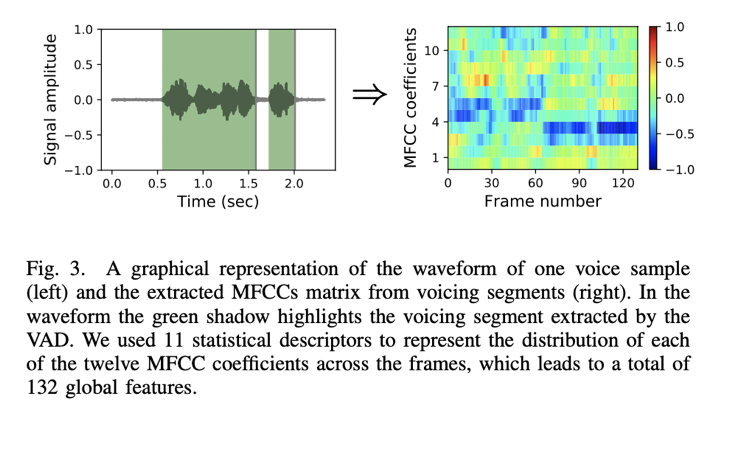

For each emotional dataset we extracted MFCCs and trained gradient boosted trees, with 10-fold cross-validation, to predict valence (sad or happy?). 10/n

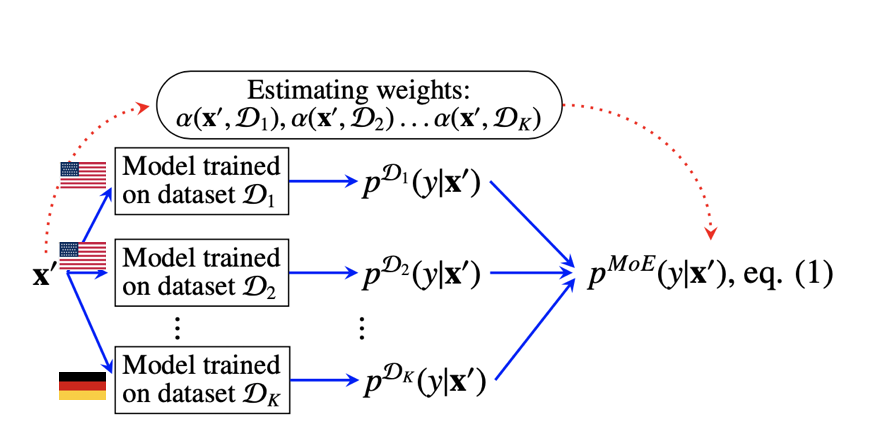
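A rough sketch of what that training step could look like, not the paper's actual pipeline. The assumptions here are mine: scikit-learn's `GradientBoostingClassifier` as the boosted-tree implementation, 13 coefficients per utterance, and synthetic numbers standing in for real MFCC summaries (which would come from an audio toolkit).

```python
import numpy as np
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.model_selection import StratifiedKFold, cross_val_score

rng = np.random.default_rng(0)

# Hypothetical stand-in for per-utterance MFCC summaries (13 coefficients).
# These are synthetic draws, NOT features from any real emotional speech dataset.
n_per_class, n_mfcc = 100, 13
sad = rng.normal(loc=0.0, scale=1.0, size=(n_per_class, n_mfcc))
happy = rng.normal(loc=1.0, scale=1.0, size=(n_per_class, n_mfcc))
X = np.vstack([sad, happy])
y = np.array([0] * n_per_class + [1] * n_per_class)  # 0 = sad, 1 = happy

# Gradient boosted trees evaluated with stratified 10-fold cross-validation.
model = GradientBoostingClassifier(n_estimators=50, random_state=0)
cv = StratifiedKFold(n_splits=10, shuffle=True, random_state=0)
scores = cross_val_score(model, X, y, cv=cv, scoring="accuracy")

print(f"mean accuracy over {len(scores)} folds: {scores.mean():.2f}")
```

In this sketch one such model would be fitted per emotional dataset, giving one "expert" per dataset.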

We then built a mixture of experts (MoE) model combining the predictions of the different algorithms: first we evaluated the similarity between the test stimulus and each of the training datasets, and then produced an accordingly weighted average of the predictions. 11/n
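To make the weighting idea concrete, here is a minimal sketch under assumptions of my own: similarity is a softmax over negative Euclidean distance between the test stimulus and each dataset's feature centroid, and each expert returns a probability of "happy". The paper may well use a different similarity measure; `similarity_weights`, `moe_predict` and the toy experts are hypothetical names for illustration only.

```python
import math

def similarity_weights(x, centroids):
    """Softmax over negative Euclidean distances to each dataset centroid.

    Assumption: closeness to a dataset's centroid is used as 'similarity'.
    """
    dists = [math.dist(x, c) for c in centroids]
    closest = min(dists)  # subtract the minimum for numerical stability
    raw = [math.exp(-(d - closest)) for d in dists]
    total = sum(raw)
    return [r / total for r in raw]

def moe_predict(x, experts, centroids):
    """Similarity-weighted average of the experts' predictions for x."""
    weights = similarity_weights(x, centroids)
    preds = [expert(x) for expert in experts]
    return sum(w * p for w, p in zip(weights, preds))

# Toy setup: two training datasets summarised by 2-D centroids, and two
# constant 'experts' standing in for the per-dataset classifiers.
centroids = [(0.0, 0.0), (4.0, 4.0)]
experts = [lambda x: 0.2, lambda x: 0.9]

stimulus = (0.5, 0.5)  # much closer to the first dataset
weights = similarity_weights(stimulus, centroids)
prediction = moe_predict(stimulus, experts, centroids)
print(weights, prediction)
```

A stimulus close to one training dataset gets a prediction dominated by that dataset's expert, while a stimulus equidistant from all datasets gets a plain average.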

We then asked the mixture of experts to evaluate the valence of speech produced by 50 Spanish Parkinsonian patients and matched controls. N.B. the algorithms had never seen patients or Spanish before. 12/n

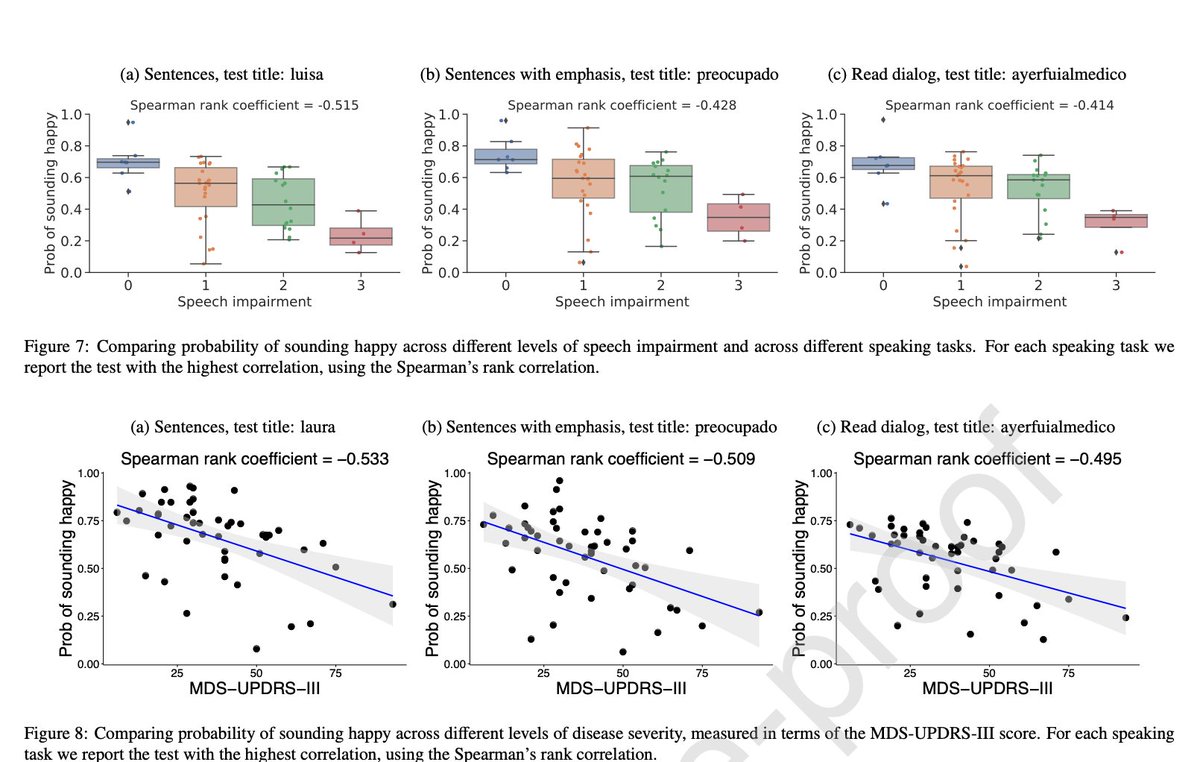

When evaluating read-aloud sentences and dialogues, our MoE's predictions did relate to diagnostic group (Cohen's d of 0.8) and speech and motor impairment severity (Spearman's rho of 0.4). 13/n

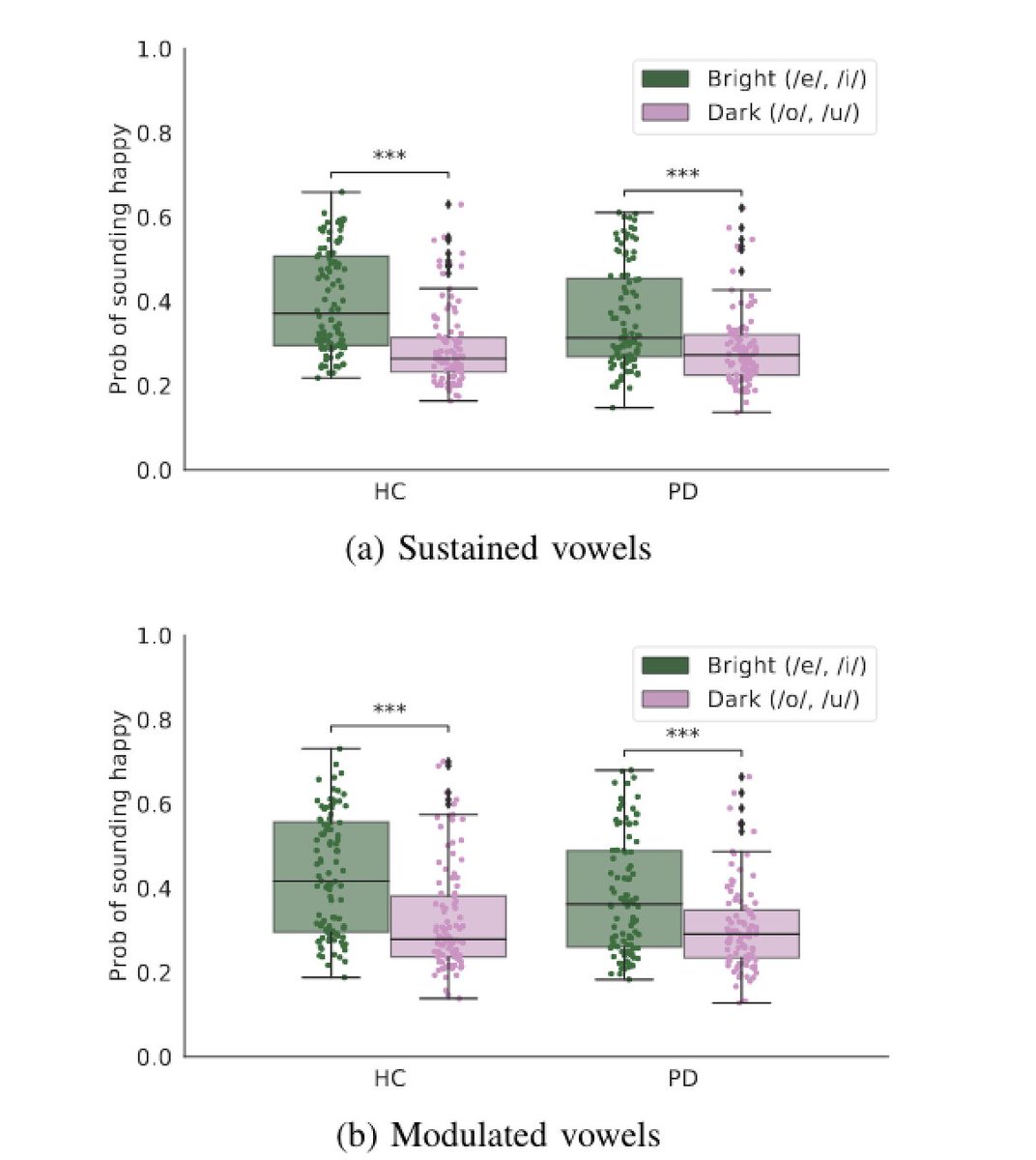
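For readers less used to these two statistics, a small standard-library sketch of how they are computed. I am assuming the pooled-SD form of Cohen's d and tie-averaged ranks for Spearman's rho; the paper may use a different variant, and the numbers below are made up for illustration, not taken from the study.

```python
import math
import statistics

def cohens_d(a, b):
    """Standardised mean difference using the pooled sample SD."""
    na, nb = len(a), len(b)
    va, vb = statistics.variance(a), statistics.variance(b)
    pooled = math.sqrt(((na - 1) * va + (nb - 1) * vb) / (na + nb - 2))
    return (statistics.mean(a) - statistics.mean(b)) / pooled

def ranks(values):
    """1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    out = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for k in range(i, j + 1):
            out[order[k]] = avg
        i = j + 1
    return out

def pearson(x, y):
    """Pearson correlation of two equal-length sequences."""
    mx, my = statistics.mean(x), statistics.mean(y)
    num = sum((a - mx) * (b - my) for a, b in zip(x, y))
    den = math.sqrt(sum((a - mx) ** 2 for a in x) * sum((b - my) ** 2 for b in y))
    return num / den

def spearman_rho(x, y):
    """Spearman's rho: Pearson correlation computed on the ranks."""
    return pearson(ranks(x), ranks(y))

# Made-up MoE 'sadness' scores and severity ratings, for illustration only.
patients = [0.62, 0.70, 0.55, 0.68, 0.74]
controls = [0.50, 0.58, 0.45, 0.60, 0.52]
severity = [2, 4, 1, 3, 5]

print(cohens_d(patients, controls))
print(spearman_rho(patients, severity))
```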

Interestingly this did not hold for prolonged phonations (aaaaa, iiiiii, eeee, etc.), but the algorithm did point to affective sound-symbolism (specific vowels convey emotional meaning, unreported in the manuscript) 14/n

In other words, a MoE trained on emotional speech in languages different from that of the test sample is able to pick up information related to diagnostic group and speech impairment severity, without any retraining. 15/n

The study is a proof of concept (e.g. more work is needed to extend the MoE to more naturalistic speech, and to compare it to more traditional algorithms, as in https://doi.org/10.1121/1.5100272). 16/n

However, the approach is promising and it's being extended now by @HansenLasse94, working on speech in depression and possible confounds (beware of background noise!). 16/n

Take home message: there is a perhaps yet untapped potential in ensemble transfer learning, especially when coupled to reflection on mechanisms to motivate the transfer (yeps, I know here we use phenomenology) beyond this specific example. 17/n

In particular, I dream of being able to better rely on current work on network approaches to clinical features across diagnostic groups to create more nuanced trans-diagnostic MoE models. n/n
