Hi everybody. Here is a quick writeup on how game devs have tried to render games more efficiently and what this means for the next generation. There will be some brief history on what motivated modern GPU design decisions.(1/35)

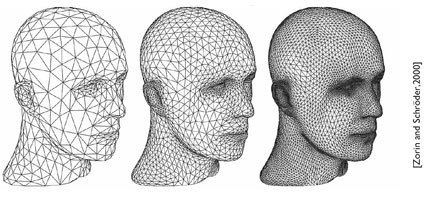

3D computer graphics are built by representing objects with polygons. The basic principles of this process can be learned in a geometry class. There are many different polygon types you could build your games out of,(2/35)

but triangles are normally used because they have the fewest vertices of any polygon, which makes them the most efficient to process (and three points always lie on a single plane). CGI films are not restricted by these limits and often build their objects out of quadrilaterals (quads) instead. This is the first of many differences between the two.(3/35)

Real-time graphics also has to worry about polygon budgets. Offline CGI can use as many polygons as it needs, and if one is not visible, simply cull it. Geometric culling is any removal of polygons from the graphics pipeline before they are drawn.(4/35)

Back before consumer GPUs existed, CPUs had to produce 3D graphics. Asking a CPU to run the entire pipeline while also culling individual triangles was impractical. This created strict polygon budgets, and a different form of culling was needed.(5/35)

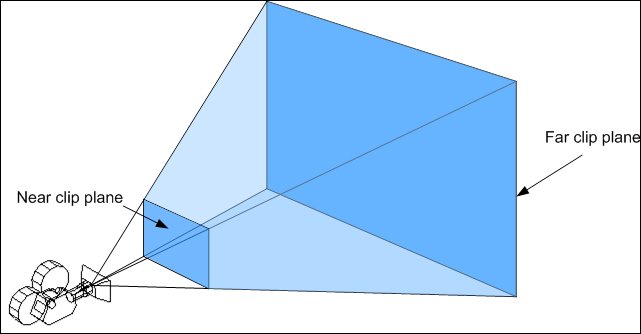

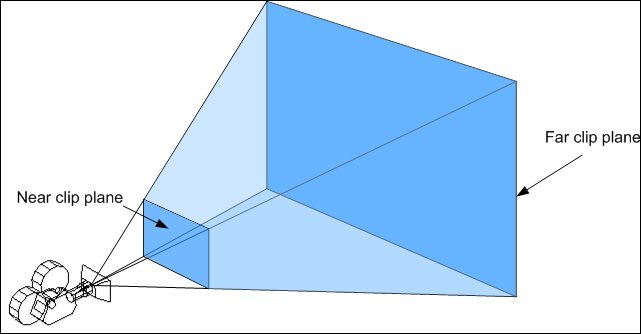

This led to view frustum culling. Based on what was in the player's view, you would remove triangles outside of that area. Objects too close to the camera were removed, and so were objects too far away. This is why games have objects pop in, which still happens today.(6/35)
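
A common way to implement this test is to wrap each object in a bounding sphere and check it against the six planes of the frustum (near, far, left, right, top, bottom). The sketch below assumes a hypothetical camera at the origin looking down +z; the helper names `make_frustum` and `sphere_visible` and the example objects are illustrative only, and real engines usually derive the planes from the view-projection matrix.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]
Plane = Tuple[Vec3, float]  # (unit inward normal n, offset d); signed distance = n.p + d


def make_frustum(fov_y_deg: float, aspect: float, near: float, far: float) -> List[Plane]:
    """Six inward-facing planes for a hypothetical camera at the origin looking down +z."""
    v = math.radians(fov_y_deg) / 2        # vertical half-angle
    h = math.atan(math.tan(v) * aspect)    # horizontal half-angle
    return [
        ((0.0, 0.0, 1.0), -near),                 # near plane: z >= near
        ((0.0, 0.0, -1.0), far),                  # far plane:  z <= far
        ((math.cos(h), 0.0, math.sin(h)), 0.0),   # left:   x >= -z * tan(h)
        ((-math.cos(h), 0.0, math.sin(h)), 0.0),  # right:  x <=  z * tan(h)
        ((0.0, math.cos(v), math.sin(v)), 0.0),   # bottom: y >= -z * tan(v)
        ((0.0, -math.cos(v), math.sin(v)), 0.0),  # top:    y <=  z * tan(v)
    ]


def sphere_visible(center: Vec3, radius: float, planes: List[Plane]) -> bool:
    """Conservative: returns False only if the sphere lies entirely outside one plane."""
    for (nx, ny, nz), d in planes:
        dist = nx * center[0] + ny * center[1] + nz * center[2] + d
        if dist < -radius:
            return False
    return True


frustum = make_frustum(60.0, 16 / 9, 0.1, 100.0)
objects = {
    "crate": ((0.0, 0.0, 10.0), 1.0),      # straight ahead
    "behind": ((0.0, 0.0, -5.0), 1.0),     # behind the camera
    "far_tree": ((0.0, 0.0, 150.0), 2.0),  # past the far plane
}
visible = [name for name, (c, r) in objects.items() if sphere_visible(c, r, frustum)]
print(visible)  # ['crate']
```

The test is deliberately conservative: a sphere that merely straddles a plane is kept, so nothing visible is ever culled by mistake.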

I have seen some confusion because some people are saying the PS5 has "radial culling" because it can remove triangles that are offscreen. Removing offscreen triangles has been standard for over 25 years, so whoever is presenting it as new does not know what they are talking about.(7/35)

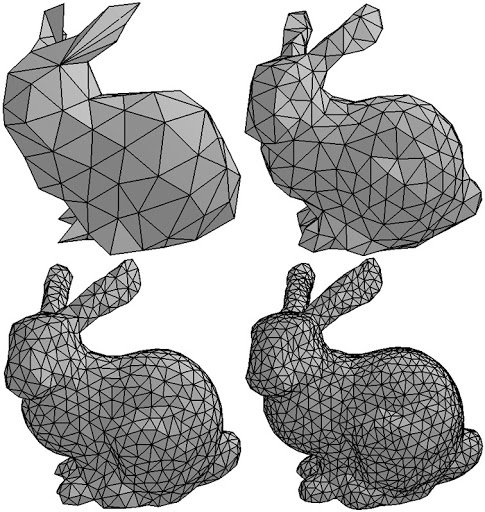

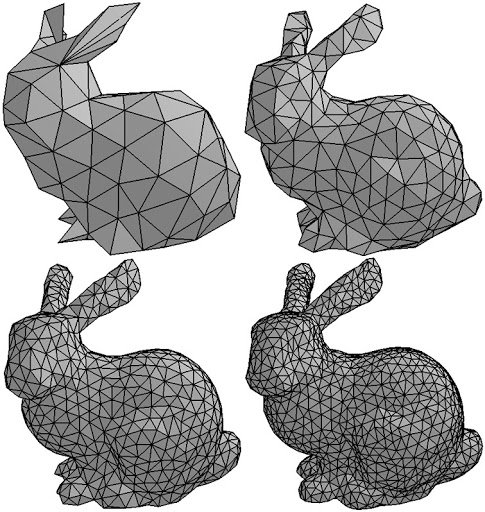

Just removing offscreen triangles is often not enough for real-time graphics. This led to Level of Detail (LOD) models. Depending on how far an object is from the camera (already calculated for frustum culling), you can choose an LOD.(8/35)

Modern games use multiple LODs per object, so closer objects are more detailed and farther ones are less detailed. This saves a huge amount of performance and keeps high-fidelity games within their polygon budget.(9/35)
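
As a minimal sketch of distance-based LOD selection, the snippet below uses the camera distance to pick an LOD and then totals the triangles drawn with and without LODs. The switch distances and per-LOD triangle counts are made-up illustration values, not numbers from any real game.

```python
import math
from bisect import bisect_right

# Hypothetical tuning values for illustration only.
LOD_SWITCH_DISTANCES = [10.0, 30.0, 80.0]   # LOD0 below 10 m, LOD1 below 30 m, LOD2 below 80 m
LOD_TRIANGLES = [20_000, 5_000, 1_200, 300]  # LOD0 (closest) .. LOD3 (farthest)


def select_lod(camera, obj_pos) -> int:
    """Return the LOD index for an object; 0 is the most detailed model."""
    d = math.dist(camera, obj_pos)  # the same distance frustum culling already needs
    return bisect_right(LOD_SWITCH_DISTANCES, d)


camera = (0.0, 0.0, 0.0)
positions = [(0, 0, 5), (0, 0, 25), (0, 0, 50), (0, 0, 200)]
lods = [select_lod(camera, p) for p in positions]
with_lod = sum(LOD_TRIANGLES[lod] for lod in lods)
without_lod = LOD_TRIANGLES[0] * len(positions)
print(lods, with_lod, without_lod)  # [0, 1, 2, 3] 26500 80000
```

Even in this tiny scene, the same four objects cost about a third of the triangles once distant ones drop to cheaper models.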

A common technique for increasing triangle count is tessellation, which subdivides triangles: 1 triangle becomes 2, then 4, and so on. This adds geometric complexity, but it costs performance. Now on to HW.(10/35)
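
One simple subdivision scheme that matches the 1, 2, 4 progression is longest-edge bisection: each pass cuts every triangle in half across its longest edge. This is only an illustration; GPU hardware tessellators use tessellation factors and their own fixed patterns, and the extra detail only shows up once the new vertices are moved (for example by a displacement map). All names below are hypothetical.

```python
from typing import List, Tuple

Point = Tuple[float, float]
Tri = Tuple[Point, Point, Point]


def _len2(a: Point, b: Point) -> float:
    return (a[0] - b[0]) ** 2 + (a[1] - b[1]) ** 2


def bisect_triangle(tri: Tri) -> List[Tri]:
    """Split one triangle into two by cutting its longest edge at the midpoint."""
    a, b, c = tri
    # Reorder the vertices so that (a, b) is the longest edge and c is opposite it.
    a, b, c = max([(a, b, c), (b, c, a), (c, a, b)], key=lambda e: _len2(e[0], e[1]))
    m = ((a[0] + b[0]) / 2, (a[1] + b[1]) / 2)
    return [(a, m, c), (m, b, c)]


def tessellate(tris: List[Tri], levels: int) -> List[Tri]:
    """Apply `levels` rounds of bisection: 1 triangle becomes 2, 4, 8, ..."""
    for _ in range(levels):
        tris = [half for t in tris for half in bisect_triangle(t)]
    return tris


def area(t: Tri) -> float:
    (x0, y0), (x1, y1), (x2, y2) = t
    return abs((x1 - x0) * (y2 - y0) - (x2 - x0) * (y1 - y0)) / 2


base = [((0.0, 0.0), (1.0, 0.0), (0.0, 1.0))]
print([len(tessellate(base, n)) for n in range(5)])  # [1, 2, 4, 8, 16]
```

The total area never changes; only the number of triangles (and therefore the cost) grows.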

I am going to use the PlayStation ecosystem, as it better represents the full evolution of 3D HW (Xbox released about 7 years later), and I have focused on Xbox more in previous writeups. I could go in depth on both, but it would be redundant.(11/35)

Up until this point, 3D graphics in games were incredibly limited because they ran on the CPU through SW rendering. One of the main reasons the PlayStation ecosystem grew so large was Sony's gamble on 3D graphics HW.(12/35)

The PS1 handled the pixel/texture side of 3D graphics in fixed function HW. For the geometry side, it used a coprocessor attached to the CPU called the Geometry Transformation Engine (GTE). This design proved incredibly successful and hard for PC CPUs to beat.(13/35)
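
The "geometry side" mostly means moving each vertex into place and projecting it onto the screen: rotate, translate, then divide by depth. The float sketch below shows that math for a single vertex. The real GTE worked in fixed point with its own registers, and the function names, the 256-pixel focal length, and the 320x240 screen center used here are assumptions for illustration.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def rotate_y(v: Vec3, angle_rad: float) -> Vec3:
    """Rotate a point around the vertical (y) axis."""
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    x, y, z = v
    return (c * x + s * z, y, -s * x + c * z)


def transform_and_project(v: Vec3, angle_rad: float, translation: Vec3,
                          focal: float, screen_center: Tuple[float, float]) -> Tuple[float, float]:
    """Rotate, translate, then perspective-divide one vertex into screen coordinates."""
    x, y, z = rotate_y(v, angle_rad)
    x, y, z = x + translation[0], y + translation[1], z + translation[2]
    if z <= 0.0:
        raise ValueError("vertex is at or behind the camera; it should have been clipped")
    # Perspective divide: something twice as far away appears half as large.
    return (screen_center[0] + focal * x / z, screen_center[1] + focal * y / z)


# One vertex of a model, placed 4 units in front of the camera.
print(transform_and_project((1.0, 0.0, 0.0), 0.0, (0.0, 0.0, 4.0), 256.0, (160.0, 120.0)))
# (224.0, 120.0)
```

A game repeats this for every vertex of every object each frame, which is why offloading it to dedicated HW mattered so much.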

The PS2 kept a similar structure: textures/pixels were handled by its "GPU", and 2 Vector Processing Units handled the geometry side of the equation. This differed from the GameCube/Xbox, which had dedicated fixed function HW for their geometry pipelines.(14/35)

Around this time, going into the PS3 era, a culling technique called occlusion culling was gaining traction. Occlusion culling removes objects that are occluded (blocked from the player's view by other objects) from rendering. This is when the fixed function HW started to lose effectiveness, since it still had to process every triangle it was fed, hidden or not.(15/35)
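
A minimal sketch of the occlusion test, assuming a coarse tile-based depth buffer that already holds the occluders (larger depth means farther; each tile stores the farthest depth of occluders that fully cover it, or infinity if nothing fully covers it, which keeps the test conservative). The buffer layout and the name `is_occluded` are hypothetical.

```python
from typing import List

INF = float("inf")


def is_occluded(depth_tiles: List[List[float]], x0: int, y0: int, x1: int, y1: int,
                nearest_depth: float) -> bool:
    """True if an object covering tiles [x0..x1] x [y0..y1] (inclusive) is hidden.

    The object is hidden only if even its nearest point lies behind the occluders
    in every tile it touches.
    """
    for y in range(y0, y1 + 1):
        for x in range(x0, x1 + 1):
            if nearest_depth <= depth_tiles[y][x]:
                return False  # might be visible in this tile, so keep it
    return True


# A 4x4-tile screen with a wall at depth 5 covering the two left columns.
depth = [[5.0, 5.0, INF, INF] for _ in range(4)]
print(is_occluded(depth, 0, 0, 1, 3, nearest_depth=8.0))  # True: fully behind the wall
print(is_occluded(depth, 0, 0, 2, 3, nearest_depth=8.0))  # False: pokes out past the wall
print(is_occluded(depth, 0, 0, 1, 1, nearest_depth=3.0))  # False: in front of the wall
```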

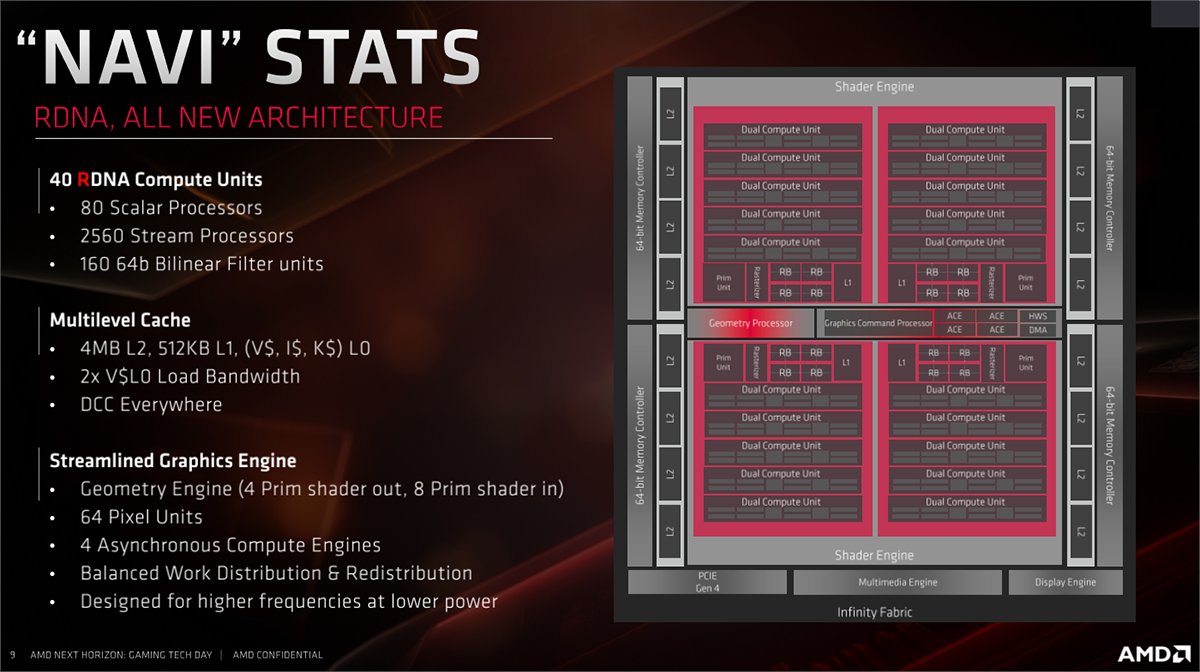

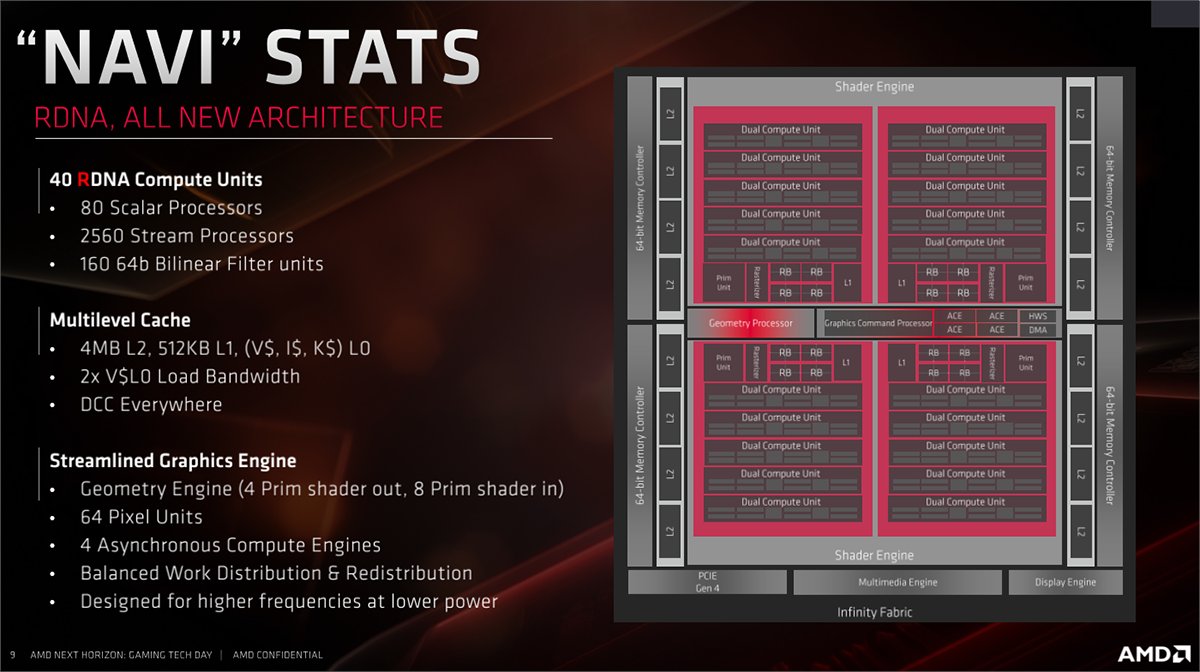

This HW still exists today. For example, RDNA1 has 4 primitive units, each of which can produce 4 triangles per clock. This fixed function HW was starting to become a GPU bottleneck in the PS3 era; the PS3 has it in its Nvidia GPU (the RSX).(16/35)

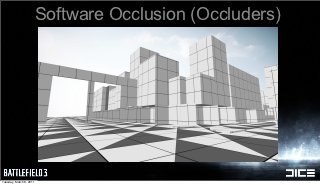

EA DICE wanted to push what 3D graphics could do on the (at the time) current gen consoles. So instead of relying on the limited fixed function HW, they built a SW occlusion culling technique into their latest engine, Frostbite 2, which shipped with Battlefield 3.(17/35)

Battlefield 3 uses the CPU to cull triangles, grouped into bounding boxes, and only feeds the surviving triangles to the GPU. This strategy worked well on the Xbox 360 CPU, but worked much better on the PS3's Cell CPU, which was usually underutilized.(18/35)
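
The general pattern of culling on the CPU and handing the GPU only the survivors can be sketched as a filter over an index buffer. This is not DICE's code: as a stand-in culling criterion it uses back-face and zero-area tests on screen-space triangles, and it assumes that counter-clockwise winding means front-facing.

```python
from typing import List, Tuple

Vec2 = Tuple[float, float]


def signed_area2(a: Vec2, b: Vec2, c: Vec2) -> float:
    """Twice the signed screen-space area; > 0 means counter-clockwise winding."""
    return (b[0] - a[0]) * (c[1] - a[1]) - (c[0] - a[0]) * (b[1] - a[1])


def cull_triangles(screen_pos: List[Vec2], indices: List[int]) -> List[int]:
    """Return a compacted index buffer containing only the triangles worth drawing."""
    survivors: List[int] = []
    for i in range(0, len(indices), 3):
        i0, i1, i2 = indices[i:i + 3]
        if signed_area2(screen_pos[i0], screen_pos[i1], screen_pos[i2]) <= 0.0:
            continue  # back-facing (clockwise) or zero-area: never send it to the GPU
        survivors.extend((i0, i1, i2))
    return survivors


verts = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0), (10.0, 10.0), (20.0, 0.0)]
indices = [0, 1, 2,   # front-facing: kept
           2, 1, 0,   # same triangle wound the other way: back-facing, dropped
           0, 1, 4,   # all three points on one line: zero area, dropped
           1, 3, 2]   # front-facing: kept
print(cull_triangles(verts, indices))  # [0, 1, 2, 1, 3, 2]
```

The GPU then receives the shorter index buffer, so its fixed function HW never spends time on the dropped triangles.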

Battlefield 3 was one of the few multiplatform games that looked better on PS3. It was also one of the most visually impressive games on the market at the time, and the techniques behind it went on to reshape the next generation of video games.(19/35)

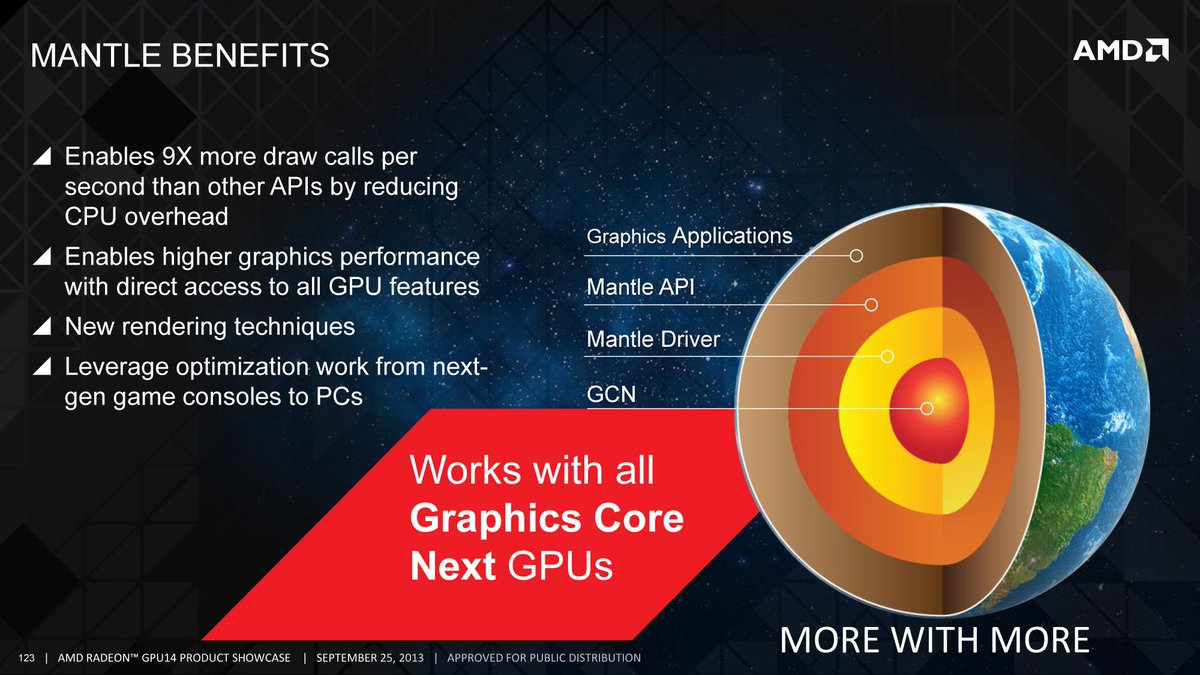

DICE found that a task normally done by the GPU could be performed more efficiently on the CPU. AMD consulted heavily with DICE on its new Graphics Core Next (GCN) architecture, and DICE also influenced the Mantle API.(20/35)

Mantle focused on improving GPU utilization, partly through an emphasis on Compute Shaders. In GCN, each Compute Unit (CU) has 64 Stream Processors (SPs), so it can perform 64 operations per clock, and those SPs can be used for any general purpose compute task.(21/35)
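
The 64-SPs-per-CU figure turns into peak compute with simple arithmetic: each SP can issue one fused multiply-add (FMA) per clock, which is conventionally counted as 2 floating-point operations. Plugging in the PS4 GPU (18 CUs at 800 MHz) and the Xbox One GPU (12 CUs at 853 MHz) reproduces their commonly quoted peak figures; the function name is just for illustration.

```python
def peak_fp32_tflops(compute_units: int, clock_mhz: float, sps_per_cu: int = 64) -> float:
    """Peak FP32 throughput: CUs x SPs per CU x 2 FLOPs per FMA x clock."""
    flops_per_clock = compute_units * sps_per_cu * 2  # one FMA (= 2 FLOPs) per SP per clock
    return flops_per_clock * clock_mhz * 1e6 / 1e12


print(round(peak_fp32_tflops(18, 800), 2))  # PS4:      1.84
print(round(peak_fp32_tflops(12, 853), 2))  # Xbox One: 1.31
```

These are theoretical peaks; how much of that math actually gets used depends on how well games keep the CUs busy, which is the utilization problem Mantle was targeting.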

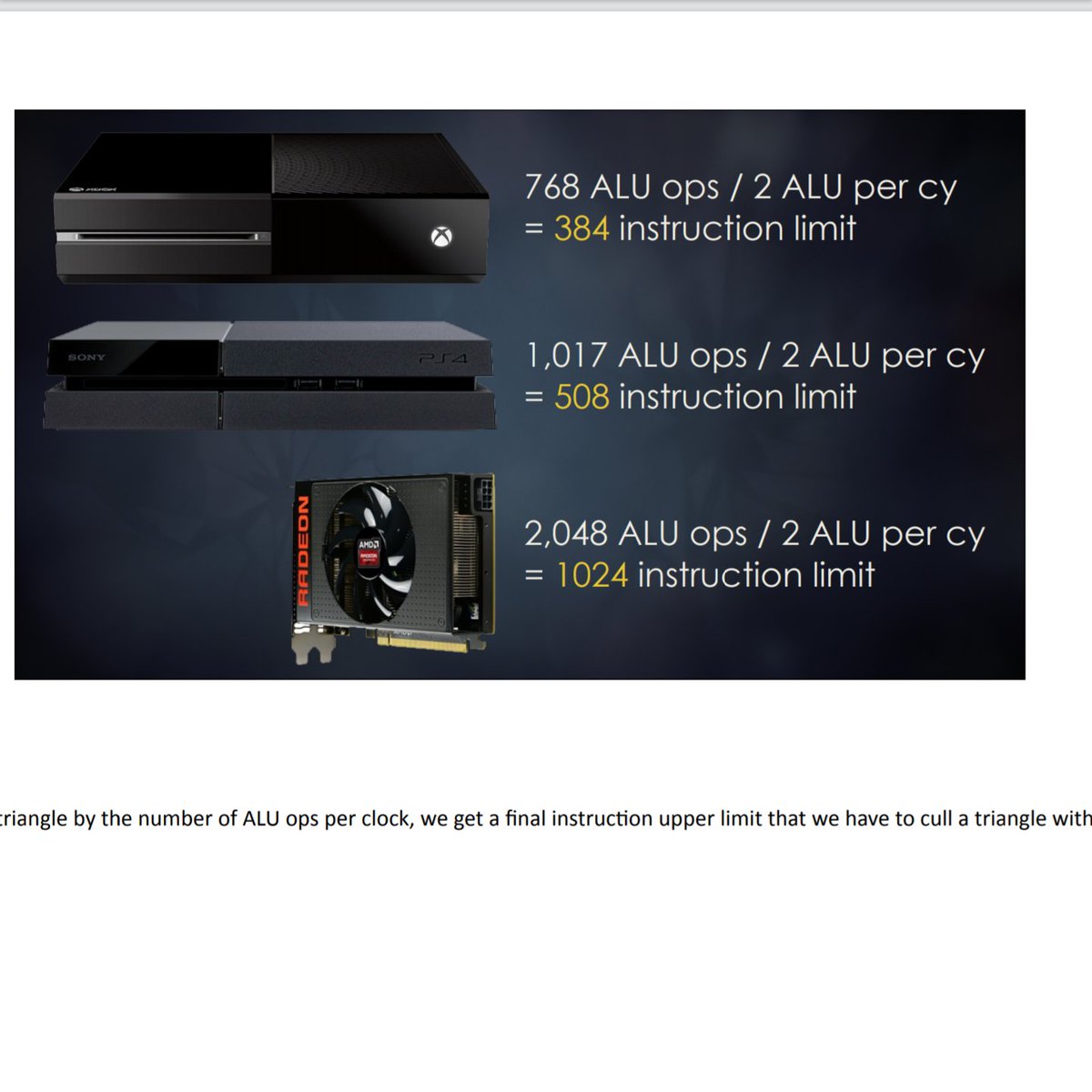

Compute tasks are math heavy, meaning they rely heavily on the Arithmetic Logic Units (ALUs inside the SPs). Mantle was eventually discontinued, and its ideas carried on in 3 APIs: the PS4's API, DirectX 12, and Vulkan. AMD built GCN around this compute focus, which made the PS4/X1 compute machines.(22/35)

Nvidia's GPU architectures were not oriented this way, and since most PC games were built with them in mind, GCN's compute capability was largely underutilized. However, some games did take advantage of it. Frostbite (the engine the architecture was built around) used Compute Shaders for culling.(23/35)

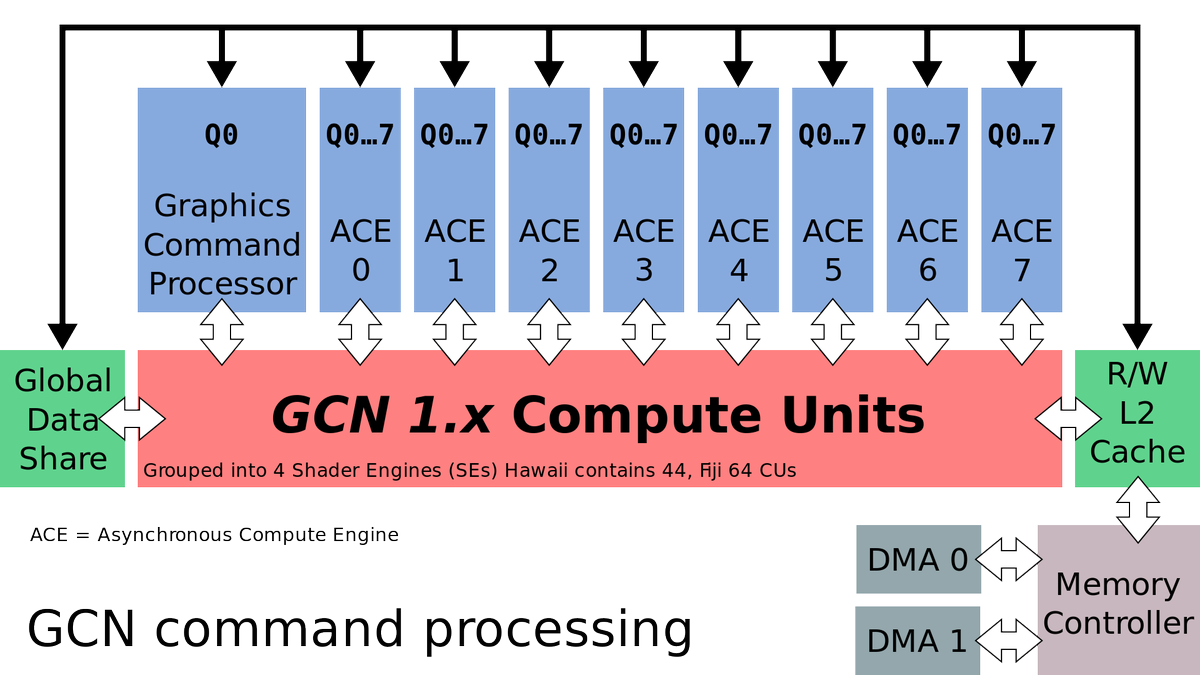

How Compute Shaders are implemented matters for how they perform. The GPU accepts 2 types of workloads: Graphics (through the Command Processor) and Compute (through the Asynchronous Compute Engines, or ACEs). These 2 pipelines don't communicate directly and rely on the CPU to coordinate.(24/35)

This means many graphics functions are off-limits to Compute Shaders, and they can't share cache with the graphics pipeline. This does degrade their raw performance, but Frostbite found a use: compute culling would be a net win as long as it could beat the fixed function HW.(25/35)

Here was their math for the performance the code required. What they did was bundle triangles into meshlets (small clusters of triangles). Geometry could then be removed a whole mesh at a time, or a meshlet at a time within the mesh. This is how modern Frostbite games work.(26/35)
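
A minimal sketch of this two-level idea: chunk a mesh's index buffer into meshlets, give each a bounding sphere, then reject the whole mesh or individual meshlets with one sphere test each. The 64-triangle meshlet size, the naive in-order chunking (real builders group spatially adjacent triangles so the bounds stay tight), the stand-in visibility test, and all names are assumptions; this is not Frostbite's implementation, which runs in Compute Shaders on the GPU.

```python
import math
from dataclasses import dataclass
from typing import Callable, List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
SphereTest = Callable[[Vec3, float], bool]  # (center, radius) -> might be visible?


@dataclass
class Meshlet:
    indices: List[int]  # 3 indices per triangle
    center: Vec3        # bounding-sphere center
    radius: float       # bounding-sphere radius


def bounding_sphere(positions: Sequence[Vec3], indices: Sequence[int]) -> Tuple[Vec3, float]:
    """Simple (not minimal) sphere: centroid of the referenced vertices, farthest vertex."""
    verts = [positions[i] for i in set(indices)]
    center = tuple(sum(v[k] for v in verts) / len(verts) for k in range(3))
    radius = max(math.dist(center, v) for v in verts)
    return center, radius


def build_meshlets(positions: Sequence[Vec3], indices: Sequence[int],
                   max_tris: int = 64) -> List[Meshlet]:
    """Chunk triangles in index-buffer order into meshlets of at most max_tris."""
    step = max_tris * 3
    meshlets = []
    for start in range(0, len(indices), step):
        chunk = list(indices[start:start + step])
        center, radius = bounding_sphere(positions, chunk)
        meshlets.append(Meshlet(chunk, center, radius))
    return meshlets


def cull_mesh(positions: Sequence[Vec3], indices: Sequence[int],
              meshlets: List[Meshlet], visible: SphereTest) -> List[int]:
    """Level 1: test the whole mesh. Level 2: test each meshlet. Return surviving indices."""
    center, radius = bounding_sphere(positions, indices)
    if not visible(center, radius):
        return []
    survivors: List[int] = []
    for m in meshlets:
        if visible(m.center, m.radius):
            survivors.extend(m.indices)
    return survivors


def left_of_40(center: Vec3, radius: float) -> bool:
    """Stand-in for a real frustum test: keep anything reaching into x <= 40."""
    return center[0] - radius <= 40.0


# A strip of 100 unit quads along +x: 200 triangles, 4 unshared vertices per quad.
positions: List[Vec3] = []
indices: List[int] = []
for i in range(100):
    base = len(positions)
    positions += [(i, 0, 0), (i + 1, 0, 0), (i, 1, 0), (i + 1, 1, 0)]
    indices += [base, base + 1, base + 2, base + 2, base + 1, base + 3]

meshlets = build_meshlets(positions, indices)  # 64 + 64 + 64 + 8 triangles
print(len(meshlets), len(cull_mesh(positions, indices, meshlets, left_of_40)) // 3)  # 4 128
```

Two of the four meshlets are rejected with one sphere test each instead of 72 per-triangle tests, which is the point of clustering.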

Nvidia's Turing/Ampere architectures have improved their compute capability now that games are starting to rely on it. Other big recent titles leaning on compute like this are Death Stranding, Red Dead Redemption 2, COD:MW, and Doom Eternal.(27/35)

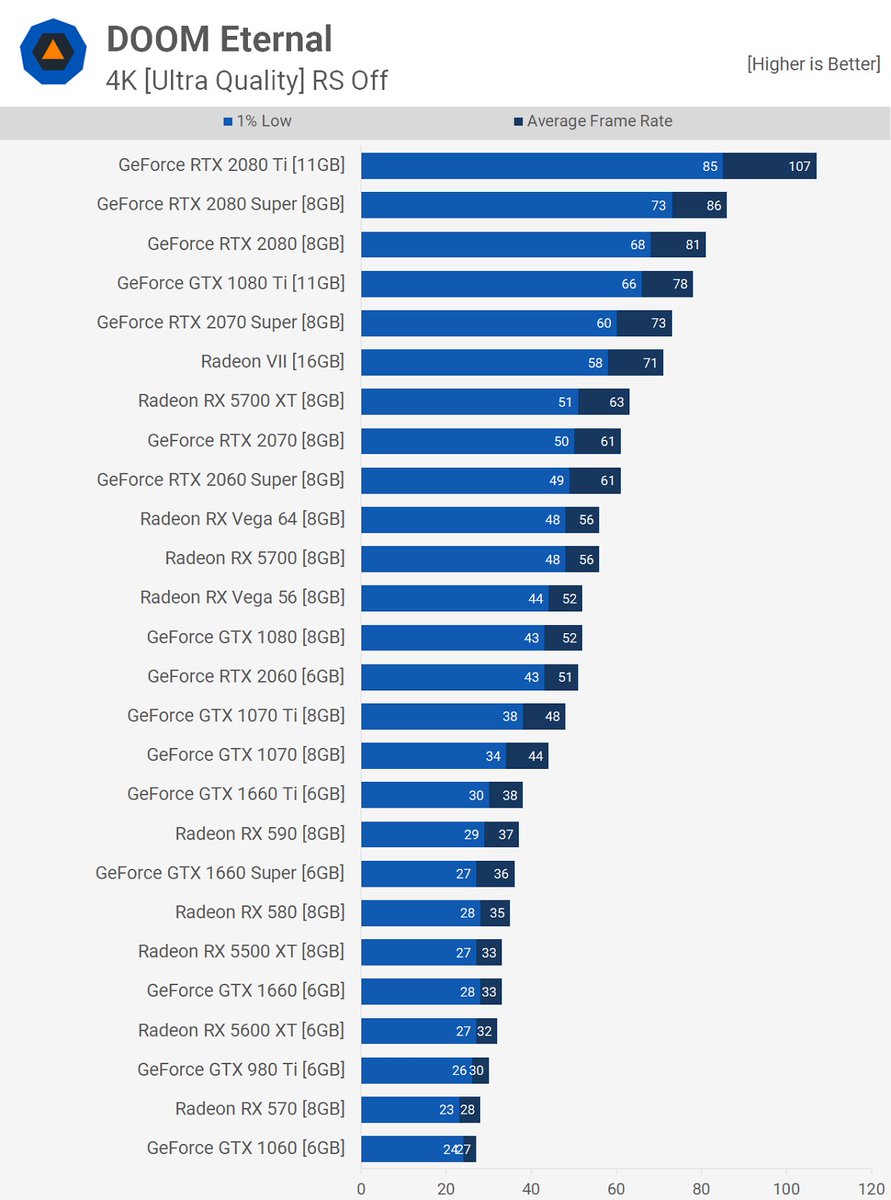

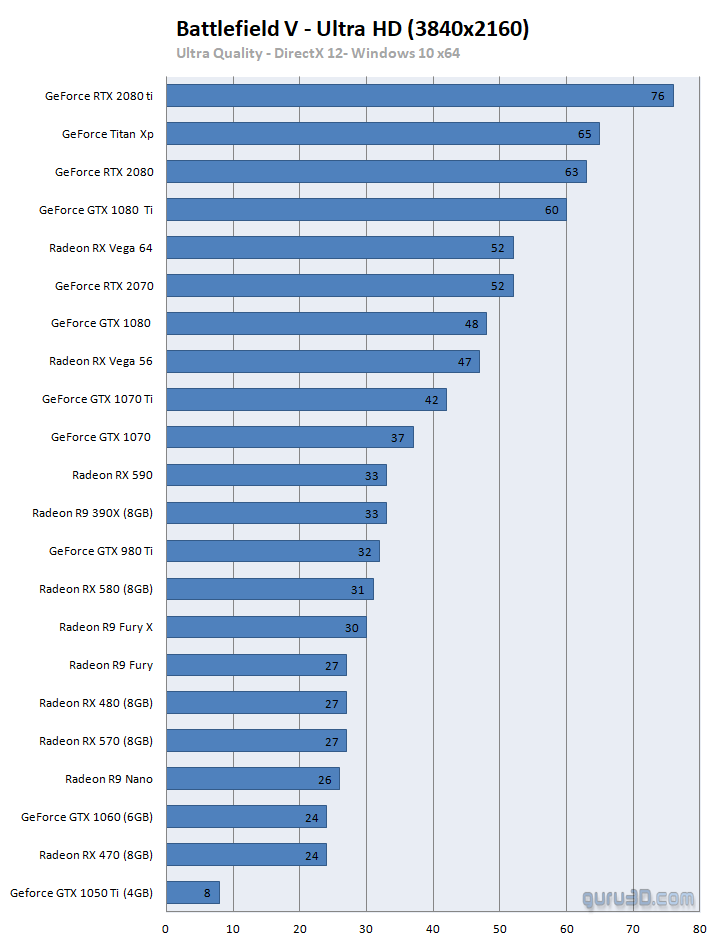

Remember how I said games were underutilizing GCN? Some of these more recent titles favor this functionality, and you can see how much better AMD GPUs do against the GTX 10 Series in them. Both AMD and Nvidia sought to improve performance further.(28/35)

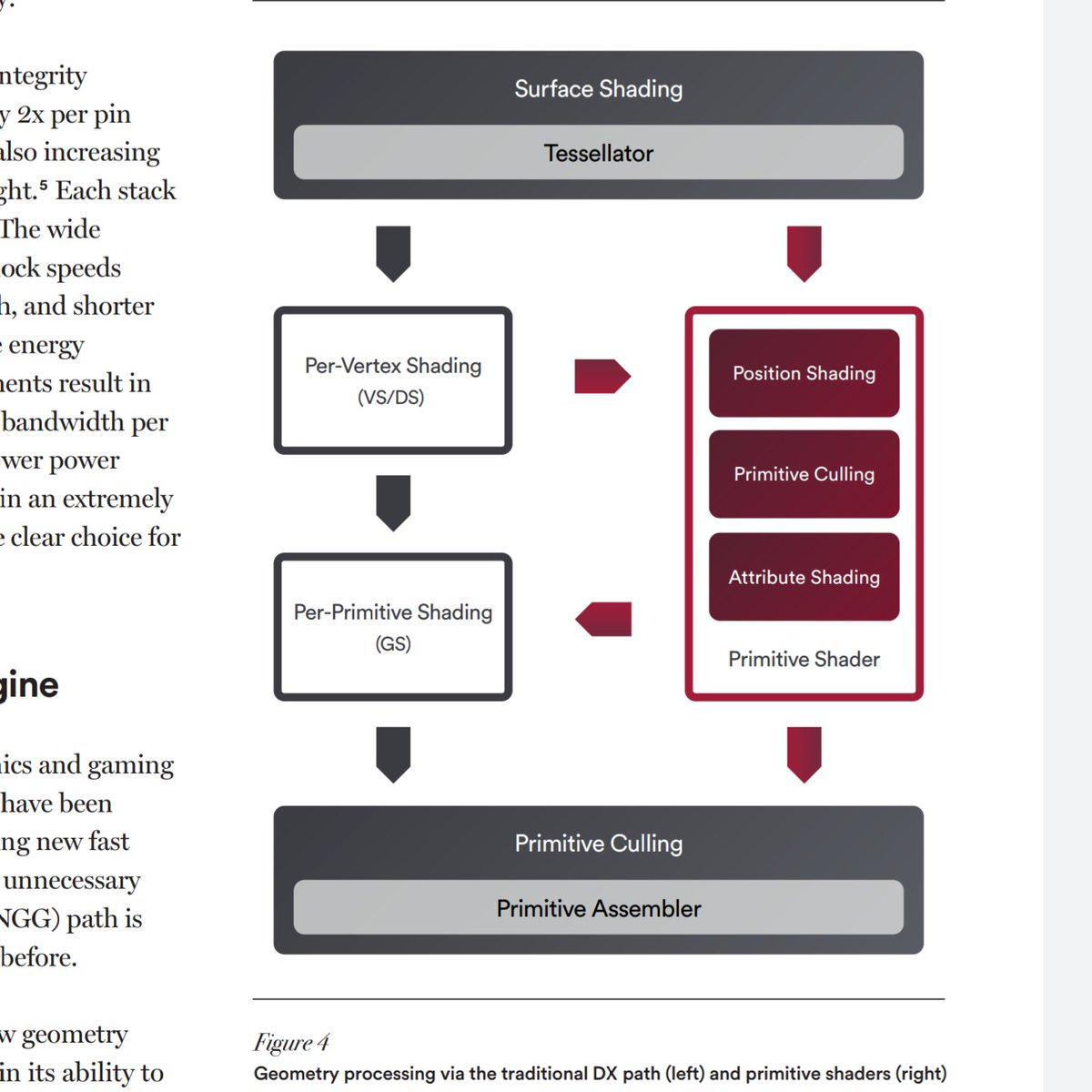

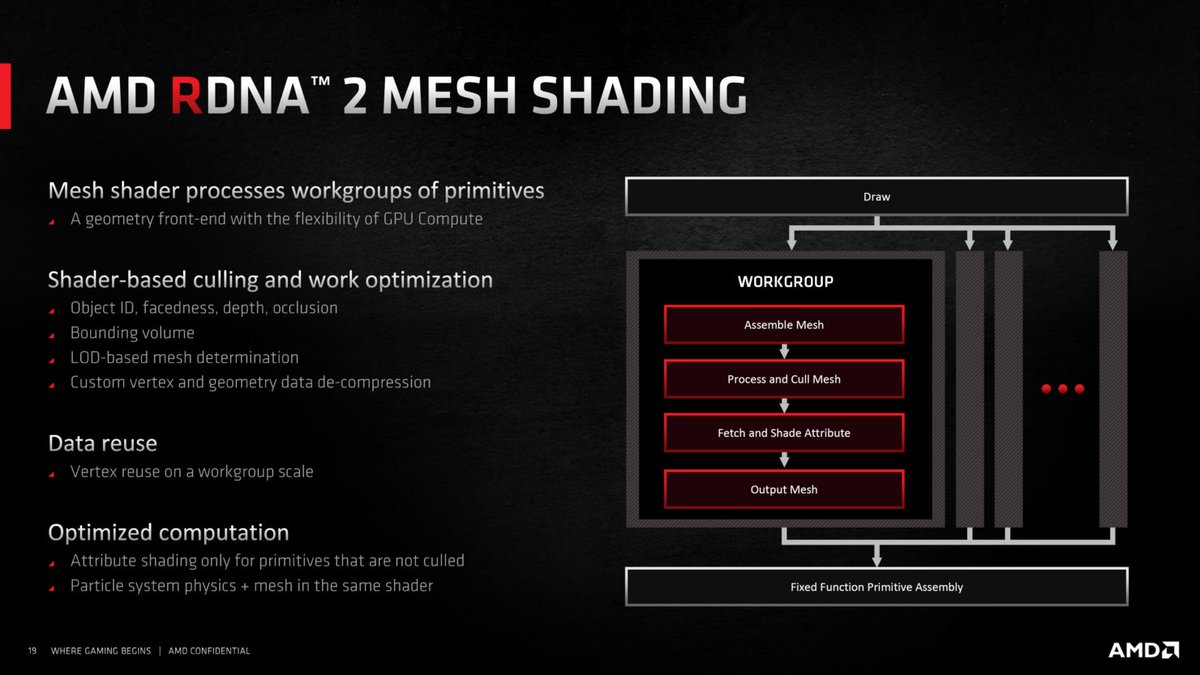

This led to Primitive/Mesh Shaders. The goal was to integrate Compute Shader capabilities into the graphics pipeline, so the GPU no longer relies heavily on the CPU/RAM for work the GPU and its caches could do. AMD's Vega architecture introduced this groundbreaking feature.(29/35)

The problem for Vega was the lack of SW support. Silicon design requires making the right bets years in advance, and AMD has been incredibly successful recently because its bets paid off. RDNA is its new architecture built for the future of gaming.(30/35)

RDNA took Vega's new Geometry Engines and unified them for improved performance. It will take some time for developers to take advantage of these benefits. I would look out for these 3 in the coming years: Unreal Engine 5, Frostbite (Battlefield), and Sony's 1st party studios.(31/35)

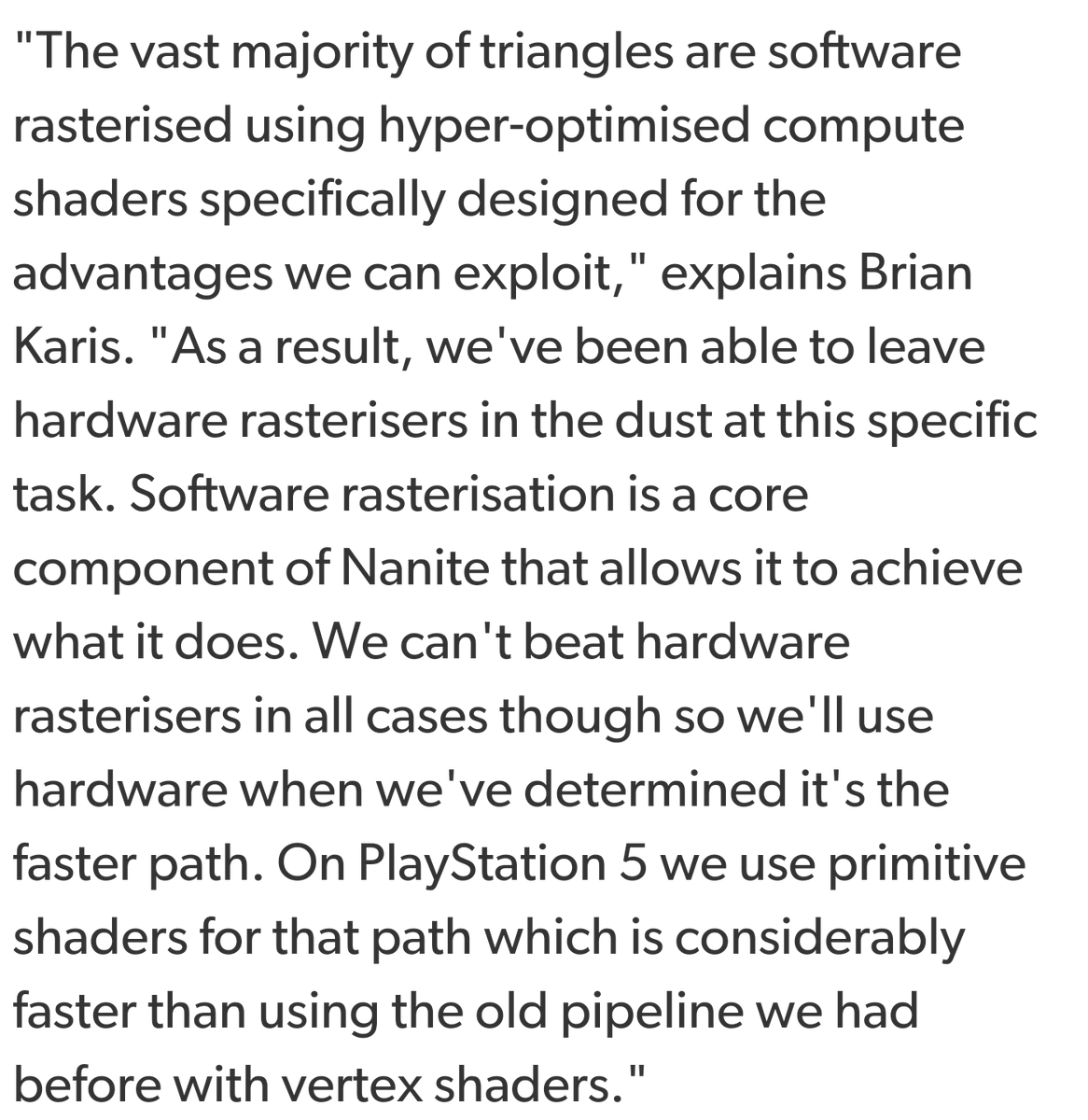

UE5 uses a new micropolygon solution, similar to CGI movies, that only renders polygons in view. UE5 also supports the older Compute Shader approach on GPUs without Primitive/Mesh Shader support.(32/35)
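
The micropolygon idea from CGI film renderers (the Reyes approach) is to "dice" surfaces until each piece covers about one pixel. The sketch below computes how many times an edge must be halved to reach that size at a given distance. It illustrates the film technique only and is not how UE5 works internally; the 60° vertical field of view, the 2160-pixel screen height, and the 1-pixel target are assumed values.

```python
import math


def projected_pixels(size_world: float, distance: float,
                     fov_y_deg: float = 60.0, screen_h: int = 2160) -> float:
    """On-screen length in pixels of a world-space length seen face-on at `distance`."""
    focal_px = (screen_h / 2) / math.tan(math.radians(fov_y_deg) / 2)
    return size_world * focal_px / distance


def dice_levels(edge_world: float, distance: float, target_px: float = 1.0) -> int:
    """How many times an edge must be halved so it spans at most `target_px` pixels.

    With a 4-way midpoint split, each level halves every edge and turns one
    triangle into 4, so `levels` rounds produce 4 ** levels micropolygons.
    """
    px = projected_pixels(edge_world, distance)
    return max(0, math.ceil(math.log2(px / target_px)))


for d in (10.0, 1000.0, 5000.0):
    levels = dice_levels(1.0, d)
    print(d, levels, 4 ** levels)
# 10.0 8 65536
# 1000.0 1 4
# 5000.0 0 1
```

Detail automatically falls off with distance: the same 1 m triangle needs tens of thousands of micropolygons up close and none extra far away.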

A big difference between the current gen and next gen versions of UE5 games will be how polygons are stored. HDD seek times are over 500 times longer than SSD seek times, which limits how quickly polygons can be streamed in. On the PS4, all polygons will need to be culled from RAM.(33/35)
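
Some rough arithmetic shows why seek time matters for streaming: count how many back-to-back random reads fit in one frame. The 10 ms HDD seek is an assumed typical value, and 0.02 ms for the SSD is chosen only to match the 500× ratio above; the sketch ignores transfer time and the fact that SSDs can serve many requests in parallel.

```python
def random_reads_per_frame(seek_ms: float, fps: float) -> int:
    """Back-to-back random reads that fit in one frame, ignoring transfer time."""
    frame_ms = 1000.0 / fps
    return int(frame_ms // seek_ms)


HDD_SEEK_MS = 10.0  # assumed typical HDD random-access time
SSD_SEEK_MS = 0.02  # assumed, chosen to be 500x smaller than the HDD figure

print(random_reads_per_frame(HDD_SEEK_MS, 30))  # 3
print(random_reads_per_frame(SSD_SEEK_MS, 30))  # 1666
```

With only a handful of random reads per frame, an HDD-based game has to keep geometry resident in RAM ahead of time, while an SSD can fetch it much closer to when it is needed.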

On the PS5, culling can also extend to polygons still on the SSD, thanks to its low seek times. Now might be a good time to rewatch the UE5 trailer with your newfound knowledge.(34/35) https://youtu.be/qC5KtatMcUw

As always, if you have questions, please ask. I know this was a long one, and I would recommend taking notes on this stuff to help with retention. Thanks.(35/35)
