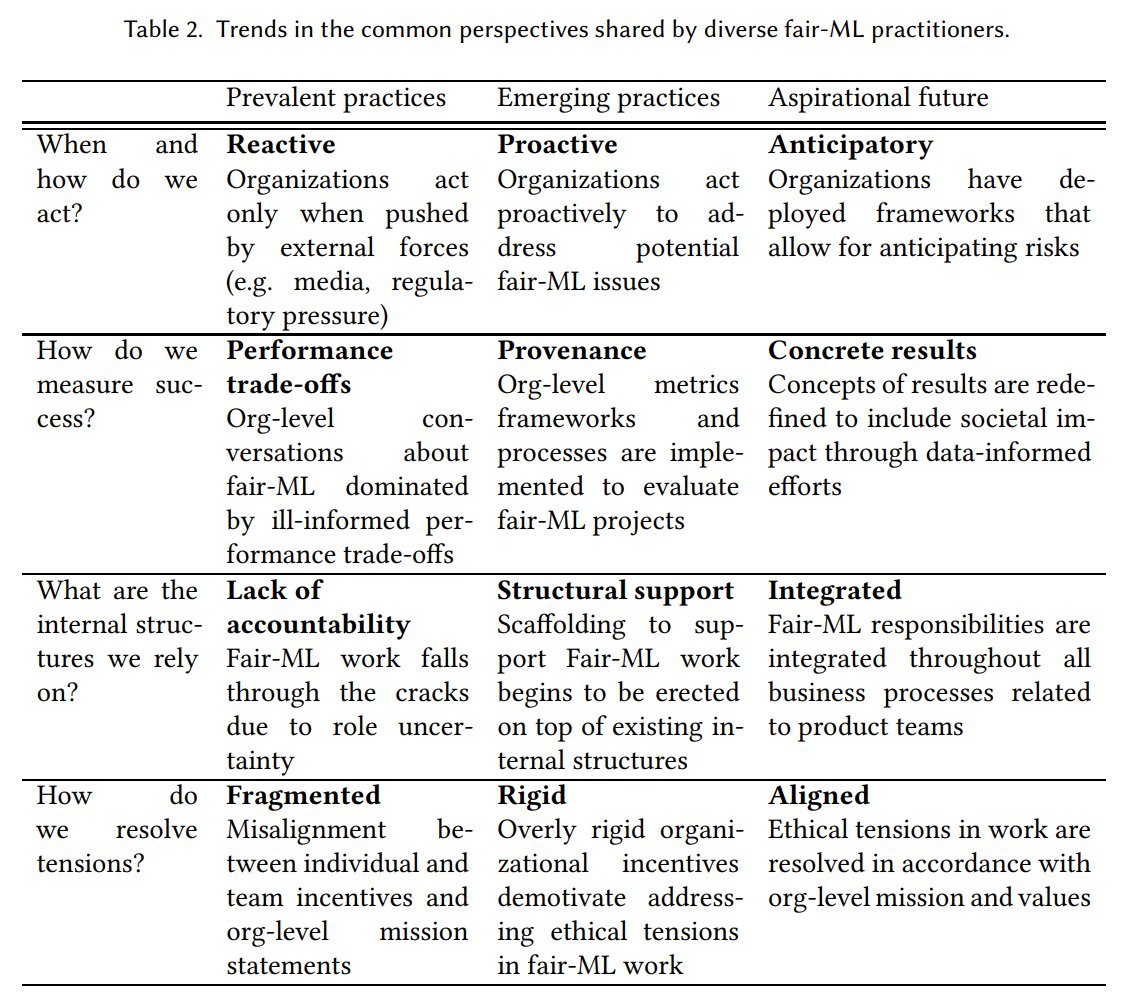

An ethnographic study interviewed 26 responsible AI practitioners. Patterns prevalent at many orgs:

- reactive, respond only to external pressure

- ill-informed performance trade-offs

- lack of accountability

- misalignment between team & individual incentives

https://arxiv.org/abs/2006.12358

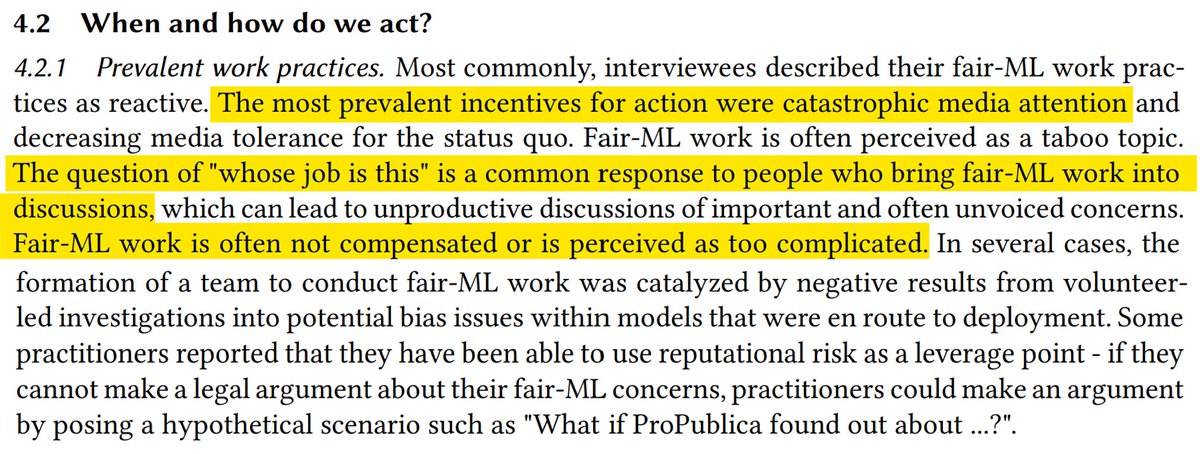

- The most prevalent incentives for action were catastrophic media attention & decreasing media tolerance

- Fair-ML work is often perceived as a taboo topic

- "Whose job is this" is a common response

- Fair-ML work is often not compensated or is perceived as too complicated

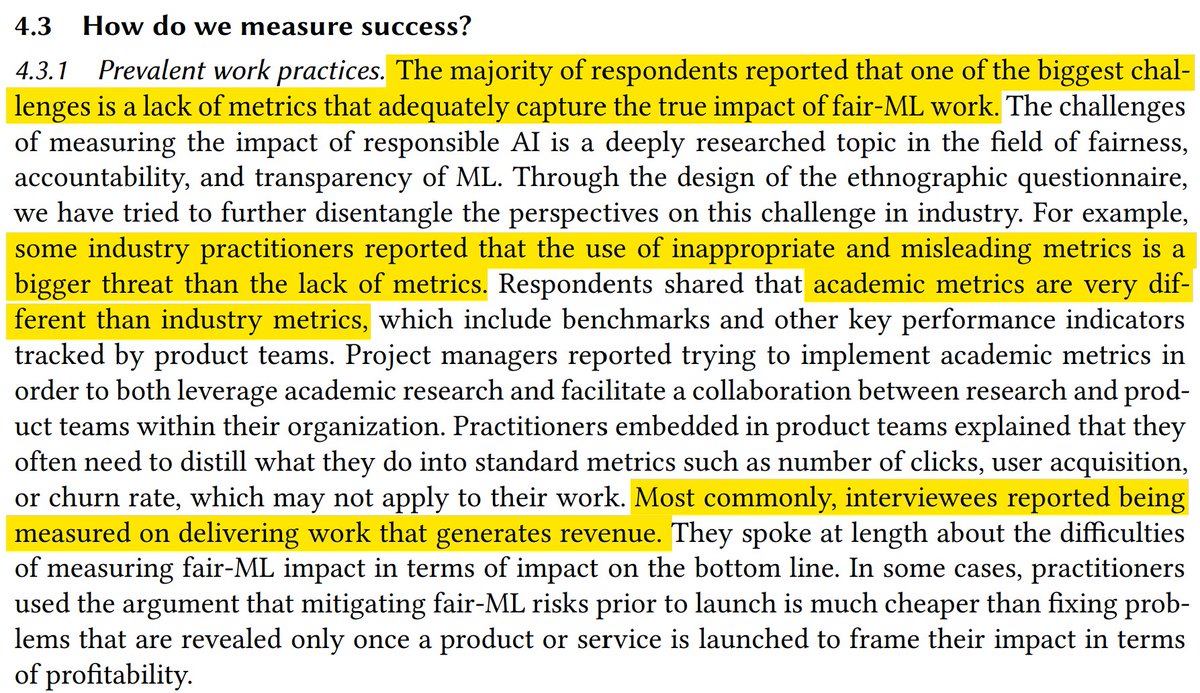

- One of the biggest challenges is lack of metrics that capture the true impact of fair-ML work

- Inappropriate & misleading metrics are a bigger threat than a lack of metrics

- Most commonly, fair-ML practitioners reported being measured on delivering work that generates revenue

Metrics-related challenges faced by fair-ML practitioners:

- time pressure leads orgs to focus on short-term, easier-to-measure goals

- qualitative skills not prioritized

- leadership expects "magic": easy-to-implement solutions that may not exist

- performance reviews don't account for it

The above quotes are from "Where Responsible AI meets Reality: Practitioner Perspectives on Enablers for shifting Organizational Practices" by @bobirakova @jingyingyang @hsmcramer @ruchowdh

https://arxiv.org/abs/2006.12358