So I guess I can talk a bit now about what I've spent most of the last year doing (in terms of #GravitationalWaves, that is, not hiding behind the sofa because of coronavirus et al.). Time for a 🧵 about using "robots" for @LIGO and @ego_virgo science.

Before I go on: a LOT of people were involved in putting together GWTC-2 (https://arxiv.org/abs/2010.14527). I'm only really going to focus on the bit I did, not because it's the most important, but just because I was there the whole time for it.

I got seriously involved with the paper back in January, around the time it became apparent that we'd need some extra person-power to get everything ready in a timely manner. (In hindsight this looks rather naive, for a few reasons...)

Putting together a paper of this size is a big undertaking. Remember our first detection, GW150914, five years ago? That took us five months to get everything ready for. This time we had 39 events, not just one.

The plan for dealing with this new regime was to delegate the analysis to members of our large collaborations, who would each keep an eye on the analyses for their designated event. Way back in 2019 I was one of those people (I was assigned to GW190915_235702).

Initially this worked really well, and everyone did a fantastic job of looking after their analyses. But it's a lot of work, and everyone was also very busy with their own research, their own teaching, or even their own studying (we had everyone from profs to grad students).

To understand where this system started to feel the strain you need to know a bit about the "lifecycle" of a gravitational wave signal. It has three critical steps:

1. Online analysis

2. Offline analysis

3. Final analysis

Step 1 is highly automated and happens *very* fast. It's what we use to determine whether something we've "detected" is a gravitational wave or just noise. It then works out whether that thing is interesting enough to tell our colleagues in the astronomy community about.

When Step 1 is complete the event moves to Step 2. This is where our intrepid humans come in (and excel at their job).

The "online" analysis is fast, but not very accurate, so in Step 2 people look at the results, and if something looks odd or interesting...

they set up a more detailed (and slower) analysis to investigate. The analysis at this step is still a bit "rough", but it's an important step in determining whether we've seen something very exciting, like GW190521, the massive BBH we announced a month or so ago.

Once Step 2 is done, we can decide what Step 3 looks like for an event.

If the event is really unusual, we put together a team to work just on that signal. All of the events then get prepared for their most thorough analysis, which is the one presented in GWTC-2.

Step 3 is where things get complicated. It's very important that we get things right at this stage, which means lots of checks, and using our very best analysis techniques, which are computationally expensive.

Coordinating this process between small teams of people worked well for GWTC-1, when we had a small number of events, but for GWTC-2 it was clear we'd need something new. This is finally where I come into the story.

It was clear that the solution to this problem was to automate as much of Step 3 as possible. Computers make far fewer mistakes than people do (copying and pasting the wrong line of a configuration file, for example), and they can do the work *much* faster.

However, we were on a tight timeline, and we wanted it to be easy to spot problems across the hundred or so analysis processes which were running across thousands of CPU cores. This is where the solution started to get strange.

We make a lot of use of @gitlab to track our software (and paper) development in @LIGO. As well as storing git repositories, it has a built-in bug tracker. It also has a lovely RESTful API, and can make kanban boards.

So we set up a repository to track the "O3a" analyses, and gave each event an issue in the tracker. So far, so good. We were starting to bring a lot of information together in one place.

Then we gave scripts running on our computing clusters access to the tracker on gitlab. These scripts were able to submit analysis jobs to the @HTCondor scheduling system, and then keep checking them periodically to make sure they were still running.

If a job "fell over" and stopped running, one of the scripts would post a comment on the event's issue and then tag the event as "stuck". We had boards set up to monitor the state of each event as it passed through the analysis.
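The monitoring step can be sketched in a few lines of Python. This is a minimal, hypothetical sketch, not the code that was actually used: `Issue` is an in-memory stand-in for a GitLab issue (real code would go through GitLab's REST API), `check_job` is an illustrative helper name, and treating held or removed jobs as having "fallen over" is an assumption. The `JobStatus` values are HTCondor's standard numeric job-status codes.

```python
from dataclasses import dataclass, field
from enum import IntEnum


class JobStatus(IntEnum):
    """HTCondor's numeric JobStatus codes."""
    IDLE = 1
    RUNNING = 2
    REMOVED = 3
    COMPLETED = 4
    HELD = 5
    TRANSFERRING_OUTPUT = 6
    SUSPENDED = 7


@dataclass
class Issue:
    """Hypothetical in-memory stand-in for one event's GitLab issue."""
    title: str
    labels: set = field(default_factory=set)
    comments: list = field(default_factory=list)


# Statuses treated as "the job fell over" (an assumption for this sketch).
FAILED = {JobStatus.HELD, JobStatus.REMOVED}


def check_job(issue: Issue, job_id: int, status: JobStatus) -> None:
    """Comment on the issue and label it "stuck" if its job has stopped running."""
    # Skip events already marked stuck so repeated polls don't re-comment.
    if status in FAILED and "stuck" not in issue.labels:
        issue.comments.append(
            f"Job {job_id} is {status.name.lower()}; marking event as stuck."
        )
        issue.labels.discard("running")
        issue.labels.add("stuck")


# Usage: one poll of a held job.
issue = Issue("GW190915_235702", labels={"running"})
check_job(issue, 1234, JobStatus.HELD)
print(issue.labels)  # {'stuck'}
```

Guarding on the existing "stuck" label means that a script polling periodically won't flood the issue with duplicate comments for the same failed job.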

What we'd actually built was a state machine, using @gitlab to store the state. This was never really the plan, but it's what happened.

When a job finished, that would also get tagged, and we had a number of states which allowed us to control the entire analysis process using just an issue tracker and by dragging issues around a kanban board.
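Storing the state as a label on each event's issue makes the state machine easy to sketch. In this minimal illustration the state names, the trigger names, and the transition table are all hypothetical (only "stuck" comes from the thread); dragging a card to another column on the kanban board would correspond to a trigger such as `restart`.

```python
from __future__ import annotations

# Hypothetical states, each stored as a single label on the event's issue.
STATES = {"ready", "running", "stuck", "finished", "uploaded"}

# Hypothetical transition table: (current state, trigger) -> next state.
TRANSITIONS = {
    ("ready", "submit"): "running",
    ("running", "job_failed"): "stuck",
    ("running", "job_finished"): "finished",
    ("stuck", "restart"): "ready",  # e.g. a human drags the card back
    ("finished", "upload"): "uploaded",
}


def current_state(labels: set[str]) -> str:
    """Read the state from the issue's labels; exactly one state label is expected."""
    found = labels & STATES
    if len(found) != 1:
        raise ValueError(f"expected exactly one state label, got {sorted(found)}")
    return found.pop()


def advance(labels: set[str], trigger: str) -> set[str]:
    """Return a new label set after applying `trigger`, or raise if it isn't allowed."""
    state = current_state(labels)
    try:
        new_state = TRANSITIONS[(state, trigger)]
    except KeyError:
        raise ValueError(f"cannot {trigger!r} from state {state!r}") from None
    # Keep non-state labels (e.g. the event name) and swap the state label.
    return (labels - STATES) | {new_state}


# Usage: a run that falls over once, is restarted, then completes.
labels = {"ready", "GW190915_235702"}
for trigger in ["submit", "job_failed", "restart", "submit", "job_finished", "upload"]:
    labels = advance(labels, trigger)
print(sorted(labels))  # ['GW190915_235702', 'uploaded']
```

Keeping the transitions as plain data means a disallowed move (say, uploading results from a stuck run) raises an error instead of silently putting the event into a nonsensical state.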

When an analysis finished, the scripts would upload all of the results and commit them to a git repository for each event.*

* Don't try this at home: it makes massive git repos and (rightly) very upset sysadmins.

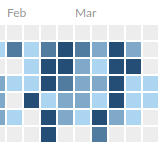

This had the side-effect of giving me the most consistent contribution graph of my life during March, and led a lot of people to question my working hours. I did eventually get the scripts to add a 🤖 emoji to their work: hence the birth of the @LIGO robot.

(It's called Olivaw, in case you were wondering. The software library is called Asimov...)

Anyway, this gave us an automated process which did all of the monitoring of the analyses and uploaded the results somewhere everyone could get them. By around May my part in GWTC-2 was coming to an end, and I went back to doing things other than software engineering for a bit...
