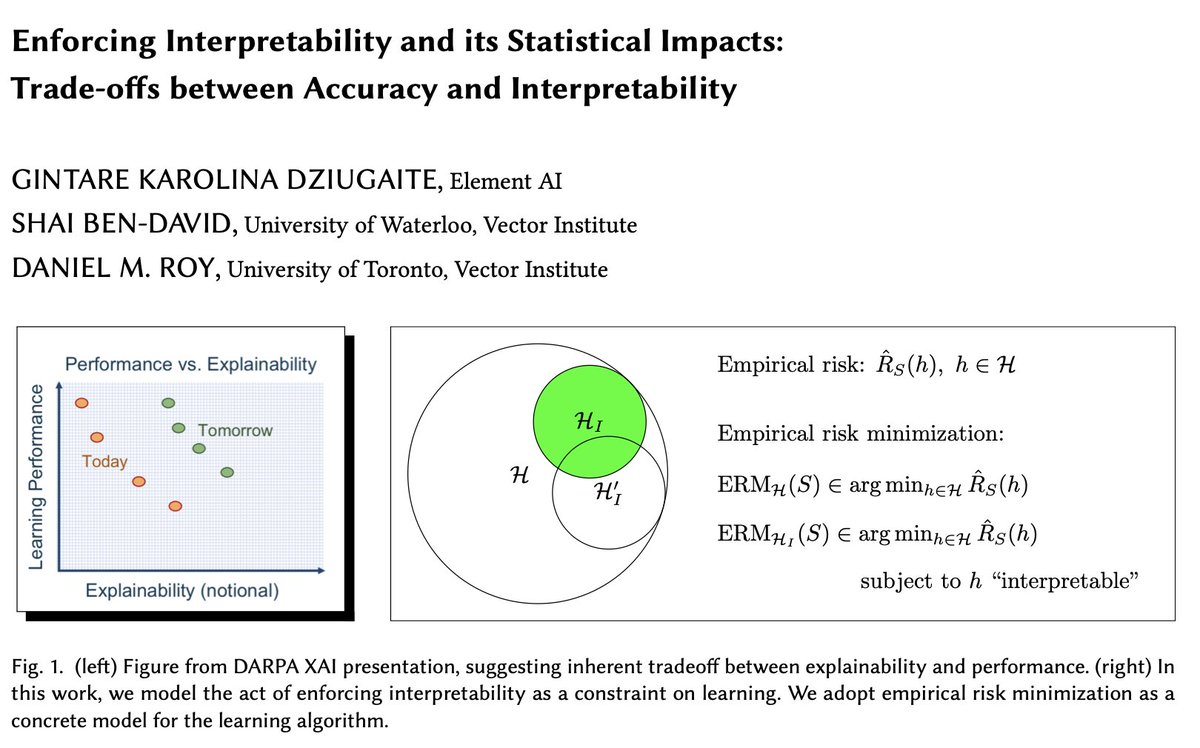

What's the "tradeoff" between interpretability & accuracy? Unfortunately, no one agrees on what's "interpretable". To move the needle an inch, @KDziugaite, Shai Ben-David, and I propose to model the *act* of enforcing interpretability as constrained ERM. https://arxiv.org/abs/2010.13764

This paper seems to really irk and confuse reviewers. Many want us to define what interpretable means. Others don't understand how studying the effect of an abstract constraint could bear on interpretability because... interpretability is special?

From our perspective, there's something to be gained by reasoning about why one may or may not see a tradeoff when imposing interpretability. I think there's a more Bayesian story to tell here too about the application of black box machine learning tools and epistemic uncertainty.

Anyway, we are all interested to hear feedback and to see people help us build up some foundations.
