Excited to *finally* share an old paper w/ @seth_freedman and @nehamm!

http://goodman-bacon.com/pdfs/fgbh_medicaid_x-sec.pdf

Some folks say "Medicaid kills people" b/c they compare recipients to non-recipients while controlling for "a lot" of things. This is a bad idea.

Medicaid does not kill you.

@Avik, for example, made this argument forcefully in 2011 (and it got lots of political traction) based on a paper in @AnnalsofSurgery. That paper is called "Primary Payer Status *Affects* Mortality for Major Surgical Operations" (emphasis mine):

https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3071622/

It regressed in-hospital mortality on insurance dummies for a sample of surgery patients and controlled for "age, gender, income, geographic region, operation, and 30 comorbid conditions."
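
In symbols, that design looks roughly like the regression below. This is shorthand, not the paper's notation: it is written as a linear probability model for simplicity (the Annals paper may use a logit), and it assumes the insurance dummies are Medicaid, Medicare, and uninsured, with private insurance as the reference group.

$$
\text{Died}_i = \alpha + \beta\,\text{Medicaid}_i + \theta_1\,\text{Medicare}_i + \theta_2\,\text{Uninsured}_i + X_i'\gamma + \varepsilon_i
$$

Here $X_i$ holds age, gender, income, region, operation, and the 30 comorbidities. Reading $\beta$ as the causal effect of Medicaid requires $E[\varepsilon_i \mid \text{insurance dummies}_i, X_i] = 0$, i.e., that nothing left in $\varepsilon_i$ is related to who ends up on Medicaid.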

We ask whether a cross-sectional research design can recover a causal effect of Medicaid.

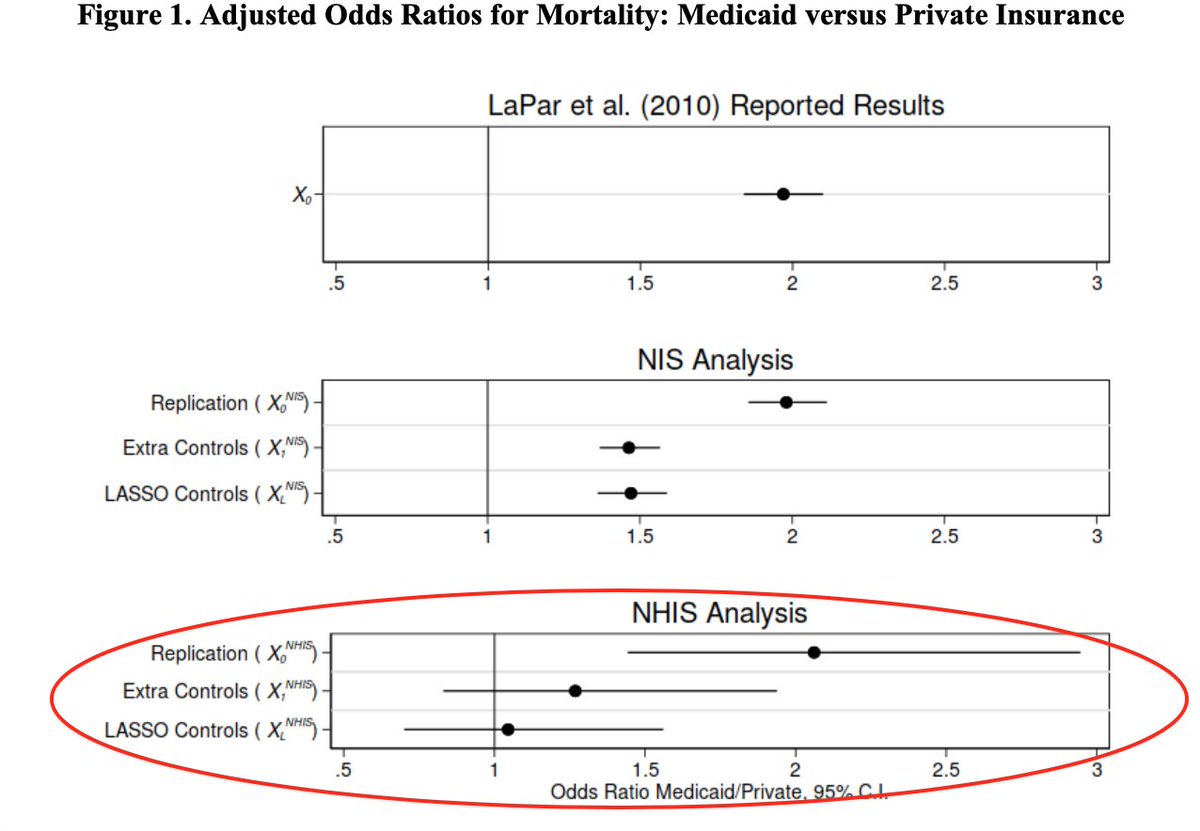

Step 1: Replicate. That was easy.

Step 2: See if the included controls balance the omitted ones. They don't: even after you control for "a lot," you've still got huge imbalance in race, severity, and SES.
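
A minimal Python sketch of that balance check, on simulated data with invented parameters (the variable names and data-generating process are hypothetical, not the paper's): regress an omitted variable, here a severity index, on the Medicaid dummy plus the included controls. If the controls did their job, the Medicaid coefficient would be close to zero.

```python
import numpy as np

# Hypothetical simulated data: every number here is made up for illustration.
rng = np.random.default_rng(0)
n = 100_000
zip_income_q = rng.integers(1, 5, size=n)            # included control: ZIP income quartile, 1-4
severity = rng.normal(size=n) - 0.3 * zip_income_q    # omitted: sicker patients in poorer ZIPs
# Selection into Medicaid depends on both poverty and (unobserved) severity
medicaid = (0.8 * severity - 0.3 * zip_income_q + rng.normal(size=n) > 0).astype(float)


def ols(y, *regressors):
    """OLS coefficients of y on an intercept plus the given regressors."""
    X = np.column_stack([np.ones(len(y)), *regressors])
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef


# Quartile dummies (quartile 1 is the reference), so the included control is saturated
quartile_dummies = [(zip_income_q == q).astype(float) for q in (2, 3, 4)]

# Balance check: regress the omitted variable on Medicaid plus the included controls.
# A coefficient far from 0 means the controls do NOT balance severity across groups.
gap = ols(severity, medicaid, *quartile_dummies)[1]
print(f"Medicaid minus non-Medicaid severity gap within ZIP quartile: {gap:.3f}")
```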

Step 3: Control for *that* stuff. The Medicaid/mortality association falls by half in the NIS and is gone in the National Health Interview Survey (NHIS), where we have way more covariates.
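
A toy Python version of that step, again on simulated data with made-up parameters (not the NIS or NHIS): Medicaid has no effect on death by construction, but sicker, poorer patients are more likely to be on it. Controlling only for ZIP-income quartiles leaves a positive Medicaid "effect"; adding the omitted severity indicator removes it. In this stylized setup the gap vanishes entirely, which is cleaner than anything real data will give you.

```python
import numpy as np

# Hypothetical simulation (invented parameters). Medicaid has NO effect on death here.
rng = np.random.default_rng(1)
n = 200_000
zip_income_q = rng.integers(1, 5, size=n)                                # included control, 1-4
severe = (rng.normal(size=n) - 0.3 * zip_income_q > 0.5).astype(float)   # omitted control
medicaid = (severe - 0.3 * zip_income_q + rng.normal(size=n) > 0).astype(float)
died = (rng.random(n) < 0.02 + 0.20 * severe).astype(float)              # depends on severity only


def ols(y, *regressors):
    """OLS coefficients of y on an intercept plus the given regressors."""
    X = np.column_stack([np.ones(len(y)), *regressors])
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef


quartile_dummies = [(zip_income_q == q).astype(float) for q in (2, 3, 4)]
naive = ols(died, medicaid, *quartile_dummies)[1]              # "control for things"
adjusted = ols(died, medicaid, *quartile_dummies, severe)[1]   # also control for severity
print(f"Medicaid coefficient, ZIP quartiles only: {naive:+.4f}")
print(f"Medicaid coefficient, plus severity:      {adjusted:+.4f}")
```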

(Even these X's don't balance *OTHER* X's in the NHIS... so much selection!)

Step 4: Do some sensitivity analysis à la Altonji/Elder/Taber.

A correlation of about 0.1 between unobservable selection into Medicaid and the unobservable determinants of mortality is enough to kill the association.

Maybe you have solid intuition for that, but I don't!
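
One way to get a feel for it is a toy simulation. The Python sketch below assumes a simple latent-index model (an illustrative setup with invented parameters, not the paper's model): Medicaid has zero true effect, the only observed control is a binary "poor" indicator, and the unobservables driving Medicaid and death have correlation rho. Any nonzero Medicaid coefficient is pure selection.

```python
import numpy as np

# Hypothetical latent-index simulation (illustrative numbers, not the paper's):
#   Medicaid = 1[-0.5 + 1.0*poor + u > 0],  Died = 1[-1.5 + 0.5*poor + e > 0],
#   corr(u, e) = rho.  Medicaid has NO causal effect on death by construction.
rng = np.random.default_rng(2)
n = 200_000
poor = rng.integers(0, 2, size=n).astype(float)  # observed control; binary, so fully saturated
z1, z2 = rng.normal(size=(2, n))                 # same draws reused for every rho


def coef_given_rho(rho):
    """OLS coefficient on Medicaid (controlling for poor) when corr(u, e) = rho."""
    u = z1
    e = rho * z1 + np.sqrt(1 - rho**2) * z2       # standard normal with corr(u, e) = rho
    medicaid = (-0.5 + 1.0 * poor + u > 0).astype(float)
    died = (-1.5 + 0.5 * poor + e > 0).astype(float)
    X = np.column_stack([np.ones(n), medicaid, poor])
    coef, *_ = np.linalg.lstsq(X, died, rcond=None)
    return coef[1]


for rho in (0.0, 0.1, 0.2, 0.4):
    print(f"rho = {rho:.1f}: Medicaid 'effect' on death = {coef_given_rho(rho):+.4f}")
```

At rho = 0 the coefficient is just sampling noise around zero; as rho grows, a spurious positive "effect" appears even though Medicaid does nothing in this model.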

For one thing, the determinants of Medicaid and mortality that we can see are incredibly positively correlated (that's what the program is for!). What gets you onto Medicaid (poverty and ill health) is also dangerous.

Not a stretch to think the same is true of unobservables.

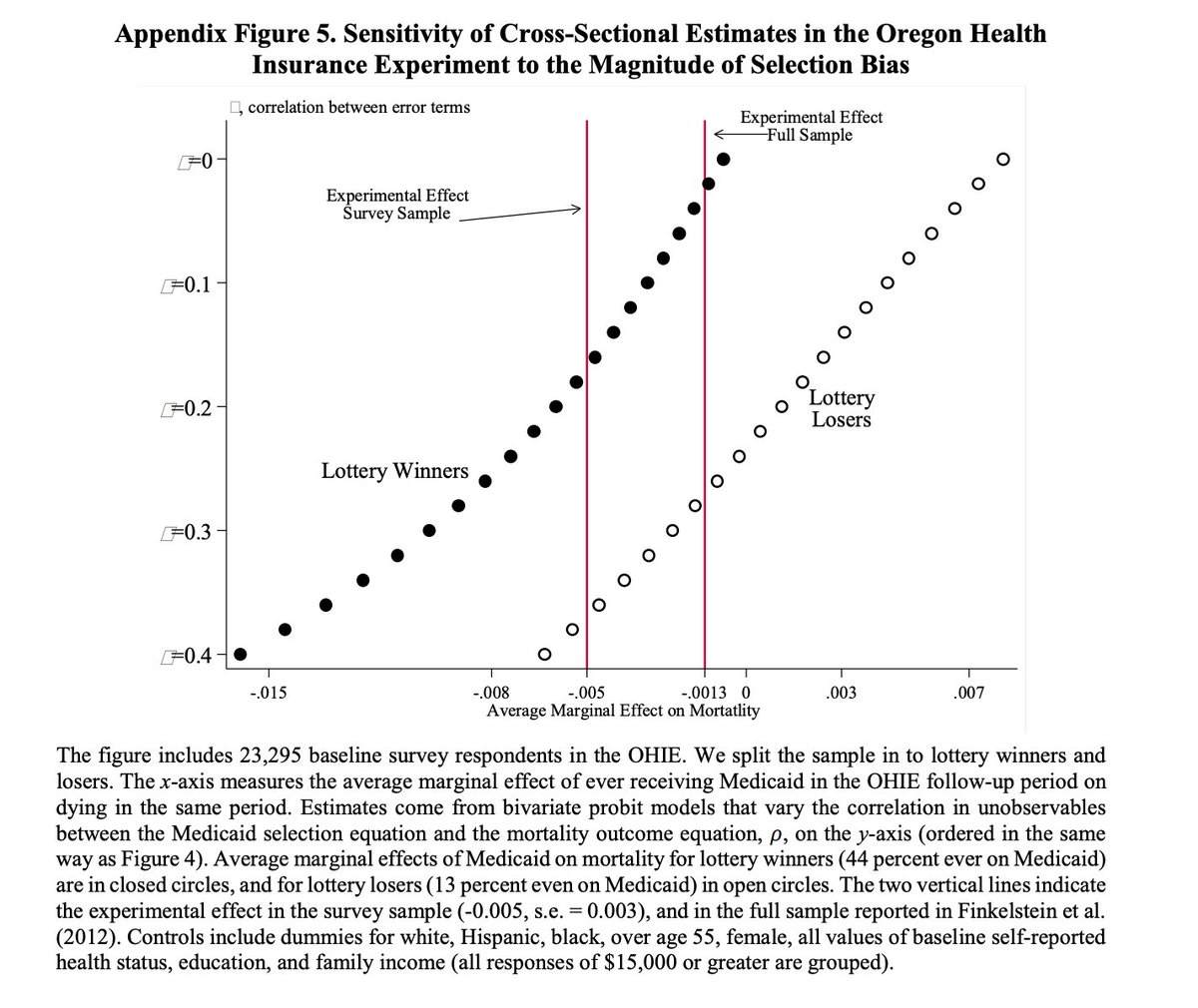

A really cool part is that we can estimate the degree of selection in the Oregon Health Insurance Experiment.

Knowing the experimental effect, we can see how much selection it takes to make the observational estimates equal the experimental one:

The "control for things" design assumes a correlation of 0 (the top dots), and you get null or positive mortality "effects" that way. For lottery winners you need a correlation of about 0.17, and for losers about 0.37, to get the experimental effect back. Note 0.37 > 0.17 > 0.1.

NB: you have to do this separately for winners and losers b/c the selection threshold is way way different.

It's so cool to me that you get less serious selection for the winners [44% on Medicaid] than for the losers [13% on Medicaid].
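
To make the "how much selection does it take" logic concrete, here is a stripped-down Python sketch: a bivariate probit with no covariates and the error correlation rho held fixed, in the spirit of the Altonji/Elder/Taber exercise. With no covariates the model is exactly identified for each fixed rho, so the implied Medicaid effect can be backed out from three observed rates, and then we search for the rho that reproduces an experimental effect. The function names and all input numbers are hypothetical; this is not the paper's specification, code, or estimates.

```python
import math

from scipy.integrate import quad
from scipy.optimize import brentq
from scipy.special import ndtr, ndtri  # standard normal CDF and its inverse


def bvn_cdf(x, y, r):
    """P(Z1 <= x, Z2 <= y) for a standard bivariate normal with correlation r, |r| < 1.
    Integrates phi(t) * P(Z1 <= x | Z2 = t) over t <= y."""
    s = math.sqrt(1.0 - r * r)

    def integrand(t):
        return math.exp(-0.5 * t * t) / math.sqrt(2.0 * math.pi) * ndtr((x - r * t) / s)

    val, _ = quad(integrand, -math.inf, y)
    return val


def implied_effect(p_m, p_d1, p_d0, rho):
    """Average effect of Medicaid on Pr(death) implied by a NO-covariate bivariate
    probit with the error correlation fixed at rho:
        Medicaid = 1[c + u > 0],  Died = 1[d + a*Medicaid + e > 0],  corr(u, e) = rho.
    p_m: Medicaid share; p_d1, p_d0: death rates with / without Medicaid."""
    c = ndtri(p_m)
    p10 = (1 - p_m) * p_d0   # Pr(Died=1, Medicaid=0) = Phi2(d, -c; -rho)
    p11 = p_m * p_d1         # Pr(Died=1, Medicaid=1) = Phi2(d + a, c; rho)
    d = brentq(lambda x: bvn_cdf(x, -c, -rho) - p10, -8.0, 8.0)
    s = brentq(lambda x: bvn_cdf(x, c, rho) - p11, -8.0, 8.0)  # s = d + a
    return ndtr(s) - ndtr(d)


# Hypothetical inputs, NOT estimates from the paper or the Oregon experiment
p_m, p_d1, p_d0 = 0.30, 0.05, 0.03
experimental_effect = -0.005

# "Control for things" answer: rho = 0 gives back the raw gap p_d1 - p_d0
print(f"implied effect at rho = 0: {implied_effect(p_m, p_d1, p_d0, 0.0):+.4f}")

# How much selection does it take to reproduce the experimental effect?
rho_star = brentq(lambda r: implied_effect(p_m, p_d1, p_d0, r) - experimental_effect,
                  0.0, 0.9, xtol=1e-8)
print(f"rho needed to match the experiment: {rho_star:.3f}")
```

In this sketch the Medicaid share `p_m` enters the inversion directly, which is one way to see why the calibration has to be run separately for groups with very different take-up, like winners and losers.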

So why do controls do so badly? For one, they're not good controls. "Income" is quartiles of ZIP income, not patient income! Even the NHIS income measure is in bins. SAD!
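
Why a coarse income proxy is a weak control can be sketched the same way. In this hypothetical Python simulation (invented parameters, not the paper's data), patient income drives both Medicaid and death, Medicaid itself does nothing, and "ZIP income" is patient income plus noise, cut into quartiles.

```python
import numpy as np

# Hypothetical simulation: patient income drives both Medicaid and death,
# and Medicaid has NO true effect. "ZIP income" is a noisy area-level proxy.
rng = np.random.default_rng(3)
n = 400_000
income = rng.uniform(-1, 1, size=n)                   # patient income (standardized, bounded)
zip_income = income + rng.normal(size=n)              # area income: noisy proxy
cuts = np.quantile(zip_income, [0.25, 0.5, 0.75])
zip_q = np.digitize(zip_income, cuts)                 # ZIP income quartile, coded 0-3
medicaid = (-1.5 * income + rng.normal(size=n) > 0).astype(float)
died = (rng.random(n) < 0.10 - 0.05 * income).astype(float)  # risk falls with income


def medicaid_coef(*controls):
    """OLS coefficient on Medicaid from regressing death on Medicaid plus controls."""
    X = np.column_stack([np.ones(n), medicaid, *controls])
    coef, *_ = np.linalg.lstsq(X, died, rcond=None)
    return coef[1]


coarse = medicaid_coef(*[(zip_q == k).astype(float) for k in (1, 2, 3)])  # quartile dummies
fine = medicaid_coef(income)                                               # actual patient income
print(f"controlling for ZIP-income quartiles: {coarse:+.4f}")
print(f"controlling for patient income:       {fine:+.4f}")
```

Within a ZIP-income quartile, Medicaid enrollees are still poorer than non-enrollees, so the leftover income gap shows up as a Medicaid "effect"; controlling for the income that actually drives both removes it in this toy setup.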

Those comorbidities, too, are *not* meant to capture underlying severity... and they don't.

TBH, even if the controls were "good," we have plenty of economic theory about insurance and private information telling us it's not reasonable to just control for things. See @haroldpollack, @afrakt, @aaronecarroll, and Reinhardt:

https://www.nejm.org/doi/10.1056/NEJMp1103168?url_ver=Z39.88-2003&rfr_id=ori:rid:crossref.org&rfr_dat=cr_pub%20%200pubmed

That's why good research designs are so important, and they show the opposite!

@smilleralert and @LaurawherryR: https://twitter.com/smilleralert/status/1153274124401348608?s=20

Borgschulte and Vogler: https://www.sciencedirect.com/science/article/abs/pii/S0167629619306228?via%3Dihub

@jacobsgoldin et al. (not Medicaid, but comparable pop): https://twitter.com/JohnHolbein1/status/1309469502283882497?s=20

Our paper is headed for a JOLE issue in honor of John DiNardo, who, if nothing else, delighted in fighting about policy-relevant research design issues. 💔