New paper!

Mapping Machine-Learned Physics into a Human-Readable Space (https://arxiv.org/abs/2010.11998)

Led by Taylor Faucett, with Jesse Thaler

This paper asks: can we translate a black-box NN into human language?

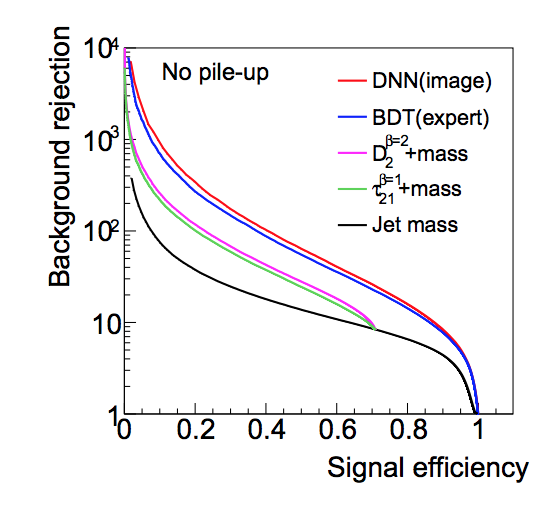

We started from a paper from a few years ago that showed a CNN outperforming a combination of human-designed observables:

(red vs blue: the gap is small but real)

Our question was: what is the NN doing, and can we understand it?

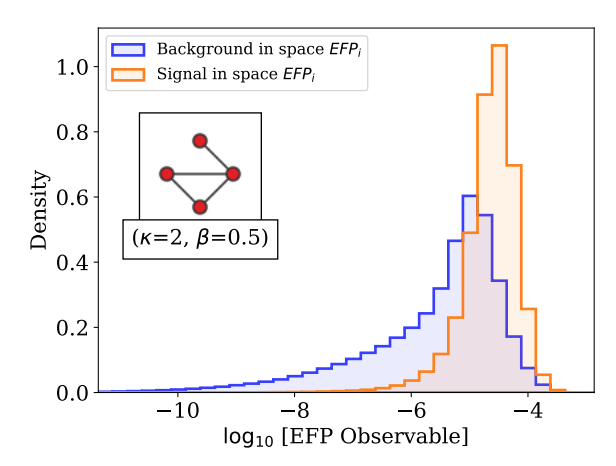

We defined a space of possible NEW observables: energy flow polynomials (EFPs).

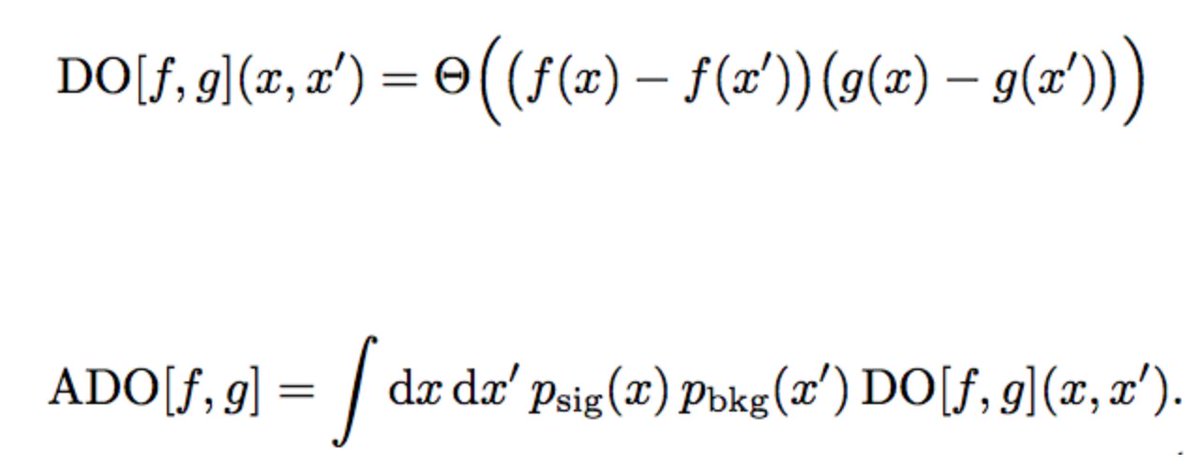
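
For readers who haven’t met EFPs: each one is labeled by a multigraph G with N vertices. You sum over every way of assigning jet particles to the vertices. Each vertex contributes a momentum fraction z_i = pT_i / Σ pT, and each edge contributes an angular factor θ_ij: EFP_G = Σ_{i_1…i_N} z_{i_1} ⋯ z_{i_N} ∏_{(k,l)∈G} θ_{i_k i_l}. Below is a brute-force sketch for a single jet. It assumes θ_ij = ΔR_ij^β in (rapidity, azimuth) and adds an energy exponent `kappa` (z_i → z_i^κ, where κ = 1 gives the standard IRC-safe EFPs). The function name and signature are our own, not the paper’s. For real work, a dedicated package such as `energyflow` uses much faster algorithms.

```python
import itertools

import numpy as np


def efp(pt, y, phi, edges, n_vertices, kappa=1.0, beta=1.0):
    """Brute-force energy flow polynomial for a single jet (illustration only).

    pt, y, phi : per-particle transverse momentum, rapidity, azimuth.
    edges      : list of (k, l) vertex pairs of the multigraph; repeats allowed.
    n_vertices : number of vertices N in the graph.
    kappa      : energy exponent (assumed generalization; kappa = 1 is the standard EFP).
    beta       : angular exponent applied to the pairwise distance DeltaR.
    """
    pt = np.asarray(pt, dtype=float)
    y = np.asarray(y, dtype=float)
    phi = np.asarray(phi, dtype=float)

    # Momentum fractions z_i, raised to kappa.
    z = (pt / pt.sum()) ** kappa

    # Pairwise theta_ij = DeltaR_ij ** beta, with Delta phi wrapped into [-pi, pi).
    dy = y[:, None] - y[None, :]
    dphi = np.mod(phi[:, None] - phi[None, :] + np.pi, 2 * np.pi) - np.pi
    theta = np.sqrt(dy**2 + dphi**2) ** beta

    # Sum over every assignment of particles to the N vertices (M**N terms, so keep jets small).
    total = 0.0
    for idx in itertools.product(range(len(pt)), repeat=n_vertices):
        term = np.prod(z[list(idx)])
        for k, l in edges:
            term *= theta[idx[k], idx[l]]
        total += term
    return float(total)
```

With κ = 1, the one-vertex graph with no edges just returns Σ z_i = 1. The two-vertex, one-edge graph with β = 2 is, at small angles, proportional to the squared jet mass over pT². The cost grows as M^N for M particles, so this is only for building intuition.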

Then we defined a mapping between the NN and these observables: a new metric, ADO, which equals 1 when two networks order every pair of events the same way.
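
Here’s a minimal sketch of how a pairwise-ordering metric like ADO can be computed from two classifiers’ scores. It rests on three assumptions of ours: pairs are formed from one signal and one background event, ties count as disagreement, and the function name is hypothetical.

```python
import numpy as np


def ado(f_sig, f_bkg, g_sig, g_bkg):
    """Average decision ordering between classifiers f and g (sketch).

    f_sig, f_bkg : scores of classifier f on signal and background events.
    g_sig, g_bkg : scores of classifier g on the same events.
    Returns the fraction of (signal, background) pairs that f and g order the same way.
    """
    # Score differences for every (signal, background) pair, shape (n_sig, n_bkg).
    df = np.asarray(f_sig, dtype=float)[:, None] - np.asarray(f_bkg, dtype=float)[None, :]
    dg = np.asarray(g_sig, dtype=float)[:, None] - np.asarray(g_bkg, dtype=float)[None, :]
    # Decision ordering per pair: 1 if both differences share a sign, else 0 (ties give 0).
    do = (df * dg > 0).astype(float)
    return float(do.mean())
```

Only the sign of score differences matters. So any monotonic rescaling of a classifier (applying exp or a sigmoid, say) leaves ADO at exactly 1 against the original. Flipping the sign of every score drives it to 0 (absent ties). For comparison, a single classifier’s AUC is the fraction of such pairs it ranks signal above background (ignoring ties). The sketch builds an n_sig × n_bkg matrix, so for large samples you’d subsample pairs.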

Using ADO to guide a search through that space, we found an observable which, when we added it to the network, closed the gap!

Interestingly, this new observable is NOT theoretically well behaved: it’s IRC unsafe (not infrared and collinear safe). And no IRC-safe observable closes the gap!

So what’s the network doing?

Exploiting IRC-unsafe information, which is not something you want to rely on!
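
To make “IRC unsafe” concrete, here’s a toy numerical check (our illustration, not code from the paper). Collinear safety requires that an observable not change when one particle is replaced by two exactly collinear particles sharing its momentum. The sketch tests this on the one-edge correlator Σ_ij z_i^κ z_j^κ ΔR_ij^β. The `kappa` exponent and the helper names are assumptions for illustration.

```python
import numpy as np


def two_point(pt, y, phi, kappa=1.0, beta=1.0):
    """Sum over i, j of z_i**kappa * z_j**kappa * DeltaR_ij**beta for one jet (toy observable)."""
    pt, y, phi = (np.asarray(a, dtype=float) for a in (pt, y, phi))
    z = (pt / pt.sum()) ** kappa
    # Delta phi wrapped into [-pi, pi), then DeltaR raised to beta.
    dphi = np.mod(phi[:, None] - phi[None, :] + np.pi, 2 * np.pi) - np.pi
    theta = np.hypot(y[:, None] - y[None, :], dphi) ** beta
    return float(z @ theta @ z)


def collinear_split(pt, y, phi, i, frac):
    """Replace particle i by two exactly collinear particles carrying frac and 1 - frac of its pT."""
    pt, y, phi = list(pt), list(y), list(phi)
    p = pt[i]
    pt[i] = frac * p
    pt.append((1.0 - frac) * p)
    y.append(y[i])
    phi.append(phi[i])
    return pt, y, phi


# A made-up three-particle jet, with particle 0 split 50/50.
pt, y, phi = [1.0, 2.0, 1.0], [0.0, 0.3, -0.2], [0.0, 0.1, 0.4]
split = collinear_split(pt, y, phi, i=0, frac=0.5)

for kappa in (1.0, 2.0):
    before = two_point(pt, y, phi, kappa=kappa)
    after = two_point(*split, kappa=kappa)
    print(f"kappa={kappa}: before={before:.4f} after={after:.4f}")
```

With κ = 1 the two values agree, because the split particle’s weight is linear in its momentum. With κ = 2 they differ, so that observable is collinear unsafe. A zero-pT particle probes infrared safety in the same way: with κ = 1 it contributes nothing, while κ ≤ 0 gives it finite or infinite weight.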

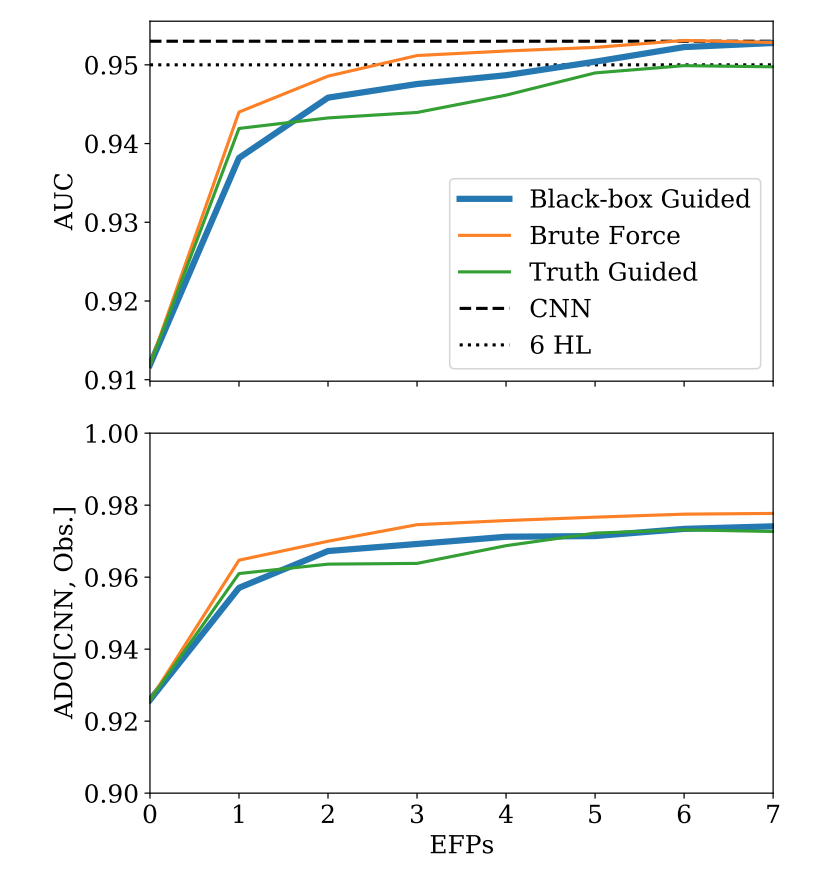

We also set the search loose, starting from a minimal set of observables, to see what it would find. We used ADO to quickly find good observables, getting a 300x speedup over a brute-force search.
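
Here’s a rough sketch of one step of an ADO-guided search. It reflects our reading of the idea, not the paper’s code. The function names, the (signal, background) pairing, trying both signs of each candidate, and the ties-as-disagreement convention are all assumptions. The step finds the pairs that the current model orders differently from the CNN. It then picks the candidate observable whose ordering agrees with the CNN most often on exactly those pairs. In a full loop you would add the winner to the model’s inputs, retrain, and repeat. Scoring a candidate needs only pairwise comparisons rather than training a network, which is one way a guided search can save time over brute force.

```python
import numpy as np


def pair_agreement(f_sig, f_bkg, g_sig, g_bkg):
    """1.0 where f and g order a (signal, background) pair the same way, else 0.0 (ties give 0)."""
    df = np.asarray(f_sig, dtype=float)[:, None] - np.asarray(f_bkg, dtype=float)[None, :]
    dg = np.asarray(g_sig, dtype=float)[:, None] - np.asarray(g_bkg, dtype=float)[None, :]
    return (df * dg > 0).astype(float)


def select_next_observable(nn, model, candidates):
    """One step of a hypothetical ADO-guided greedy search.

    nn, model  : (sig_scores, bkg_scores) for the target network and the current model.
    candidates : dict mapping observable name -> (sig_values, bkg_values).
    Returns (best_name, best_score); best_name is None if the model already matches the NN.
    """
    # Pairs the current model orders differently from the target network.
    disagree = pair_agreement(*nn, *model) == 0.0
    if not disagree.any():
        return None, 1.0

    best_name, best_score = None, -1.0
    for name, (c_sig, c_bkg) in candidates.items():
        c_sig = np.asarray(c_sig, dtype=float)
        c_bkg = np.asarray(c_bkg, dtype=float)
        # An observable may be anti-correlated with the NN, so score both signs.
        score = max(
            pair_agreement(*nn, c_sig, c_bkg)[disagree].mean(),
            pair_agreement(*nn, -c_sig, -c_bkg)[disagree].mean(),
        )
        if score > best_score:
            best_name, best_score = name, float(score)
    return best_name, best_score
```

Restricting the score to the disagreeing pairs is the design choice that matters here. A candidate that merely re-orders pairs the model already gets right adds nothing, so it should not be rewarded.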

Aaaaaaand, it matched the CNN using just 7 observables!

The lesson is: use powerful deep nets to measure the amount of info in your data, but always translate it back to human space before using it.

This method should work for any black-box network. More applications coming in follow-up papers soon!
