this Tesla SUV ran into a traffic barrier at 70 mph while on Autopilot. how could this happen? there are 3 major contributing causes, and they're *fascinating*

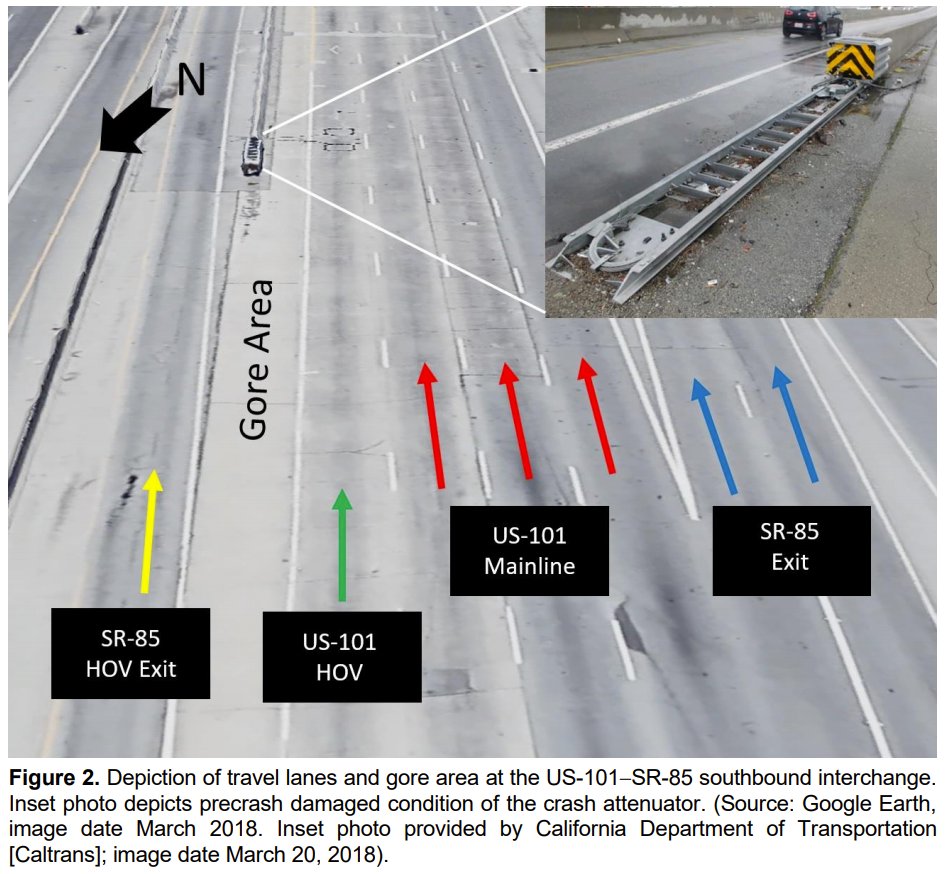

before we dig into the causes, let's set the scene: the southbound 101 freeway at its interchange with 85. there is a left exit ramp here so commuter-lane traffic can get to the 85 southbound commuter lane.

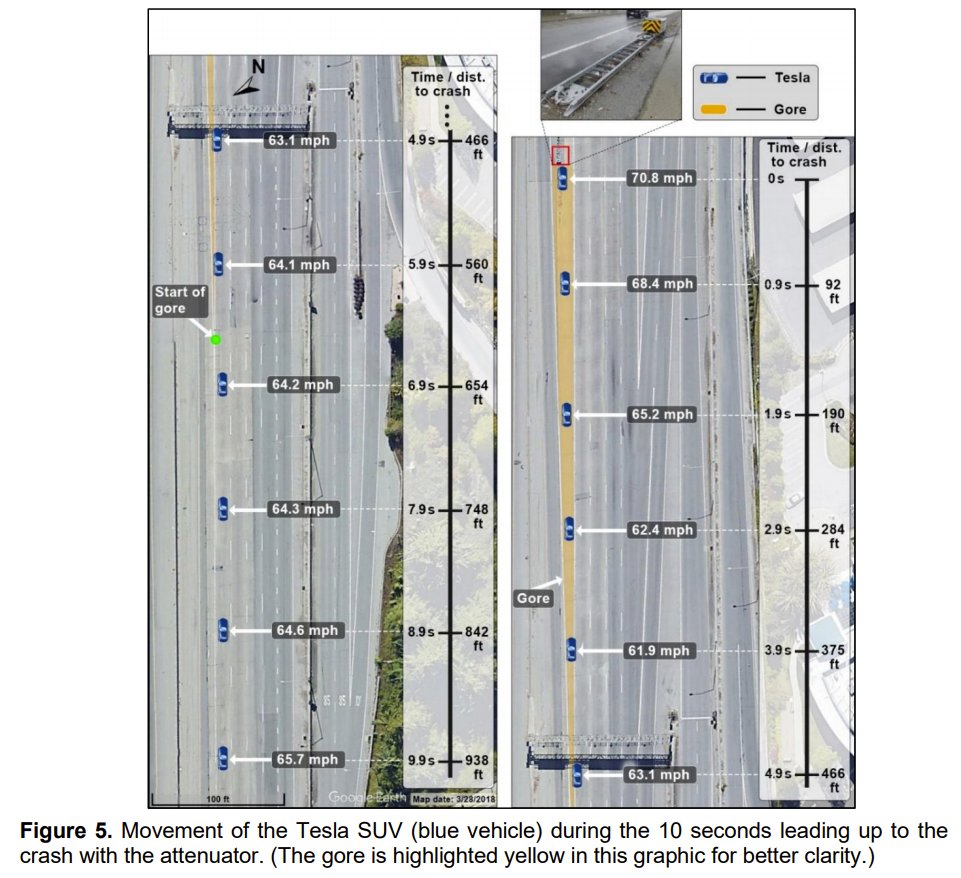

the Tesla was in the commuter lane, then departed that lane and headed into the strip of roadway (called the "gore") leading up to the median, crashing into the barrier.

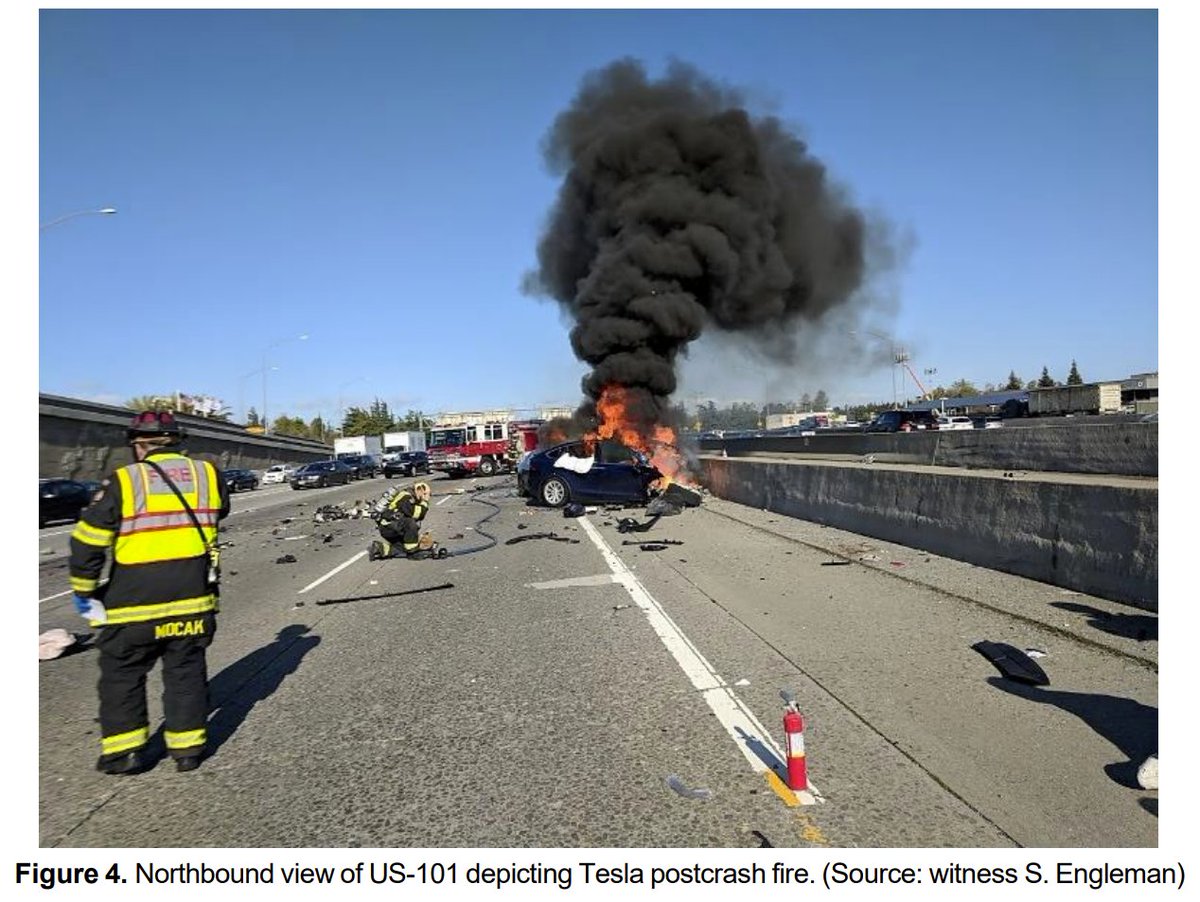

the crash ripped off the front half of the Tesla, which started to burn. nearby drivers stopped to pull the driver out.

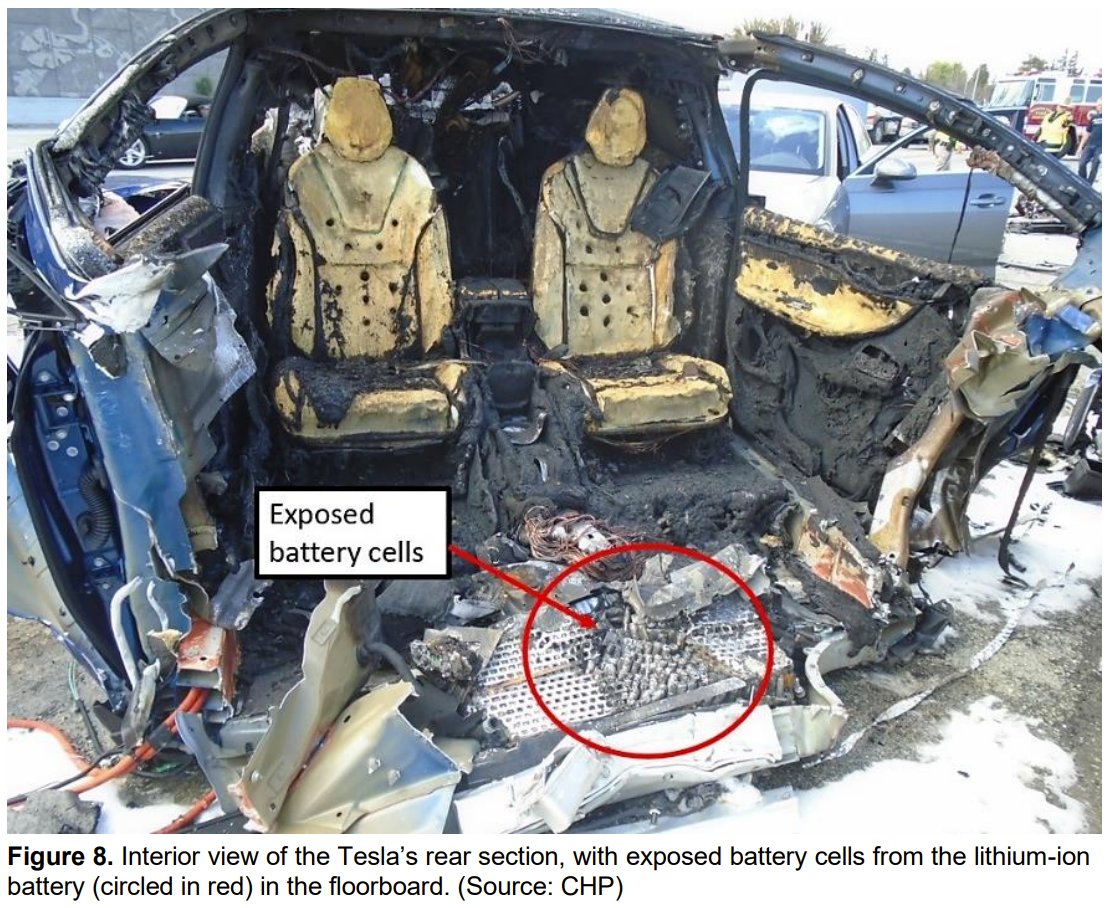

the fire got worse because the emergency crews had trouble putting it out (what with all those lithium-ion batteries involved), and they had to call Tesla for specific instructions.

sadly, the driver died a few hours later of his injuries. his Tesla caught fire *again* a week later while in the impound facility and had to be extinguished again.

let's take a look at the first cause. a *crash attenuator* is a mechanical device that compresses when hit in a head-on collision. it absorbs and dissipates energy from the crash to slow the vehicle to a stop.

crash attenuators are really amazing devices that save lives all the time. the one installed here is the SCI Smart Cushion 100GM.

https://hillandsmith.com/products/smart-cushion/

SCI has some really neat videos showing just how well they work. here is a head-on collision at 60 mph. if this were a real human, she would definitely sustain injuries, but she would likely survive. https://www.youtube.com/watch?v=2Q7lwAoo1GY

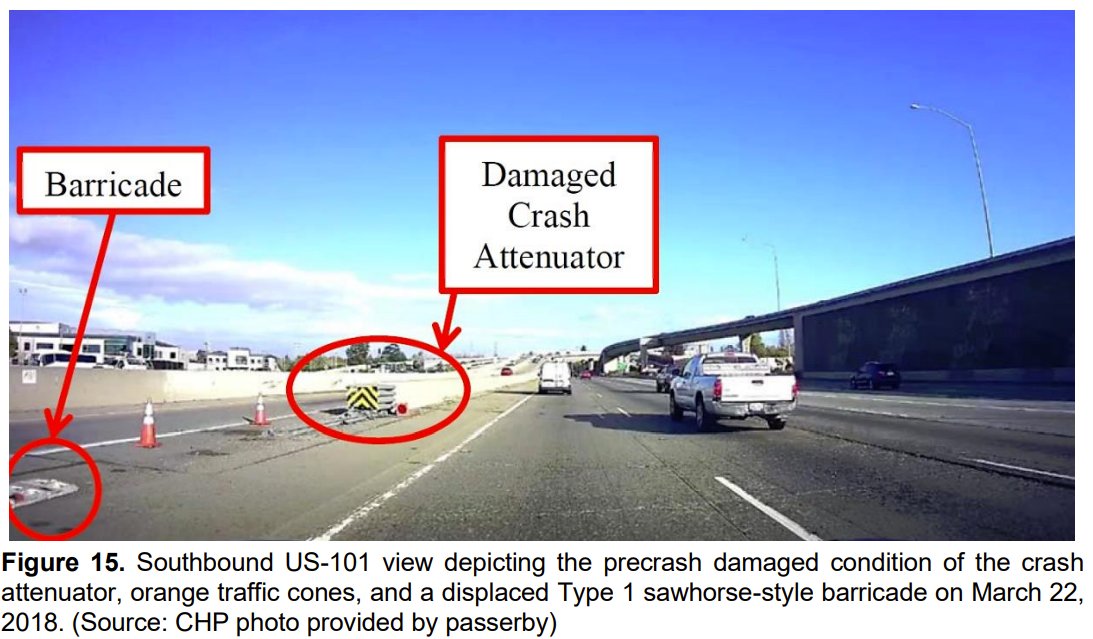

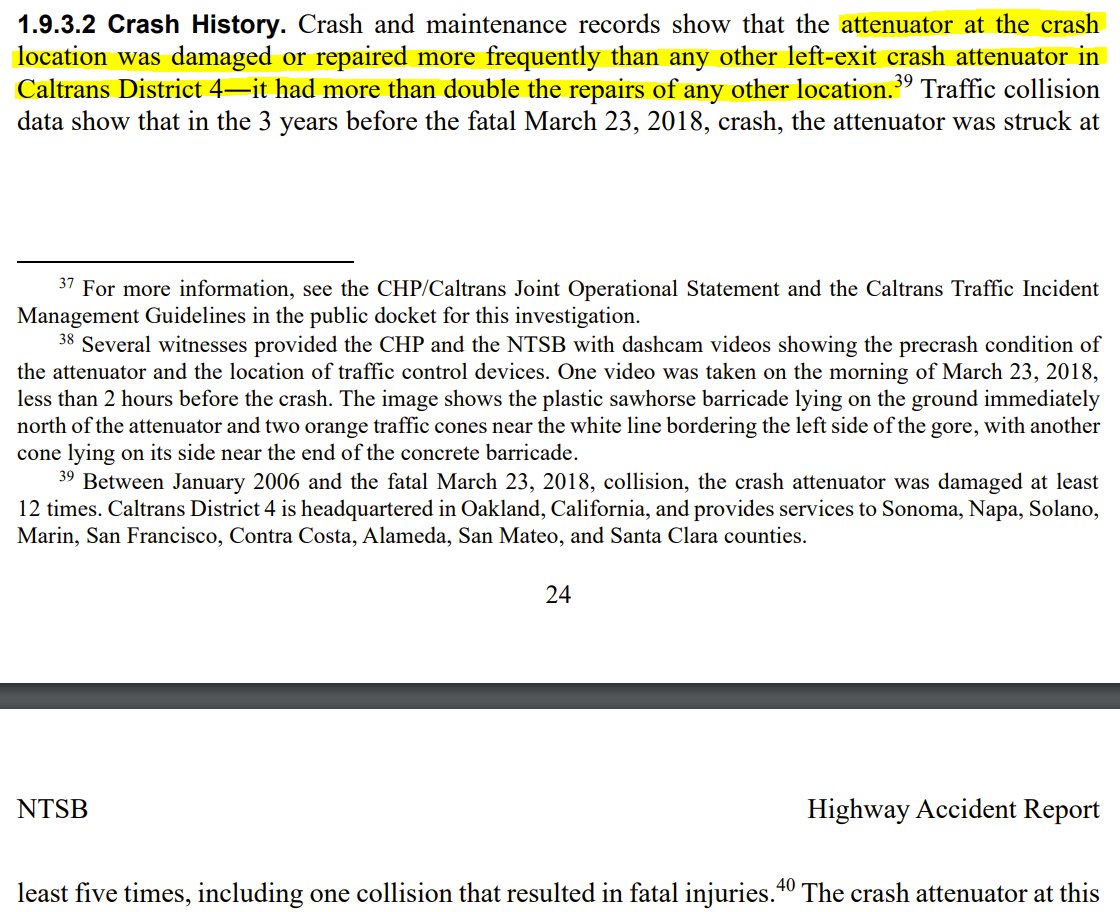
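a quick back-of-the-envelope check on why speed matters so much here: kinetic energy grows with the *square* of speed, so the ~70 mph in this crash carries noticeably more energy than the 60 mph in that test video. the sketch below assumes a hypothetical 2000 kg vehicle and a hypothetical 3 m stopping distance; those are made-up round numbers, not specs for the Tesla or the Smart Cushion. the 70/60 energy ratio doesn't depend on either assumption.

```python
# back-of-the-envelope crash energy: 60 mph test vs ~70 mph crash.
# ASSUMPTION: the vehicle mass and stopping distance used below are
# hypothetical round numbers, not specs for any real vehicle or attenuator.

MPH_TO_MS = 0.44704  # 1 mph = 0.44704 m/s (exact)
G = 9.80665          # standard gravity, m/s^2


def kinetic_energy_j(mass_kg: float, speed_mph: float) -> float:
    """kinetic energy E = 0.5 * m * v^2, in joules."""
    v = speed_mph * MPH_TO_MS
    return 0.5 * mass_kg * v ** 2


def avg_decel_g(speed_mph: float, stop_distance_m: float) -> float:
    """average deceleration, in g, needed to stop over a given distance.
    assumes constant deceleration, so v^2 = 2 * a * d."""
    v = speed_mph * MPH_TO_MS
    return v ** 2 / (2 * stop_distance_m) / G


if __name__ == "__main__":
    mass_kg = 2000.0  # hypothetical vehicle mass
    stop_m = 3.0      # hypothetical stopping distance inside the attenuator
    for mph in (60, 70):
        print(f"{mph} mph: {kinetic_energy_j(mass_kg, mph) / 1000:.0f} kJ, "
              f"~{avg_decel_g(mph, stop_m):.1f} g average over {stop_m} m")
    ratio = kinetic_energy_j(mass_kg, 70) / kinetic_energy_j(mass_kg, 60)
    print(f"70 mph carries {ratio:.2f}x the energy of 60 mph")
```

the ratio works out to (70/60)², about 1.36, so roughly 36% more energy for the attenuator to soak up, regardless of the car's mass.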

but something had gone wrong: the crash attenuator had already been hit 11 days earlier and had not been reset! CHP is supposed to notify the CA DOT when that happens, but they did not. instead, they put out some road cones and a sandwich-board-style barricade, which later fell over.

in addition, the road markings were ambiguous and faded. i used to drive this route every day, and this section of the freeway isn't in good shape: the roadbed is torn up by heavy trucks, and the stripes are faded and haven't been repainted in years.

in CA, left exits are not that common; many drivers have trouble navigating them if they're not familiar with the area. lots of people end up crossing over the solid white line at the last minute. in fact, this area almost looks like another lane!

after the accident, the CA DOT painted chevron markings to make it more obvious that this isn't a lane. still, every time i drive by, i see people cutting across this area.

turns out quite a few people have run into this particular attenuator. hopefully the newly painted chevrons will help drivers avoid this danger.

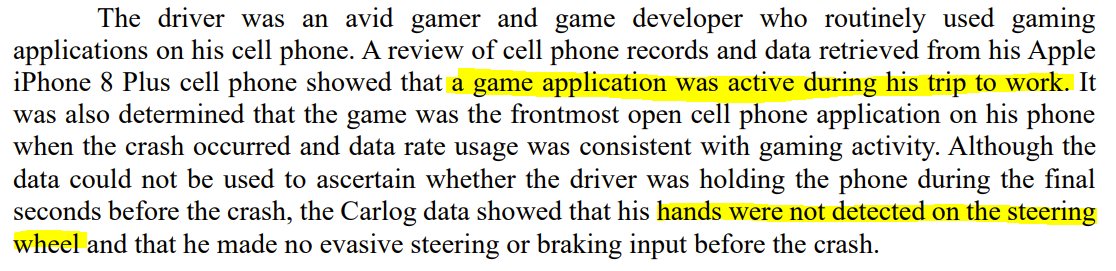

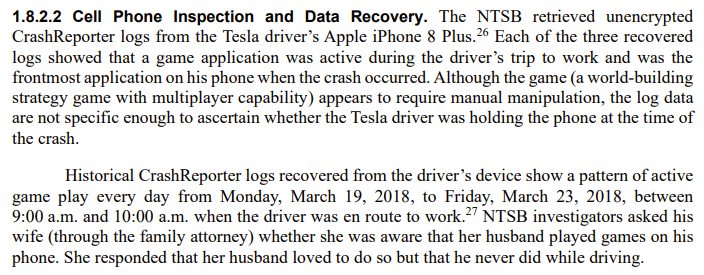

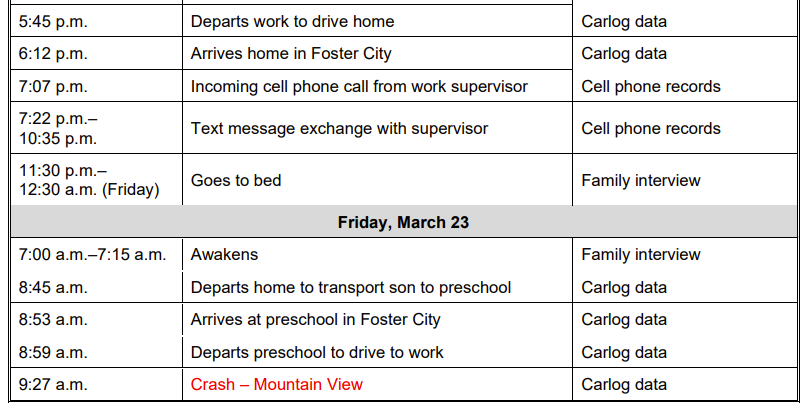

now let's look at the second cause: the driver was distracted by his cellphone. the NTSB investigators went through phone logs and found that he was playing a game and did not have his hands on the steering wheel for the 6 seconds leading up to the crash.
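to get a feel for how long 6 seconds is at freeway speed, here's the distance covered, assuming a constant ~70 mph (the speed in the headline; holding it constant is a simplification).

```python
# distance covered during a hands-off interval, assuming constant speed.
MPH_TO_MS = 0.44704  # 1 mph = 0.44704 m/s (exact)
M_PER_FT = 0.3048    # 1 ft = 0.3048 m (exact)


def distance_traveled_m(speed_mph: float, seconds: float) -> float:
    """distance = speed * time, assuming the speed doesn't change."""
    return speed_mph * MPH_TO_MS * seconds


if __name__ == "__main__":
    d = distance_traveled_m(70, 6)
    print(f"{d:.0f} m ({d / M_PER_FT:.0f} ft)")  # prints: 188 m (616 ft)
```

that's over 600 feet of freeway with nobody's hands on the wheel.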

the NTSB has been pushing hard for cellphone manufacturers to implement software lockouts to prevent people from using their phones while driving. statistics say that about 10% of drivers at any given moment are using their phone. 🤯

their argument is that CA has already banned phone use while driving and enforces the ban strictly, yet that has not deterred people from doing it.

a phone lockout is a solvable problem. you need to 1) detect whether the user is the driver or a passenger and 2) allow emergency use for drivers.

tricky, but solvable.
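here's a toy sketch of what the lockout *policy* could look like once you have those two pieces. everything in it is hypothetical: the `PhoneContext` fields are made-up signals, and actually producing `user_is_driver` reliably is the tricky part, which this sketch simply assumes away.

```python
from dataclasses import dataclass


@dataclass
class PhoneContext:
    # HYPOTHETICAL input signals; reliably producing them
    # (especially user_is_driver) is the hard part of the problem.
    vehicle_moving: bool
    user_is_driver: bool
    emergency_call: bool


def allow_phone_use(ctx: PhoneContext) -> bool:
    """hypothetical lockout policy: only drivers of moving vehicles are
    restricted, and even they can always place an emergency call."""
    if not ctx.vehicle_moving:
        return True  # vehicle not moving: no lockout
    if not ctx.user_is_driver:
        return True  # passengers keep full access
    return ctx.emergency_call  # driver in a moving car: emergencies only


if __name__ == "__main__":
    print(allow_phone_use(PhoneContext(True, True, False)))   # False: driver playing a game
    print(allow_phone_use(PhoneContext(True, True, True)))    # True: driver calling for help
    print(allow_phone_use(PhoneContext(True, False, False)))  # True: passenger
```

keeping the policy this small is deliberate: the rules themselves are easy, and all the real engineering effort goes into the detection step.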

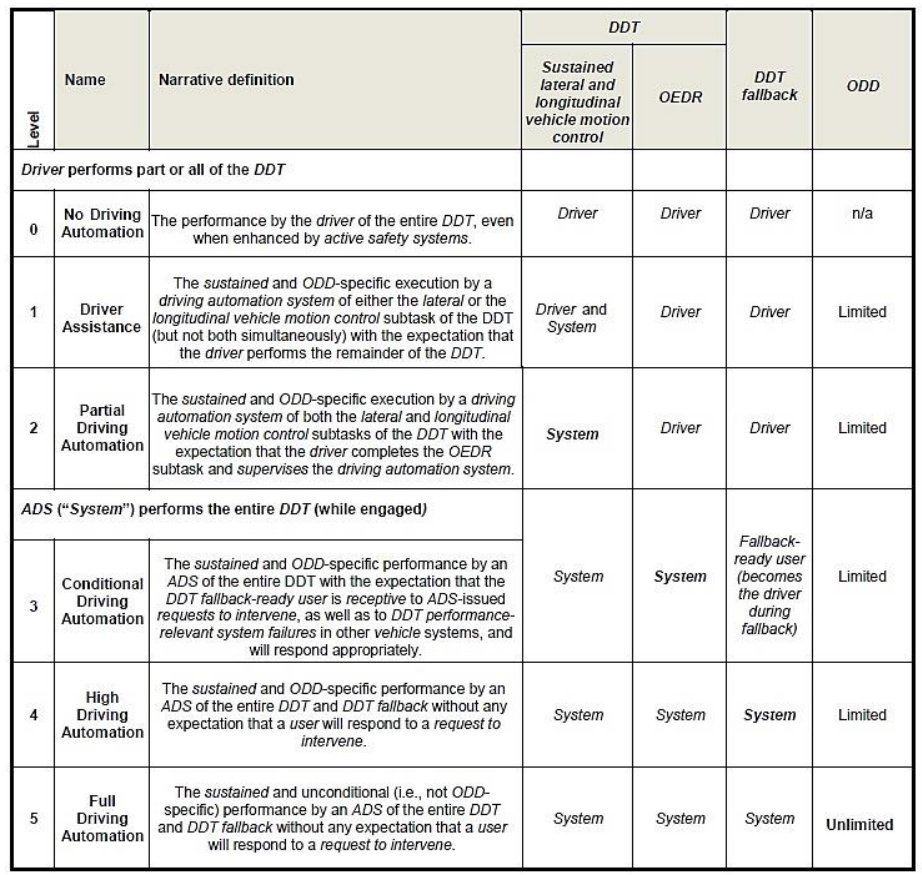

finally, the cause you've been waiting for: the Tesla Autopilot system. it's a level 2 self-driving system (see the chart below).

level 2 systems are not fully self-driving, despite Tesla's marketing, which implies the contrary.

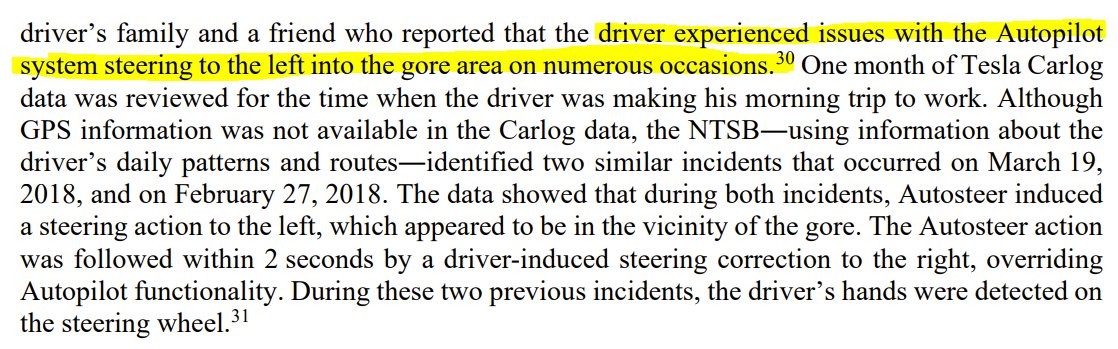

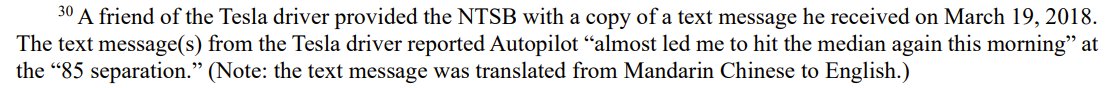
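assuming the chart above is the standard SAE J3016 taxonomy, here's a toy encoding of it. the point it captures: at levels 0 through 2, the human is still the driver and must constantly supervise the automation. the enum and function names are made up for illustration.

```python
from enum import IntEnum


class SAELevel(IntEnum):
    """SAE J3016 levels of driving automation (toy encoding)."""
    NO_AUTOMATION = 0
    DRIVER_ASSISTANCE = 1
    PARTIAL_AUTOMATION = 2  # where the thread places Autopilot
    CONDITIONAL_AUTOMATION = 3
    HIGH_AUTOMATION = 4
    FULL_AUTOMATION = 5


def driver_must_supervise(level: SAELevel) -> bool:
    """at levels 0-2 the human is still driving and must constantly
    supervise any driver-support features that are engaged."""
    return level <= SAELevel.PARTIAL_AUTOMATION


if __name__ == "__main__":
    for level in SAELevel:
        print(level.value, level.name, driver_must_supervise(level))
```

note that "no constant supervision" isn't the same as "no driver": at level 3 the human still has to take over when the system asks.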

in this case, the Autopilot system repeatedly got confused at this particular left exit. on several previous occasions, the driver had noticed Autopilot steering the car to the left, tracking the wrong white line, before he corrected it manually.

Autopilot is meant to augment the driver. in other words, when Autopilot is on, the driver's role changes to that of a more passive "supervisor" of the system. although they're not controlling the vehicle, they still have to be vigilant.

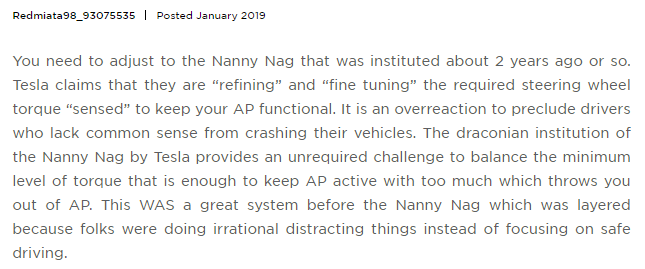

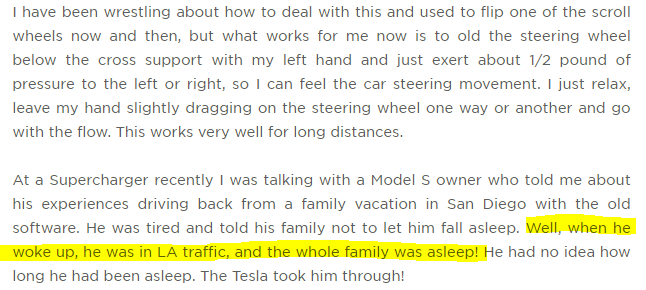

Tesla changed their firmware to try to improve the driver alerts, but the NTSB doesn't think it's enough. i agree with them. just look at what some crazy Tesla drivers do to disable the driver alerts, which some of them call "nanny nags." the attitude of these folks is terrifying.
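to make "driver alerts" concrete, here's a toy escalating hands-off-wheel alert. the thresholds and names are made up for illustration; this is not Tesla's firmware logic. with these particular numbers, a 6-second lapse like the one in this crash wouldn't trigger any alert at all, and anything that fools the hands-on sensor (like the hacks mentioned above) keeps the timer from ever climbing.

```python
from enum import Enum


class Alert(Enum):
    NONE = 0
    VISUAL = 1
    AUDIBLE = 2
    DISENGAGE = 3


# HYPOTHETICAL escalation thresholds, in seconds of hands-off time.
# made-up numbers for illustration only; not Tesla's actual values.
THRESHOLDS = [
    (30.0, Alert.DISENGAGE),
    (15.0, Alert.AUDIBLE),
    (10.0, Alert.VISUAL),
]


def alert_for(hands_off_s: float) -> Alert:
    """return the strongest alert whose threshold has been reached."""
    for threshold_s, alert in THRESHOLDS:  # ordered strongest to weakest
        if hands_off_s >= threshold_s:
            return alert
    return Alert.NONE


if __name__ == "__main__":
    for t in (6, 12, 20, 45):
        print(t, alert_for(t).name)
```

a timer like this can only nag about hands; it can't tell whether the supervisor's attention is actually on the road.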

furthermore, the NTSB believes that there is not enough federal regulation of self-driving cars, and that the National Highway Traffic Safety Administration is too hands-off in its regulatory approach.

i don't think the NTSB went far enough.

level 2 vehicle automation is intrinsically unsafe because it puts the human driver in a disadvantaged state: *passive vigilance*. let's dig into this a bit more, since passive vigilance has been a factor in numerous industrial accidents.

ok fine, Tesla has disclaimers in the manual. if someone crashes while on Autopilot, it's their fault for not following the instructions, not Autopilot's. but listen to this...

imagine your job is to watch a TV screen all day. if, on the screen, you see a pink gorilla, you have to push a button within 3 seconds. this only happens about once a month. how well do you think you would do? 🦍

most people would fail miserably, and this job would cause them no end of misery! it is really hard to maintain focus on something that doesn't involve interaction. there are studies showing increased stress from jobs like this.

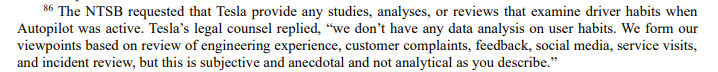

there's not much in the literature about passive vigilance specifically as it applies to level 2 self-driving vehicles. you would think this is something that Tesla would be *very* interested in examining, but...

when you're driving, you're engaged in an active behavior, and you can sustain that activity for a few hours (you'll still need breaks, for sure). but if you have to be passively vigilant, you will lose focus much faster. this system puts you, the human, at a disadvantage.

in other words, this system sets you up for failure!

for more info, check out the NTSB report: https://www.ntsb.gov/investigations/AccidentReports/Reports/HAR2001.pdf

usual disclaimer: i'm not a Professional Engineer. this is not my area of expertise. i'm mostly summarizing the NTSB report. take everything with a grain of salt. salt is not provided, you must bring your own, etc.

i was reading the "precrash activities" table in full engineering mode. had a little snarky smile when i saw "text message exchange with supervisor" from 7:22pm to 10:35pm.

but then i saw "transport son to preschool" and that broke my heart. 💔😭

someone pointed out that the NTSB held a public board meeting about this crash investigation: https://www.youtube.com/watch?v=w2VWAoLrzE0&feature=emb_logo
