A quick, non-technical explanation of Dropout.

(As easy as I could make it.)

Remember those two kids from school who sat together and copied from each other during exams?

They aced every test but were hardly brilliant, remember?

Eventually, the teacher had to set them apart. That was the only way to force them to learn.

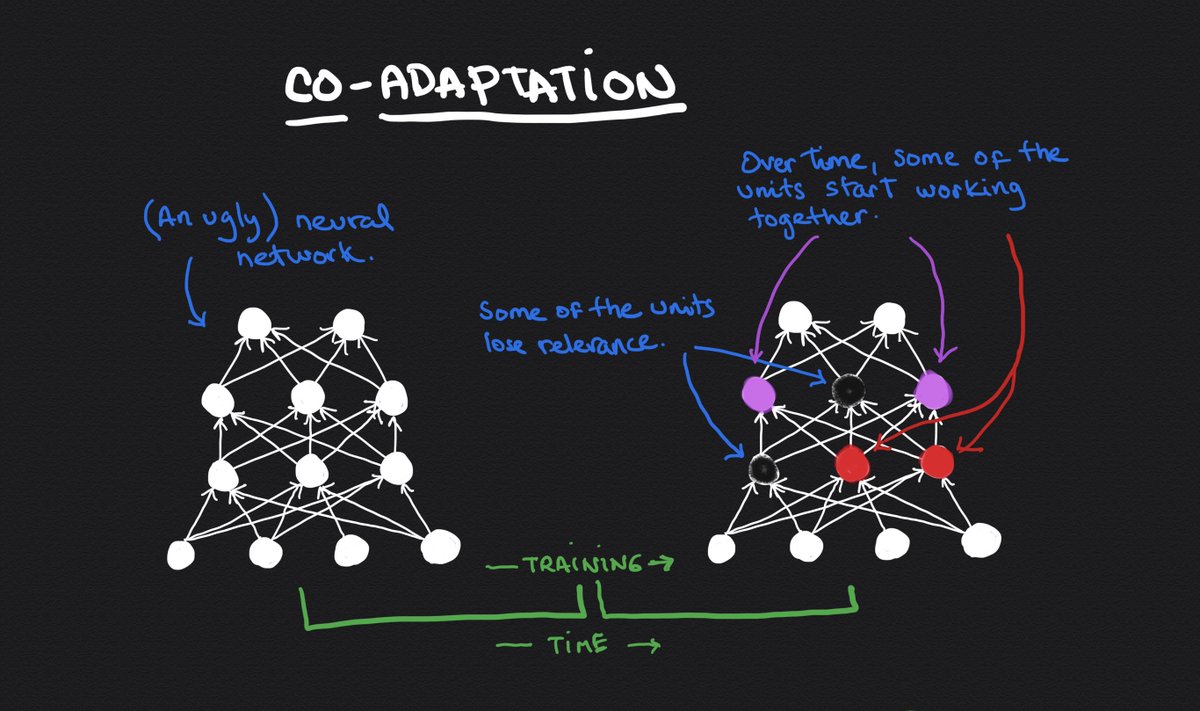

The same happens with neural networks.

Sometimes, a few hidden units create associations that, over time, provide most of the predictive power, forcing the network to ignore the rest.

This is called co-adaptation, and it prevents networks from generalizing appropriately.

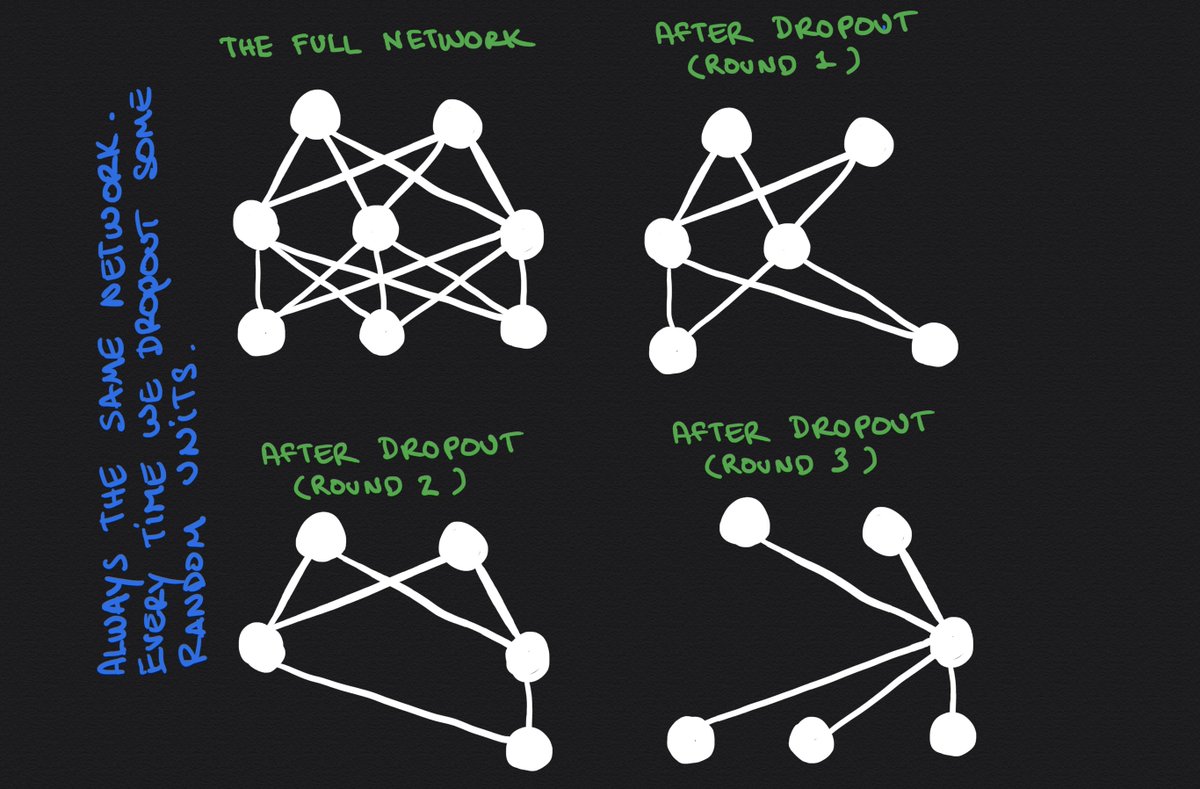

We can solve this problem the way teachers do: by breaking up the associations that prevent the network from learning.

This is what Dropout is for.

During training, Dropout randomly removes some of the units. This forces the network to spread what it learns across many units instead of relying on just a few.
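If you want to see the idea in code, here is a minimal plain-Python sketch. It assumes each unit is dropped independently with probability `rate`, and `sample_dropout_mask` is a hypothetical helper, not a library function. Every training step draws a brand-new mask, so a unit that is switched off in one step may be back in the next.

```python
import random


def sample_dropout_mask(num_units, rate, rng):
    """Hypothetical helper: 1 means the unit is kept, 0 means it is dropped this step."""
    # Each unit is dropped independently with probability `rate`.
    return [0 if rng.random() < rate else 1 for _ in range(num_units)]


rng = random.Random(0)  # fixed seed so the sketch is reproducible
for step in range(3):
    # A fresh mask every step: different units go missing each time.
    mask = sample_dropout_mask(num_units=8, rate=0.5, rng=rng)
    print(f"training step {step}: {mask}")
```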

Any given unit may or may not be present during a round of training.

Now every unit is on its own and can't rely on other units to do its work. Each one has to work harder by itself.

Dropout works very well, and it's one of the main techniques for reducing overfitting.

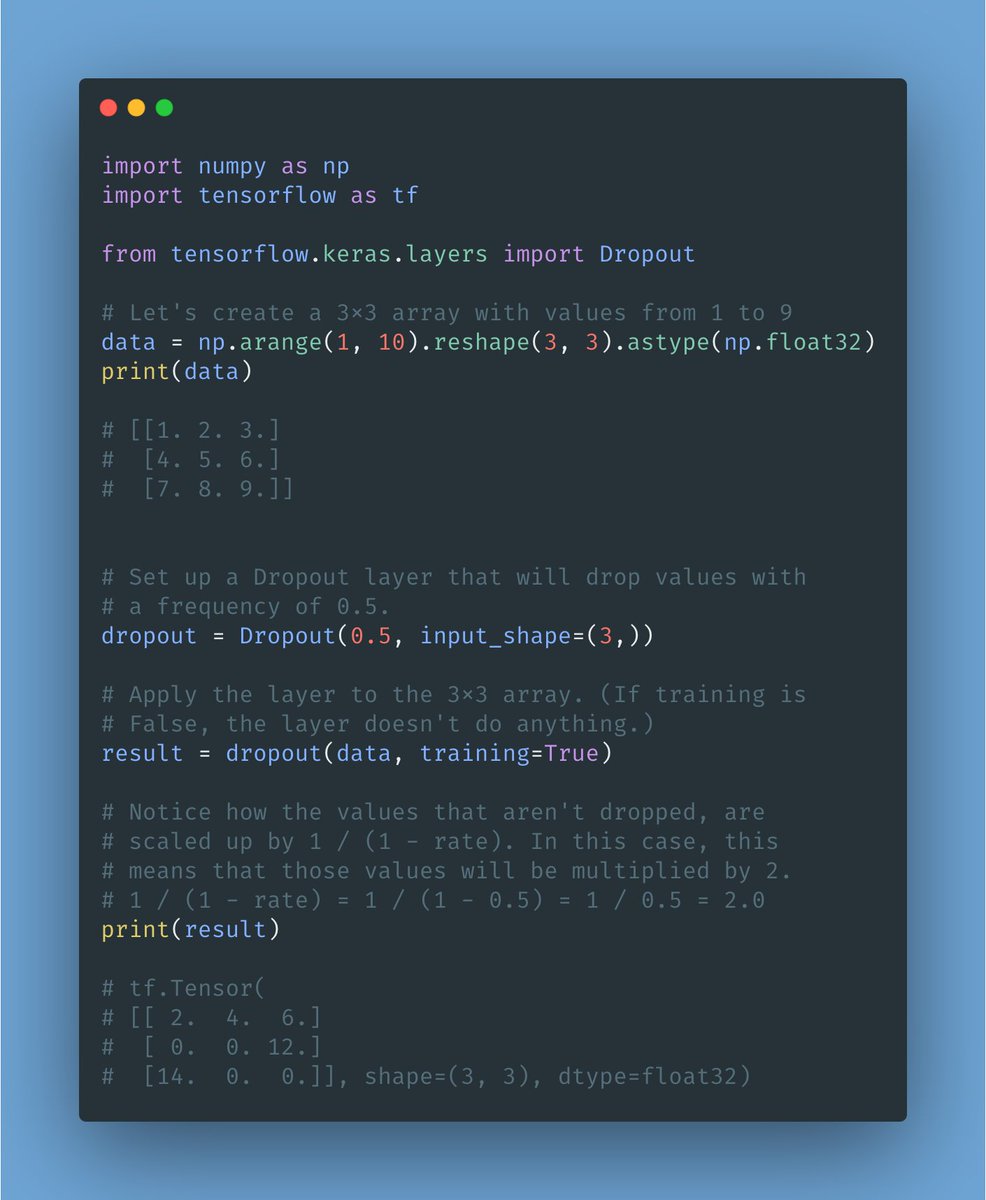

Here is an example of how Dropout works.

In this case, we are dropping 50% of all the units.

Notice that the dropped units are set to zero, and the remaining units are scaled up to account for the missing ones.
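Here is a minimal plain-Python sketch of that 50% example. It assumes the common "inverted dropout" convention: during training, kept units are multiplied by 1 / (1 - rate), and at inference nothing is dropped or rescaled. That is the behavior documented for Keras' `Dropout` layer and PyTorch's `nn.Dropout`, but the `dropout` function below is a hypothetical illustration, not either library's code.

```python
import random


def dropout(values, rate, training, rng):
    """Hypothetical helper: inverted dropout applied to a list of activations."""
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    if not training:
        # At inference time dropout is a no-op: every unit is kept as-is.
        return list(values)
    # Survivors are scaled by 1 / (1 - rate); with rate=0.5 this doubles them.
    scale = 1.0 / (1.0 - rate)
    out = []
    for v in values:
        if rng.random() < rate:
            out.append(0.0)  # dropped unit: its output becomes zero
        else:
            out.append(v * scale)  # kept unit: scaled up to make up for the dropped ones
    return out


rng = random.Random(42)  # fixed seed so the sketch is reproducible
activations = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
print(dropout(activations, rate=0.5, training=True, rng=rng))   # each entry is 0.0 or doubled
print(dropout(activations, rate=0.5, training=False, rng=rng))  # unchanged
```

Because each unit is kept with probability 1 - rate and then multiplied by 1 / (1 - rate), its expected value stays the same as without Dropout. That is why the trained network can simply use all of its units, unscaled, once training is over.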

Finally, here is an excellent, more technical introduction to Dropout from @TeachTheMachine: https://machinelearningmastery.com/dropout-for-regularizing-deep-neural-networks/
