I always get Normalization and Standardization mixed up.

But they are different.

Here are some notes about them, and why we care.


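Feature scaling is key to making many Machine Learning algorithms work well, especially those that compare samples by distance (like k-nearest neighbors) or that are trained with gradient descent.

We want all of our features on a comparable scale.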


Imagine we are working with a dataset of workers.

"Age" will range between 16 and 90.

"Salary" will range between 15,000 and 150,000.

Huge disparity!

Because of its magnitude, salary will dominate any comparison between workers.

We can fix that by scaling both features.
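To make "dominate" concrete, here is a small Python sketch. It assumes workers are compared with plain Euclidean distance (as k-nearest neighbors commonly does); the three workers are hypothetical, and the scaling step uses min-max normalization with the age and salary ranges above.

```python
import math

# Ranges quoted in the example above.
AGE_RANGE = (16, 90)
SALARY_RANGE = (15_000, 150_000)

# Hypothetical workers as (age, salary); the values are made up for illustration.
alice = (25, 50_000)
bob = (60, 51_000)    # 35 years older than alice, similar salary
carol = (26, 80_000)  # about alice's age, much higher salary


def min_max(value, lo, hi):
    """Map value from [lo, hi] onto [0, 1]."""
    return (value - lo) / (hi - lo)


def scale(worker):
    """Scale both features of a worker into [0, 1]."""
    age, salary = worker
    return (min_max(age, *AGE_RANGE), min_max(salary, *SALARY_RANGE))


# Raw features: the salary gap swamps the 35-year age gap,
# so bob looks far closer to alice than carol does.
print(math.dist(alice, bob), math.dist(alice, carol))

# Scaled features: both gaps now count, and carol ends up closer.
print(math.dist(scale(alice), scale(bob)), math.dist(scale(alice), scale(carol)))
```

On raw values the 35-year age difference barely registers next to a few thousand in salary; after scaling, each feature contributes in proportion to its own range.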


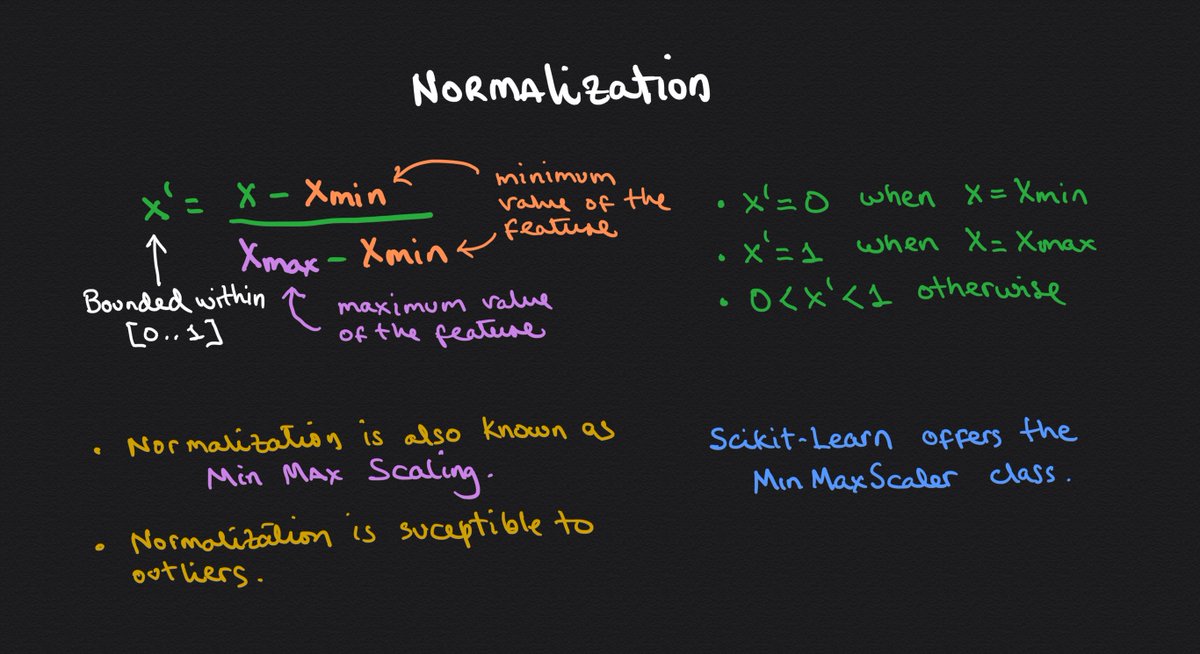

We can normalize or standardize these features.

The former (min-max normalization) squeezes values into the range between 0 and 1.

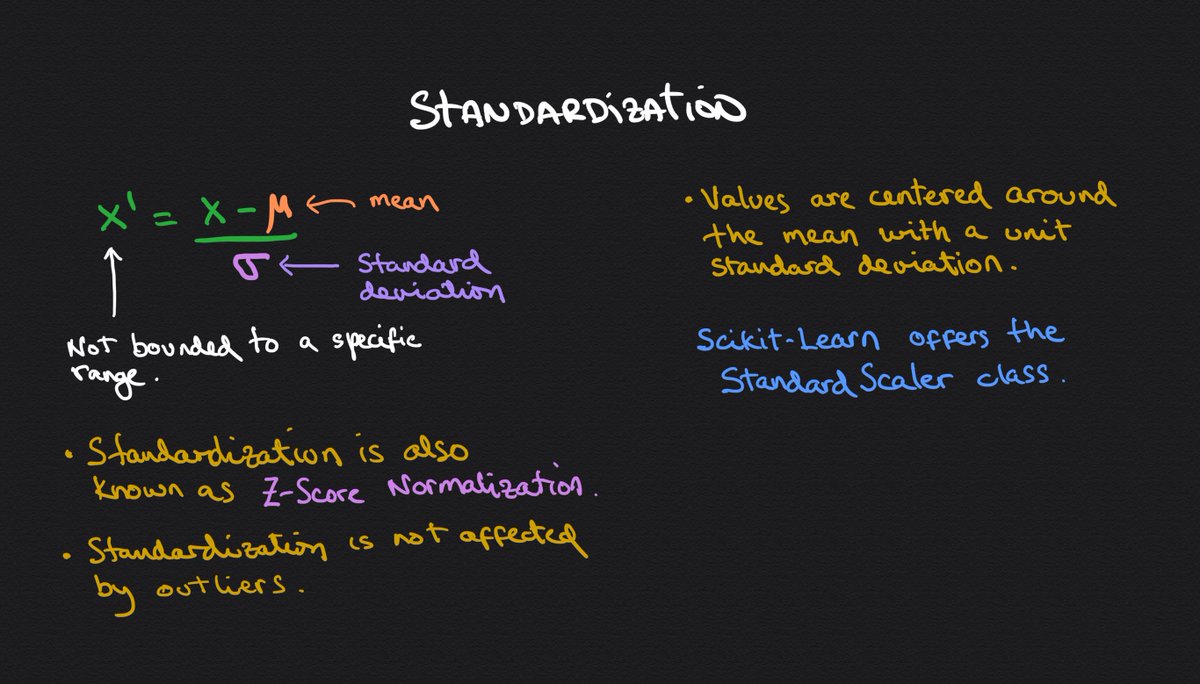

The latter rescales values so they are centered on a mean of 0 with a standard deviation of 1.
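Written out, these are the usual textbook formulas, where $\min(x)$ and $\max(x)$ are a feature's smallest and largest values, and $\mu$ and $\sigma$ are its mean and standard deviation:

```latex
x_{\text{norm}} = \frac{x - \min(x)}{\max(x) - \min(x)}
\qquad
x_{\text{std}} = \frac{x - \mu}{\sigma}
```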


Here are some handwritten notes about Normalization.
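As a complement to the notes, a minimal Python sketch of min-max normalization for a single feature, assuming the values arrive as a plain list of numbers (the function name `normalize` is hypothetical):

```python
def normalize(values):
    """Min-max normalization: map values linearly onto [0, 1].

    Assumes a non-empty list whose values are not all equal;
    otherwise max - min would be zero.
    """
    lo, hi = min(values), max(values)
    if hi == lo:
        raise ValueError("cannot normalize a constant feature")
    return [(v - lo) / (hi - lo) for v in values]


ages = [16, 53, 90]  # hypothetical ages spanning the 16-90 range
print(normalize(ages))  # [0.0, 0.5, 1.0]
```

The 53-year-old lands exactly halfway, since (53 - 16) / (90 - 16) = 0.5. In practice the minimum and maximum are computed on the training data and reused for new data, so unseen values can fall outside [0, 1]. A single extreme outlier also squeezes every other value toward one end of the range.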


Here are some handwritten notes about Standardization.
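And a matching sketch of standardization (z-score scaling), again assuming a plain list of numbers for one feature (the function name `standardize` is hypothetical):

```python
import statistics


def standardize(values):
    """Z-score standardization: shift to mean 0, scale to standard deviation 1.

    Uses the population standard deviation (statistics.pstdev), which is
    the same convention scikit-learn's StandardScaler follows.
    """
    mu = statistics.fmean(values)
    sigma = statistics.pstdev(values)
    if sigma == 0:
        raise ValueError("cannot standardize a constant feature")
    return [(v - mu) / sigma for v in values]


salaries = [20_000, 40_000, 60_000]  # hypothetical salaries
z = standardize(salaries)
print(z)  # the middle value maps to 0.0; the others are symmetric around it
```

Unlike normalization, the output is not confined to a fixed range: values more than one standard deviation from the mean simply land beyond ±1.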


Which one is better?

Well, it depends.

There's really no way to determine which of these two methods works better without actually trying them. (Thanks to @javaloyML for pointing this out to me.)

It's a good idea to treat them as tunable settings that we can experiment with.
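One way to follow that advice, sketched in plain Python under loud assumptions: the dataset below is hypothetical, the model is a 1-nearest-neighbor classifier, and the comparison uses leave-one-out accuracy. The point is the structure, not the numbers: the scaling method is one more setting to compare, and each scaler is fit only on the training rows.

```python
import math
import statistics

# Hypothetical toy data: (age, salary) per worker, plus a made-up label.
# It exists only so there is something to compare the two scalers on.
X = [(22, 30_000), (25, 32_000), (47, 90_000), (52, 95_000),
     (30, 120_000), (61, 40_000), (35, 60_000), (58, 110_000)]
y = ["junior", "junior", "senior", "senior",
     "senior", "junior", "junior", "senior"]


def fit_min_max(column):
    """Return a function that min-max normalizes values using this column."""
    lo, hi = min(column), max(column)
    return lambda v: (v - lo) / (hi - lo)


def fit_z_score(column):
    """Return a function that standardizes values using this column."""
    mu, sigma = statistics.fmean(column), statistics.pstdev(column)
    return lambda v: (v - mu) / sigma


# The scaling method is just another setting to try.
SCALERS = {"normalization": fit_min_max, "standardization": fit_z_score}


def loo_accuracy(fit_scaler):
    """Leave-one-out accuracy of a 1-nearest-neighbor classifier."""
    hits = 0
    for i in range(len(X)):
        train = [row for j, row in enumerate(X) if j != i]
        labels = [lab for j, lab in enumerate(y) if j != i]
        # Fit one scaler per feature on the training rows only,
        # so the held-out row does not leak into min/max or mean/std.
        scalers = [fit_scaler([row[k] for row in train]) for k in range(len(X[i]))]

        def transform(row):
            return [f(v) for f, v in zip(scalers, row)]

        query = transform(X[i])
        nearest = min(range(len(train)),
                      key=lambda j: math.dist(transform(train[j]), query))
        if labels[nearest] == y[i]:
            hits += 1
    return hits / len(X)


results = {name: loo_accuracy(fit) for name, fit in SCALERS.items()}
best = max(results, key=results.get)
print(results, "->", best)
```

With scikit-learn, the same idea is commonly expressed by putting `MinMaxScaler` or `StandardScaler` as a step in a `Pipeline` and letting `GridSearchCV` search over that step.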
