What is the lauded FSD rewrite @elonmusk is referring to? @karpathy already detailed exactly what it is in Feb 2020 (https://www.youtube.com/watch?v=hx7BXih7zx8). Let's discuss...

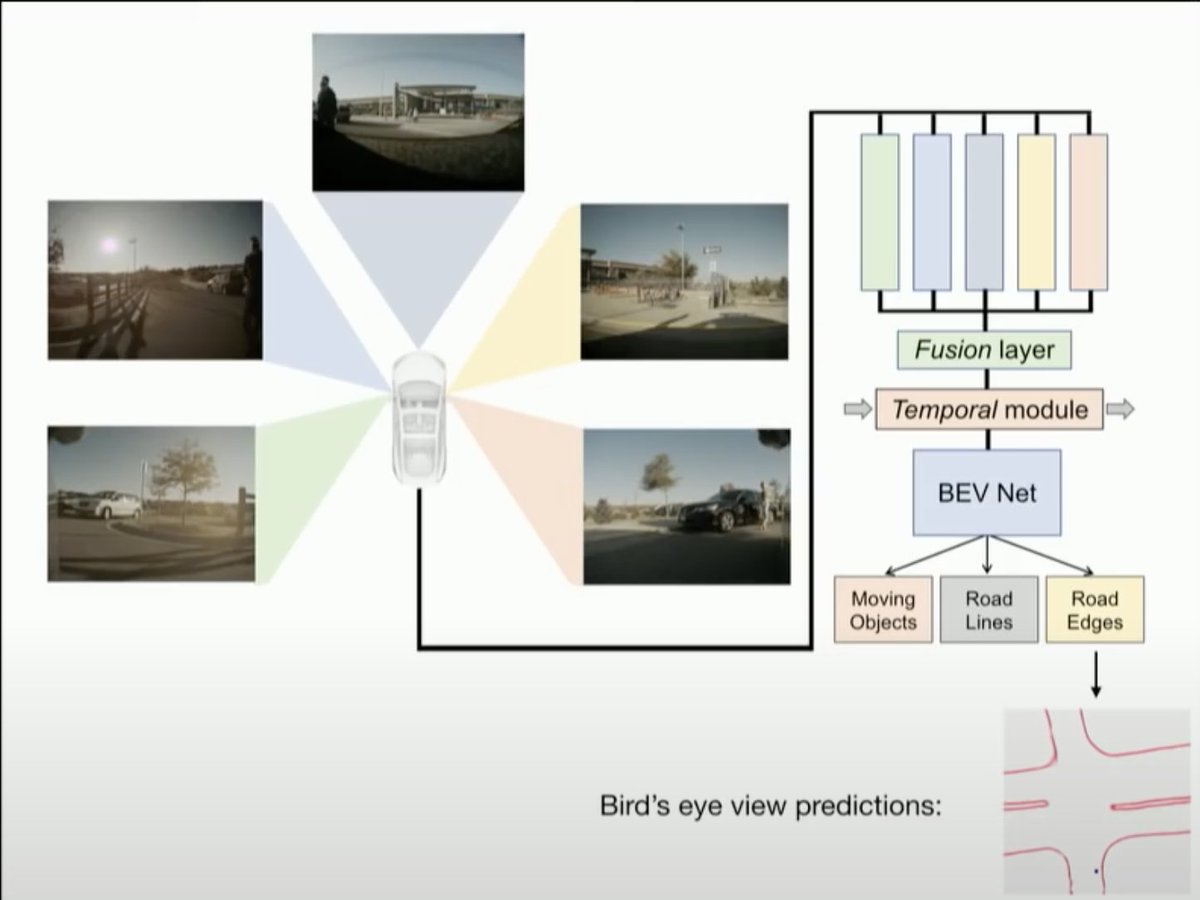

The "vector space" @elonmusk mentions is a Software 2.0 rewrite of the occupancy tracker, which is now folded into a "Bird's Eye View" Neural Net that fuses the images from all cameras and projects their features into a top-down view. In @karpathy's own words: "This makes a huge difference".
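
To make "fuses all cameras and projects into a top-down view" concrete, here is a minimal Python sketch of the geometric idea only. Tesla's Bird's Eye View net learns this projection end to end. This sketch instead assumes that each camera has already produced points on the ground plane in its own frame, and that the camera mounting offsets and yaw angles (the hypothetical `Camera` values below) are known. Every camera's points are rotated and shifted into one shared vehicle frame and counted in a single top-down grid.

```python
import math
from dataclasses import dataclass


@dataclass
class Camera:
    """Hypothetical extrinsics: mounting offset in metres (x forward, y left) and yaw in radians."""
    x: float
    y: float
    yaw: float


def camera_to_vehicle(cam, px, py):
    """Rotate a ground-plane point by the camera's yaw, then shift it by the mounting offset."""
    c, s = math.cos(cam.yaw), math.sin(cam.yaw)
    return cam.x + c * px - s * py, cam.y + s * px + c * py


def fuse_to_bev(detections, cell_size=1.0, half_extent=20.0):
    """Merge ground-plane points from every camera into one top-down grid.

    detections: list of (Camera, [(px, py), ...]) pairs, points in that camera's frame.
    Returns {(row, col): hit_count} for a square window of +/- half_extent metres.
    """
    grid = {}
    for cam, points in detections:
        for px, py in points:
            vx, vy = camera_to_vehicle(cam, px, py)
            if abs(vx) >= half_extent or abs(vy) >= half_extent:
                continue  # outside the bird's eye view window
            cell = (int((vx + half_extent) // cell_size), int((vy + half_extent) // cell_size))
            grid[cell] = grid.get(cell, 0) + 1
    return grid


# Hypothetical example: two forward-facing cameras at different mounting points see the same kerb point.
front_main = Camera(2.0, 0.0, 0.0)
front_narrow = Camera(2.5, 0.0, 0.0)
grid = fuse_to_bev([(front_main, [(3.0, 0.5), (30.0, 0.0)]), (front_narrow, [(2.5, 0.5)])])
print(grid)  # {(25, 20): 2}
```

Both observations land in the same cell, so the fused grid holds one shared estimate rather than two separate per-camera guesses. The point 30 m ahead of the main camera falls outside the 20 m window and is dropped.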

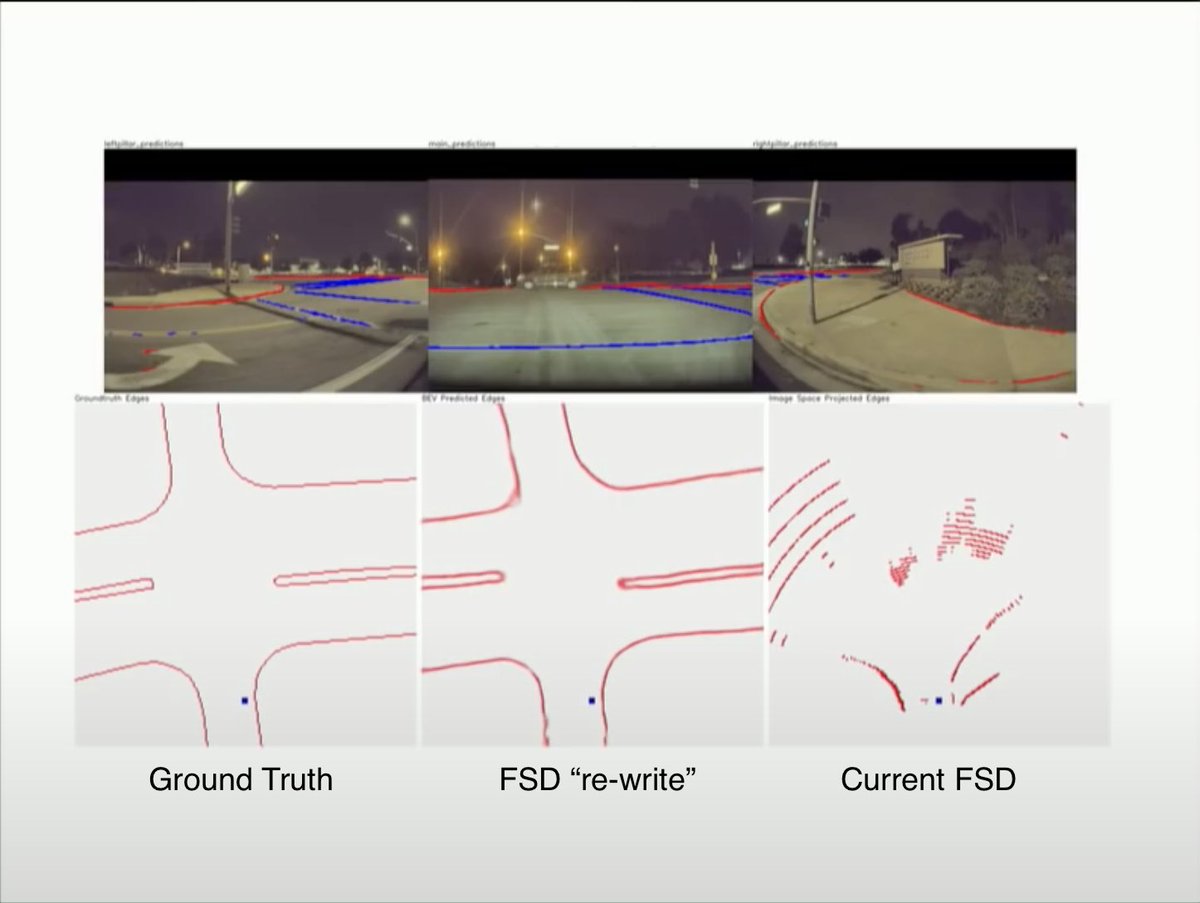

In this image, we really see the step change the rewrite makes. Current FSD (right image) is not able to predict the intersection layout, whereas the rewrite (centre image) almost *perfectly* predicts the exact layout of the intersection when compared to the map data (left image).
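
One common way to put a number on how closely a predicted layout matches the map data is intersection-over-union (IoU), computed after both layouts are rasterised into the same top-down grid. The thread does not say which metric, if any, Tesla uses. This is an illustrative assumption, and the cells below are made up.

```python
def layout_iou(predicted, reference):
    """Intersection-over-union of two collections of occupied BEV cells, given as (row, col)."""
    predicted, reference = set(predicted), set(reference)
    union = predicted | reference
    if not union:
        return 1.0  # both layouts empty: treat as identical
    return len(predicted & reference) / len(union)


# Hypothetical cells: the prediction gets 3 of the 4 map cells and adds 1 spurious cell.
map_cells = {(0, 0), (0, 1), (1, 0), (1, 1)}
predicted_cells = {(0, 0), (0, 1), (1, 0), (2, 2)}
print(layout_iou(predicted_cells, map_cells))  # 0.6
```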

Without the rewrite, FSD cannot take unprotected turns at an intersection because it cannot build an accurate representation of the layout. This is especially apparent near the horizon, where an error of only a few pixels translates into a large error in estimated distance.
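
The horizon problem can be illustrated with a flat-ground pinhole camera model, which is assumed here for illustration. A ground point at distance Z appears f * h / Z pixel rows below the horizon, where f is the focal length in pixels and h is the camera height. Inverting this gives Z = f * h / rows. The focal length and height below are hypothetical values, not Tesla camera specs.

```python
def ground_distance(rows_below_horizon, focal_px=1000.0, camera_height_m=1.5):
    """Flat-ground pinhole model: distance Z = f * h / (rows below the horizon)."""
    if rows_below_horizon <= 0:
        raise ValueError("point must lie below the horizon")
    return focal_px * camera_height_m / rows_below_horizon


def one_pixel_error(rows_below_horizon, **camera):
    """Change in estimated distance if the point is mislocated by one pixel row towards the horizon."""
    return ground_distance(rows_below_horizon - 1, **camera) - ground_distance(rows_below_horizon, **camera)


for rows in (100, 10):
    print(rows, round(ground_distance(rows), 1), round(one_pixel_error(rows), 2))
# 100 15.0 0.15
# 10 150.0 16.67
```

Under these assumptions, a single-pixel slip costs about 15 cm at 15 m but almost 17 m at 150 m, because distance varies with the inverse of the pixel offset from the horizon.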

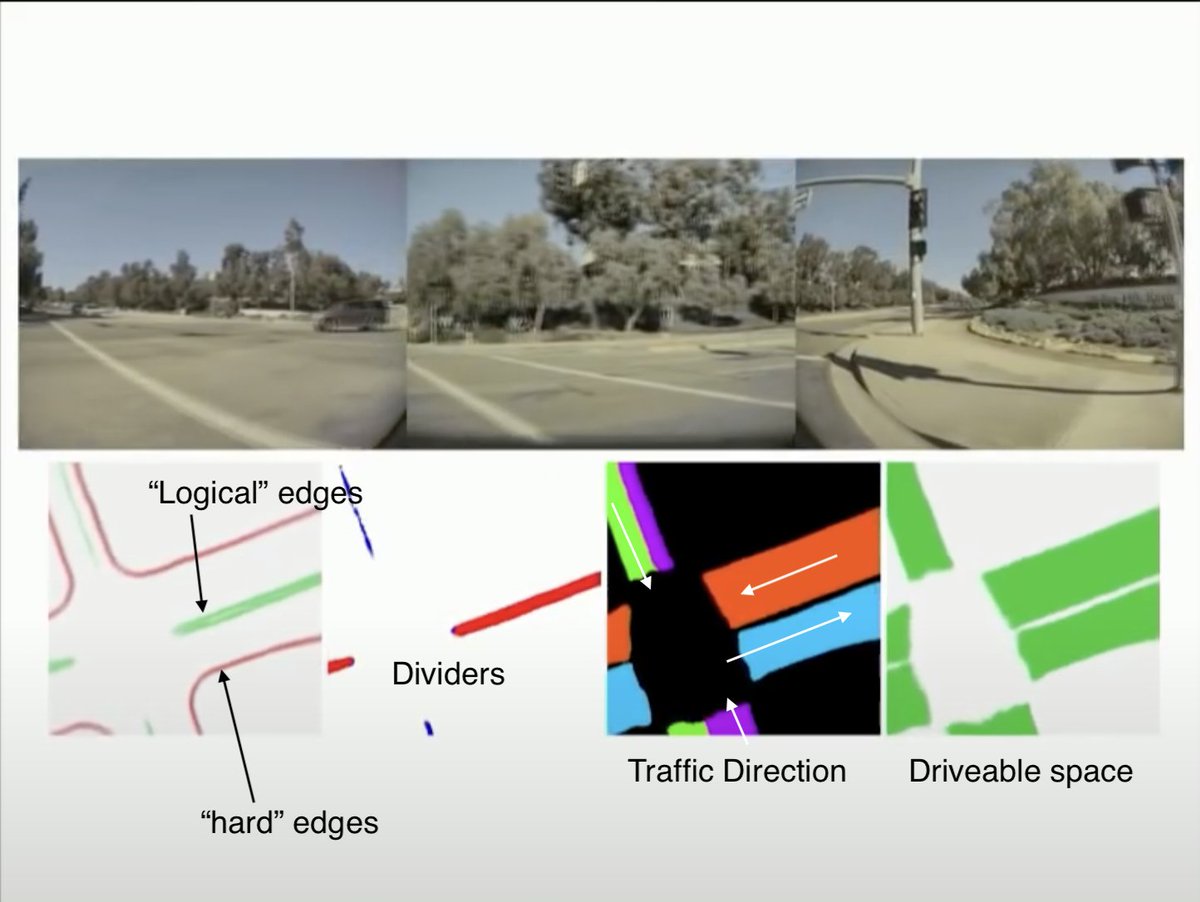

In the next example, we see how the rewrite is able to perfectly predict not only the layout of the intersection but also its features: dividers, traffic flow and drivable space, alongside what the car already understands, such as traffic light controls and stop lines. Truly remarkable.

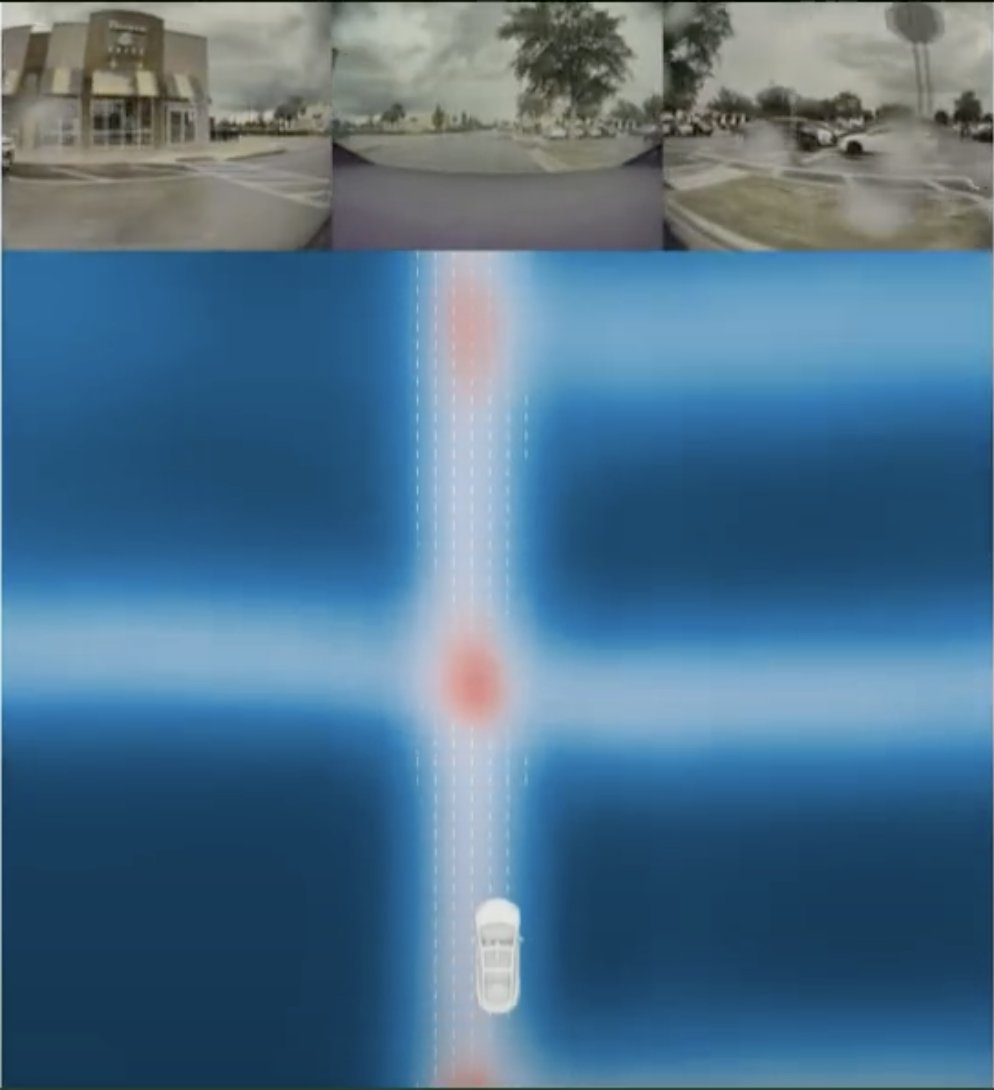

The next example seemingly shows a massive improvement to Smart Summon. The car is now able to re-project almost the entire car park layout into bird's eye view and accurately predict corridors, intersections and traffic flow. All of this is output directly by the FSD rewrite.

Having this "long-term" planning ability based on a Neural Net output (i.e. the FSD rewrite) means features like reverse Smart Summon become possible, as the car can wind its way through the environment, building a consistent topology as it moves.
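
Building a consistent picture while moving requires expressing each new observation in a fixed world frame using the car's own motion. The sketch below uses classical log-odds occupancy-grid mapping as a stand-in, and it is not what the FSD net actually outputs. The pose input, cell size and hit/miss weights are all hypothetical.

```python
import math


def to_world(pose, local_point):
    """Transform a point from the car's frame into a fixed world frame.

    pose: (x, y, heading) of the car in the world frame, assumed to come from odometry.
    """
    x, y, heading = pose
    lx, ly = local_point
    c, s = math.cos(heading), math.sin(heading)
    return x + c * lx - s * ly, y + s * lx + c * ly


class GlobalMap:
    """Log-odds occupancy grid that accumulates evidence across frames."""

    def __init__(self, cell_size=0.5, hit=0.85, miss=-0.4):
        self.cell_size = cell_size
        self.hit = hit    # log-odds added when a cell is observed occupied
        self.miss = miss  # log-odds added (negative) when a cell is observed free
        self.log_odds = {}

    def _cell(self, point):
        return math.floor(point[0] / self.cell_size), math.floor(point[1] / self.cell_size)

    def update(self, pose, occupied, free):
        """Fuse one frame of local observations, taken at the given pose, into the world map."""
        for points, delta in ((occupied, self.hit), (free, self.miss)):
            for p in points:
                cell = self._cell(to_world(pose, p))
                self.log_odds[cell] = self.log_odds.get(cell, 0.0) + delta

    def probability(self, world_point):
        """Occupancy probability of the cell containing world_point (0.5 if never observed)."""
        return 1.0 / (1.0 + math.exp(-self.log_odds.get(self._cell(world_point), 0.0)))


# Frame 1: car at the origin sees a pillar 10 m ahead, 2 m to the left.
# Frame 2: car has driven 5 m forward and sees the same pillar 5 m ahead.
carpark = GlobalMap()
carpark.update((0.0, 0.0, 0.0), occupied=[(10.0, 2.0)], free=[])
carpark.update((5.0, 0.0, 0.0), occupied=[(5.0, 2.0)], free=[(3.0, 0.0)])
print(round(carpark.probability((10.0, 2.0)), 3))  # 0.846
```

Because both sightings are mapped to the same world cell, the evidence reinforces itself instead of producing two separate pillars.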

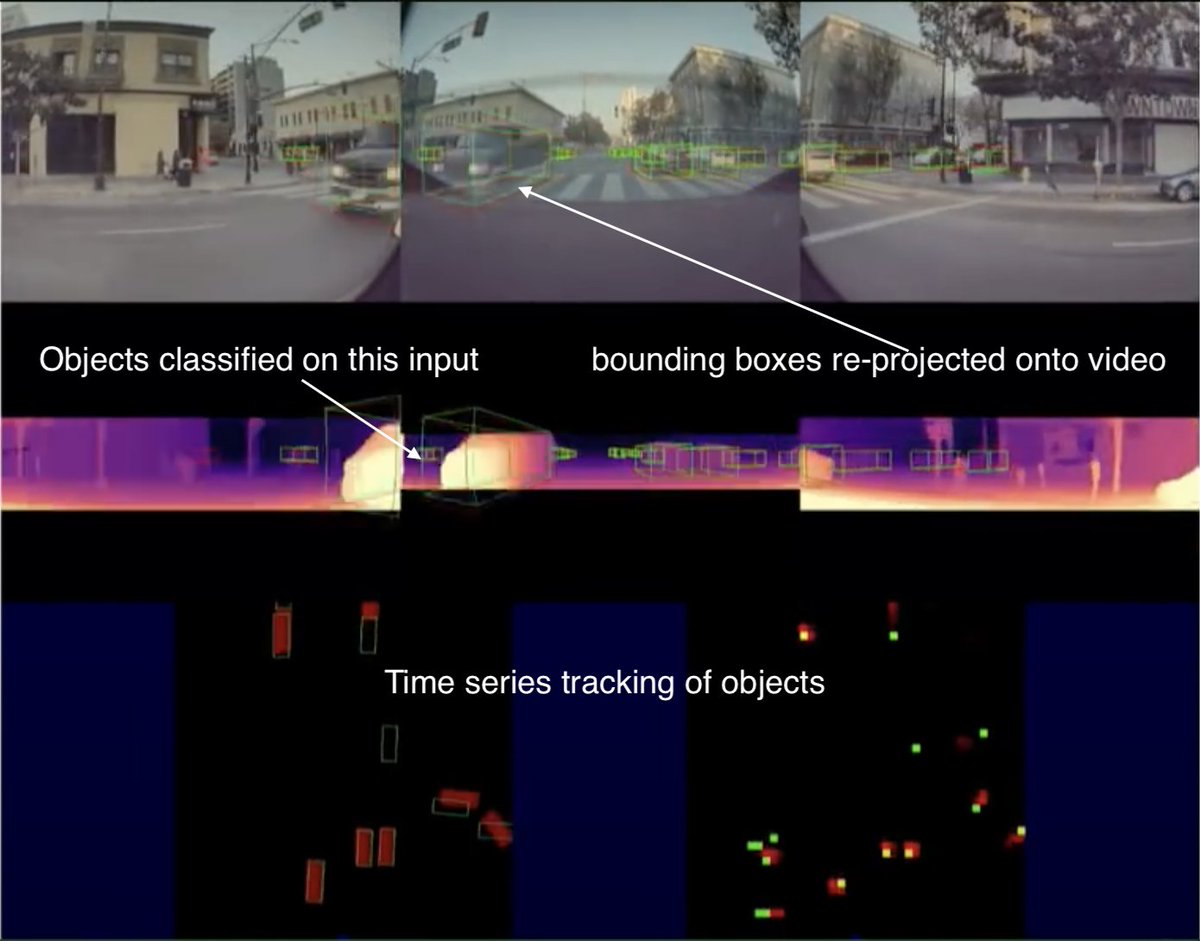

Next, we learn a bit more about what @elonmusk means by "labelling in 3D space". There is now a dedicated NN that predicts the depth of each pixel, essentially simulating LiDAR. That "point cloud" is then used to classify objects in 3D space over time.
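
The "simulated LiDAR" step can be sketched as standard pinhole back-projection. Given a depth value for every pixel and the camera intrinsics (focal lengths fx, fy and principal point cx, cy, all hypothetical here), each pixel becomes one 3D point. This shows only the geometry, not Tesla's depth network.

```python
def depth_to_points(depth, fx, fy, cx, cy):
    """Back-project a per-pixel depth map into a camera-frame point cloud.

    depth: 2D list indexed depth[v][u] in metres (e.g. the output of a depth network).
    Returns a list of (X, Y, Z) points with X right, Y down, Z forward (pinhole convention).
    """
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0:
                continue  # no valid depth for this pixel
            points.append(((u - cx) * z / fx, (v - cy) * z / fy, z))
    return points


# Hypothetical 2x2 depth map; the zero-depth pixel is skipped.
points = depth_to_points([[10.0, 10.0], [5.0, 0.0]], fx=100.0, fy=100.0, cx=0.5, cy=0.5)
print(len(points))  # 3
```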

This is further evidence that the advantage of LiDAR is quickly evaporating, as vision-based Neural Nets can produce a point cloud representation of reality with enough accuracy for autonomous vehicles. That accuracy keeps improving continuously, because the depth network is trained with self-supervision and so does not depend on hand-labelled depth data.

Self-supervised training works by having the NN predict depth, checking how closely that prediction matches reality in the subsequent frame, then adjusting the weights and trying again. Repeat this billions of times and the model learns to estimate per-pixel depth very accurately.
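
In published self-supervised depth methods, a common way to "check against the subsequent frame" is a photometric reprojection loss. The model uses the predicted depth and the camera's motion to work out where each pixel should land in the next frame, then compares intensities at that location. The talk does not detail Tesla's exact loss. The sketch below assumes pure translation with known motion, nearest-neighbour sampling and a tiny synthetic one-row image.

```python
def reproject(u, v, z, fx, fy, cx, cy, t):
    """Predict where pixel (u, v) with depth z appears after the camera translates by t.

    Assumes pure translation t = (tx, ty, tz) in the camera frame; real systems also estimate rotation.
    Returns None if the point ends up behind the camera.
    """
    x, y = (u - cx) * z / fx, (v - cy) * z / fy
    xn, yn, zn = x - t[0], y - t[1], z - t[2]
    if zn <= 0:
        return None
    return fx * xn / zn + cx, fy * yn / zn + cy


def photometric_loss(frame_t, frame_next, depth, fx, fy, cx, cy, t):
    """Mean absolute intensity difference between each pixel and its reprojection in the next frame."""
    h, w = len(frame_t), len(frame_t[0])
    total, count = 0.0, 0
    for v in range(h):
        for u in range(w):
            proj = reproject(u, v, depth[v][u], fx, fy, cx, cy, t)
            if proj is None:
                continue
            un, vn = round(proj[0]), round(proj[1])  # nearest-neighbour sampling
            if 0 <= un < w and 0 <= vn < h:
                total += abs(frame_t[v][u] - frame_next[vn][un])
                count += 1
    return total / count if count else float("inf")


# Synthetic scene: a wall 10 m away, camera slides 0.2 m to the right, so the image shifts 2 px left.
frame_t = [[0, 10, 20, 30, 40, 50, 60, 70]]
frame_next = [[20, 30, 40, 50, 60, 70, 80, 90]]
camera = dict(fx=100.0, fy=100.0, cx=0.0, cy=0.0, t=(0.2, 0.0, 0.0))
print(photometric_loss(frame_t, frame_next, [[10.0] * 8], **camera))  # 0.0 (correct depth)
print(photometric_loss(frame_t, frame_next, [[5.0] * 8], **camera))   # 20.0 (wrong depth)
```

The correct depth makes consecutive frames agree, while a wrong depth leaves a residual error. In this formulation, minimising that residual by gradient descent (not shown) is what pushes the network's depth predictions towards the truth.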

Finally, the most interesting part concerns the policy (i.e. how to drive) component of FSD. In @karpathy's own words: "This policy is still in the land of (software) 1.0". However, the only way to achieve true autonomy is to train a NN to build the policy internally.

Hand-labelling every example for the NN to train on is onerous, to say the least. This is where Dojo may come in: it could allow a few examples to be seeded and then use "self-supervised" training over raw video of such examples to iterate towards an optimised model.

To be clear, I don't think the imminent FSD beta release will include the Neural Net-based policy, but the perception NN described above, in conjunction with Dojo, certainly sets the stage for it.
