This week, famous researchers called for more transparency in #medical #AI and...

I hate it.

Not only is the letter they make their case in wrong-headed and bizarrely off-target, but @michaelhoffman is unprofessional in that twitter thread. So, a semi-angry tweetorial.

1/12 https://twitter.com/michaelhoffman/status/1316398546468376577

Their letter is about a study from @GoogleHealth

titled "International evaluation of an AI system for breast cancer screening."

It's good work. Importantly for this discussion, it is a model *evaluation* study, not a model *development* study.

https://www.nature.com/articles/s41586-019-1799-6

2/12

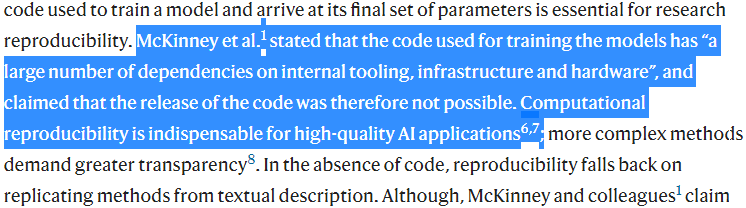

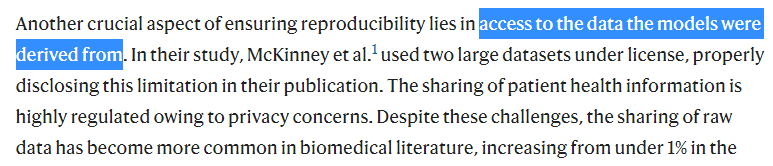

The authors of the letter spend about 2/3rds of it complaining that they can't reproduce *the model*.

Even when they talk about data access (their only other argument), they are mostly focused on the data "the models were derived from".

Why is this So. damn. weird?

3/12

IT IS AN EVALUATION STUDY!

Let's look at another type of clinical evaluation study - a drug trial.

Do we think that when it comes time to reproduce drug trial results, the first step is *synthesise the drug from scratch*?

I mean, has any trialist *ever* said this ⬇️?

4/12

So how do we reproduce drug trials and how should we reproduce AI evaluations? There are 2 ways:

1) we take the product, be it a drug or trained model (the developer will either donate the use of it for the trial or we will purchase it) and test on a similar population.

5/12

or 2) we take the results, in this case the model output scores, human reader scores, and labels, and we redo their statistical analysis to make sure they didn't oopsie.

To be fair, the authors mention this second approach ⬇️. But have Google refused to share this data?

6/12

I doubt it, because I asked them for similar data months ago. They didn't even quibble, they just said yes!

In fact, I have asked for similar data from numerous commercial and academic groups, and never have I been refused. Everyone is happy to share this.

7/12
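For what it's worth, approach 2 is not a heavy lift once you have that data. Here is a minimal sketch, assuming the shared data boils down to one model score, one reader call, and one ground-truth label per case. The function names and the toy numbers are made up for illustration; they are not from the paper or the letter, and a real re-analysis would follow the study's own statistical plan.

```python
from typing import Sequence, Tuple


def auc(scores: Sequence[float], labels: Sequence[int]) -> float:
    """Area under the ROC curve via pairwise comparison (Mann-Whitney U).

    Fraction of (cancer, non-cancer) pairs in which the cancer case gets
    the higher score; tied pairs count as half.
    """
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative case")
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def sensitivity_specificity(
    calls: Sequence[int], labels: Sequence[int]
) -> Tuple[float, float]:
    """Sensitivity and specificity of binary calls (1 = recall the patient)."""
    tp = sum(1 for c, y in zip(calls, labels) if c == 1 and y == 1)
    fn = sum(1 for c, y in zip(calls, labels) if c == 0 and y == 1)
    tn = sum(1 for c, y in zip(calls, labels) if c == 0 and y == 0)
    fp = sum(1 for c, y in zip(calls, labels) if c == 1 and y == 0)
    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("need at least one positive and one negative case")
    return tp / (tp + fn), tn / (tn + fp)


# Hypothetical toy data: 8 cases, 3 of them cancers.
labels = [1, 1, 1, 0, 0, 0, 0, 0]
model_scores = [0.9, 0.7, 0.4, 0.6, 0.3, 0.2, 0.2, 0.1]
reader_calls = [1, 1, 0, 1, 0, 0, 0, 0]

model_auc = auc(model_scores, labels)
reader_sens, reader_spec = sensitivity_specificity(reader_calls, labels)
print(f"model AUC: {model_auc:.3f}")
print(f"reader sensitivity: {reader_sens:.3f}, specificity: {reader_spec:.3f}")
```

Note that none of this needs the model weights or the training code, only the per-case outputs and labels.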

I'm not gonna get into the weeds on "can you reproduce resnets from a basic description" or "is it enough that data is freely accessible if you can swing a licence agreement" because it is irrelevant to reproducing the study.

Instead, let's look at what really annoyed me.

8/12

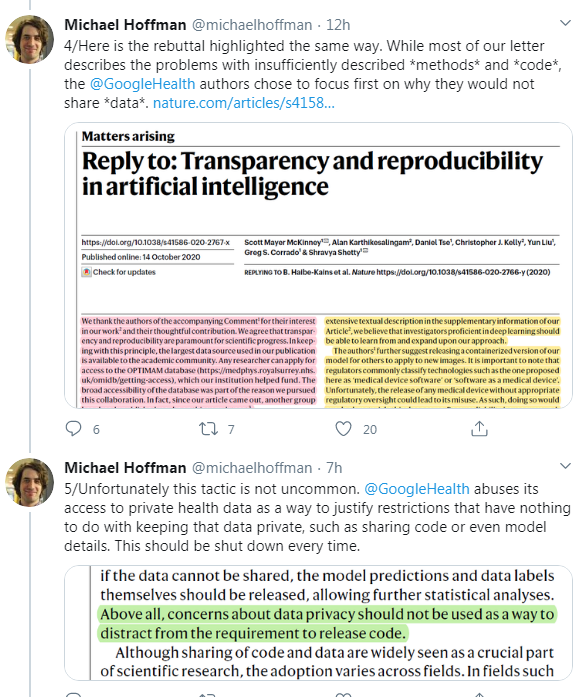

@michaelhoffman's tweets are wild. He claims outright scientific malice on the part of Google. He says they "abuse access to private health data" to prevent sharing code.

This is absurd. The model training code is irrelevant to any reproduction of this evaluation study.

9/12

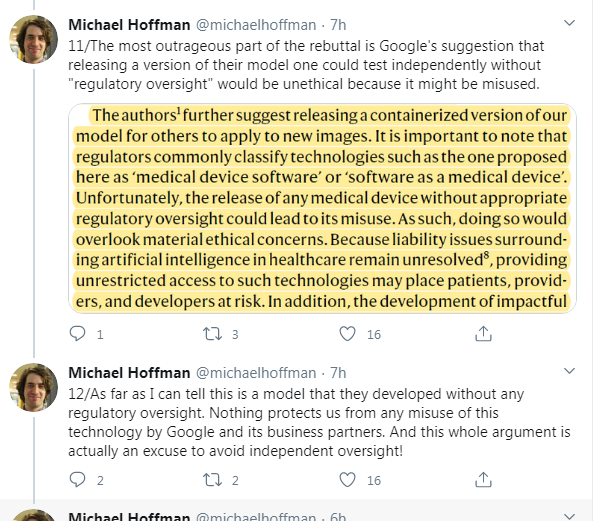

He then claims that Google is hiding their model behind a regulatory smokescreen.

Do you know why they didn't need regulatory oversight to develop their model? Because *they never applied it to patients*. This test was in-silico!

10/12

Patients are protected against misuse by the fact this model is *not used on patients*. If they released the model, it could be used on patients.

That isn't an excuse to avoid oversight! It is a real safety concern.

Me when I see medical AI models on the internet ⬇️

11/12

This letter, and the authors (who I respect a lot) really surprised me. It isn't that concerns about reproducibility are wrong.

But here, for this paper, they are misplaced. They demand the wrong data, miss the point of regulation, and misunderstand reproducibility in AI.

12/12
