ML paper review time - DenseNet! 🕸️

This paper won the Best Paper Award at the 2017 Conference on Computer Vision and Pattern Recognition (CVPR), one of the top conferences in computer vision.

It introduces a new CNN architecture where the layers are densely connected.

I attended a talk by Prof. Weinberger at GCPR 2017. He compared traditional CNNs to playing a game of Chinese Whispers - every layer passes what it learned to the next one. If some information is wrong, though, it will propagate to the end without being corrected. 🤷‍♂️

The Idea 💡

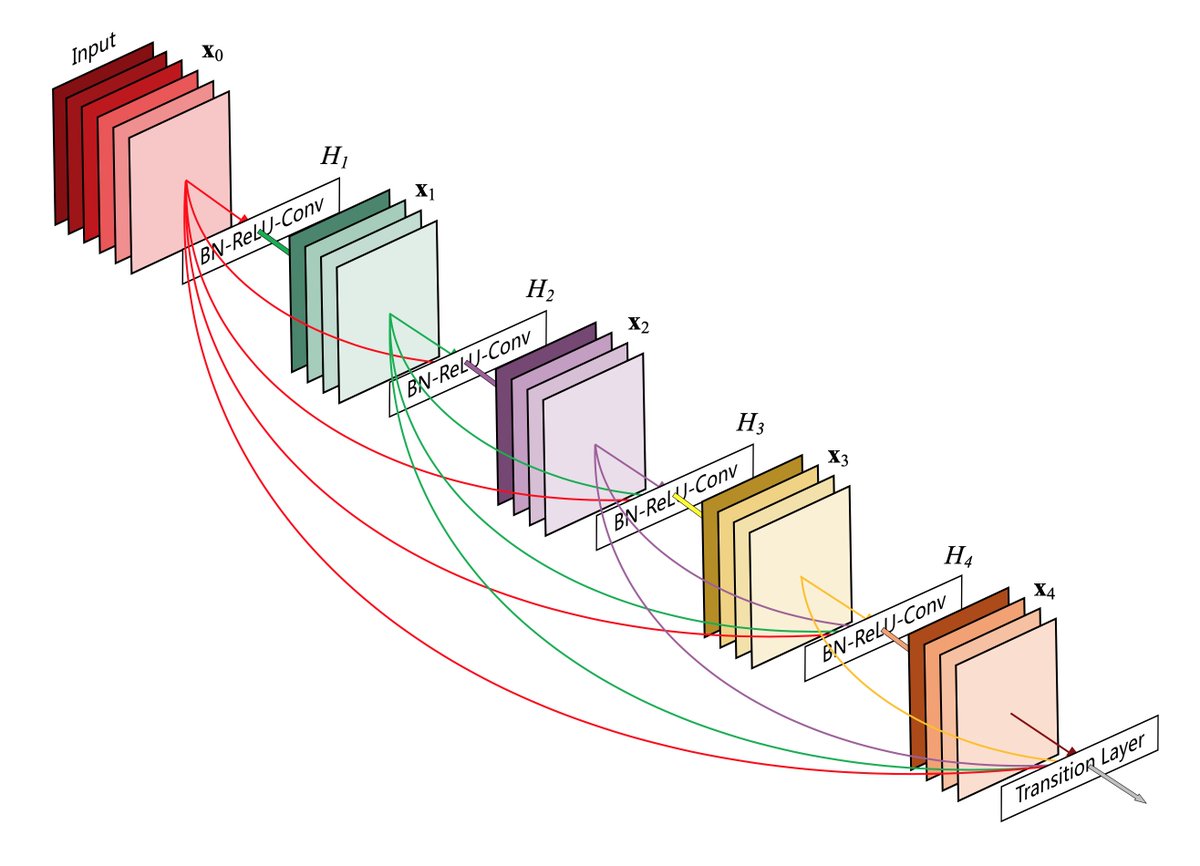

The main idea of DenseNet is to connect each layer not only to the previous layer, but also to all other layers before that. In this way, the layers will be able to directly access all features that were computed in the chain before.
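
To get a feel for how much denser this is, here is a minimal sketch that counts direct connections. It assumes a plain chain where each layer only receives the output of its predecessor, and a single densely connected chain where layer i receives the input plus all i-1 earlier outputs. The function name `direct_connections` is made up for this illustration.

```python
def direct_connections(num_layers: int, dense: bool) -> int:
    """Count direct connections in a chain of `num_layers` layers.

    Plain chain: every layer has exactly one incoming connection.
    Dense chain: layer i (1-based) has i incoming connections,
    namely the network input plus the outputs of the i-1 earlier layers,
    which sums to L * (L + 1) / 2 for L layers.
    """
    if num_layers < 0:
        raise ValueError("num_layers must be non-negative")
    if dense:
        return sum(range(1, num_layers + 1))
    return num_layers


# Compare both connectivity patterns for a few depths.
for n in (5, 12, 40):
    print(n, direct_connections(n, dense=False), direct_connections(n, dense=True))
```

With 5 layers, for example, the plain chain has 5 connections and the dense one has 15.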

Details ⚙️

Creating "shortcuts" between layers had been done before DenseNet, for example in ResNet - they are important for training very deep networks.

The interesting point here is that the connectivity is dense and that the feature maps from all layers are concatenated and not summed up as in ResNet.
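
The difference between summing and concatenating can be sketched with plain Python lists. This is only a toy illustration, not how a framework implements it: a "tensor" here is a list of channels, each channel is an H x W nested list, and the helper names `residual_sum` and `dense_concat` are invented for this example.

```python
from typing import List

Channel = List[List[float]]  # one H x W feature map
Tensor = List[Channel]       # a stack of channels


def residual_sum(a: Tensor, b: Tensor) -> Tensor:
    """ResNet-style merge: element-wise addition, channel count stays the same."""
    if len(a) != len(b):
        raise ValueError("summation needs the same number of channels")
    return [
        [[x + y for x, y in zip(row_a, row_b)] for row_a, row_b in zip(ch_a, ch_b)]
        for ch_a, ch_b in zip(a, b)
    ]


def dense_concat(*tensors: Tensor) -> Tensor:
    """DenseNet-style merge: stack all channels, earlier features stay untouched."""
    return [channel for tensor in tensors for channel in tensor]


# Two toy layer outputs with 2 channels of size 2 x 2 each.
a = [[[1.0, 1.0], [1.0, 1.0]] for _ in range(2)]
b = [[[2.0, 2.0], [2.0, 2.0]] for _ in range(2)]

summed = residual_sum(a, b)
concatenated = dense_concat(a, b)
print(len(summed), len(concatenated))
```

After the sum, the original values of `a` and `b` can no longer be told apart, while the concatenation keeps every earlier feature map available as its own channel.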

Details (2) ⚙️

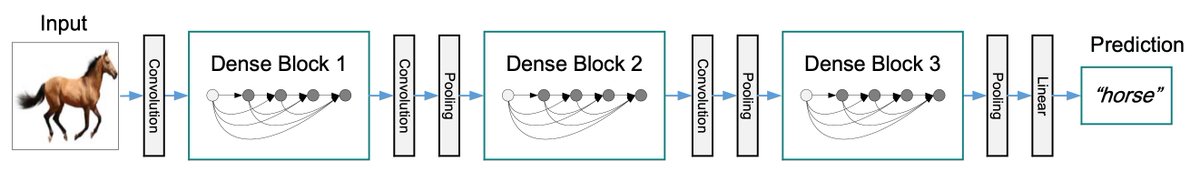

Feature maps can only be shared between layers that work at the same resolution, so dense connections cannot span a pooling step. In practice, the network is therefore split into 3-4 dense blocks connected by so-called transition layers, and only the layers within each block are densely connected.
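
A small sketch of what this means for feature map sizes, assuming each transition layer halves height and width (as 2x2 pooling with stride 2 would) and that the first block sees 32 x 32 maps. The function name `resolution_schedule` and the input size are assumptions for this illustration.

```python
from typing import List


def resolution_schedule(input_size: int, num_blocks: int) -> List[int]:
    """Return the spatial size of the feature maps inside each dense block.

    All layers in one block share a resolution, so they can be concatenated.
    A transition layer sits between consecutive blocks and halves the size.
    """
    sizes = []
    size = input_size
    for block in range(num_blocks):
        sizes.append(size)
        if block < num_blocks - 1:  # no transition after the last block
            size //= 2
    return sizes


print(resolution_schedule(32, 3))  # [32, 16, 8]
```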

Growth rate 📈

An important hyperparameter is the growth rate - it specifies the number of feature maps that each layer adds. In contrast to other architectures, DenseNet can use very narrow layers, for example with only 12 feature maps each.
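
The effect of the growth rate on layer inputs is simple arithmetic: with growth rate k and k0 channels entering the block, layer l receives k0 + k * (l - 1) feature maps. Here is a minimal sketch; the function name `input_channels` and the value of 16 initial channels are assumptions chosen for illustration.

```python
def input_channels(layer_index: int, growth_rate: int, initial_channels: int) -> int:
    """Number of feature maps that layer `layer_index` (1-based) receives in a dense block.

    Each earlier layer in the block contributes `growth_rate` new feature maps
    on top of the `initial_channels` that enter the block.
    """
    if layer_index < 1:
        raise ValueError("layer_index is 1-based")
    return initial_channels + growth_rate * (layer_index - 1)


growth_rate = 12       # the narrow setting mentioned above
initial_channels = 16  # assumed number of channels entering the block

for layer in (1, 2, 6, 12):
    print(layer, input_channels(layer, growth_rate, initial_channels))
```

Each layer itself stays narrow (12 new maps), but its input keeps growing: under these assumptions the 12th layer already sees 148 feature maps.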

Training 🏋️‍♂️

A big advantage of DenseNet is that it is easy and fast to train, because it alleviates the vanishing gradient problem. During backpropagation, the gradients are free to flow directly to each layer because of the dense connections. It's much faster to train than ResNet.

Size ⭕

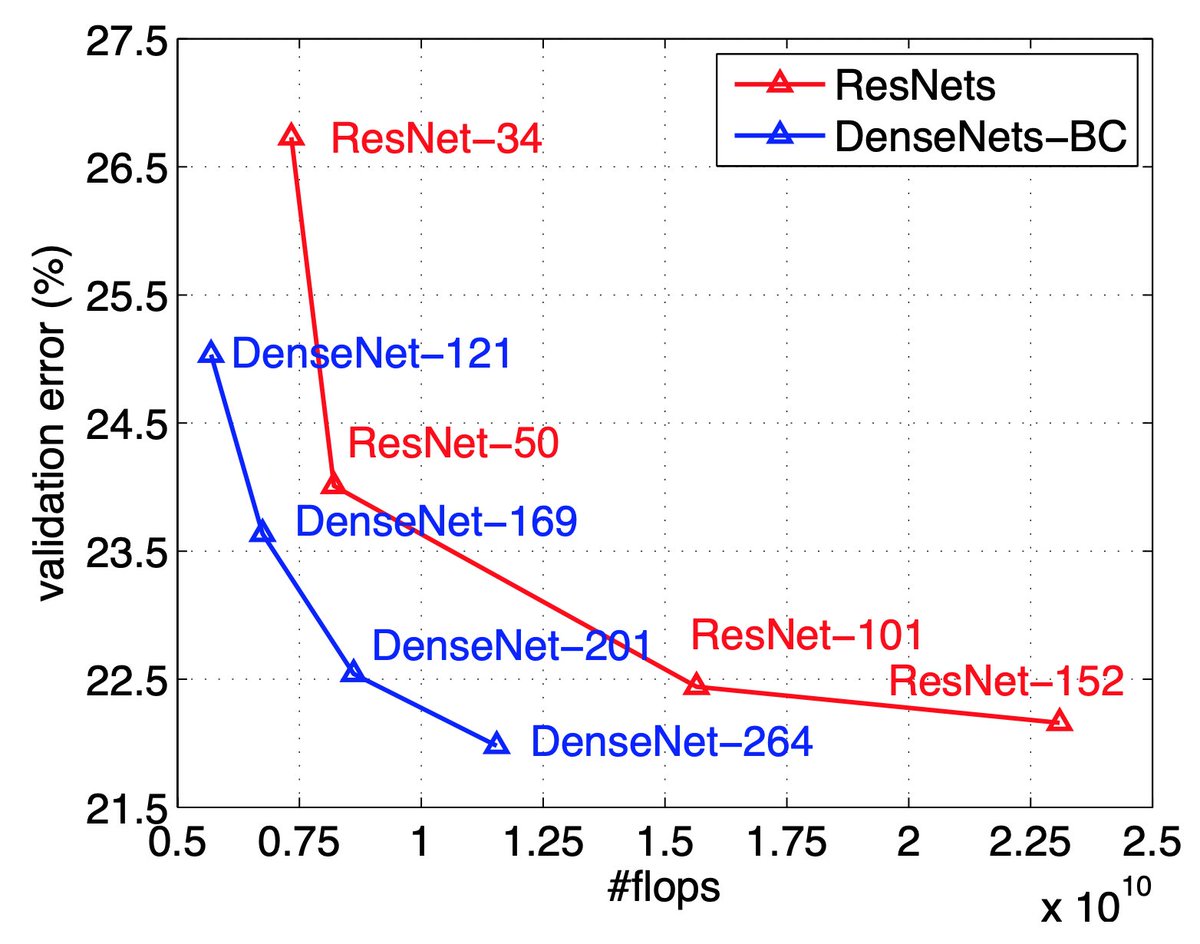

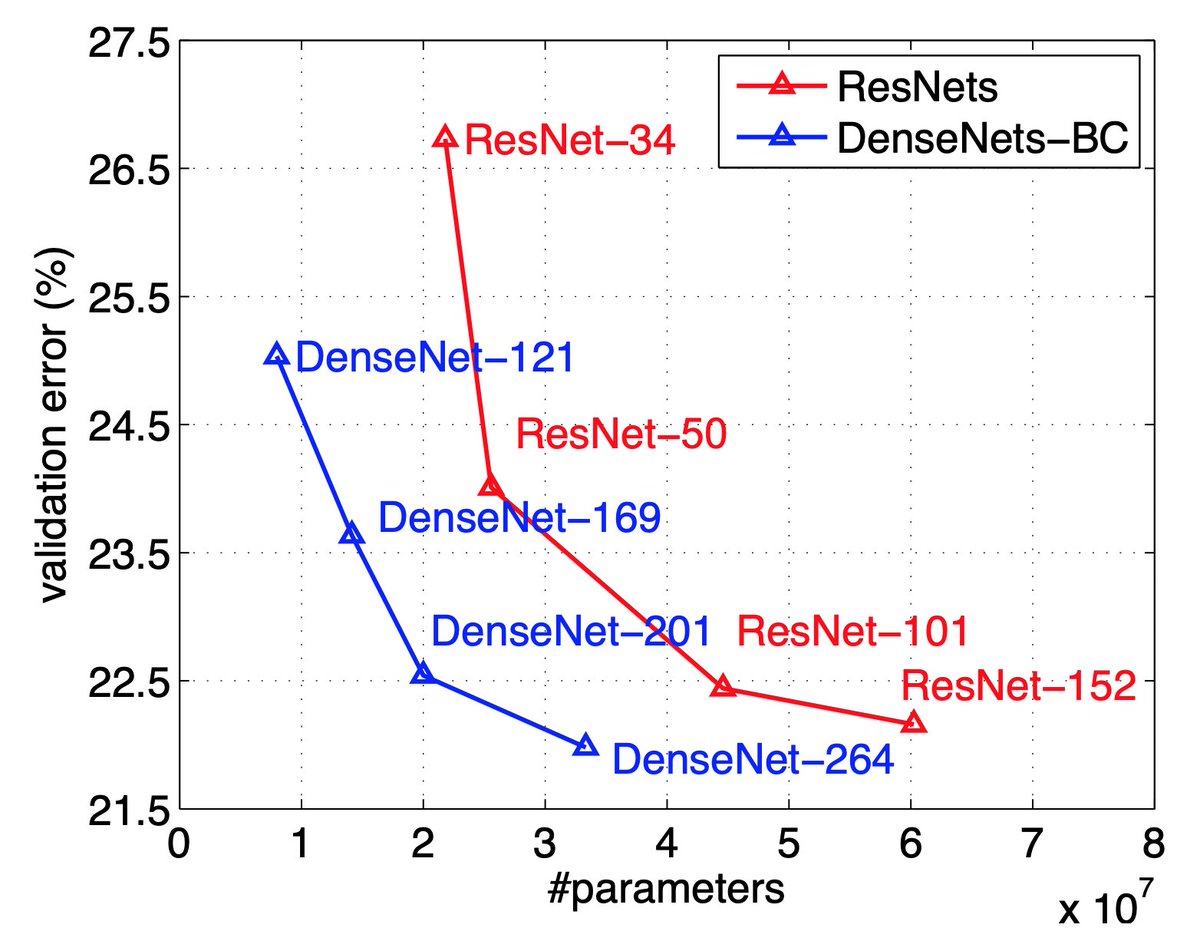

Sharing the feature maps between layers also means that the overall size of the network can be reduced. Indeed, DenseNet is able to achieve accuracy similar to ResNet with about half the number of parameters.

Generalization ✅

A nice side effect of having a smaller network is that it is less prone to overfitting. Especially when no data augmentation is performed, DenseNet achieves significantly better results than other methods.

Results 🏆

DenseNet is tested on several benchmark datasets (including ImageNet) and either beats all other methods or achieves results comparable to the state of the art while using far fewer computational resources.

Conclusion 🏁

DenseNet is an architecture where each layer is able to reuse the information from all previous layers. This results in a network that is smaller, faster to train, and better at generalizing.

It is implemented in all major DL frameworks: PyTorch, Keras, TensorFlow, etc.

Further reading 📖

- Original article: https://arxiv.org/abs/1608.06993

- Extended version in TPAMI: http://www.gaohuang.net/papers/DenseNet_Journal.pdf

- Code: https://github.com/liuzhuang13/DenseNet
