Here's @revanhoe and @adanielescu talking about Gender Inclusive Conversational AIs. (And parallels to other Biases)

This sounds like it will be a complicated and nuanced, yet important, topic.

(I can't keep up - I'm sorry!)

We have evidence of gender bias:

- Devices work better for men

- Personalities display gender stereotypes

- Harmful behavior

"[A]t least 5% of interactions [with chatbots] were unambiguously sexually explicit."

When interactions were flirtatious or worse - the chatbots often encouraged this.

So why is it harmful that our chatbots are female presenting?

Because they reinforce the stereotype of "barking orders at women".

But we don't need to gender our things.

We have an opportunity to do better...

(cue @adanielescu)

Most people associate a gender with the device based on the voice they hear. But a non-binary voice is sometimes heard as "strange".

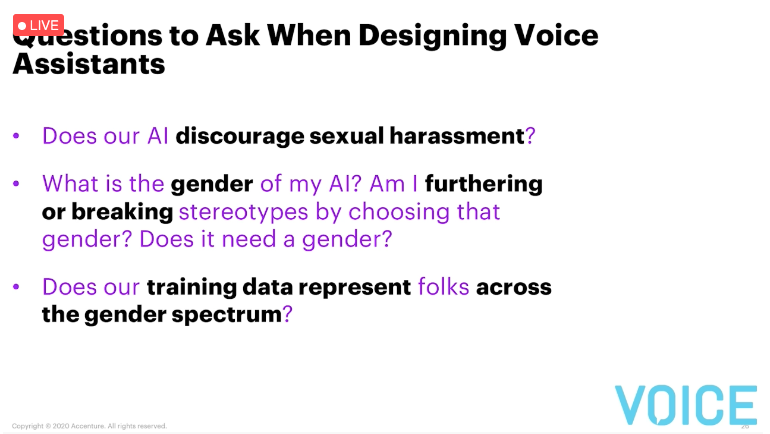

There isn't a straightforward solution. But this is part of it.

The notion of differing word choices is *very* interesting to me. I'm wondering how much we should be varying based on the context of the person asking... or if there is a more neutral way to create replies. But it sounds like not yet. (Can't find a handle for Sharone, if they have one)

Big takeaways:

- Be deliberate in our thinking about gender and voice agents

- Be active in discouraging sexual harassment

- Be inclusive to capture everyone's needs and represent them

I know I thought long and hard about which voice to use when working on @VodoDrive. I initially went with a male-sounding voice, since I didn't want a stereotypical image of a boss yelling at a secretary for numbers, but I have elaborate plans to make that highly tailorable.

!["[A]t least 5% of interactions [with chatbots] were unambiguously sexually explicit." When interactions were flirtatious or worse - the chatbots often encourage this.](https://pbs.twimg.com/media/EkYkmEnXsAIgnpS.png)