Folks who want to use AI/ML for good generally think of things like predictive models, but actually... smart methods for extracting data from forms would do more for journalism, climate science, medicine, democracy etc. than almost any other application. A THREAD.

1/x

Here's how form extraction could help climate science

https://twitter.com/ed_hawkins/status/1167769410238595072

2/x

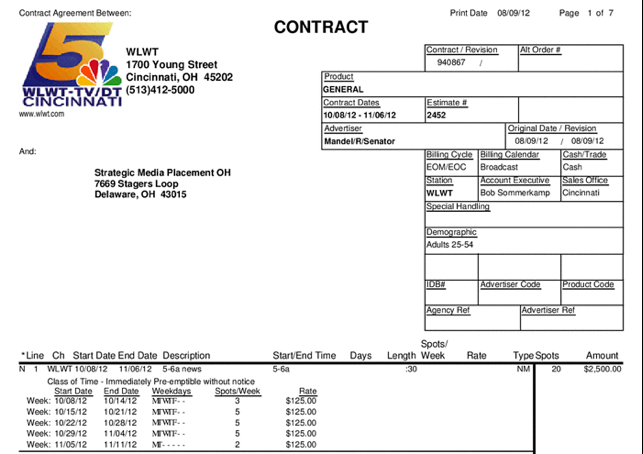

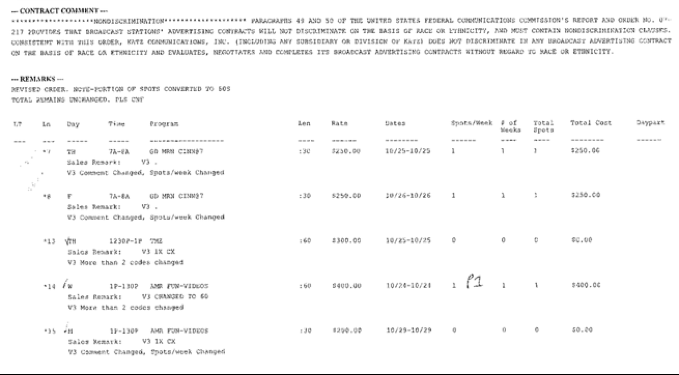

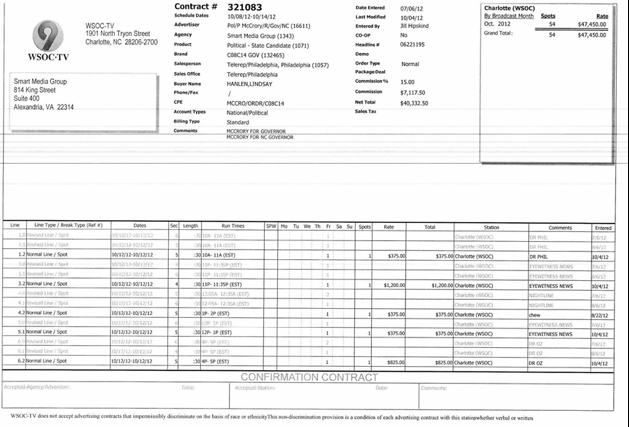

Here's an example of how form extraction could help journalism: reading through the FCC political ad disclosure PDFs, which are -- to understate it -- a mess.

http://jonathanstray.com/extracting-campaign-finance-data-from-gnarly-pdfs-using-deep-learning

3/x

Here's an incredibly hard form extraction problem that is core to democracy and human rights: digitizing the hand-written text + photos archive of the Guatemalan secret police https://www.nationalgeographic.com/photography/proof/2017/07/archive-of-the-national-police-guatemala/

4/x

Here's what I know about solving the form extraction problem. First, the basics:

- Tesseract 4.0 is near-SOTA OCR in 100+ languages, you probably want to start here https://github.com/tesseract-ocr/tesseract

- PDFPlumber will spit out tokens with their bounding boxes https://github.com/jsvine/pdfplumber

5/x
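
To make "tokens with their bounding boxes" concrete, here is a minimal sketch of a first thing you might do with them: regroup the tokens into reading-order lines. The sample `words` list is hand-written in the shape that pdfplumber's `page.extract_words()` returns (dicts with `text`, `x0`, `x1`, `top`, `bottom`); the values, the `group_into_lines` helper and the `y_tolerance` parameter are assumptions for illustration, not part of any library.

```python
# Hand-written sample in the shape of pdfplumber's page.extract_words() output.
# The coordinates are made up for illustration.
words = [
    {"text": "Total", "x0": 50.0, "x1": 80.0, "top": 100.2, "bottom": 110.2},
    {"text": "Advertiser:", "x0": 50.0, "x1": 110.0, "top": 80.0, "bottom": 90.0},
    {"text": "$1,200.00", "x0": 300.0, "x1": 350.0, "top": 100.9, "bottom": 110.9},
    {"text": "ACME", "x0": 120.0, "x1": 150.0, "top": 80.4, "bottom": 90.4},
]


def group_into_lines(words, y_tolerance=3.0):
    """Group tokens whose top edges are within y_tolerance points into one line.

    Hypothetical helper: each line is anchored on its first (topmost) token.
    """
    lines = []
    for word in sorted(words, key=lambda w: w["top"]):
        if lines and abs(word["top"] - lines[-1][0]["top"]) <= y_tolerance:
            lines[-1].append(word)
        else:
            lines.append([word])
    # Within each line, read the tokens left to right.
    return [
        " ".join(tok["text"] for tok in sorted(line, key=lambda t: t["x0"]))
        for line in lines
    ]


lines = group_into_lines(words)
print(lines)
```

With pdfplumber installed, `words` could instead come from something like `pdfplumber.open(path).pages[0].extract_words()`; the grouping step stays the same.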

If you have a simple enough table, and only one type of form layout, you may be able to use PDFPlumber or Tabula to solve the problem directly

https://tabula.technology/

https://github.com/jsvine/pdfplumber#extracting-tables

6/x
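
Why does "only one type of form layout" matter so much? Because then the column positions are fixed and you can hard-code them. A rough sketch of that idea, working on tokens in the same shape PDFPlumber produces: `COLUMN_STARTS`, `rows_from_tokens` and the sample tokens are all made up for illustration, and this is not how PDFPlumber or Tabula detect tables internally.

```python
from bisect import bisect_right

# Hypothetical left edges (in PDF points) of the three columns in one known layout.
COLUMN_STARTS = [0.0, 200.0, 320.0]

# Made-up tokens in the shape of pdfplumber's extract_words() output.
tokens = [
    {"text": "Program", "x0": 10.0, "top": 50.0},
    {"text": "Spots", "x0": 205.0, "top": 50.5},
    {"text": "Rate", "x0": 325.0, "top": 50.2},
    {"text": "Evening", "x0": 10.0, "top": 70.0},
    {"text": "News", "x0": 60.0, "top": 70.3},
    {"text": "4", "x0": 210.0, "top": 70.1},
    {"text": "$300", "x0": 325.0, "top": 70.0},
]


def rows_from_tokens(tokens, column_starts=COLUMN_STARTS, y_tolerance=3.0):
    """Bucket tokens into rows by vertical position and into cells by column start."""
    rows = []
    row_top = None
    for tok in sorted(tokens, key=lambda t: (t["top"], t["x0"])):
        # Start a new row when the token is clearly below the current row.
        if row_top is None or tok["top"] - row_top > y_tolerance:
            rows.append([[] for _ in column_starts])
            row_top = tok["top"]
        # The column is the last one whose left edge is at or before the token.
        col = max(bisect_right(column_starts, tok["x0"]) - 1, 0)
        rows[-1][col].append(tok)
    return [
        [" ".join(t["text"] for t in sorted(cell, key=lambda t: t["x0"])) for cell in row]
        for row in rows
    ]


table = rows_from_tokens(tokens)
print(table)
```

The moment a second layout shows up, the hard-coded column edges stop working, which is where the harder approaches later in the thread come in.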

There are several commercial products that may solve your form extraction needs

https://web.altair.com/monarch-pdf-to-excel

https://rossum.ai/

https://aws.amazon.com/textract/

7/x

If that doesn't work (or it's too expensive, or you need an open solution), then it's research time! First off, what you want are "multi-modal" approaches that consider text, geometry, maybe even font weight to understand document structure. E.g. Fonduer https://sing.stanford.edu/site/publications/fonduer-sigmod18.pdf

8/x

Here's some Google work that does representation learning on tokens+geometry input (exactly what PDFPlumber outputs)

- "Representation Learning for Information Extraction from Form-like Documents" https://aclweb.org/anthology/2020.acl-main.580.pdf

9/x
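
To give a feel for what "tokens+geometry input" can look like, here is a small sketch that describes a candidate token by its nearest neighbors and their relative offsets. This is my own illustration of the general idea, not code or a feature set taken from the paper; `neighbor_features`, the page size defaults and the sample tokens are all assumptions.

```python
import math

# Made-up tokens in the shape of pdfplumber's extract_words() output.
tokens = [
    {"text": "Gross", "x0": 50.0, "x1": 90.0, "top": 100.0, "bottom": 110.0},
    {"text": "Amount:", "x0": 95.0, "x1": 145.0, "top": 100.0, "bottom": 110.0},
    {"text": "$4,500.00", "x0": 200.0, "x1": 260.0, "top": 100.0, "bottom": 110.0},
    {"text": "Page", "x0": 500.0, "x1": 530.0, "top": 700.0, "bottom": 710.0},
]


def center(tok):
    """Center point of a token's bounding box."""
    return ((tok["x0"] + tok["x1"]) / 2, (tok["top"] + tok["bottom"]) / 2)


def neighbor_features(tokens, index, k=2, page_width=612.0, page_height=792.0):
    """Return (text, dx, dy) for the k tokens nearest to tokens[index].

    dx and dy are center-to-center offsets as fractions of the page size,
    so negative dx means the neighbor sits to the left of the candidate.
    """
    cx, cy = center(tokens[index])
    scored = []
    for i, tok in enumerate(tokens):
        if i == index:
            continue
        nx, ny = center(tok)
        dx = (nx - cx) / page_width
        dy = (ny - cy) / page_height
        scored.append((math.hypot(dx, dy), tok["text"], dx, dy))
    scored.sort(key=lambda item: item[0])
    return [(text, round(dx, 3), round(dy, 3)) for _, text, dx, dy in scored[:k]]


# Describe the dollar amount by what is printed near it.
features = neighbor_features(tokens, index=2)
print(features)
```

The intuition is that a dollar figure with "Gross" and "Amount:" just to its left is a much better candidate for the gross amount field than one with nothing nearby, and a model can learn that from features like these.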

Here's a graph convolutional neural network approach to form extraction

https://nanonets.com/blog/information-extraction-graph-convolutional-networks/

10/x

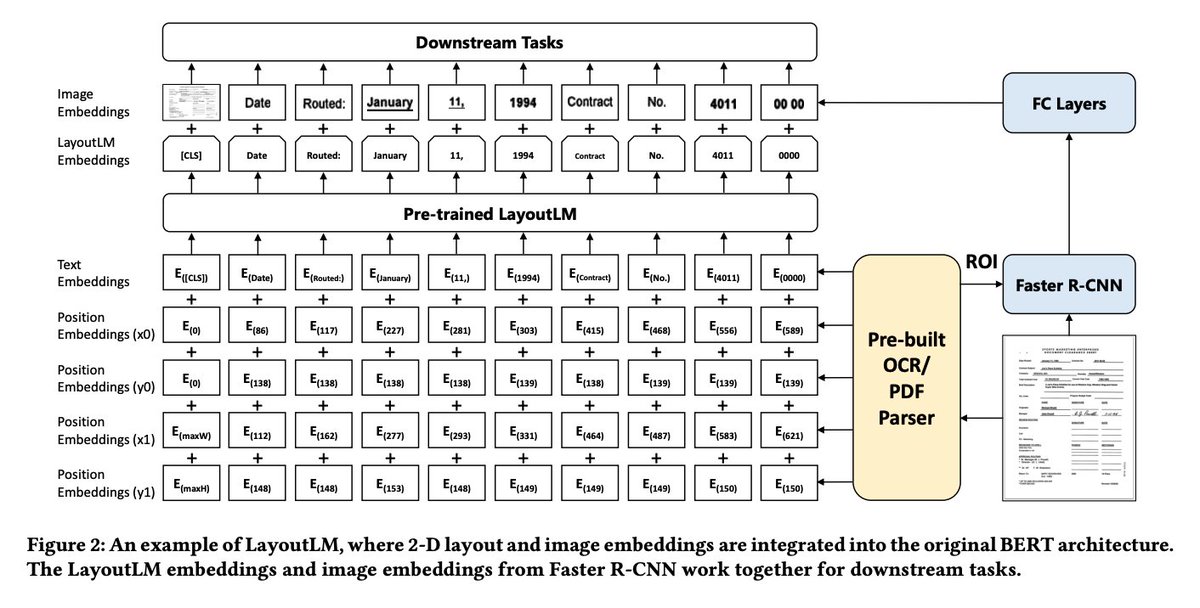

And here is what seems to be the (public?) SOTA of multi-modal form extraction. Uses text + geometry + image embeddings in a BERT model

"LayoutLM: Pre-training of Text and Layout for Document Image Understanding"

https://arxiv.org/abs/1912.13318

11/x
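
One practical detail if you try this: as far as I know, the released LayoutLM models expect each token's bounding box rescaled to a 0-1000 grid, independent of page size. A minimal sketch of that conversion from PDF-point coordinates; `normalize_bbox` is a hypothetical helper name and the US Letter page size in the example is an assumption.

```python
def normalize_bbox(bbox, page_width, page_height):
    """Rescale an (x0, top, x1, bottom) box in page units to a 0-1000 integer grid."""
    x0, top, x1, bottom = bbox
    return [
        int(1000 * x0 / page_width),
        int(1000 * top / page_height),
        int(1000 * x1 / page_width),
        int(1000 * bottom / page_height),
    ]


# A token box on a US Letter page (612 x 792 points), values made up.
box = normalize_bbox((200.0, 100.0, 260.0, 110.0), page_width=612.0, page_height=792.0)
print(box)
```

Each token then goes into the model as its text plus this four-number box, which is how the layout gets in alongside the words.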

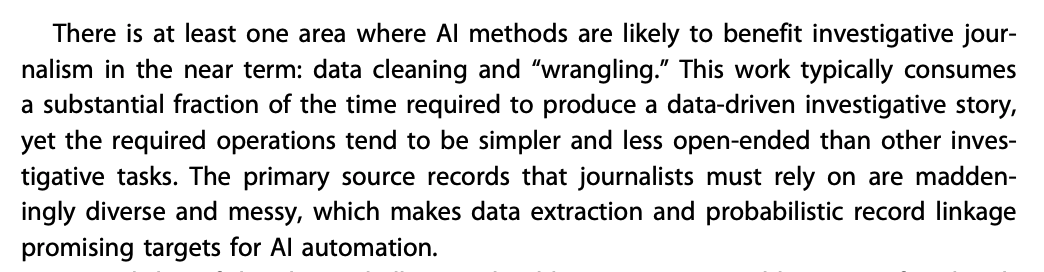

Just in case you're not yet convinced, a longer argument about why form extraction (and data wrangling generally) is likely the most productive application of AI to journalism -- the problem is well-specified, and data prep takes a LOT of time.

https://www.researchgate.net/profile/Jonathan_Stray/publication/334182207_Making_Artificial_Intelligence_Work_for_Investigative_Journalism/links/5e41b987a6fdccd9659a1737/Making-Artificial-Intelligence-Work-for-Investigative-Journalism.pdf

12/x

Finally, a big shoutout to the deepform team: @metaphdor, @moredataneeded, @danielfennelly, Andrea Lowe and Gray Davidson. We've been working hard on extracting the FCC political TV ad PDFs, "public" info that costs $100k to buy as clean data. Code:

https://github.com/project-deepform/deepform

13/x

If you are an ML engineer who would like to get involved in form-extraction-for-democracy, @weights_biases is very kindly hosting a public benchmark for the FCC ad data. Can you advance the open state of the art and beat our baseline? We'd love that!

https://wandb.ai/deepform/political-ad-extraction/benchmark

~END~
