One of my PhD students, Lyra (@Lyra_Wang), proposed using machine learning to significantly reduce the cost of #methane emissions mitigation.

I didn't think it would work. She still wanted to try.

And here's what happened.

As background, #methane leakage from O&G is a big issue. Leaks are also hard to find:

1) Leaks cannot be predicted

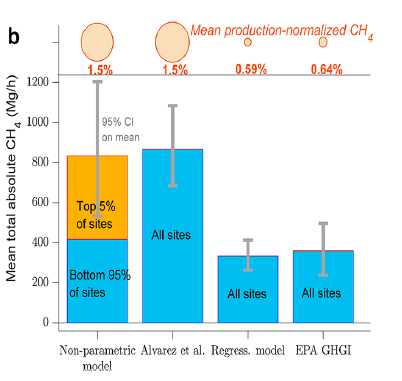

2) There are super-emitters - the top 5% of sites account for *half* of all emissions (see fig.)

3) We couldn't predict which sites would become super-emitters

So what did governments do? They required operators to check *every* single site for leaks. These are called leak detection and repair programs. So, operators go site by site, in random order, to find leaks.

They work.

But they're also expensive. https://iopscience.iop.org/article/10.1088/1748-9326/ab6ae1/meta

. @Lyra_Wang asked if we can predict which sites become super-emitters using public data. Consider this:

You are an operator with 200 sites, 20 of which are super-emitters. Surveying in random order, it will take 40 days to finish the survey and ~20 days to find 50% of emissions.
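The ~20 days figure follows from simple arithmetic: in a random order, the share of emissions found tracks, on average, the share of sites surveyed. A minimal sketch of that calculation, assuming a constant survey pace (the function name and the constant-pace assumption are illustrative, not from the study):

```python
import math

def days_to_fraction_random(n_sites, survey_days, fraction):
    """Expected days for a random-order survey to find `fraction` of emissions.

    Assumes a constant pace of n_sites / survey_days sites per day, and that
    in random order the expected share of emissions found equals the share
    of sites visited.
    """
    sites_per_day = n_sites / survey_days
    sites_needed = fraction * n_sites
    return math.ceil(sites_needed / sites_per_day)

# The example operator: 200 sites surveyed over 40 days (5 sites per day).
days_half = days_to_fraction_random(200, 40, 0.5)
days_all = days_to_fraction_random(200, 40, 1.0)
```

Any single random survey can get lucky or unlucky with where the super-emitters fall in the order, so this is an average, not a guarantee.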

Turns out, you can use machine learning to choose a survey order instead of visiting sites at random. You ask the model to estimate the probability that each site is a super-emitter, then survey sites from highest probability to lowest.
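To make the ranking idea concrete, here is a toy sketch. It is not the model from this work: the probabilities and emissions below are made-up numbers, and the helper names `survey_order` and `day_reaching` are hypothetical. In practice the probabilities would come from a trained classifier.

```python
import math

def survey_order(probabilities):
    """Return site indices sorted from highest to lowest predicted probability."""
    return sorted(range(len(probabilities)),
                  key=lambda i: probabilities[i], reverse=True)

def day_reaching(order, emissions, sites_per_day, fraction=0.5):
    """First survey day on which cumulative emissions found reach `fraction` of the total."""
    target = fraction * sum(emissions)
    found = 0.0
    for visited, site in enumerate(order, start=1):
        found += emissions[site]
        if found >= target:
            return math.ceil(visited / sites_per_day)
    return math.ceil(len(order) / sites_per_day)

# Hypothetical toy data: 10 sites, one super-emitter (site 7).
emissions = [1, 1, 1, 1, 1, 1, 1, 20, 1, 1]
probabilities = [0.1, 0.2, 0.1, 0.3, 0.1, 0.2, 0.1, 0.9, 0.4, 0.1]

ml_order = survey_order(probabilities)      # ranked by predicted probability
naive_order = list(range(len(emissions)))   # sites visited in listed order

ml_day = day_reaching(ml_order, emissions, sites_per_day=2)
naive_day = day_reaching(naive_order, emissions, sites_per_day=2)
```

The benefit depends entirely on how well the predicted probabilities line up with the true super-emitters; a poorly calibrated model could do no better than a random order.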

Here's what we found:

In the ML-predicted order, you mitigate 50% of emissions by day 7. If you survey randomly, it takes 12 days to achieve 50% mitigation. If you survey based on production volumes, it takes 10 days.

The cost to achieve 50% mitigation falls from $82/tCO2e to $49/tCO2e. That's huge!

Obviously, this is preliminary. We're trying to extend the model to all of the US, improve predictive power, & add other variables (e.g., time since last survey).

ML is powerful, but whether it will be useful in this context remains to be seen.

Feedback & new ideas are welcome!
