"Scaling up fact-checking using the wisdom of crowds"

In this working paper, we find that 10 laypeople rating just headlines match the performance of professional fact-checkers researching full articles, using a set of URLs flagged by an internal FB algorithm

https://psyarxiv.com/9qdza/

Fact-checking could help fight misinformation online:

➤ Platforms can downrank flagged content so that fewer users see it

➤ Corrections can reduce false beliefs (forget backfires: e.g. https://link.springer.com/article/10.1007/s11109-018-9443-y by @thomasjwood @EthanVPorter)

🚨 But there is a BIG problem! 🚨

Professional fact-checking doesn't SCALE

E.g. last Jan, FB's US partners fact-checked just 200 articles/month!

https://thehill.com/policy/technology/478896-critics-fear-facebook-fact-checkers-losing-misinformation-fight

Even if ML expands fact-check reach, there's a desperate need for scalability

Fact-checkers (FCs) are also perceived as having a liberal bias, which creates political issues

Here's where the *wisdom of crowds* comes in

Crowd judgments have been shown to perform well in guessing tasks, medical diagnoses, and market predictions

Plus, politically balanced crowds can't be accused of bias

BUT can crowds actually do a good job of evaluating news articles?

We set out to answer this question

It was critical to use *representative* articles; otherwise it's unclear whether the findings would generalize

So we partnered with the FB Community Review team, and got 207 URLs flagged for fact-checking by an internal FB algorithm https://www.axios.com/facebook-fact-checking-contractors-e1eaeb8b-54cd-4519-8671-d81121ef1740.html

Next we had 3 professional fact-checkers research each article & rate its accuracy

First surprise: They disagreed more than you might expect!

The avg correlation between the fact-checkers' ratings was .62

On half the articles, 1 FC disagreed with the other 2; on the other half, all 3 agreed
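For anyone who wants to run the same kind of agreement check on their own ratings, here is a minimal sketch of the two numbers above: the average pairwise correlation between raters and the share of articles with a unanimous verdict. The data and all function names (`pearson`, `mean_pairwise_correlation`, `share_unanimous`) are made up for illustration; this is not the paper's analysis code.

```python
from itertools import combinations
from math import sqrt


def pearson(x, y):
    """Pearson correlation between two equal-length lists of numbers."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sd_x = sqrt(sum((a - mean_x) ** 2 for a in x))
    sd_y = sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (sd_x * sd_y)


def mean_pairwise_correlation(raters):
    """Average Pearson correlation over every pair of raters."""
    pairs = list(combinations(raters, 2))
    return sum(pearson(a, b) for a, b in pairs) / len(pairs)


def share_unanimous(raters):
    """Fraction of articles on which every rater chose the same category."""
    per_article = list(zip(*raters))
    return sum(len(set(votes)) == 1 for votes in per_article) / len(per_article)


# Hypothetical data: 3 fact-checkers x 6 articles (NOT the study's ratings).
fc_scores = [
    [1, 2, 6, 7, 3, 5],
    [1, 3, 6, 6, 2, 5],
    [2, 2, 5, 7, 4, 5],
]
fc_categories = [
    ["false", "false", "true", "true", "false", "true"],
    ["false", "false", "true", "true", "false", "true"],
    ["false", "true", "true", "true", "false", "false"],
]

print(round(mean_pairwise_correlation(fc_scores), 2))
print(share_unanimous(fc_categories))
```

With three raters there are three pairs, so the first number is the mean of three correlations.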

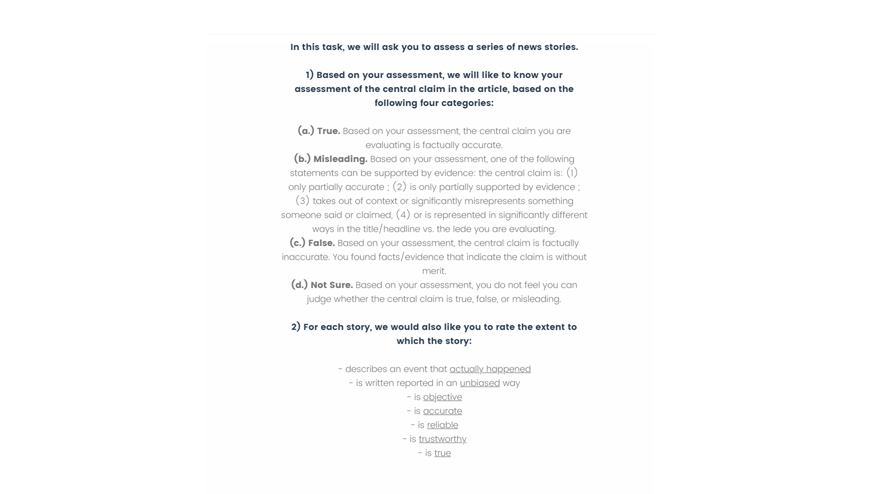

Then we recruited N=1,128 laypeople from MTurk to rate the same articles (20 per Turker)

For scalability, they just read & rated each headline+lede, not the full article

Half were shown the URL domain; the other half got no source info

Our Q: How well do layperson ratings predict fact-checker ratings?

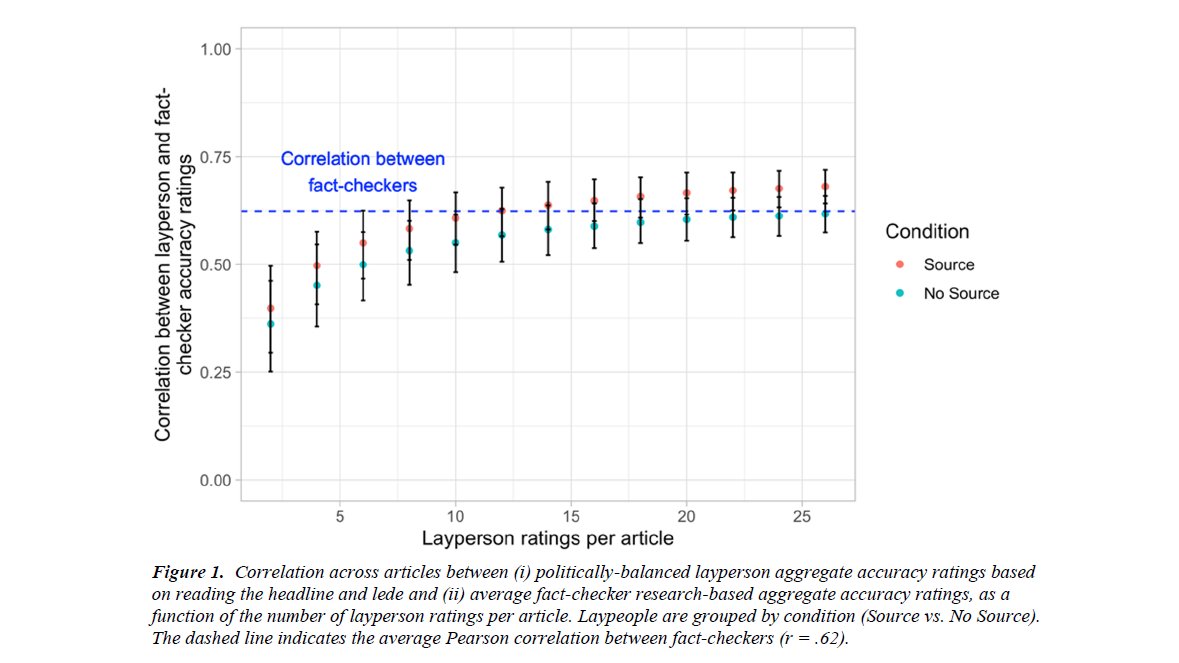

We created politically balanced crowds & correlated their avg ratings with the avg fact-checker ratings

The crowd does quite well:

With as few as 10 laypeople, the crowd is as correlated with the average fact-checker rating as the fact-checkers' ratings are with each other!!
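To make the crowd analysis concrete, here is a rough sketch on simulated data: draw a crowd of k raters per article (half Democrats, half Republicans), average their ratings, and correlate those averages with the average fact-checker rating. Everything here is an assumption for illustration: the noise levels, the 20 raters per party, the 1-7 scale, and the names `balanced_crowd_mean`, `crowd_fc_correlation`, and `make_fake_articles`. It is not the paper's procedure or data.

```python
import random
from math import sqrt


def pearson(x, y):
    """Pearson correlation between two equal-length lists of numbers."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sd_x = sqrt(sum((a - mean_x) ** 2 for a in x))
    sd_y = sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (sd_x * sd_y)


def balanced_crowd_mean(dem, rep, k, rng):
    """Mean rating of a crowd of k: k/2 sampled from each party's raters."""
    half = k // 2
    crowd = rng.sample(dem, half) + rng.sample(rep, half)
    return sum(crowd) / len(crowd)


def crowd_fc_correlation(articles, k, rng):
    """Correlate balanced-crowd averages with average fact-checker ratings."""
    crowd = [balanced_crowd_mean(a["dem"], a["rep"], k, rng) for a in articles]
    fc = [sum(a["fc"]) / len(a["fc"]) for a in articles]
    return pearson(crowd, fc)


def make_fake_articles(n, rng):
    """Simulated ratings: laypeople are noisier than fact-checkers (assumed)."""
    articles = []
    for _ in range(n):
        quality = rng.uniform(1, 7)  # hypothetical latent accuracy
        articles.append({
            "fc": [quality + rng.gauss(0, 1.0) for _ in range(3)],
            "dem": [quality + rng.gauss(0, 2.5) for _ in range(20)],
            "rep": [quality + rng.gauss(0, 2.5) for _ in range(20)],
        })
    return articles


rng = random.Random(0)
articles = make_fake_articles(200, rng)
for k in (2, 10, 26):
    print(k, round(crowd_fc_correlation(articles, k, rng), 2))
```

In a simulation like this, averaging cancels out individual noise, so the correlation tends to rise as k grows; how fast it rises on real ratings is an empirical question.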

Providing the article's publisher domain improved crowd performance (a bit)

Consistent with the suggestion that source info only helps when a mismatch exists between headline plausibility & source: these headlines were mostly implausible, but some had trusted sources https://misinforeview.hks.harvard.edu/article/emphasizing-publishers-does-not-reduce-misinformation/

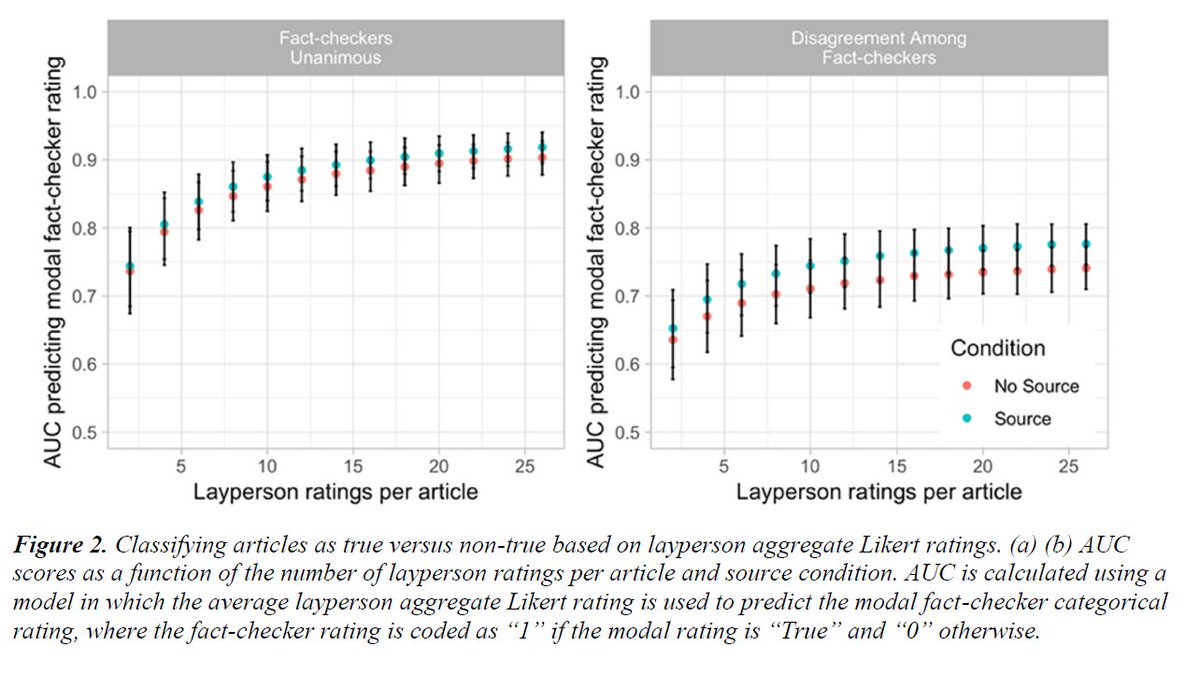

Next, we used laypeople's ratings to predict the modal categorical rating fact-checkers gave to each headline (1 = True, 0 = Not True)

Overall AUC=.86

AUC>0.9 for articles where fact-checkers were unanimous

AUC>0.75 for articles where one FC disagreed with the other 2

Pretty damn good!
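For readers less familiar with AUC: it is the probability that a randomly chosen "True" article gets a higher crowd score than a randomly chosen "Not True" one, with ties counted as half. A minimal sketch of that pairwise definition, using made-up scores and hypothetical helper names (`majority_label`, `auc`); the modal rating is simplified here to a majority vote over binary verdicts:

```python
def majority_label(votes):
    """Modal binary rating among fact-checkers (1 = True, 0 = Not True)."""
    return 1 if sum(votes) > len(votes) / 2 else 0


def auc(scores, labels):
    """AUC via pairwise comparison of positive vs negative scores."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    # A positive scoring above a negative is a win; a tie counts as half.
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Hypothetical data: 5 articles, 3 fact-checker verdicts each,
# plus the crowd's average rating per article (NOT the study's data).
fc_votes = [[1, 1, 1], [0, 0, 0], [0, 1, 0], [1, 1, 0], [1, 1, 1]]
crowd_scores = [5.1, 2.0, 4.0, 3.0, 6.2]

labels = [majority_label(v) for v in fc_votes]
print(labels)
print(auc(crowd_scores, labels))
```

An AUC of 0.5 means the crowd score is no better than chance at ranking articles, and 1.0 means every "True" article outscores every "Not True" one.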

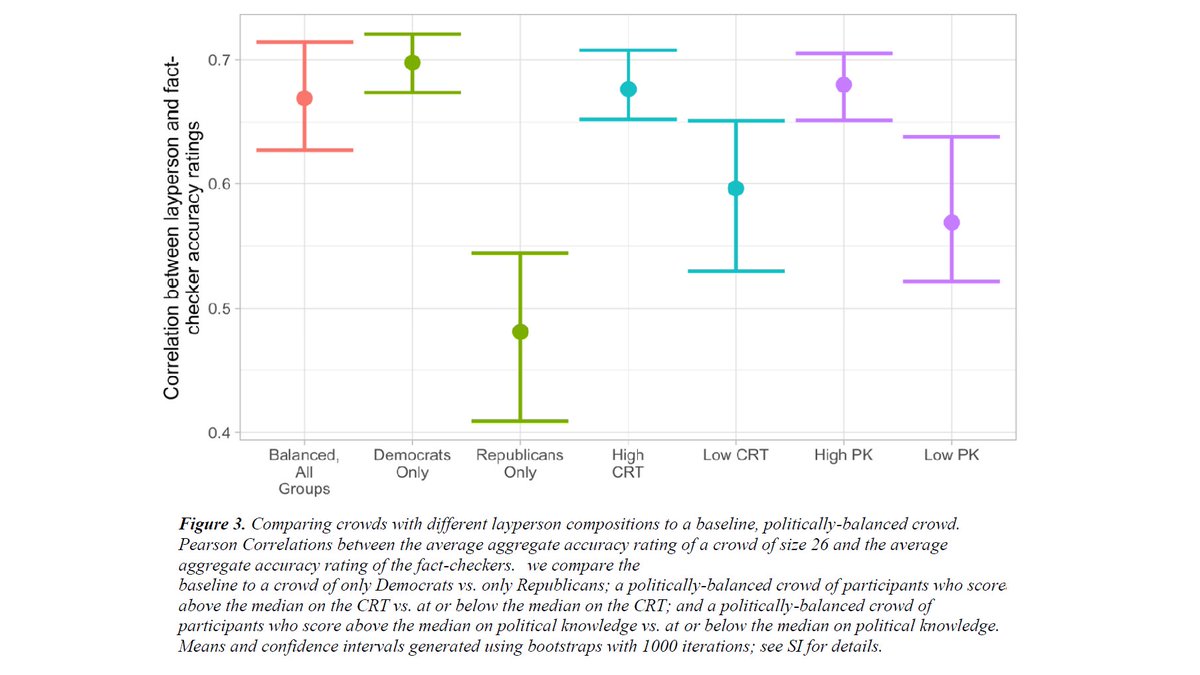

Finally, we asked if some crowds did better than others

Answer: Yes & no

Crowds that were 1) Dem 2) high CRT (Cognitive Reflection Test) 3) high political knowledge (PK) did better than their 1) Rep 2) low CRT 3) low PK counterparts, but DIDN'T outperform the overall crowd!

The crowd needn't be all experts to match expert judgment

Caveats:

1) Individuals still fell for misinfo, but *crowds* did well

2) Need to protect against coordinated attacks (e.g. randomly poll users, not Reddit-like)

3) Not a representative sample, but the point is that some laypeople can do well (FB could hire Turkers!)

4) This was pre-COVID

Overall, we think that crowdsourcing is a really promising avenue for platforms trying to scale their fact-checking program!

Led by @_JenAllen @AaArechar with @GordPennycook

Thanks to the FB Community Review team and others who gave comments

Would love to hear your thoughts too! 🎉
