Here's a small experiment: A #GPT3 Travel Agent.

http://edwardbenson.com/ai-travel-agent

Type in a city and it will give you a list of tourism suggestions. I made it to see how easily you can perform structured information extraction from language models.

Small Thread

👇

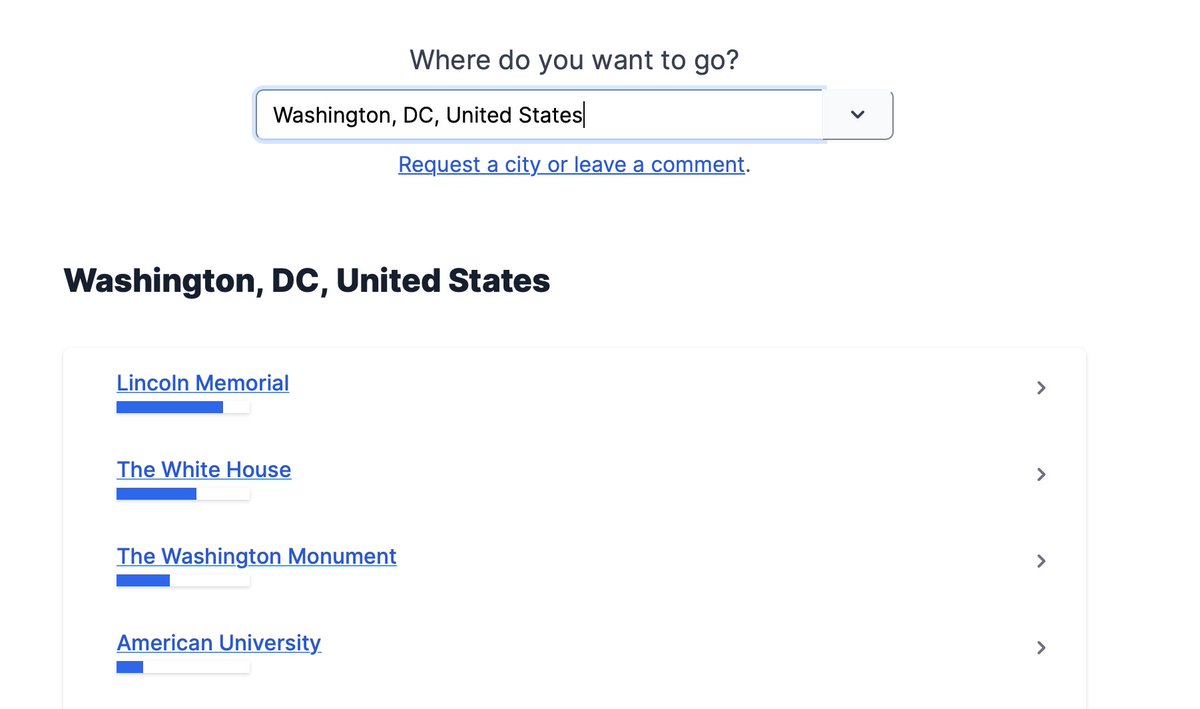

Here are example results for Washington, DC.

https://edwardbenson.com/ai-travel-agent/Washington-DC-United-States

Results are weighted based on how many times #GPT3 generated that suggestion. These suggestions look decent to me, despite almost no parameter tuning on my part and just a bit of lightweight post-processing.
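To make the weighting idea concrete, here is a minimal sketch of counting how often a suggestion recurs across several completions. It is not the code behind the demo: the `normalize` and `weight_suggestions` helpers, the normalization rules, and the choice to count a suggestion at most once per completion are all assumptions for illustration.

```python
import re
from collections import Counter

# Hypothetical helper: strips list markers like "1." or "-" and trailing
# punctuation so near-duplicate suggestions collapse to the same key.
_LIST_MARKER = re.compile(r"^\s*(?:[-*•]|\d+[.)])\s+")


def normalize(suggestion: str) -> str:
    text = _LIST_MARKER.sub("", suggestion).strip().rstrip(".!")
    return " ".join(text.lower().split())


def weight_suggestions(completions: list[list[str]]) -> list[tuple[str, int]]:
    """Return (suggestion, weight) pairs, most frequent first.

    Assumption: each inner list holds the suggestions parsed from one
    model completion, and a suggestion counts once per completion.
    """
    counts: Counter[str] = Counter()
    for completion in completions:
        # dict.fromkeys de-duplicates while keeping first-seen order.
        unique = dict.fromkeys(n for n in map(normalize, completion) if n)
        counts.update(list(unique))
    return counts.most_common()
```

Calling `weight_suggestions` on the parsed output of many generations for one city gives a ranked list, where the weight is simply the number of completions that mentioned the suggestion.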

Building this made me think a lot about online vs. offline AI.

We tend to think of AI-powered products as being connected live to the #AI. This makes sense when dynamic context is important, but it also has problems: it's difficult to host, expensive to scale, and hard to quality-control.

This demo, by contrast, has no AI in the serving loop.

Everything is pre-computed offline with a set of scripts and data files that can be version & quality controlled.

The code ends up looking a bit like a static web hosting framework, but for AI.
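A rough sketch of what "static hosting, but for AI" could look like: an offline build step writes one data file per city, and serving is just a file read. Everything here is hypothetical (the `slugify`, `build_site`, and `serve` names, the JSON layout, and the slug rule, which merely imitates the URL shape above); the `generate` callable stands in for whatever offline script calls the model.

```python
import json
from pathlib import Path
from typing import Callable, Iterable


def slugify(city: str) -> str:
    # Assumed rule: drop commas and join the words with hyphens.
    return "-".join(city.replace(",", " ").split())


def build_site(
    cities: Iterable[str],
    generate: Callable[[str], list],
    out_dir: Path,
) -> list[Path]:
    """Offline step: run the (expensive) generator once per city and
    write the result to a plain file that can be reviewed and versioned."""
    out_dir.mkdir(parents=True, exist_ok=True)
    written = []
    for city in cities:
        path = out_dir / f"{slugify(city)}.json"
        payload = {"city": city, "suggestions": generate(city)}
        path.write_text(
            json.dumps(payload, indent=2, sort_keys=True), encoding="utf-8"
        )
        written.append(path)
    return written


def serve(city_slug: str, out_dir: Path) -> dict:
    """Online step: no model call, only a lookup of precomputed data."""
    return json.loads((out_dir / f"{city_slug}.json").read_text(encoding="utf-8"))
```

Because the output is ordinary files, a bad generation can be caught in review or fixed by hand before it is ever published.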

When the use case supports it, precomputing results like this makes tremendous sense to me.

I suspect we'll see some interesting players in the "AI Content Pipeline" market. Humans will still act as editors and senior writers, while the AI fills the top of the funnel.

This is my first time playing with any sort of automated content generation, and I'm curious what's already out there in the wild.

If you have any suggestions or links I should be looking at, let me know.

Blog version of this thread, where you can also sign up for email updates:

https://edwardbenson.com/2020/10/gpt3-travel-agent
