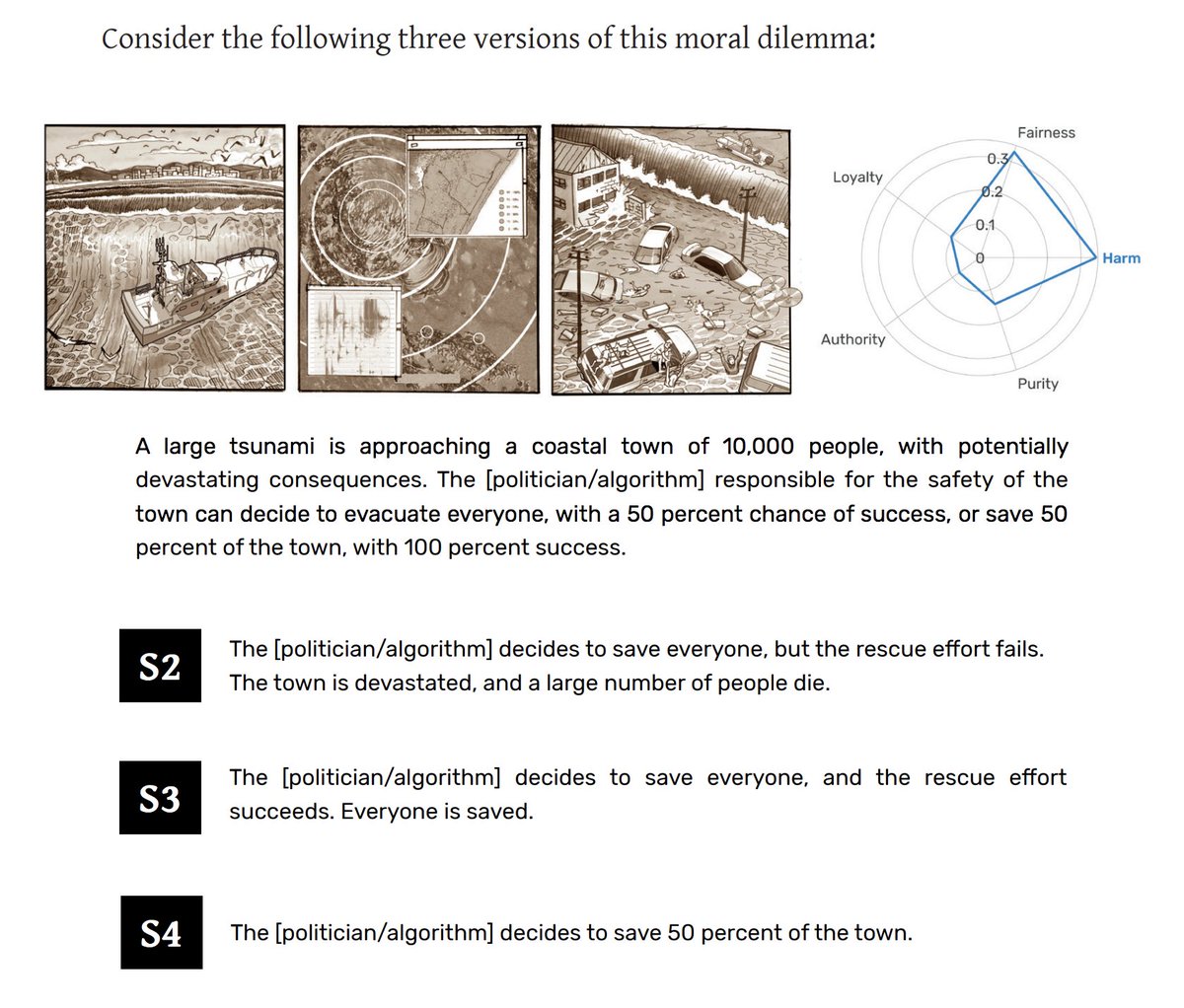

A tsunami is approaching a town of 10,000 people. How would you judge a politician or algorithm that:

a) Tries to save everyone, but fails.

b) Tries to save everyone, and succeeds.

c) Compromises and saves half of the town.

1/

https://www.judgingmachines.com/


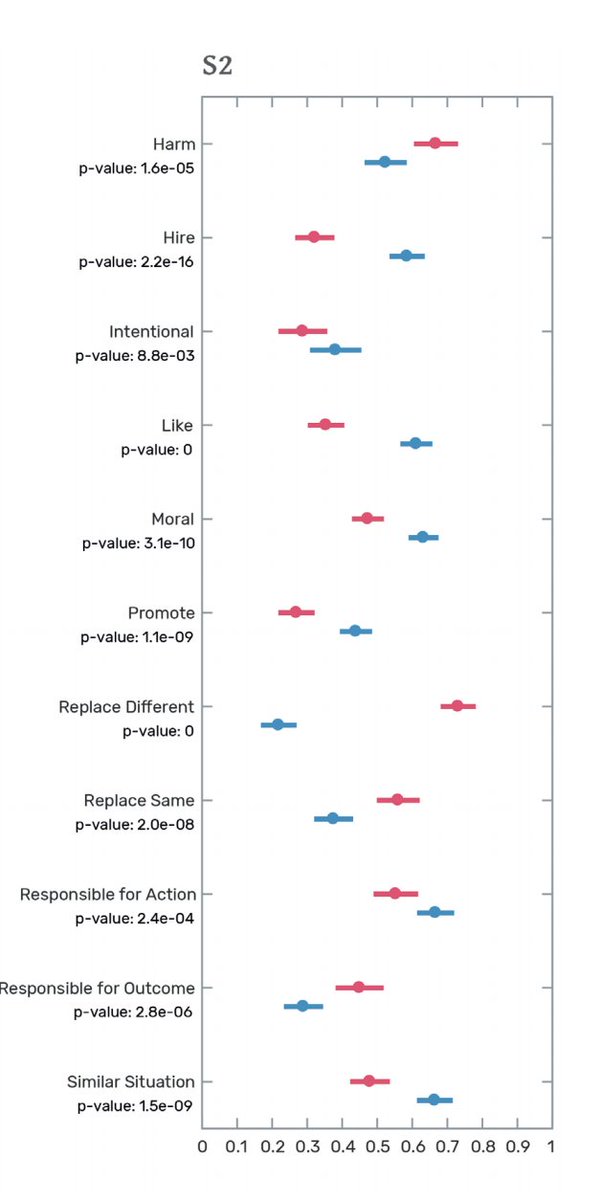

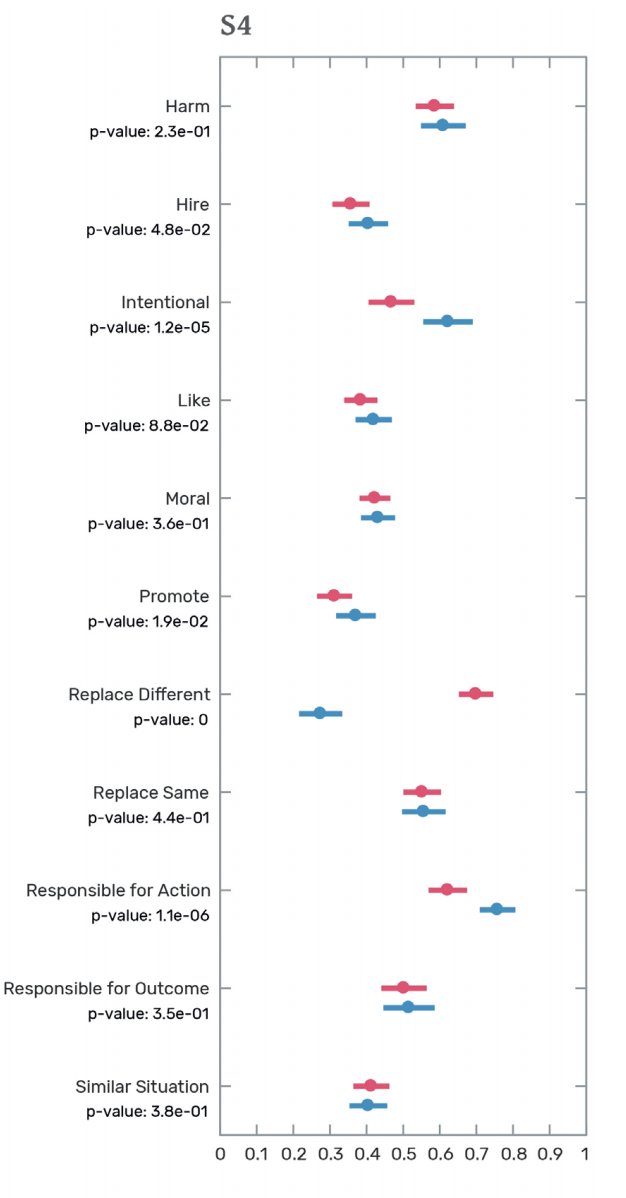

Between 150 and 200 subjects evaluated each scenario. Each subject saw only one of the six conditions: risky success, risky failure, or compromise, presented as either the action of a human or the action of a machine.

2/

When the action involves taking a risk and failing, people evaluate the risk-taking politician more positively than the risk-taking algorithm. They report liking the politician more, and they consider the politician's decision more morally correct.

3/


They also consider the algorithm's action more harmful, and they are more likely to report that they would have done the same when the risky choice is presented as a human action.

4/

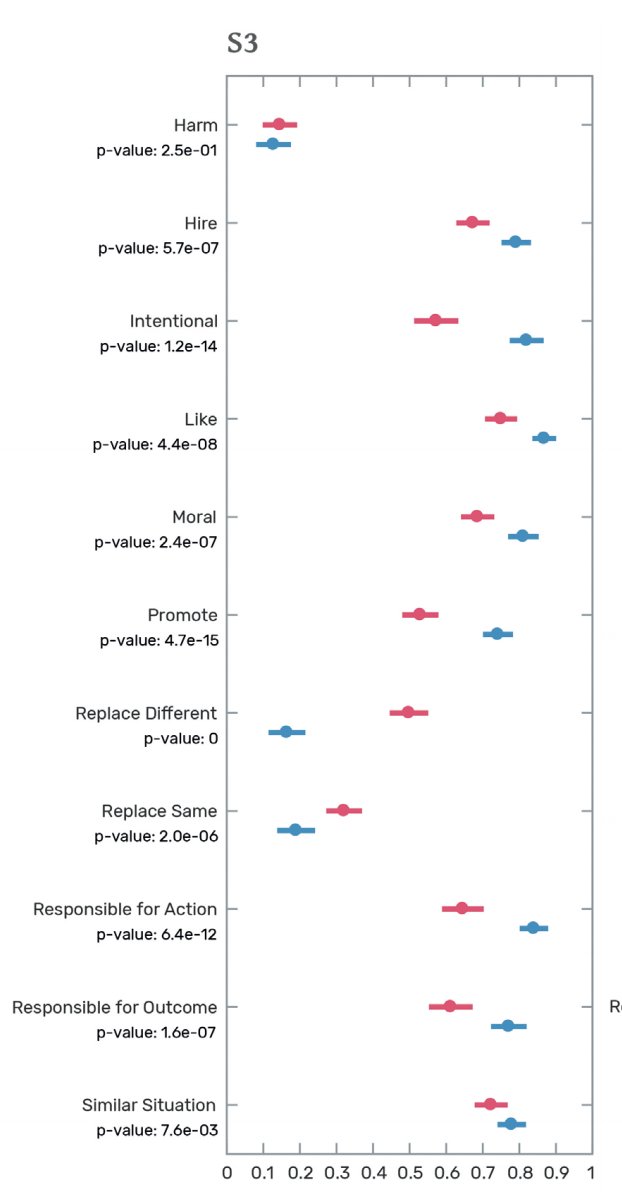

In the scenario where the risk resulted in success, people evaluate the politician more positively than the algorithm. They like the politician more, consider their action more morally correct, and are more likely to want to hire or promote them.

5/


In the compromise scenario, we see almost no difference. People see the politician's action as more intentional, but they rate the politician and the algorithm equally in terms of harm and moral judgment.

6/


But why do we observe such marked differences?

On the one hand, these results agree with previous research showing that people quickly lose confidence in algorithms after seeing them err, a phenomenon known as algorithm aversion.

7/

On the other hand, people may be using different mental models to judge the actions of the politician and the algorithm.

8/

In the tsunami scenario, a human decision-maker (the politician) is a moral agent who is expected to acknowledge the moral status of everyone. Hence, they are expected to try to save all citizens, even if this is risky.

9/

Thus, when the human fails, they are still evaluated positively because they tried to do the “right” thing. A machine in the same situation does not enjoy the same benefit of the doubt.

10/

A machine that tries to save everyone, and fails, may not be seen as a moral agent trying to do the right thing, but rather as a defective system that erred because of its limited capacities.

11/

Put simply, in the context of a moral dilemma, people may expect machines to be rational and people to be human.

12/

Read more at: https://www.judgingmachines.com/
