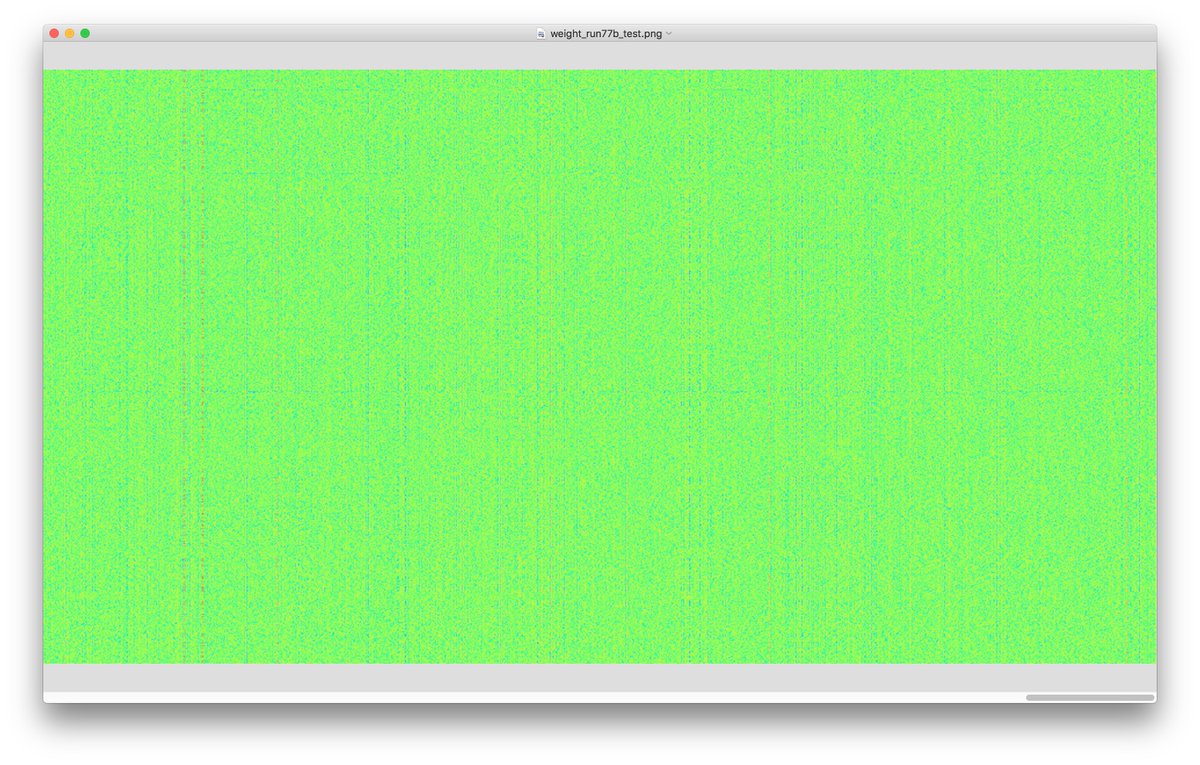

I've been examining the actual weight values of various models. Many layers have clear patterns that look like spectral lines.

It seems like neural networks encode information into specific rows or columns. I wonder why.

OpenAI's position embedding layer (wpe) has very clear sinusoidal patterns (as observed by /u/fpgaminer: https://www.reddit.com/r/MachineLearning/comments/iifw9h/r_gpt2_position_embeddings_visualized/)

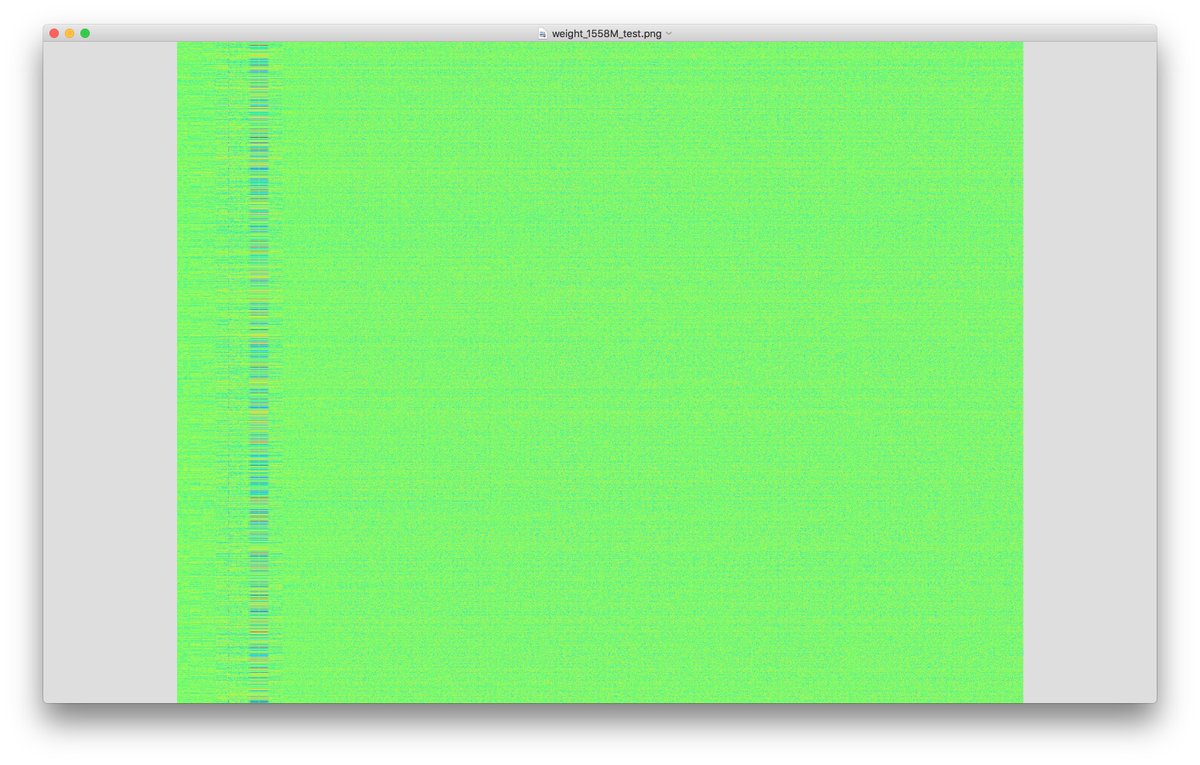

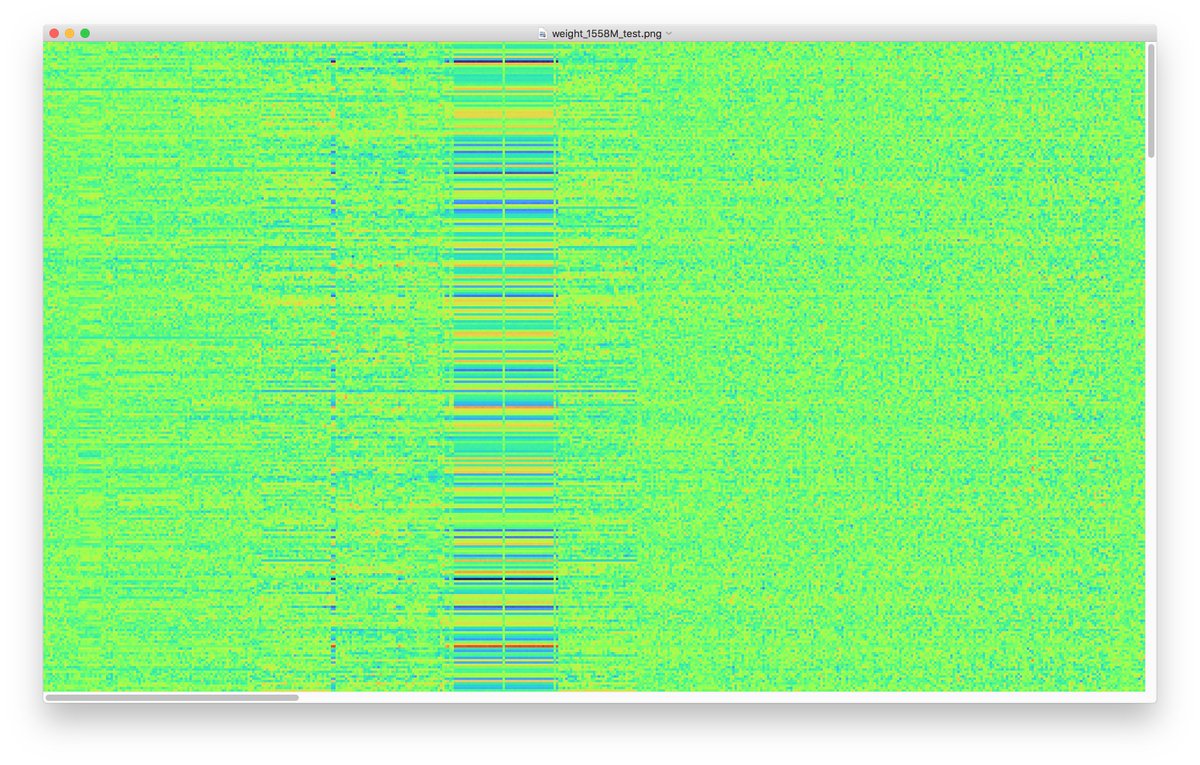
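
One way to put a number on "sinusoidal" is to take an FFT along the position axis and check whether a single frequency dominates each embedding dimension. This is only a rough sketch: the `wpe` array below is a synthetic stand-in (two sinusoidal columns, two noise columns), not the real checkpoint, and `dominant_frequency` is a hypothetical helper name.

```python
import numpy as np

def dominant_frequency(column):
    """Return (frequency index, share of spectral energy at that index) for a 1D signal."""
    spectrum = np.abs(np.fft.rfft(column - column.mean())) ** 2
    spectrum[0] = 0.0  # ignore any residual DC component
    total = spectrum.sum()
    k = int(np.argmax(spectrum))
    share = float(spectrum[k] / total) if total > 0 else 0.0
    return k, share

# Synthetic stand-in for a wpe matrix: 1024 positions x 4 embedding dimensions.
# (Assumption: the real matrix would be loaded from the checkpoint instead.)
rng = np.random.default_rng(0)
positions = np.arange(1024)
wpe = np.stack([
    np.sin(2 * np.pi * 3 * positions / 1024),  # 3 cycles across the context
    np.cos(2 * np.pi * 7 * positions / 1024),  # 7 cycles across the context
    rng.normal(size=1024),                     # pure noise
    rng.normal(size=1024),                     # pure noise
], axis=1)
wpe[:, :2] += 0.05 * rng.normal(size=(1024, 2))  # a little noise on the sinusoids

for dim in range(wpe.shape[1]):
    k, share = dominant_frequency(wpe[:, dim])
    print(f"dim {dim}: dominant frequency {k}, energy share {share:.2f}")
```

A dimension with a clean sinusoid puts nearly all of its energy into one frequency bin, while a noisy dimension spreads it across all of them.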

1.5B's wpe layer:

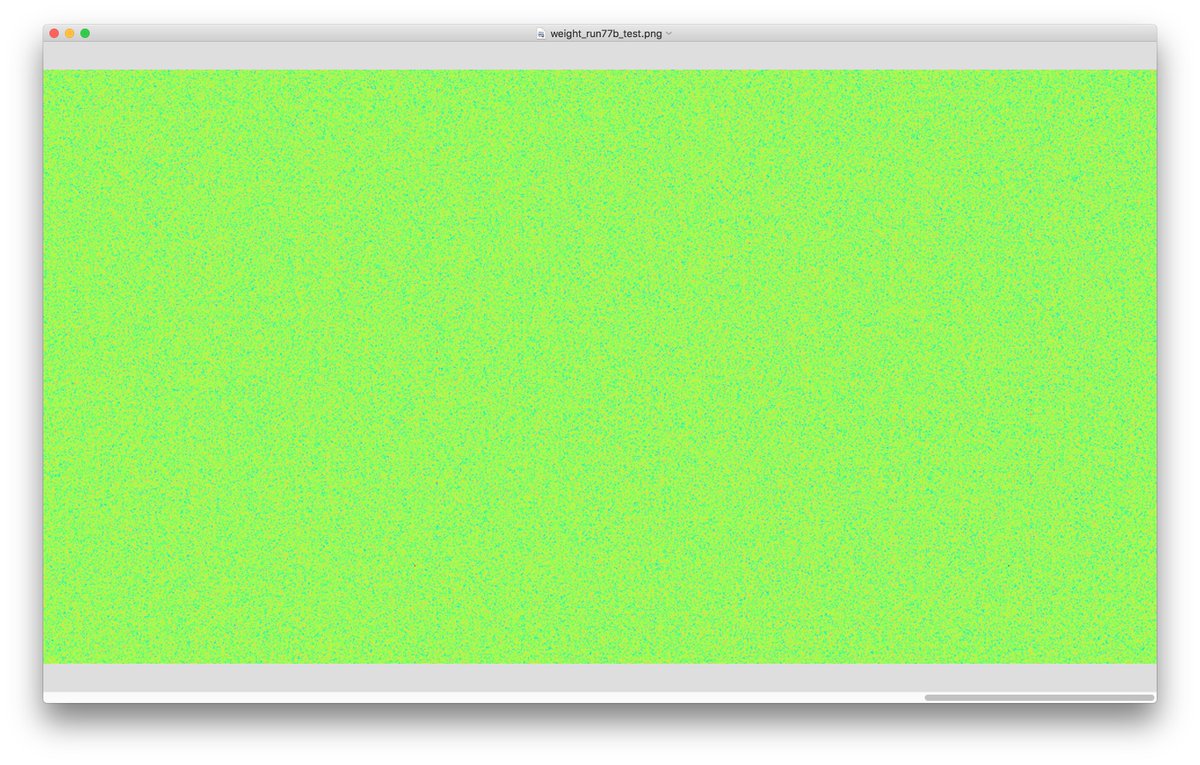

The next 2048 vocab embeddings from 1.5B don't seem to have a clear pattern. I wonder what was special about the vertical stripes from the previous 2048...

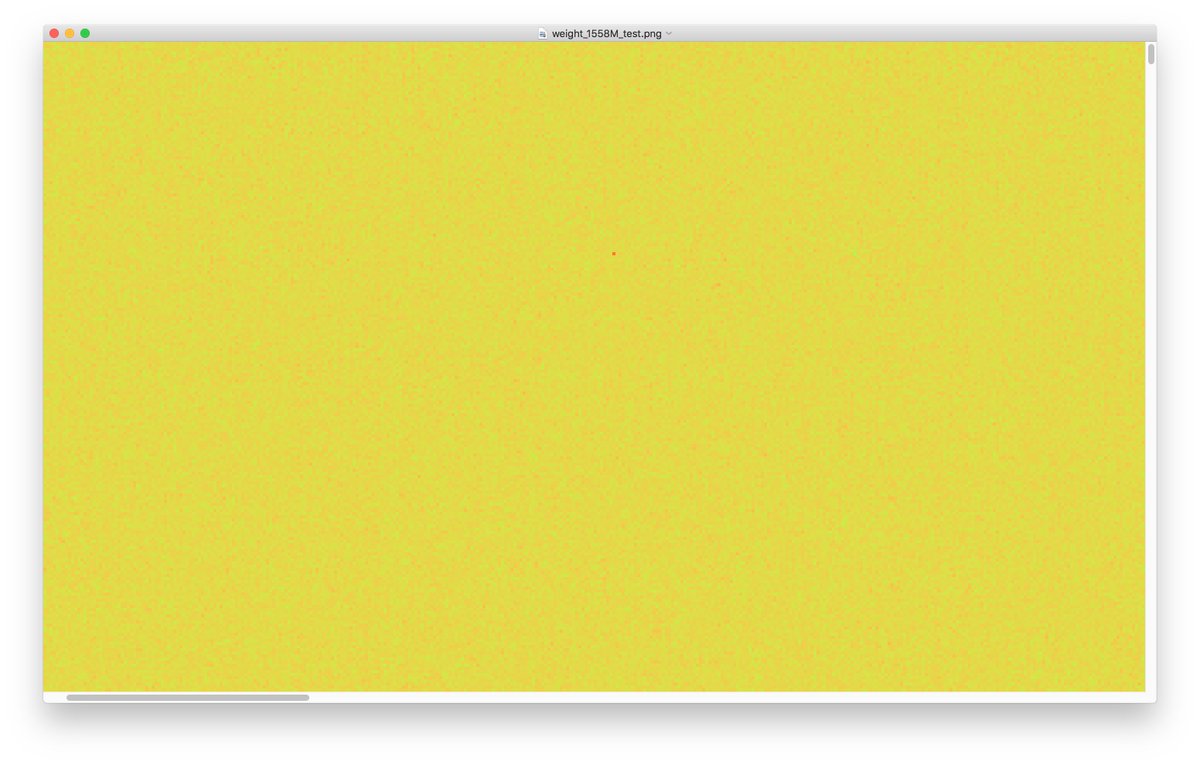

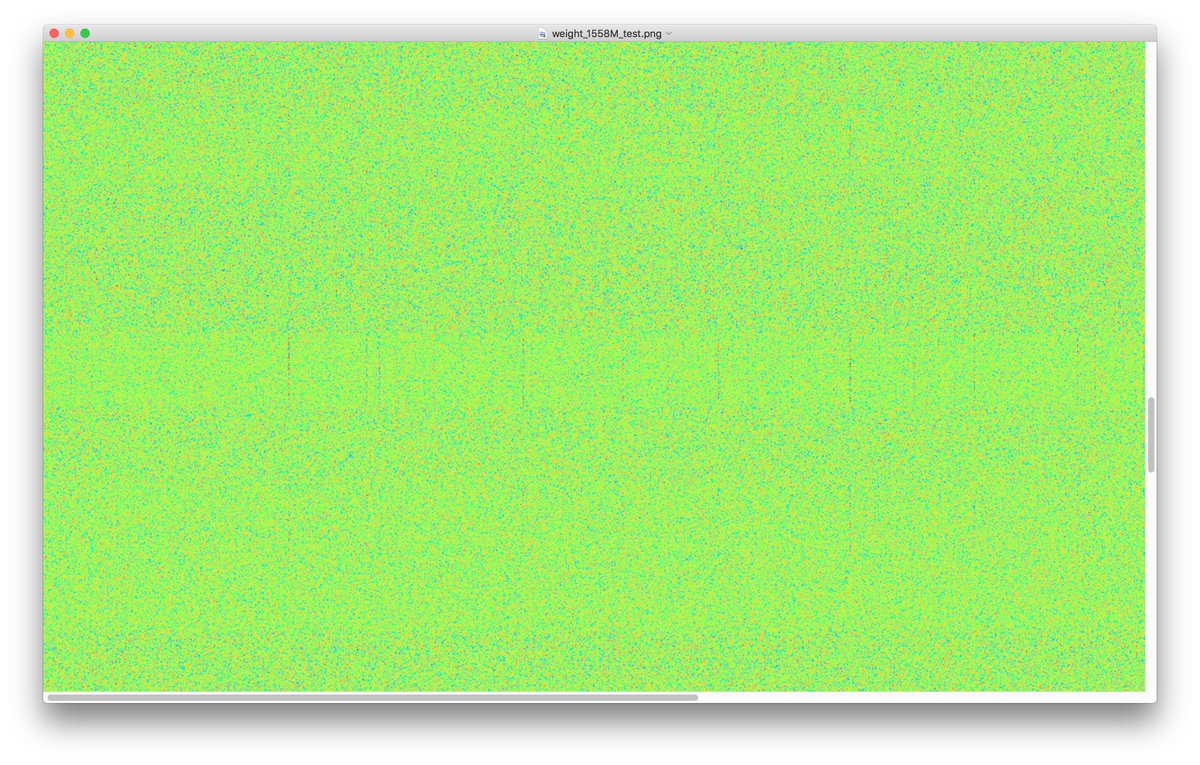

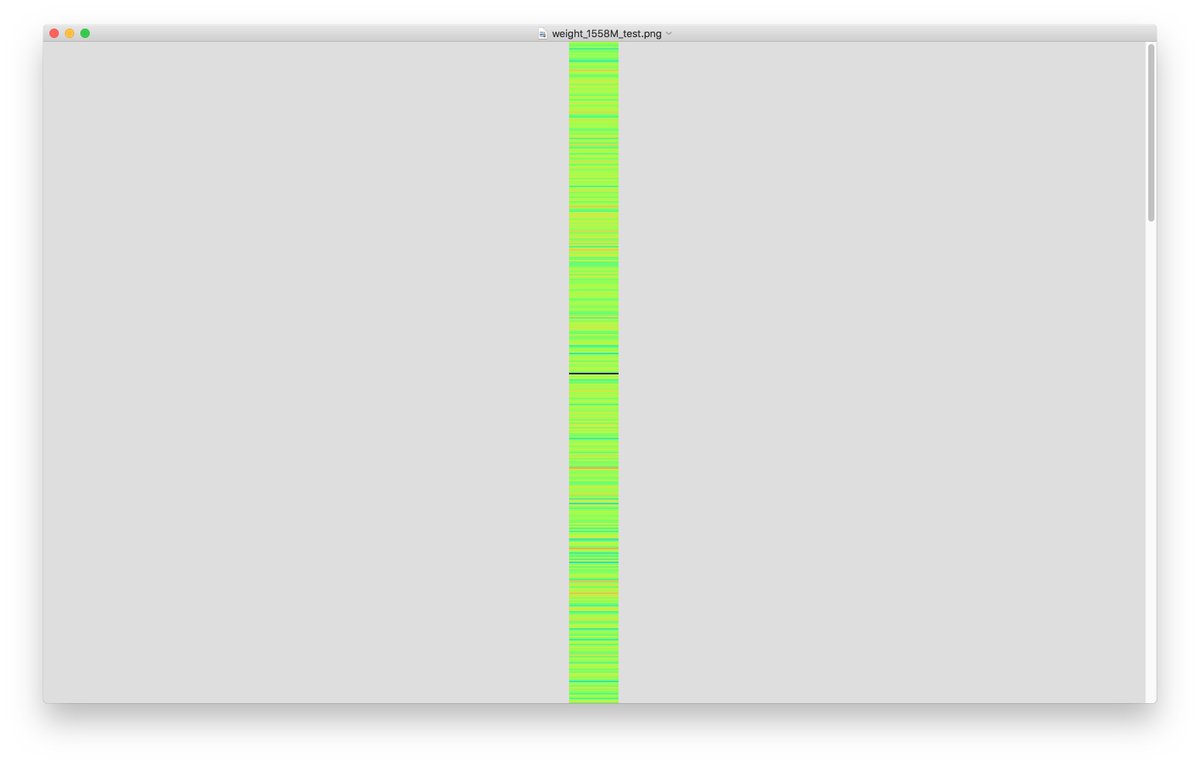

Some layers have "hotspots": the model has decided that one specific weight value should be very different from the surrounding values. It's like a spectral line, but a point rather than a line.

(This layer is 'model/h8/mlp/c_fc/w' from OpenAI's 1558M)

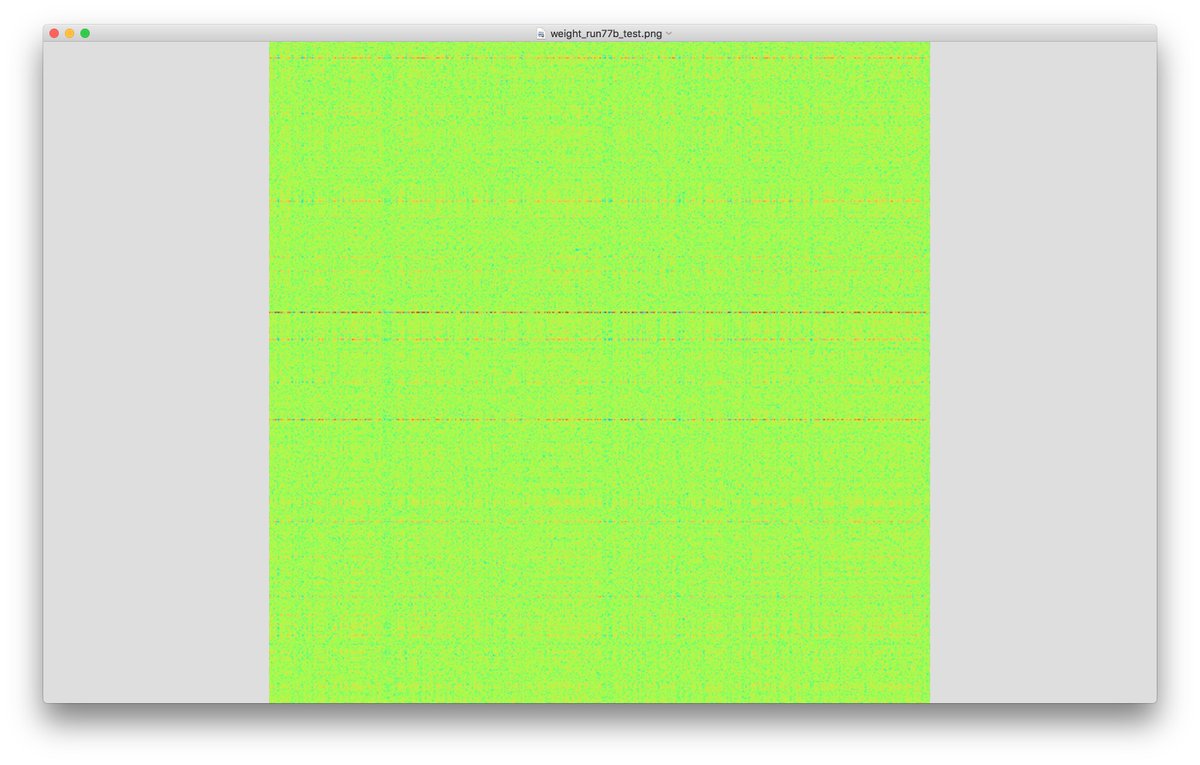

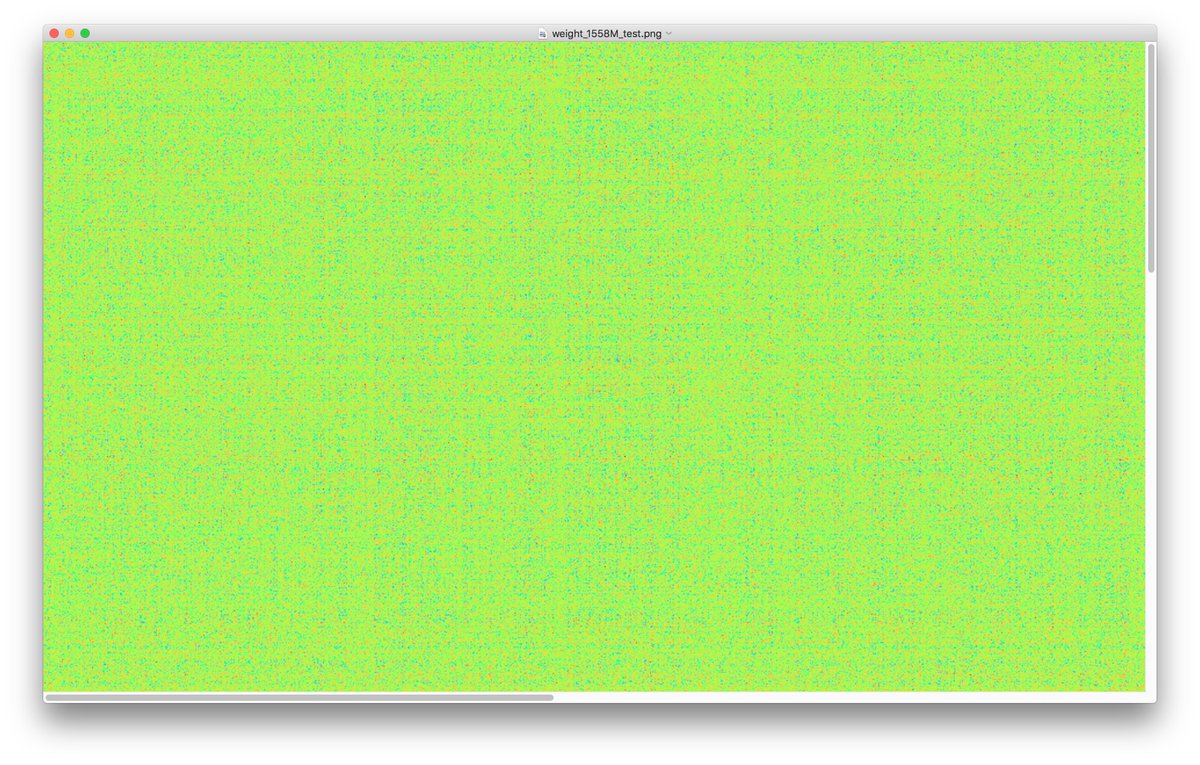

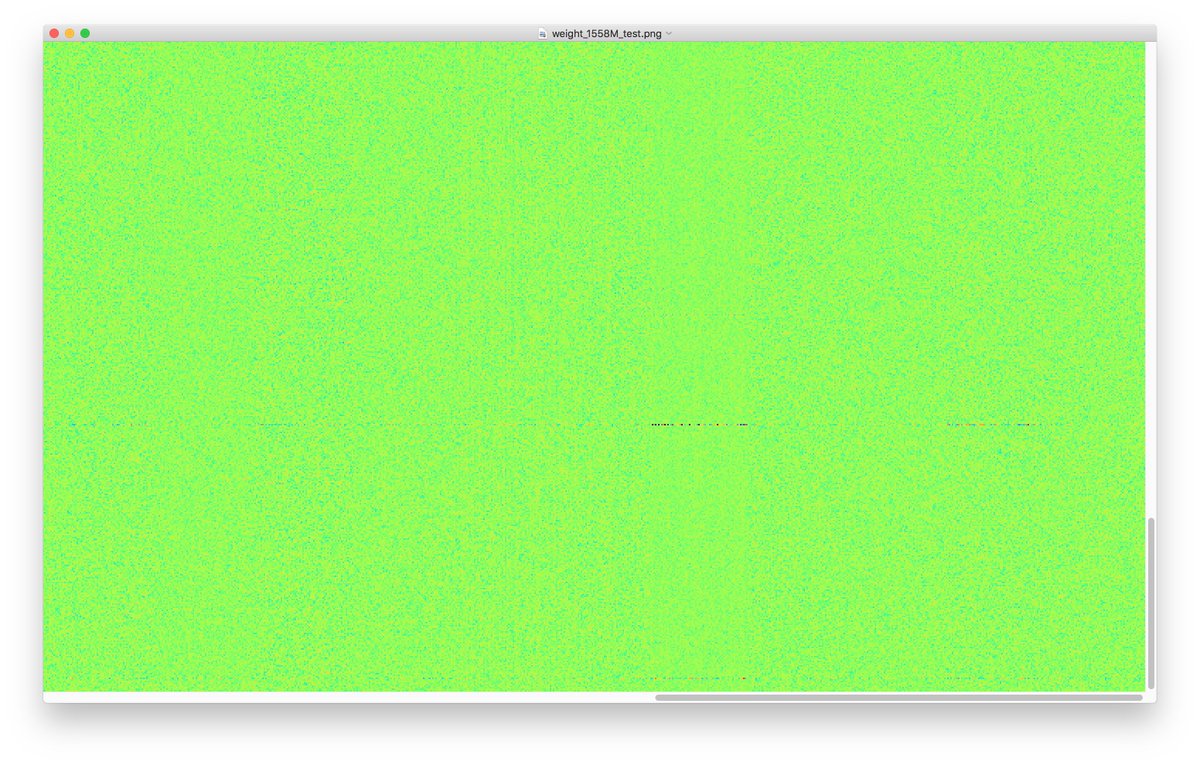

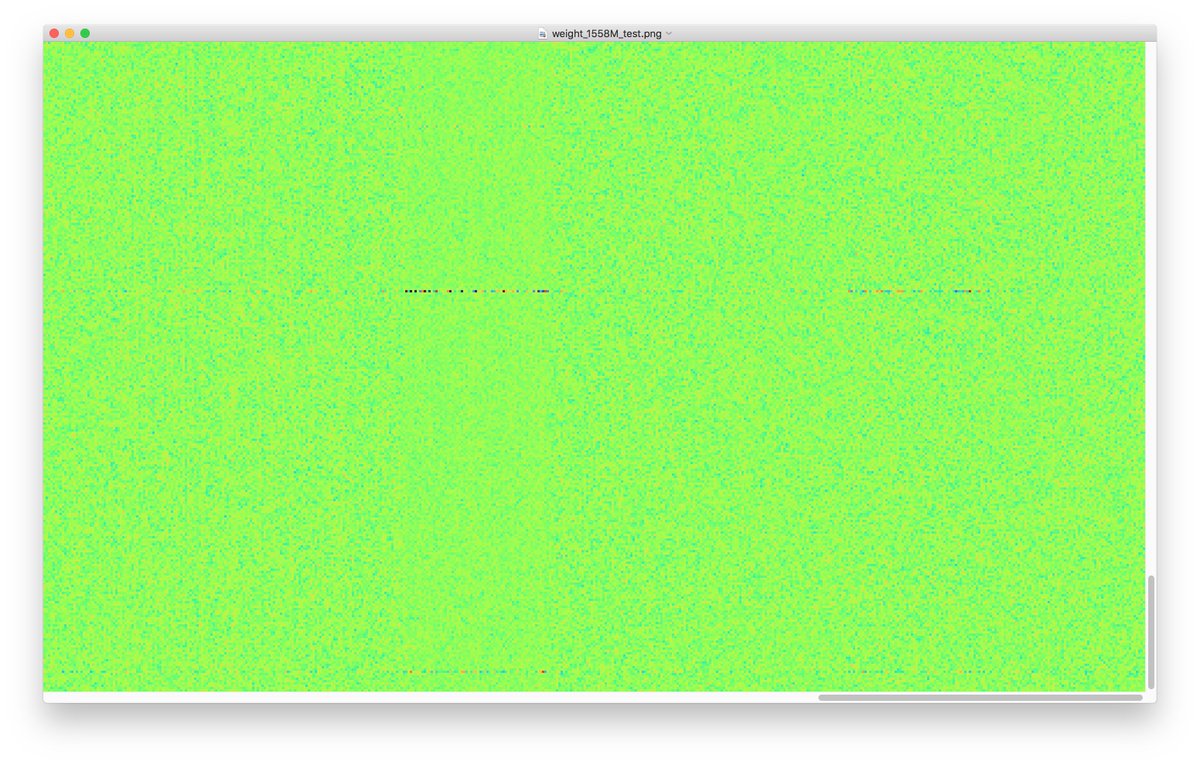

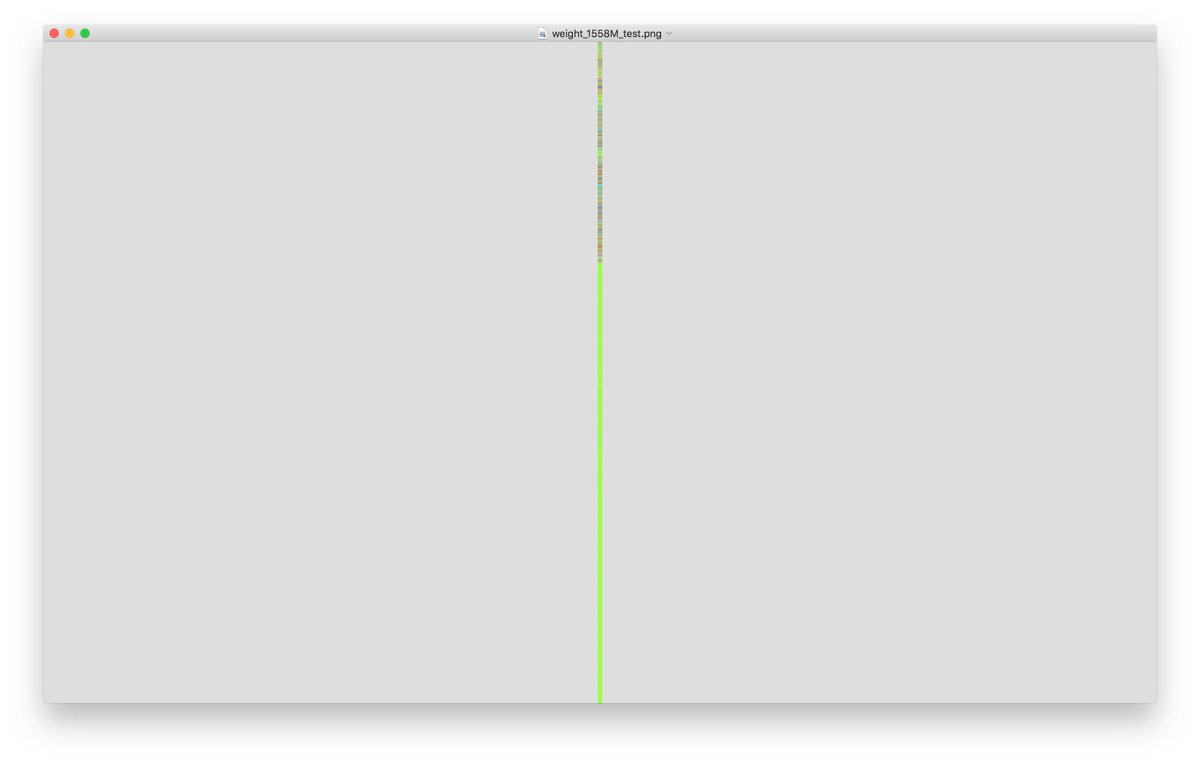
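
Hotspots like these could probably be found automatically instead of by eye. Here's a minimal sketch that flags entries sitting far from the matrix median, measured in robust (median/MAD) units. It simplifies "different from the surrounding values" to "different from the whole matrix". The matrix is synthetic with one planted hotspot, and `find_hotspots` and its threshold are assumptions for illustration.

```python
import numpy as np

def find_hotspots(w, threshold=8.0):
    """Return (row, col, value) for entries whose robust z-score exceeds threshold."""
    median = np.median(w)
    mad = np.median(np.abs(w - median))
    scale = 1.4826 * mad  # rescales MAD to match the std of Gaussian data
    z = np.abs(w - median) / scale
    rows, cols = np.nonzero(z > threshold)
    return [(int(r), int(c), float(w[r, c])) for r, c in zip(rows, cols)]

# Synthetic stand-in for a weight matrix, with one planted hotspot.
rng = np.random.default_rng(0)
w = rng.normal(scale=0.02, size=(64, 256))
w[10, 200] = 1.5  # hypothetical hotspot location and value

print(find_hotspots(w))
```

The median and MAD are used instead of mean and standard deviation so that the hotspots themselves don't inflate the scale they are measured against.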

More spectral lines, this time in 'model/h8/attn/c_attn/w' from OpenAI's 1558M.

Zooming out, you can see some clear horizontal patterns. Those are the 25 attention heads.

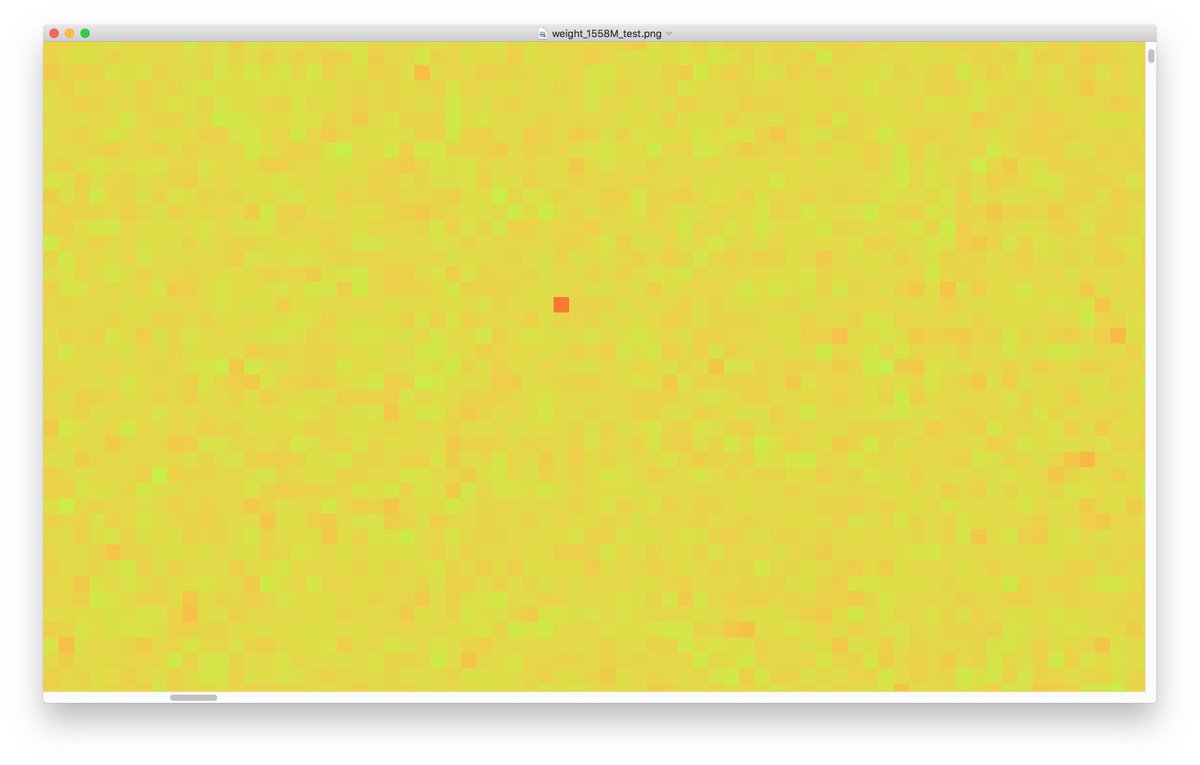

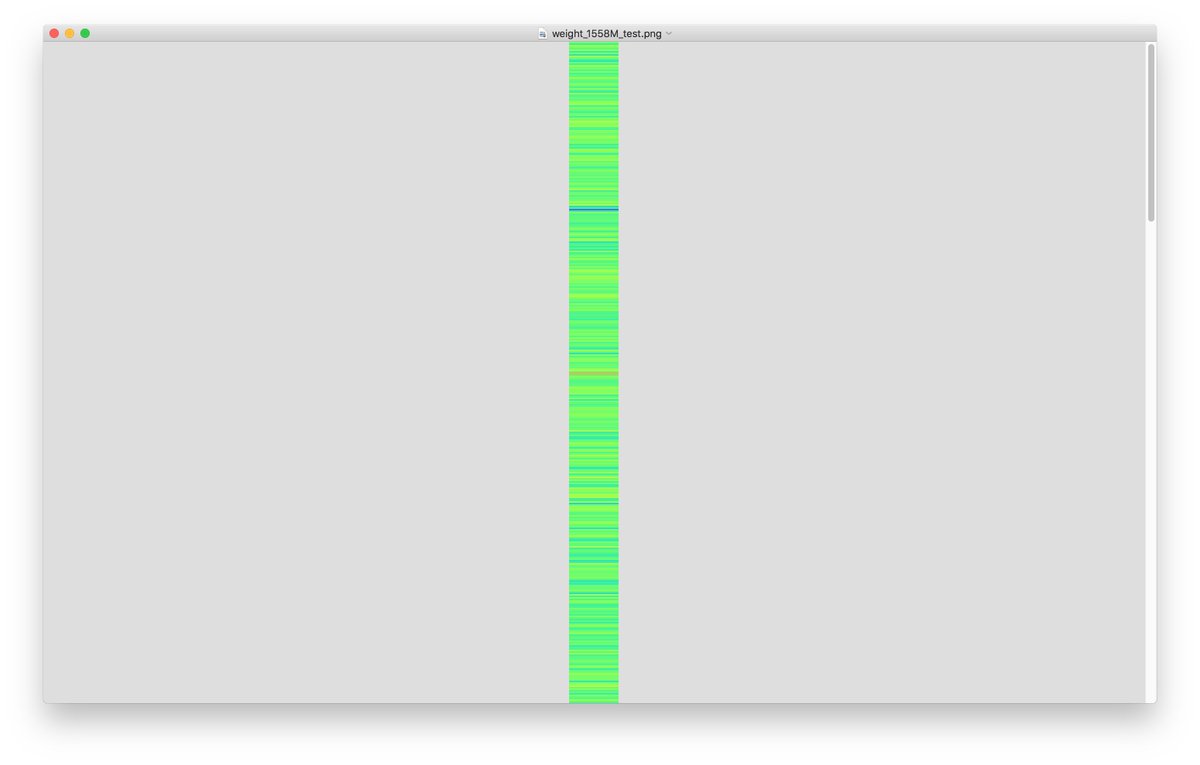

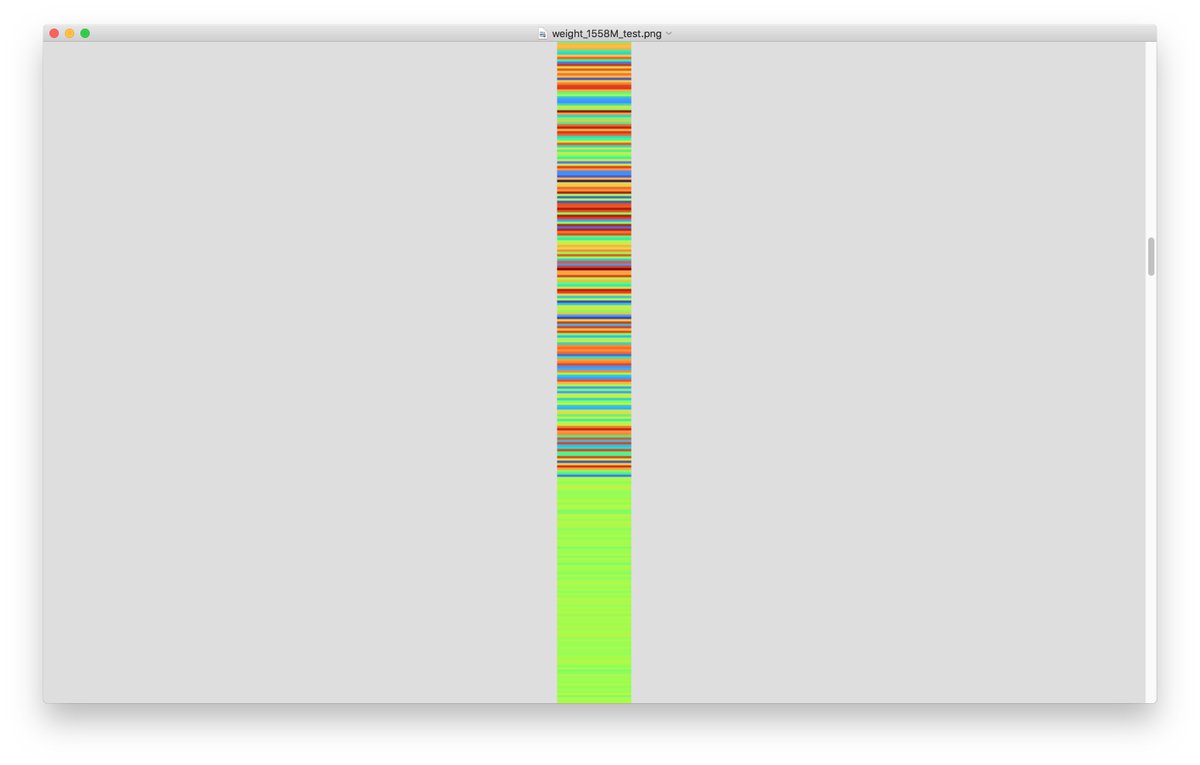
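
To look at the heads in 'model/h8/attn/c_attn/w' separately, the matrix can be reshaped into per-head blocks. A sketch, assuming the weight has been squeezed to shape (1600, 4800), with the output columns laid out as query, key, value, each split into 25 heads of 64 dimensions. The data here is random, with one head scaled up on purpose, and `per_head_norms` is a hypothetical helper.

```python
import numpy as np

N_EMBD, N_HEAD = 1600, 25
HEAD_DIM = N_EMBD // N_HEAD  # 64 dimensions per head

def per_head_norms(c_attn_w):
    """Split a (n_embd, 3*n_embd) matrix into Q/K/V blocks per head.

    Returns the Frobenius norm of each block, shape (3, n_head).
    """
    blocks = c_attn_w.reshape(N_EMBD, 3, N_HEAD, HEAD_DIM)
    return np.sqrt((blocks ** 2).sum(axis=(0, 3)))

# Random stand-in for the real weights (assumption: not loaded from the checkpoint).
rng = np.random.default_rng(0)
w = rng.normal(scale=0.02, size=(N_EMBD, 3 * N_EMBD))
w[:, 5 * HEAD_DIM:6 * HEAD_DIM] *= 4.0  # exaggerate the query block of head 5

norms = per_head_norms(w)
print(norms.shape)             # one norm per (q/k/v, head) pair
print(int(norms[0].argmax()))  # which query head has the largest norm
```

Plotting `norms` as a 3 x 25 grid would give a quick per-head summary to compare against the banding in the full heatmap.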

Spectral lines from 'model/h8/attn/c_proj/w' of OpenAI's 1558M. The model seems to encode information into specific rows for some reason.

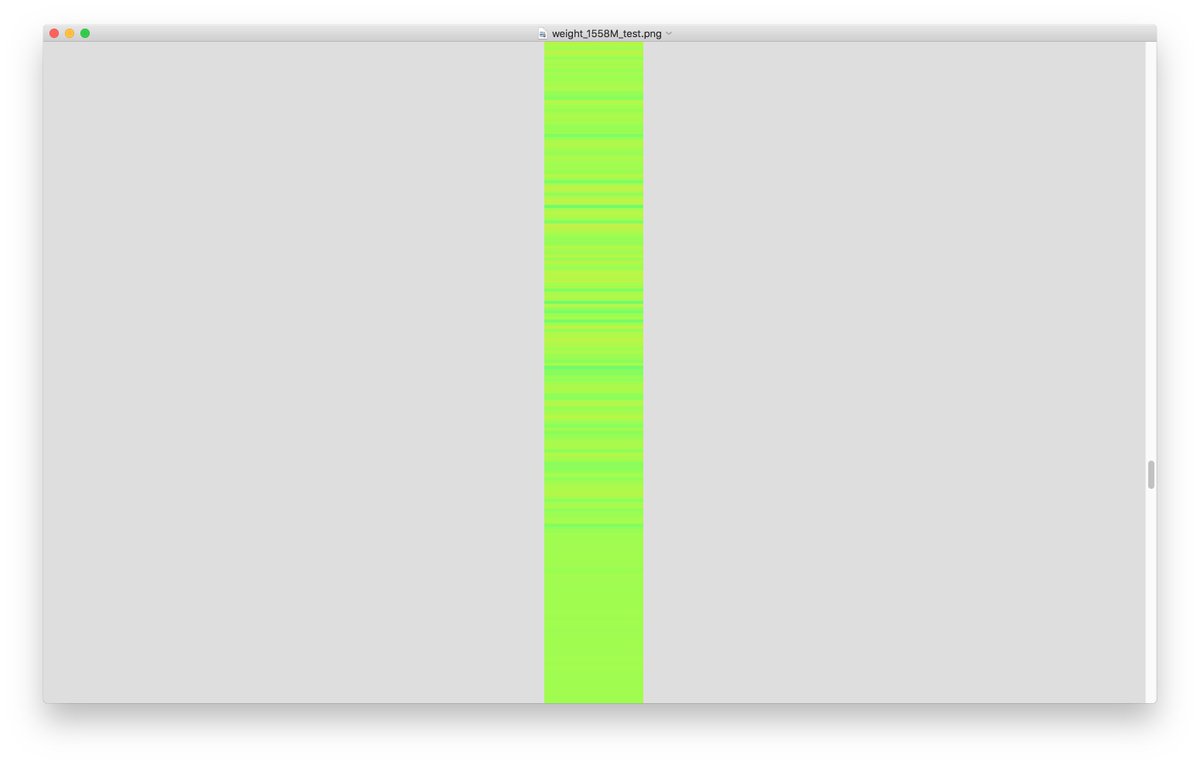

The spectral lines might correspond to the layernorm values. Here's a visualization of 'model/h8/ln_1/g' and 'model/h8/ln_1/b', which are 1D layers with 1600 values each.

Some values are very different from the others.
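
A rough way to test that hunch: list the outlier indices in the layernorm vector, list the outlier rows of a weight matrix, and see how much they overlap. This sketch uses synthetic stand-ins for both arrays. The planted indices are made up, `outlier_indices` is a hypothetical helper, and which axis of which layer to compare against is itself an assumption.

```python
import numpy as np

def outlier_indices(v, threshold=6.0):
    """Indices of a 1D array whose robust z-score (median/MAD) exceeds threshold."""
    median = np.median(v)
    scale = 1.4826 * np.median(np.abs(v - median))
    return set(np.nonzero(np.abs(v - median) / scale > threshold)[0].tolist())

rng = np.random.default_rng(0)

# Stand-in for a layernorm gain vector with a few planted outliers.
g = 1.0 + 0.05 * rng.normal(size=1600)
g[[138, 378, 1200]] = [3.0, 0.1, 2.5]

# Stand-in for a weight matrix with a few planted "spectral line" rows.
w = rng.normal(scale=0.02, size=(1600, 1600))
w[[138, 378, 900]] *= 5.0

ln_outliers = outlier_indices(g)
row_outliers = outlier_indices(np.linalg.norm(w, axis=1))

# Indices that stand out in both the layernorm vector and the weight rows.
print(sorted(ln_outliers & row_outliers))
```

On the real weights, a large overlap would support the idea that the lines track the layernorm values, and a small one would count against it.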
