It's finally time for some paper review! 📜🔍🧐

I promised the other day to start posting threads with summaries of papers that had a big impact on the field of ML and CV.

Here is the first one - the AlexNet paper!


This is one of the most influential papers in computer vision, and it sparked much of the interest in deep learning. AlexNet combined several ideas that let a large CNN generalize well on the huge ImageNet dataset, and it won the ILSVRC-2012 challenge by a large margin.

Architecture 🏗️

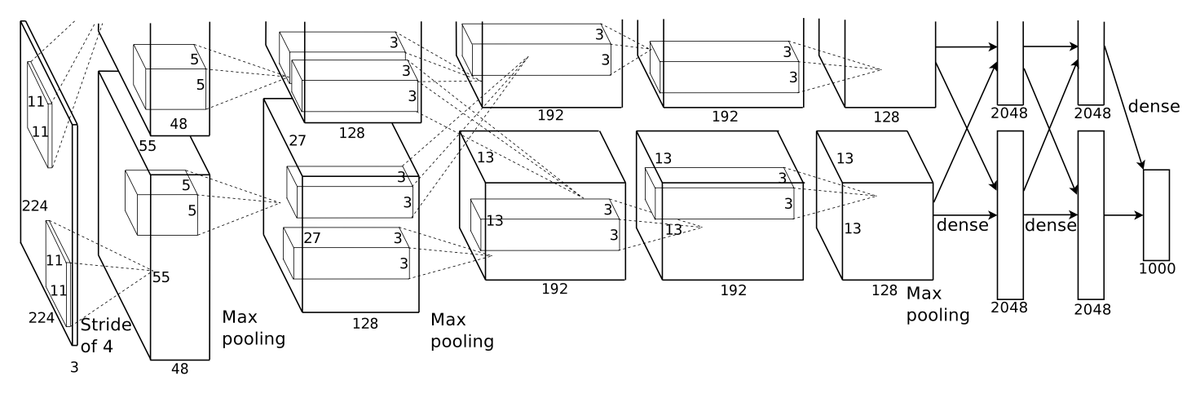

The network consists of 5 convolutional layers (some of which are followed by max-pooling) and 3 fully-connected layers, with about 60M parameters in total. It is split across two GPUs, and only certain layers communicate between them.
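As a back-of-envelope check of the 60M figure, here is a small sketch that walks through the layer stack and counts weights and biases. The layer hyperparameters (96/256/384/384/256 kernels of size 11/5/3/3/3, two 4096-unit FC layers, 1000 classes) follow the paper. Two things are assumptions. First, the input is 227x227: the paper states 224x224, but 227 is what makes the first conv produce 55x55 maps. Second, the two-GPU split is modeled as `groups=2` on conv2, conv4 and conv5. The function names are hypothetical.

```python
# Back-of-envelope size check for AlexNet (hypothetical helper names).
# Assumption: 227x227 input; groups=2 models the two-GPU split, where a
# kernel only sees the feature maps living on its own GPU.


def out_size(size, kernel, stride=1, padding=0):
    """Spatial output size of a conv or pooling window sliding over `size` pixels."""
    return (size + 2 * padding - kernel) // stride + 1


def alexnet_summary(input_size=227, in_channels=3, num_classes=1000):
    size, channels, params = input_size, in_channels, 0
    # (out_channels, kernel, stride, padding, groups, max_pool_after)
    conv_layers = [
        (96, 11, 4, 0, 1, True),
        (256, 5, 1, 2, 2, True),
        (384, 3, 1, 1, 1, False),  # conv3 connects across both GPUs
        (384, 3, 1, 1, 2, False),
        (256, 3, 1, 1, 2, True),
    ]
    for out_ch, k, s, p, groups, pool in conv_layers:
        # weights (each kernel sees channels // groups input maps) + biases
        params += k * k * (channels // groups) * out_ch + out_ch
        size = out_size(size, k, s, p)
        channels = out_ch
        if pool:
            size = out_size(size, kernel=3, stride=2)  # overlapping max-pooling
    features = size * size * channels  # flattened input to the first FC layer
    for out_features in (4096, 4096, num_classes):
        params += features * out_features + out_features
        features = out_features
    return size, channels, params


print(alexnet_summary())  # (6, 256, 60965224)
```

The last conv block ends in 6x6x256 = 9216 features. The total of roughly 61M parameters agrees with the paper's "60M", and most of it sits in the first fully-connected layer.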

Activation function 📈

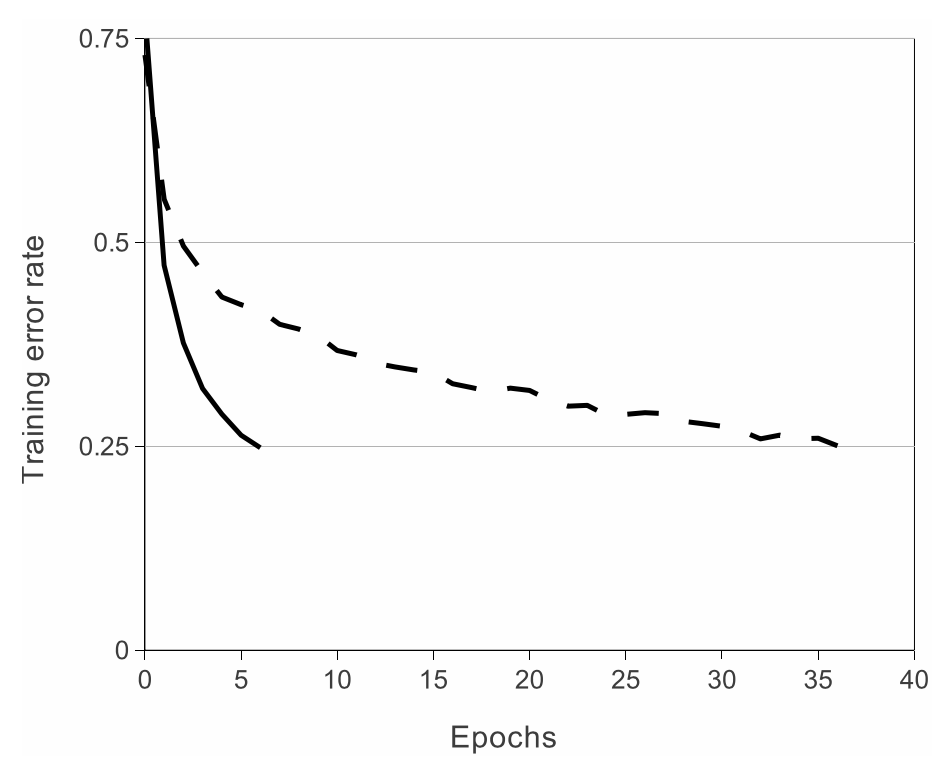

AlexNet is one of the first papers to use ReLUs instead of sigmoid or tanh. ReLUs do not saturate for positive inputs, so the network learned much faster. In the paper's CIFAR-10 experiment, a four-layer CNN with ReLUs reached 25% training error about 6x faster than the same network with tanh. This speed-up is what made it feasible to train such a large network in the first place.

Btw. ReLU is a complicated way to say max(0, x) 😉
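A tiny sketch of why ReLU trains faster. For positive inputs its slope stays at 1, while the slope of tanh shrinks towards 0 as the input grows (saturation), which slows down gradient descent. The function names are illustrative only.

```python
import math


def relu(x):
    """ReLU: max(0, x)."""
    return max(0.0, x)


def relu_grad(x):
    # Slope is 1 for any positive input, so ReLU never saturates there
    # (the slope at x <= 0 is taken as 0 by convention).
    return 1.0 if x > 0 else 0.0


def tanh_grad(x):
    # d/dx tanh(x) = 1 - tanh(x)^2, which shrinks towards 0 as |x| grows
    return 1.0 - math.tanh(x) ** 2


for x in (0.5, 2.0, 5.0):
    print(f"x={x}: relu'={relu_grad(x):.4f}  tanh'={tanh_grad(x):.4f}")
```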

Normalization 🗜️

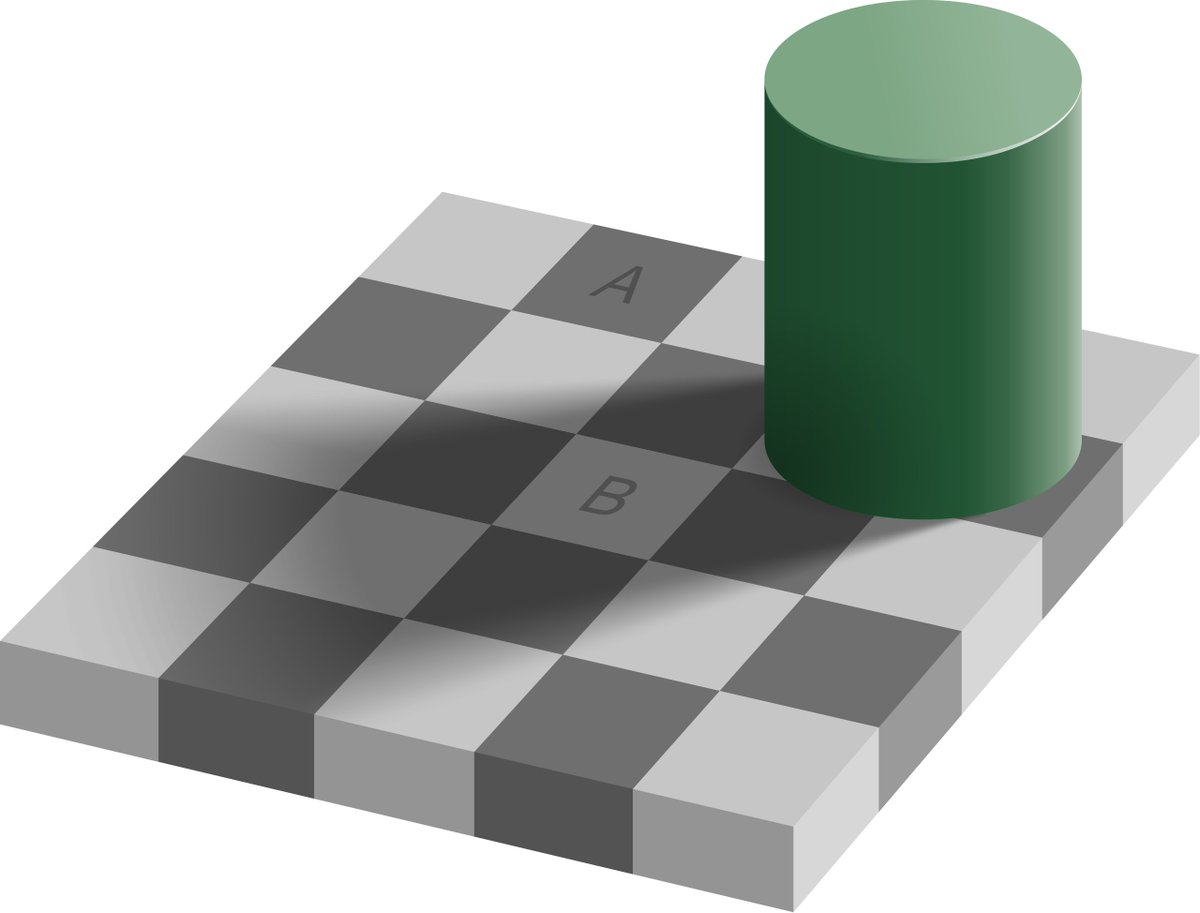

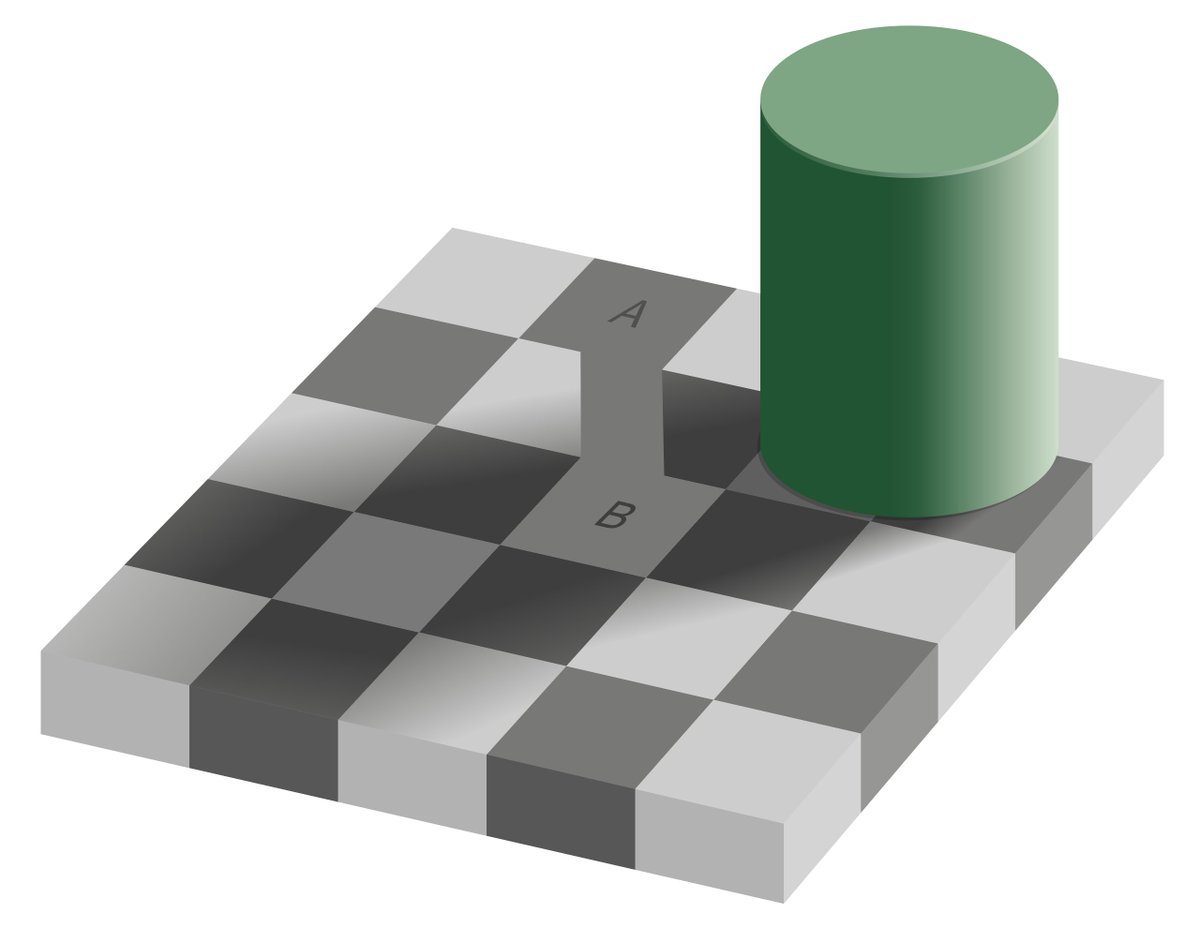

AlexNet uses Local Response Normalization (LRN) to improve generalization. Each activation is divided by a term that grows with the squared activations of neighboring kernel maps at the same position, so a neuron is suppressed when its neighbors are strongly active. The paper reports that LRN reduced the top-1 and top-5 error rates by 1.4% and 1.2%. It is similar to lateral inhibition in biology, where neurons increase the contrast with their neighbors. Example image: A and B are the same color, but your brain sees them differently.
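Here is a minimal sketch of LRN at a single (x, y) position, following the paper's formula: b_i = a_i / (k + alpha * sum_j a_j^2)^beta. The sum runs over up to n adjacent kernel maps, clipped at the edges, and the constants are the paper's k = 2, n = 5, alpha = 1e-4, beta = 0.75. The function name is hypothetical. Also note that some library versions, e.g. PyTorch's `nn.LocalResponseNorm`, scale alpha by 1/n, so check the docs before copying constants across.

```python
def local_response_norm(a, k=2.0, n=5, alpha=1e-4, beta=0.75):
    """Normalize activations a[0..N-1] taken across N kernel maps at one (x, y) position."""
    N = len(a)
    out = []
    for i in range(N):
        # Window of up to n neighbouring maps centred on map i, clipped at the edges
        lo, hi = max(0, i - n // 2), min(N - 1, i + n // 2)
        sq_sum = sum(a[j] ** 2 for j in range(lo, hi + 1))
        out.append(a[i] / (k + alpha * sq_sum) ** beta)
    return out


# The same activation gets damped more when its neighbours are strongly active
quiet = local_response_norm([0.0, 10.0, 0.0])[1]
loud = local_response_norm([100.0, 10.0, 100.0])[1]
print(quiet > loud)  # True
```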

Pooling 🔲

Pooling reduces the resolution of the feature maps and adds some translational invariance. Contrary to traditional non-overlapping pooling, AlexNet uses 3x3 windows with a stride of 2, so neighboring regions overlap. Compared with non-overlapping 2x2 pooling, which produces the same output size, this reduced the top-1 and top-5 error rates by 0.4% and 0.3%. The authors also observed that it made the network slightly harder to overfit.

Image: https://medium.com/x8-the-ai-community/explaining-alexnet-convolutional-neural-network-854df45613aa
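To make the overlap concrete, here is a plain-Python max-pooling sketch on a 2D grid (the function name is illustrative). Whenever the window size is larger than the stride, consecutive windows share rows and columns.

```python
def max_pool2d(x, kernel=3, stride=2):
    """Max-pool a 2D grid (list of rows). kernel > stride means windows overlap."""
    out_h = (len(x) - kernel) // stride + 1
    out_w = (len(x[0]) - kernel) // stride + 1
    return [
        [
            max(
                x[r][c]
                for r in range(i * stride, i * stride + kernel)
                for c in range(j * stride, j * stride + kernel)
            )
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


grid = [[5 * i + j for j in range(5)] for i in range(5)]
print(max_pool2d(grid))  # [[12, 14], [22, 24]]

# On a 55x55 map, overlapping 3x3/stride-2 and non-overlapping 2x2/stride-2
# pooling give the same 27x27 output size.
fmap = [[0.0] * 55 for _ in range(55)]
print(len(max_pool2d(fmap)), len(max_pool2d(fmap, kernel=2, stride=2)))  # 27 27
```

In the 5x5 example, row 2 and column 2 belong to more than one window. That shared coverage is exactly the overlap AlexNet introduced.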

Dropout ⛔

Dropout was a new technique in 2012, and it turned out to be crucial for good generalization in AlexNet. During training, the output of each neuron in the first two fully-connected layers is set to 0 with probability 0.5, which reduces co-adaptation of neurons and overfitting. At test time, all neurons are used, but their outputs are multiplied by 0.5.
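A minimal sketch of dropout as the paper describes it: during training each activation is zeroed with probability p = 0.5, and at test time every activation is scaled by (1 - p). The function name and the injectable `rng` parameter are hypothetical. Most modern frameworks, including PyTorch's `nn.Dropout`, use "inverted" dropout instead. That variant scales the kept activations by 1/(1 - p) during training and leaves test-time outputs untouched, which gives the same expected activations.

```python
import random


def dropout(activations, p=0.5, training=True, rng=None):
    """Paper-style (non-inverted) dropout on a list of activations."""
    if not training:
        # Test time: keep every neuron but scale by the keep probability
        return [(1.0 - p) * a for a in activations]
    if rng is None:
        rng = random.Random()
    # Training: each neuron is independently dropped with probability p
    return [0.0 if rng.random() < p else a for a in activations]


print(dropout([1.0] * 10, rng=random.Random(0)))
print(dropout([2.0, 4.0], training=False))  # [1.0, 2.0]
```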

Results 🏆

AlexNet's results were vastly superior to those of any other method at the time. It won the ILSVRC-2012 challenge with a 15.3% top-5 test error rate, while the second-best entry reached 26.2%.
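For reference, the top-5 error rate is the fraction of images whose correct label is not among the model's five highest-scoring classes. A short sketch follows. The function name and the toy scores are illustrative and are not data from the paper.

```python
def top_k_error(scores, labels, k=5):
    """Fraction of examples whose true label is not among the k highest scores."""
    errors = 0
    for row, label in zip(scores, labels):
        # Indices of the k classes with the highest scores
        top_k = sorted(range(len(row)), key=lambda c: row[c], reverse=True)[:k]
        if label not in top_k:
            errors += 1
    return errors / len(labels)


# Toy example with 6 classes: class 5 has the lowest score, so it misses the top 5
scores = [[0.9, 0.8, 0.7, 0.6, 0.5, 0.1]] * 2
print(top_k_error(scores, [5, 0]))  # 0.5
```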

Feature maps 🌠

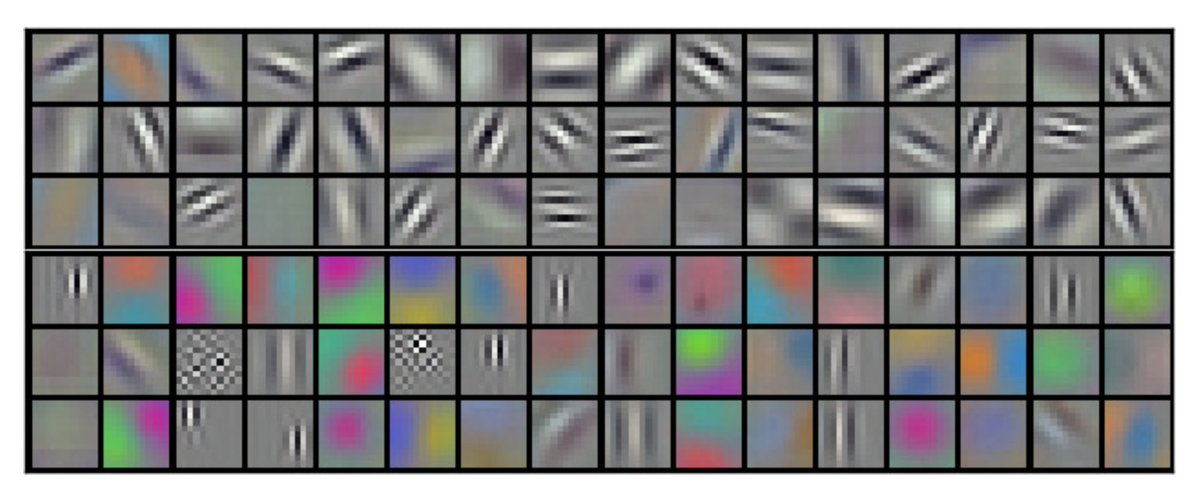

A very interesting result is how the two GPUs learned fundamentally different filters in the first convolutional layer. The filters on GPU 1 were largely color-agnostic and tuned to frequency and orientation, while those on GPU 2 were largely color-specific. This specialization happened in every run, independent of the random initialization (up to which GPU ends up with which role).

Even though CNNs weren't new in 2012, AlexNet was the first method that managed to train such a large network. It combined several techniques that made this possible without severe overfitting.

It is considered by many as the paper that started it all...


Btw. there is a minimal implementation of the AlexNet architecture in PyTorch (torchvision). It is a single-GPU variant without LRN, so some layer widths differ from the paper. You can take a look at the code here: https://github.com/pytorch/vision/blob/master/torchvision/models/alexnet.py
