My 2019 book RADICALIZED opened with a novella called Unauthorized Bread, a tale of self-determination versus technical oppression that starts with a Libyan refugee hacking her stupid smart-toaster, which locks her into buying proprietary bread.

https://arstechnica.com/gaming/2020/01/unauthorized-bread-a-near-future-tale-of-refugees-and-sinister-iot-appliances/

1/

I wrote that story after watching the inexorable colonization of every kind of device - from implanted defibrillators to tractors - with computerized controllers that served a variety of purposes, many of them nakedly dystopian.

2/

The existence of laws like Section 1201 of the DMCA really invites companies to make "smart" versions of their devices for the sole purpose of adding DRM to them, because DMCA 1201 makes it a felony to unlock DRM, even for perfectly legal purposes.

3/

That's how John Deere uses DRM: to force farmers to use (and pay for) authorized repair personnel when their tractors break down. It's how Abbott Labs uses DRM: to stop people with diabetes from using their own insulin pumps with their glucose monitors.

4/

It's the inkjet business model, but for everything from artificial pancreases to coffee-makers. And because DMCA 1201 is so badly* drafted, it also puts security researchers at risk.

*Assuming you're willing to believe this isn't what the law was supposed to do all along

5/

Adding networked computers to everyday gadgets is a risky business: as with any human endeavor, software is prone to error. And as with any technical pursuit, the only way to reliably root out errors is through adversarial peer review.

6/

That is, to have people who want you to fail go through your stuff looking for stupid mistakes they can mock you over.

It's not enough for you to go over your own work for errors. Anyone who's ever stared right at their own typo and not seen it knows this doesn't work.

7/

Nor is it sufficient for your friends to look over your work - not only will they go easy on you, but sometimes your errors come from a shared set of faulty assumptions.

8/

They CAN'T spot these errors: this is why no argument among QAnoners ever points out the most important fact, which is that the whole fucking thing is batshit.

9/

The default for products is that ANYONE is allowed to point out their defects. If you buy a pencil and the tip breaks all the time and you do some analysis and discover that the manufacturer sucks at graphite, you can publish that analysis.

10/

But DMCA 1201 prohibits this kind of disclosure if it means that you reveal flaws that might be used to disable the DRM. Security researchers get threatened by "smart device" companies all the time.

11/

Just the spectre of the threat is enough to convince a lot of organizations' lawyers to advise researchers not to go public with this information.

12/

That means that a defect that could crash your car (or your implanted pacemaker) only gets disclosed if the company that made it authorizes the disclosure.

This is seriously bad policy.

13/

Companies add "smarts" to get DRM, because DRM lets them control how their customers use their products, and lets them shut down competitors who try to give control back to customers, and also silence critics who reveal the defects in their products.

14/

DRM can be combined with terms of service, patents, trade secrets, binding arbitration, and other forms of "IP" to deliver near-perfect corporate control over competitors, customers and critics.

https://locusmag.com/2020/09/cory-doctorow-ip/

15/

But it's worse than that, because software designed to exercise this kind of control is necessarily designed for maximum opacity: to hide what it does, how it does it, and how to turn it off.

16/

This obfuscation means that when your device is compromised, malicious code can take advantage of the obscure-by-design nature of the device to run undetectably as it attacks you, your data, and your physical environment.

17/

Malicious code can also leverage DRM's natural tamper-resistance to make it hard to remove malware once it has been detected. Once a device designed to control its owners has been compromised, the attacker gets to control the owner, too.

18/

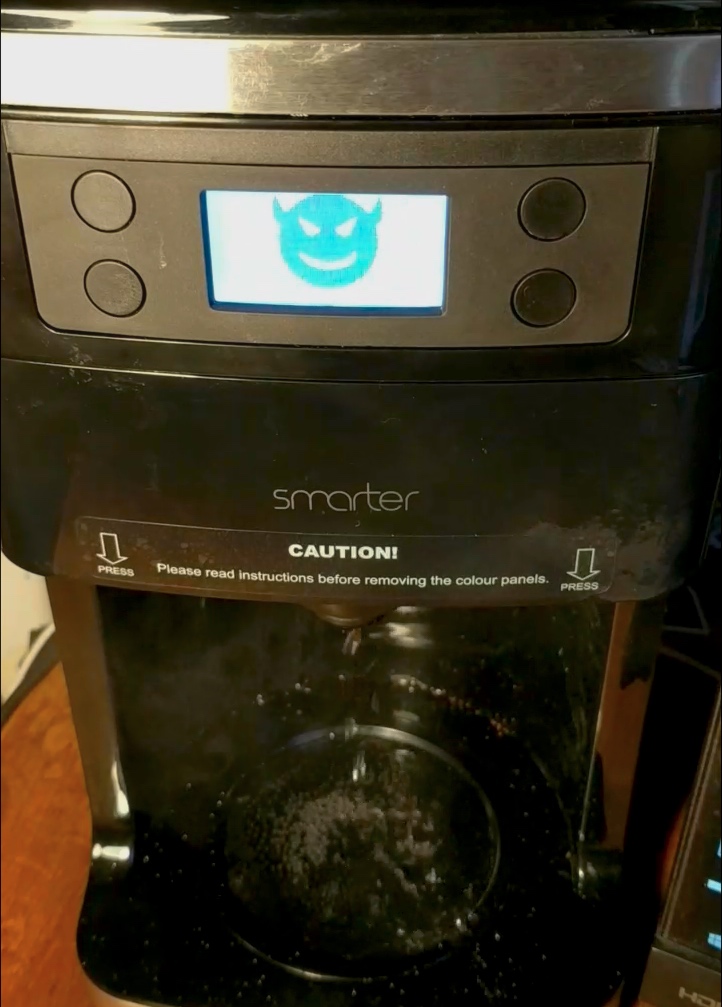

Which brings me to "Smarter," a "smart" $250 coffee maker that is remarkably insecure, allowing anyone on the same wifi network as the device to replace its firmware, as Avast researcher Martin Hron (@thinkcz) demonstrates in a recent proof-of-concept attack.

https://decoded.avast.io/martinhron/the-fresh-smell-of-ransomed-coffee/

19/

Hron's attack hijacks the machine, causing it to "turn on the burner, dispense water, spin the bean grinder, and display a ransom message, all while beeping repeatedly."

https://arstechnica.com/information-technology/2020/09/how-a-hacker-turned-a-250-coffee-maker-into-ransom-machine/

20/

As @dangoodin001 points out, Hron did all this in just one week, and quite likely could find more ways to attack the device. The defects Hron identified (like the failure to use encryption in the device's communications or firmware updates) are glaring, idiotic errors.

21/

So is the decision to accept unsigned firmware updates without any user intervention. This kind of design idiocy has been repeatedly identified in MANY kinds of devices.

22/
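What would the missing safeguard look like? Here is a minimal Python sketch of an update gate that refuses unsigned images and refuses silent installs. It is not taken from the Smarter firmware or from Hron's write-up. Everything in it is hypothetical: the `FirmwareUpdater` class, the `tag_image` helper, the `pressed_button` flag (standing in for a physical button on the device) and the key values. It uses HMAC-SHA256 from Python's standard library only to stay self-contained. A real device would more likely check an asymmetric signature (e.g. Ed25519), so that no secret signing key ships inside every appliance. Letting the owner enroll their own key is also an assumption. It is included so the check keeps out a stranger on your wifi without locking you out of your own coffee maker.

```python
import hashlib
import hmac


def tag_image(image: bytes, key: bytes) -> bytes:
    """Hypothetical helper: compute the HMAC-SHA256 tag that accept() checks.

    ASSUMPTION: a shared-secret HMAC stands in for a real asymmetric
    signature scheme, purely to keep this sketch stdlib-only.
    """
    return hmac.new(key, image, hashlib.sha256).digest()


class FirmwareUpdater:
    """Hypothetical update gate for a networked appliance (illustrative only)."""

    def __init__(self, vendor_key: bytes):
        # Keys whose tags the device will accept. The owner may enroll more,
        # so the check blocks network attackers without locking out owners.
        self.trusted_keys = [vendor_key]

    def enroll_owner_key(self, key: bytes) -> None:
        """Let the device's owner add a key of their own."""
        self.trusted_keys.append(key)

    def accept(self, image: bytes, tag: bytes, pressed_button: bool) -> bool:
        """Return True only if someone confirmed on the device AND the tag verifies."""
        # Refuse silent updates: a person must physically confirm the install.
        if not pressed_button:
            return False
        for key in self.trusted_keys:
            # compare_digest avoids leaking how many bytes matched via timing.
            if hmac.compare_digest(tag_image(image, key), tag):
                return True
        return False  # unsigned or tampered image: do not flash it
```

With this gate, a stranger on the same network who sends a modified image gets `False` back. So does anyone who sends a correctly tagged image when nobody has pressed the button.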

Back in 2011, I watched Ang Cui silently update the OS of an HP printer by sending it a gimmicked PDF (HP's printers received new firmware via print jobs, ingesting everything after a PostScript comment that said, "New firmware starts here").

https://www.youtube.com/watch?v=njVv7J2azY8

23/
