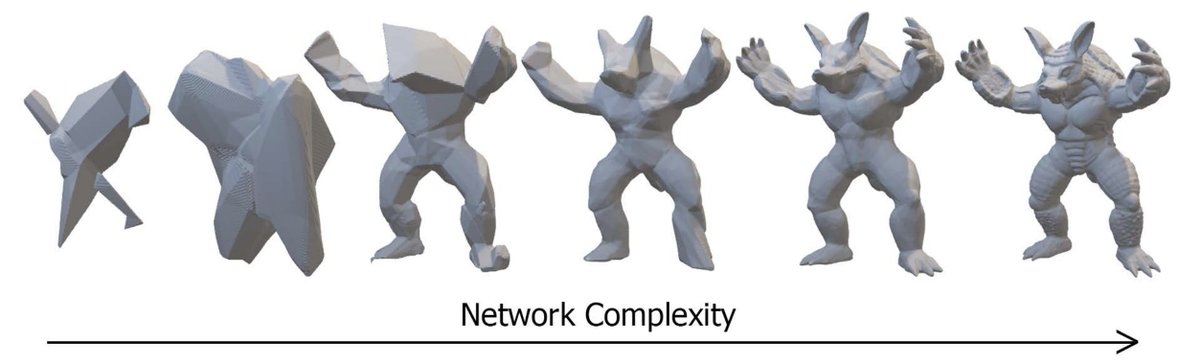

Purposefully overfit neural networks are an efficient surface representation for solid 3D shapes

In https://arxiv.org/abs/2009.09808 with Thomas Davies and @DerekRenderling, we make a few observations:


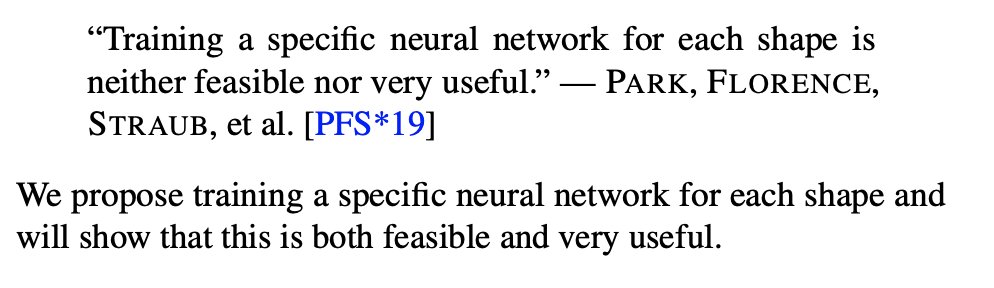

Increasing the number of weights in the overfit network increases the accuracy of the encoded shape.

For the same floating-point storage budget, storing n weights of a neural network is more expressive than storing SDF values at n regular grid nodes.
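To make the storage comparison concrete, here is a rough sketch that counts the floats in a small fully connected network and finds the largest regular grid that fits in the same budget. The layer sizes are a hypothetical choice for illustration, and the helper names are made up for this example.

```python
def mlp_param_count(layer_sizes):
    """Number of weights plus biases in a fully connected network."""
    return sum((n_in + 1) * n_out
               for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]))


def grid_resolution_for_budget(n_floats):
    """Largest r such that an r x r x r grid of SDF samples fits in n_floats values."""
    r = int(round(n_floats ** (1.0 / 3.0)))
    while r ** 3 > n_floats:
        r -= 1
    while (r + 1) ** 3 <= n_floats:
        r += 1
    return r


# Hypothetical architecture: xyz input, 8 hidden layers of width 32, scalar output.
layer_sizes = [3] + [32] * 8 + [1]
n = mlp_param_count(layer_sizes)
r = grid_resolution_for_budget(n)
print(f"{n} floats in the network, same budget as a {r}^3 grid")
```

Under this assumed architecture the float budget only buys a grid with a couple dozen samples per axis, which gives a feel for how coarse the competing grid SDF is.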

Compared to storing n xyz coordinates at vertices, overfit neural networks often match the quality of decimated triangle meshes, but ...

Unlike a mesh, an overfit neural network is an implicit representation. Like a regular grid SDF and unlike a mesh, if we impose the same structure (architecture), the weights for each shape are a vectorizable, homogeneous representation.

In a real-time rendering setup, one might optimize the network *architecture* for shader evaluation then swap in weights for different pre-overfit shapes at runtime.
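A minimal NumPy sketch of that idea: one fixed evaluation routine, with each shape stored as a flat weight vector that gets swapped in. The layer sizes, the ReLU/tanh activations, and the names `LAYER_SIZES`, `num_params`, and `eval_sdf` are assumptions for illustration, and the "shapes" here are random vectors standing in for pre-overfit weights.

```python
import numpy as np

# Hypothetical fixed architecture shared by every shape.
LAYER_SIZES = [3, 32, 32, 32, 1]


def num_params(layer_sizes=LAYER_SIZES):
    """Length of the flat weight vector for this architecture."""
    return sum((a + 1) * b for a, b in zip(layer_sizes[:-1], layer_sizes[1:]))


def eval_sdf(theta, points, layer_sizes=LAYER_SIZES):
    """Evaluate the network with flat weights `theta` at an (m, 3) array of points."""
    h = points
    offset = 0
    last = len(layer_sizes) - 2
    for i, (a, b) in enumerate(zip(layer_sizes[:-1], layer_sizes[1:])):
        W = theta[offset:offset + a * b].reshape(a, b)
        offset += a * b
        bias = theta[offset:offset + b]
        offset += b
        h = h @ W + bias
        # Assumed activations: ReLU on hidden layers, tanh on the output.
        h = np.tanh(h) if i == last else np.maximum(h, 0.0)
    return h[:, 0]


rng = np.random.default_rng(0)
# Stand-ins for pre-overfit shapes: one row of weights per shape.
shape_library = rng.normal(scale=0.1, size=(5, num_params()))
points = rng.uniform(-1.0, 1.0, size=(4, 3))

d0 = eval_sdf(shape_library[0], points)  # "shape 0"
d1 = eval_sdf(shape_library[1], points)  # same code path, different weights
```

Because every shape has the same vector length, the whole library is a single dense matrix, which is what makes the representation homogeneous.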

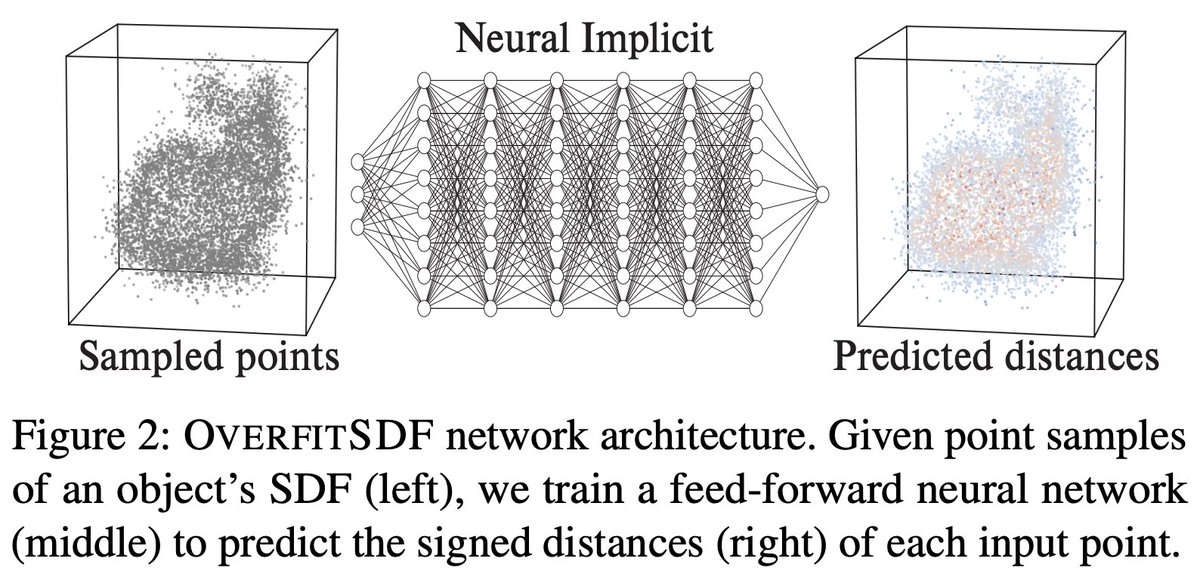

Neural implicits inherit the nice features of SDFs, like ray marching for rendering and trivial CSG operations.
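As a small illustration of both features, the sketch below uses analytic sphere SDFs as stand-ins for overfit networks; any function that returns a signed distance could be dropped in. CSG reduces to `min`/`max`, and rendering reduces to stepping along a ray by the returned distance (sphere tracing). Function names and parameters are illustrative, and with an approximate neural SDF one would likely want a more conservative step.

```python
import numpy as np


def sphere_sdf(p, center, radius):
    """Exact signed distance to a sphere (stand-in for a neural implicit)."""
    return np.linalg.norm(p - center, axis=-1) - radius


# CSG on signed distances: one min or max per operation.
def union(d1, d2):
    return np.minimum(d1, d2)


def intersection(d1, d2):
    return np.maximum(d1, d2)


def difference(d1, d2):
    return np.maximum(d1, -d2)


def scene(p):
    a = sphere_sdf(p, np.array([-0.25, 0.0, 0.0]), 0.5)
    b = sphere_sdf(p, np.array([0.25, 0.0, 0.0]), 0.5)
    return union(a, b)


def sphere_trace(sdf, origin, direction, max_steps=64, eps=1e-4, t_max=10.0):
    """March along the ray by the distance value; return hit parameter t or None."""
    t = 0.0
    for _ in range(max_steps):
        d = float(sdf(origin + t * direction))
        if d < eps:
            return t
        t += d
        if t > t_max:
            break
    return None


direction = np.array([1.0, 0.0, 0.0])
t_hit = sphere_trace(scene, np.array([-2.0, 0.0, 0.0]), direction)
t_miss = sphere_trace(scene, np.array([-2.0, 2.0, 0.0]), direction)
```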


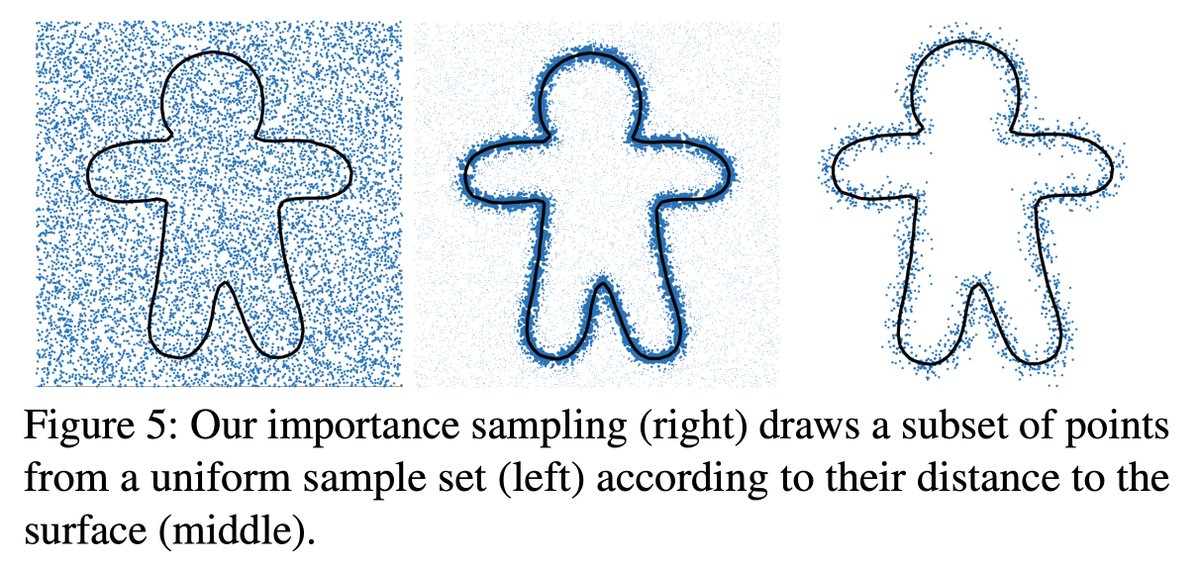

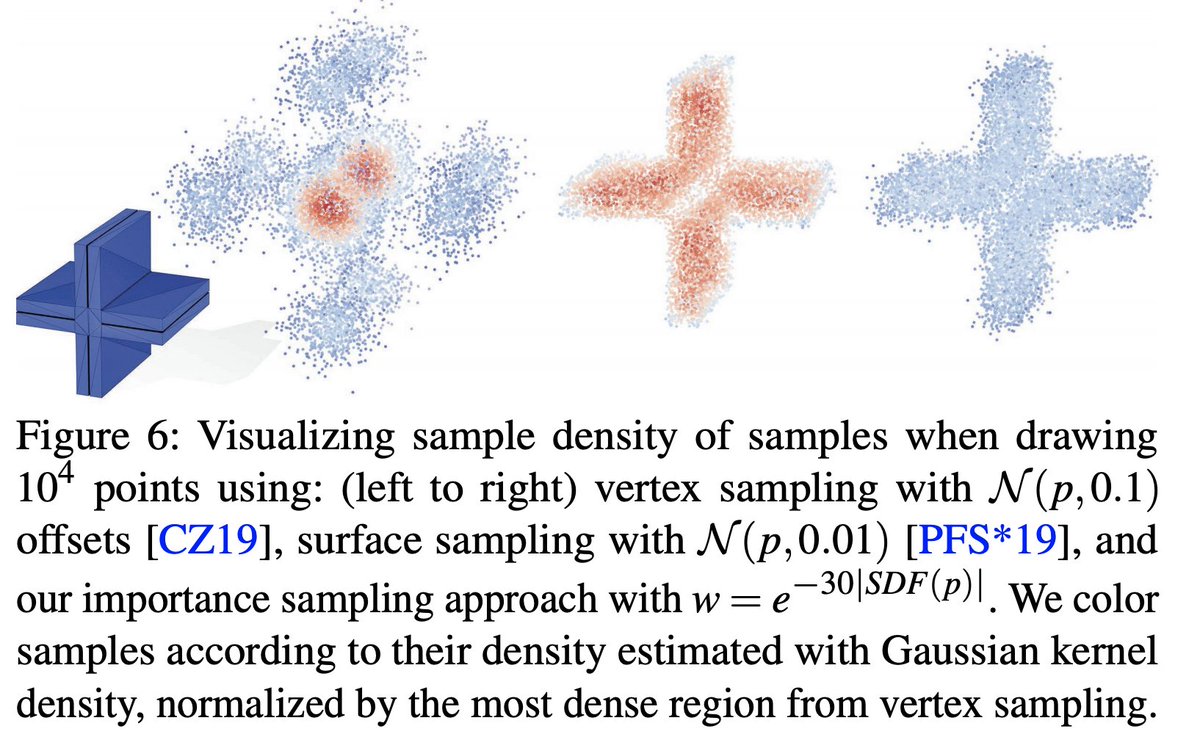

Compared to prior neural SDFs, we show that if sampling and loss definition are considered together, one can apply importance sampling to improve near-surface accuracy.

Importance sampling also sidesteps some of the pitfalls of defining sampling via distributions placed at input shape vertices or faces.
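One way to picture this: draw uniform candidates in the bounding box, weight each by how close it is to the surface, and resample in proportion to the weights. The sketch below assumes an exponential weight `exp(-beta * |d|)` and a sphere as the target shape; the weighting, `beta`, and the function names are illustrative choices, not necessarily the exact scheme in the paper.

```python
import numpy as np


def sphere_sdf(p, radius=0.5):
    """Stand-in ground-truth SDF; a mesh distance query would go here."""
    return np.linalg.norm(p, axis=-1) - radius


def importance_sample(sdf, n_candidates, n_samples, beta=30.0, rng=None):
    """Resample uniform candidates with probability proportional to exp(-beta * |sdf|)."""
    rng = np.random.default_rng() if rng is None else rng
    candidates = rng.uniform(-1.0, 1.0, size=(n_candidates, 3))
    d = sdf(candidates)
    w = np.exp(-beta * np.abs(d))
    idx = rng.choice(n_candidates, size=n_samples, replace=True, p=w / w.sum())
    return candidates[idx], d[idx]


rng = np.random.default_rng(0)
pts, dist = importance_sample(sphere_sdf, 100_000, 2_000, rng=rng)
uniform_pts = rng.uniform(-1.0, 1.0, size=(2_000, 3))

print("mean |d|, importance samples:", np.abs(dist).mean())
print("mean |d|, uniform samples:   ", np.abs(sphere_sdf(uniform_pts)).mean())
```

Note that nothing here depends on the input's vertices or faces: the samples concentrate near the surface purely through the distance values.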

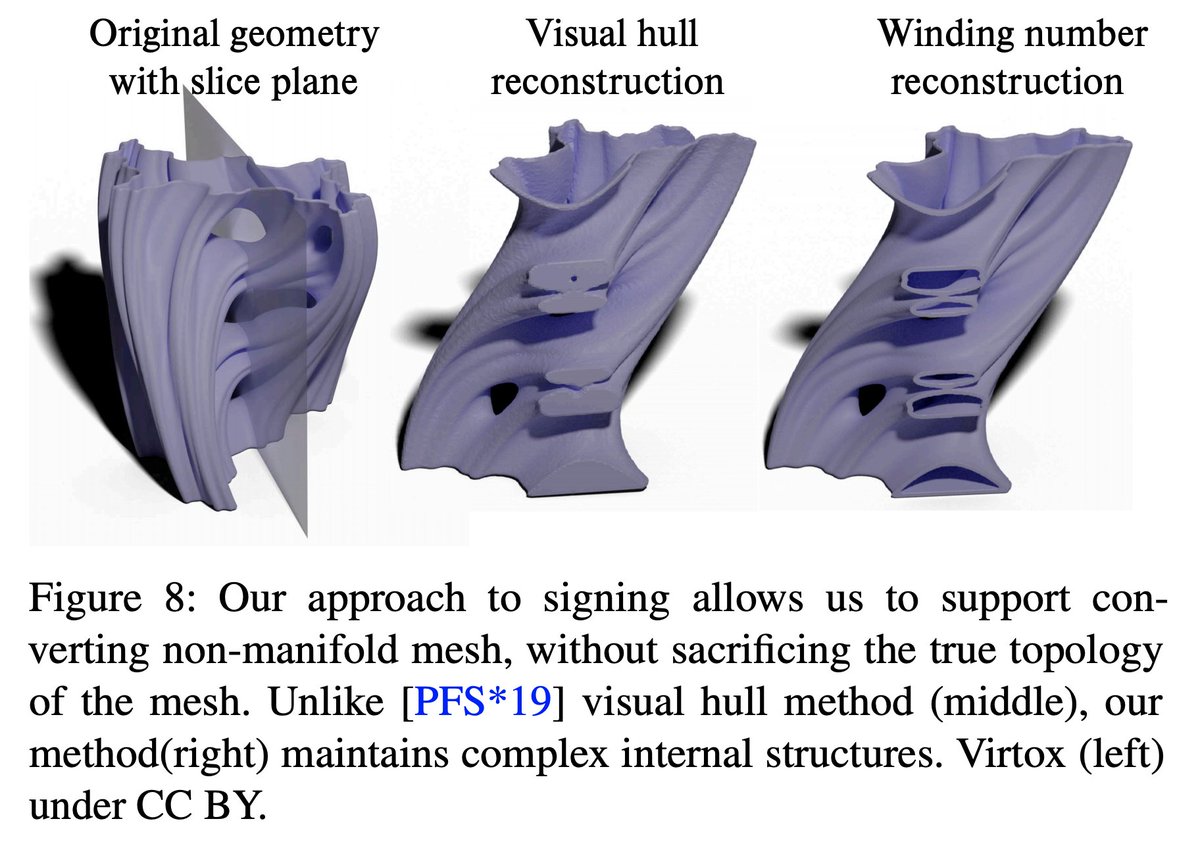

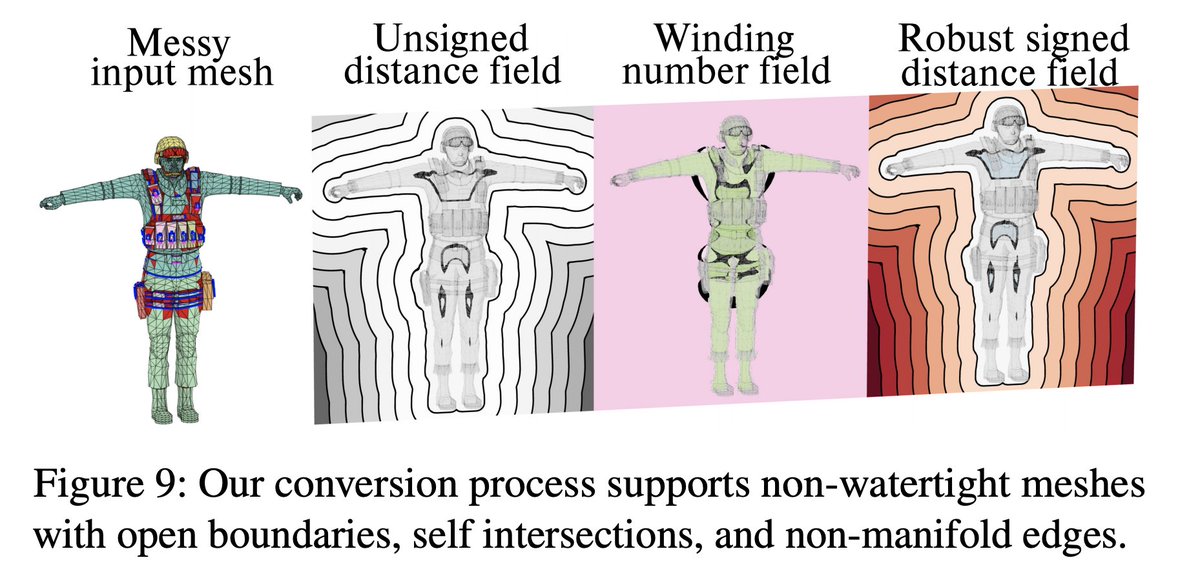

Meanwhile, using generalized winding numbers for signing positive or negative distance is more robust than visibility.
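For reference, a brute-force sketch of winding-number signing: sum the signed solid angle of every triangle as seen from the query point (the standard Van Oosterom and Strackee formula) and divide by 4π. The value is about 1 inside and about 0 outside for a closed, outward-oriented mesh, so thresholding at 0.5 gives the sign. The tetrahedron and the function names are illustrative, and a practical implementation would use a hierarchical evaluation rather than this per-triangle loop over the whole mesh.

```python
import numpy as np


def winding_number(V, F, q):
    """Generalized winding number of triangle mesh (V, F) at query point q."""
    A = V[F[:, 0]] - q
    B = V[F[:, 1]] - q
    C = V[F[:, 2]] - q
    la = np.linalg.norm(A, axis=1)
    lb = np.linalg.norm(B, axis=1)
    lc = np.linalg.norm(C, axis=1)
    # Signed solid angle per triangle: tan(omega / 2) = num / den.
    num = np.einsum("ij,ij->i", A, np.cross(B, C))
    den = (la * lb * lc
           + np.einsum("ij,ij->i", A, B) * lc
           + np.einsum("ij,ij->i", B, C) * la
           + np.einsum("ij,ij->i", C, A) * lb)
    omega = 2.0 * np.arctan2(num, den)
    return omega.sum() / (4.0 * np.pi)


def sdf_sign(V, F, q):
    """-1 inside, +1 outside; multiply an unsigned distance by this."""
    return -1.0 if winding_number(V, F, q) > 0.5 else 1.0


# Unit tetrahedron with outward-facing triangles.
V = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]])
F = np.array([[0, 2, 1], [0, 1, 3], [0, 3, 2], [1, 2, 3]])

w_in = winding_number(V, F, np.array([0.1, 0.1, 0.1]))
w_out = winding_number(V, F, np.array([1.0, 1.0, 1.0]))
```

On meshes with holes or self-intersections the value degrades gracefully to a fraction between 0 and 1 instead of flipping abruptly, which is where the robustness comes from.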

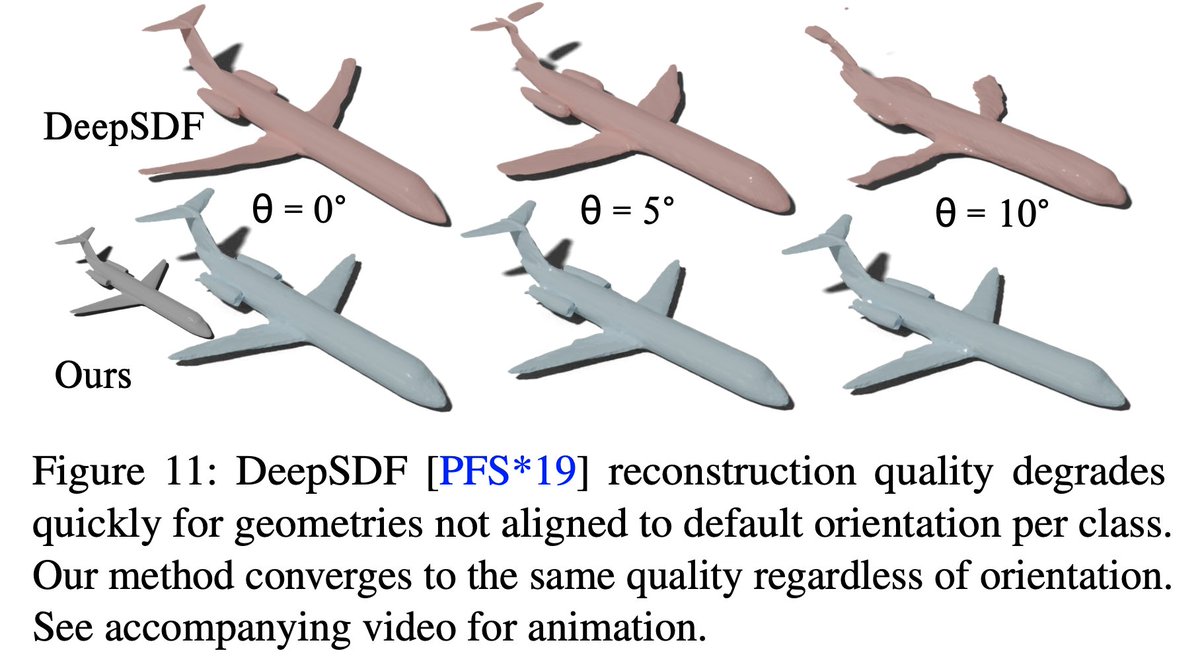

While using latent codes instead of weights has its own advantages, we discuss how sensitive methods like DeepSDF are to training data and how training scales poorly with training set size.

After all, neural networks are a particularly expressive function space.

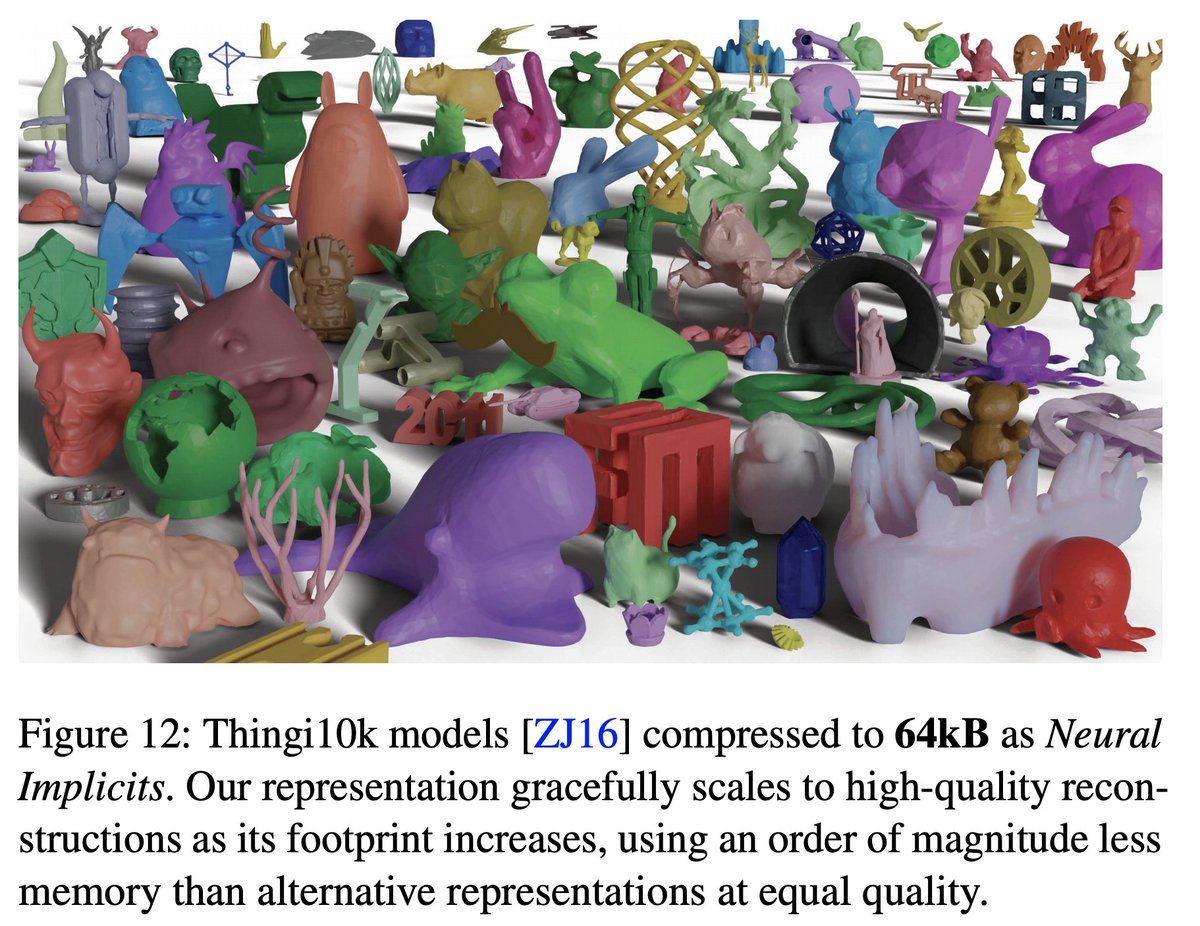

As a stress test, we overfit the same architecture to the whole #Thingi10K dataset. A messy 9 GB of .obj & .stl files with different vertex counts and mesh topologies becomes a 10,000-long list of 64KB vectors.


A lot of interesting opportunities for future work: animated shapes, color/texture/BRDFs, swept volumes, meta networks, and more. This is an interesting (and very fast-moving) area!
