A recent study in @nature couldn't replicate drastic CO2 effects on coral reef #fish behaviour & empirically found no effect of #oceanacidification https://go.nature.com/3hK49UR

Our #metaanalysis of the past decade on this topic concurs https://ecoevorxiv.org/k9dby/

Breakdown thread 👇

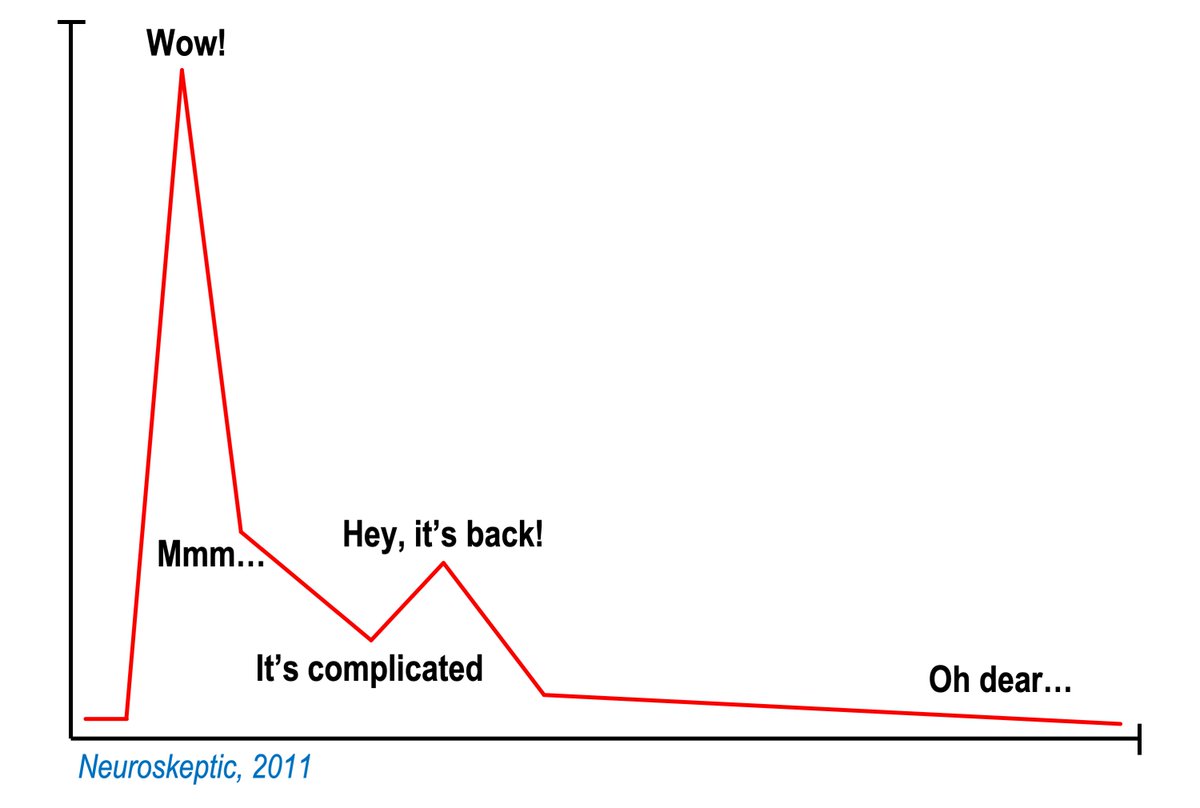

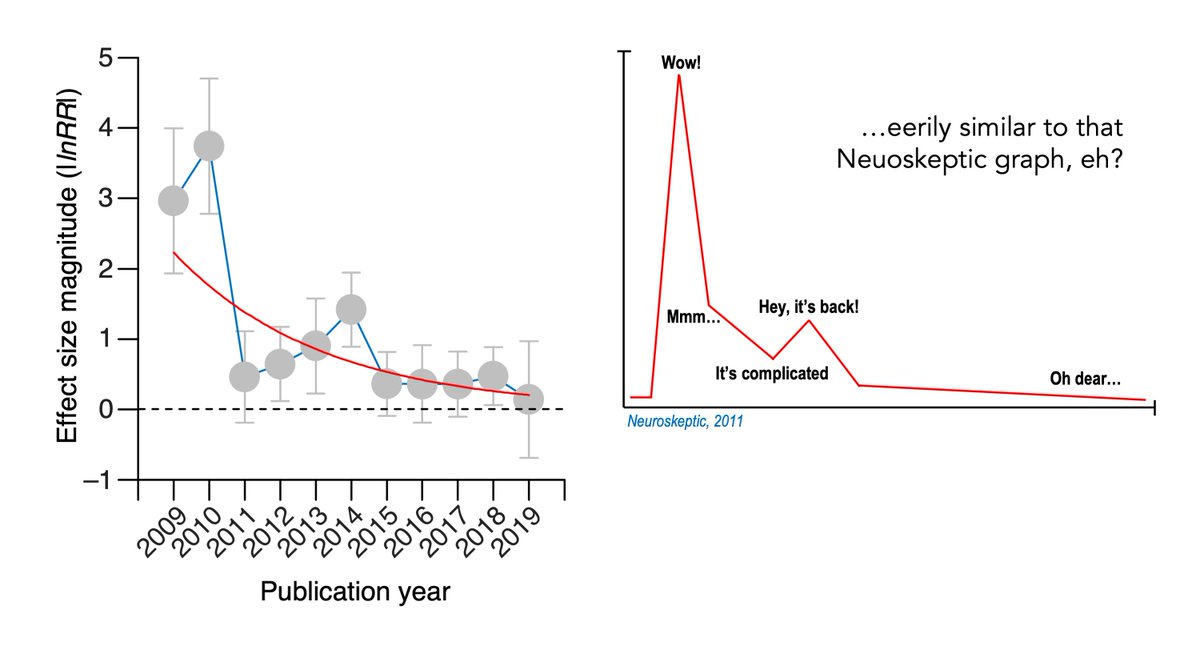

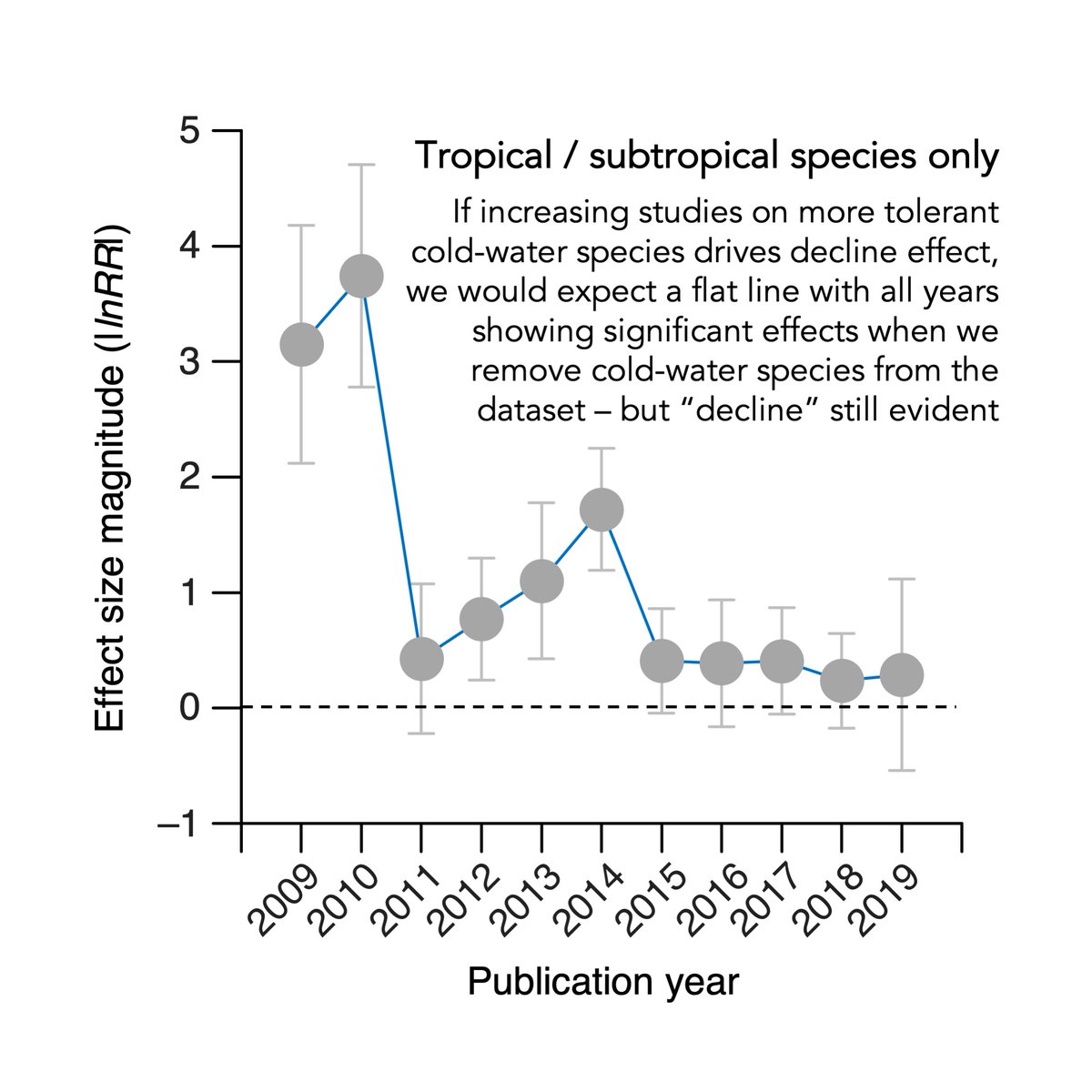

We demonstrate one of the most striking examples of the #DeclineEffect in #ecology to date, w/ reported effects of ocean acidification (OA) on fish behaviour all but disappearing over the past decade

If you’ve never heard of the #DeclineEffect, see: https://bit.ly/2EbZX2o

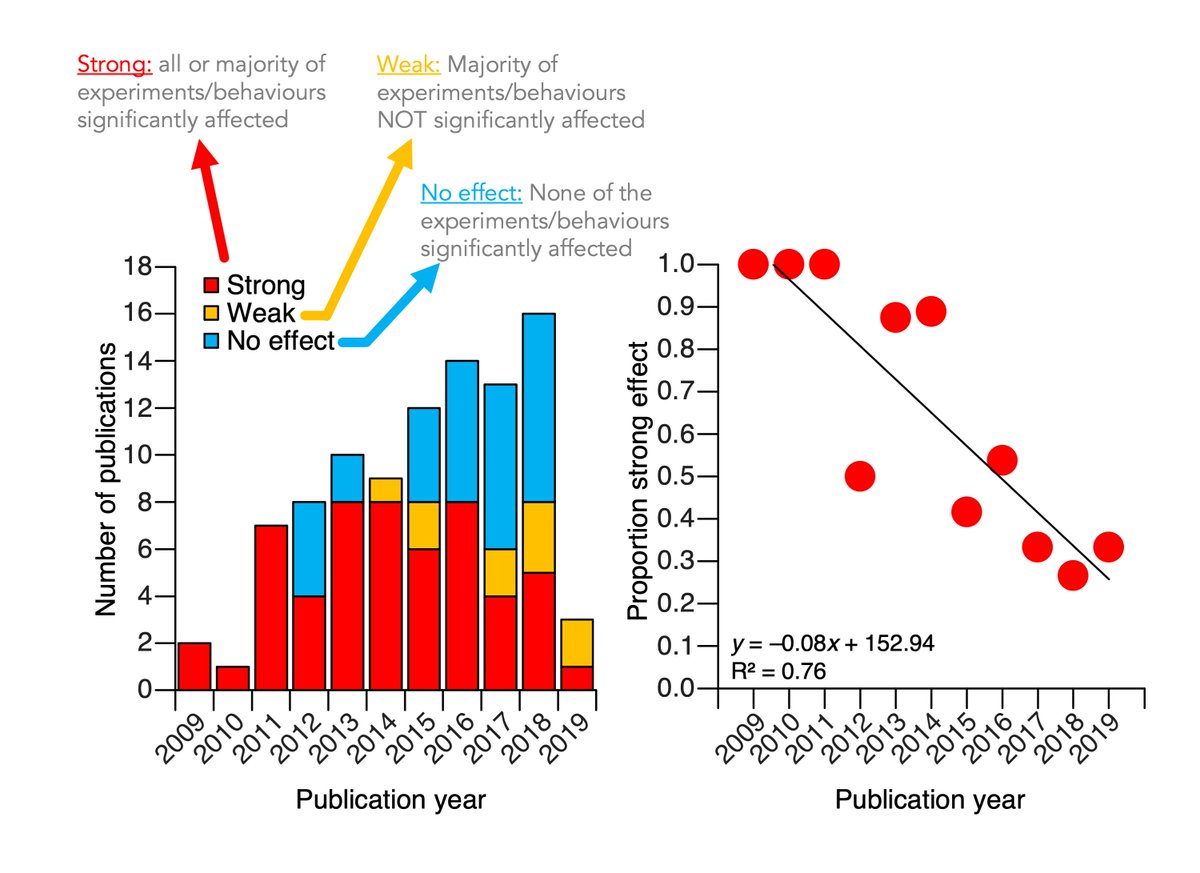

Qualitatively, the number of studies reporting “strong” effects of #oceanacidification on fish behaviour has plummeted over time

Quantitatively, effect size magnitudes (log response ratio) have declined from averages of 3–4 in early, pioneering studies to 0.2–0.4 over the past 5 years
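
For readers unfamiliar with the metric: the log response ratio (lnRR) is conventionally the natural log of the treatment mean divided by the control mean. A minimal sketch, assuming that standard definition (the thread doesn't spell out the authors' exact computation), shows what the reported magnitudes mean as ratios between group means:

```python
import math


def log_response_ratio(mean_treatment: float, mean_control: float) -> float:
    """lnRR = ln(mean_treatment / mean_control); defined only for positive means."""
    if mean_treatment <= 0 or mean_control <= 0:
        raise ValueError("lnRR requires positive group means")
    return math.log(mean_treatment / mean_control)


def fold_change(magnitude: float) -> float:
    """Back-transform an |lnRR| into the ratio between the two group means."""
    return math.exp(magnitude)


for m in (3.0, 4.0, 0.2, 0.4):
    print(f"|lnRR| = {m}: ratio of group means ~ {fold_change(m):.2f}")
```

Back-transforming makes the decline concrete: an |lnRR| of 3–4 implies one group mean roughly 20–55 times the other, whereas 0.2–0.4 implies a difference of about 22–49%.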

While highly significant in early years, mean effect size magnitudes have not differed significantly from zero in 4 of the past 5 years
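
Whether a yearly mean "differs from zero" comes down to whether its confidence interval excludes zero. The sketch below assumes a plain fixed-effect, inverse-variance weighted mean with a normal-approximation 95% CI; that is a simplified stand-in, not necessarily the model used in the meta-analysis. It works on signed lnRR values, because averaged absolute values can never fall below zero and need special handling that the thread doesn't describe. All input numbers are hypothetical.

```python
import math
from typing import Sequence


def weighted_mean_ci(effects: Sequence[float], variances: Sequence[float], z: float = 1.96):
    """Fixed-effect (inverse-variance) mean with a normal-approximation CI."""
    if len(effects) != len(variances) or not effects:
        raise ValueError("need equal-length, non-empty inputs")
    weights = [1.0 / v for v in variances]  # more precise studies count more
    total_w = sum(weights)
    mean = sum(w * e for w, e in zip(weights, effects)) / total_w
    se = math.sqrt(1.0 / total_w)  # standard error of the weighted mean
    return mean, mean - z * se, mean + z * se


def differs_from_zero(effects: Sequence[float], variances: Sequence[float]) -> bool:
    """True if the 95% CI of the weighted mean excludes zero."""
    _, lo, hi = weighted_mean_ci(effects, variances)
    return lo > 0 or hi < 0


# Hypothetical lnRR values and sampling variances for two illustrative years.
early_year = ([3.5, 2.8, 4.1], [0.25, 0.30, 0.40])
recent_year = ([0.3, -0.1, 0.4], [0.09, 0.04, 0.16])
print(differs_from_zero(*early_year), differs_from_zero(*recent_year))
```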

To check whether this #DeclineEffect was due to an increasing number of studies on cold-water species, we removed cold-water studies (b/c these species may be more tolerant to OA than tropical coral reef species)

Nope…

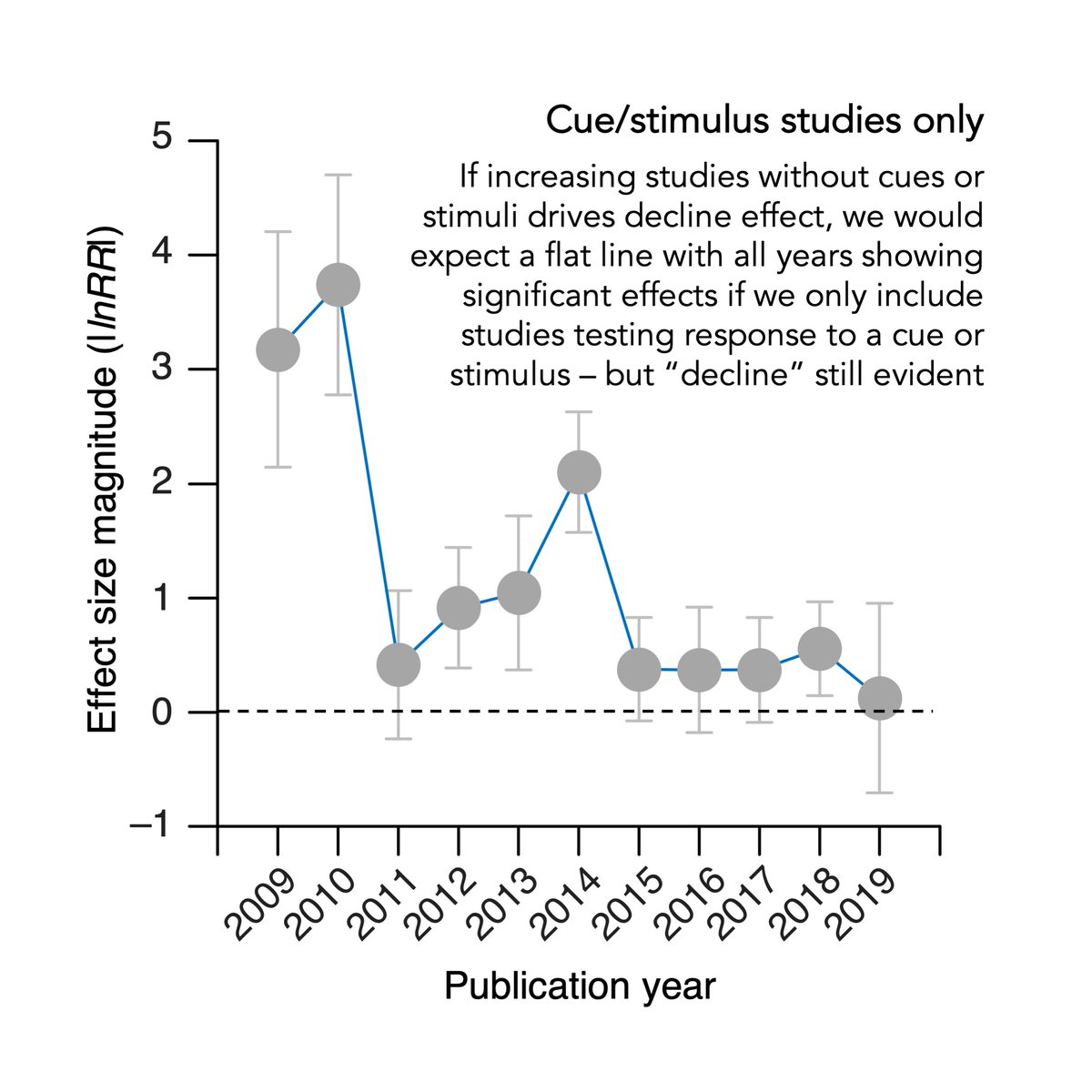

But maybe OA only has an effect when some type of cue or stimulus is involved – after all, the biggest effects involve predator cues!

Again, nope…

OK, so if it’s not biological, then what could it be? Could it be… #BIAS!? 😱

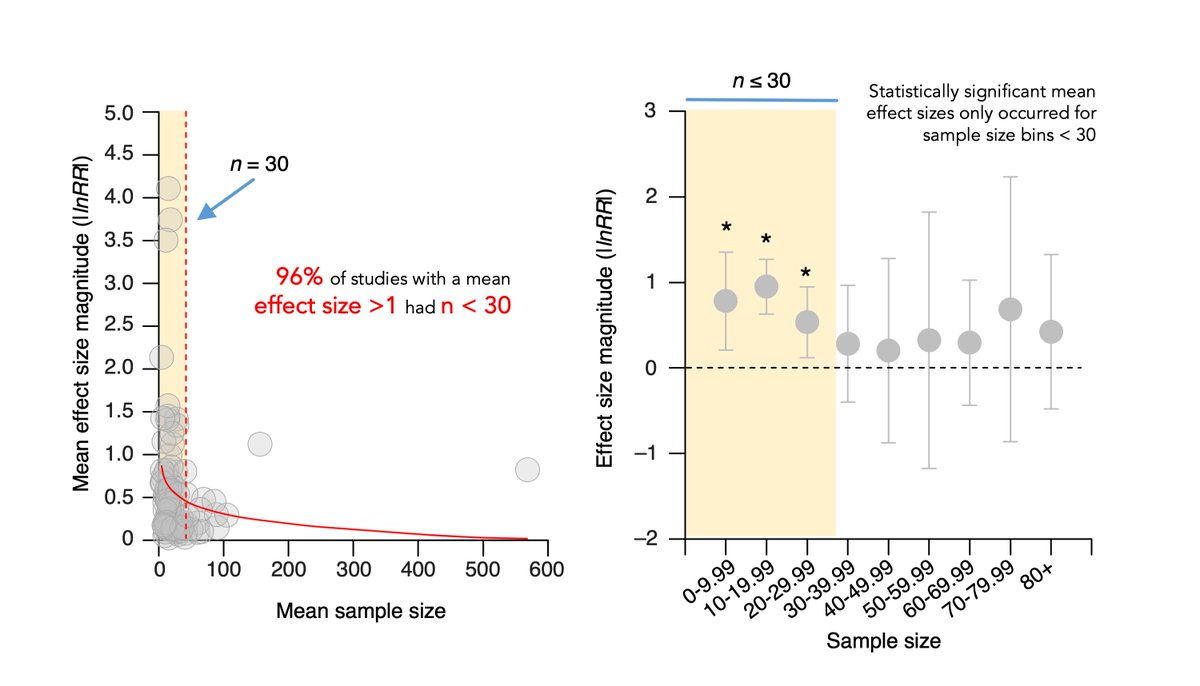

We first checked for methodological bias

Underpowered studies are prone to spurious & inflated results: when they do reach significance, they can often report strong effects that don’t actually exist

Do studies showing super-strong effects have low sample sizes?

Yep...

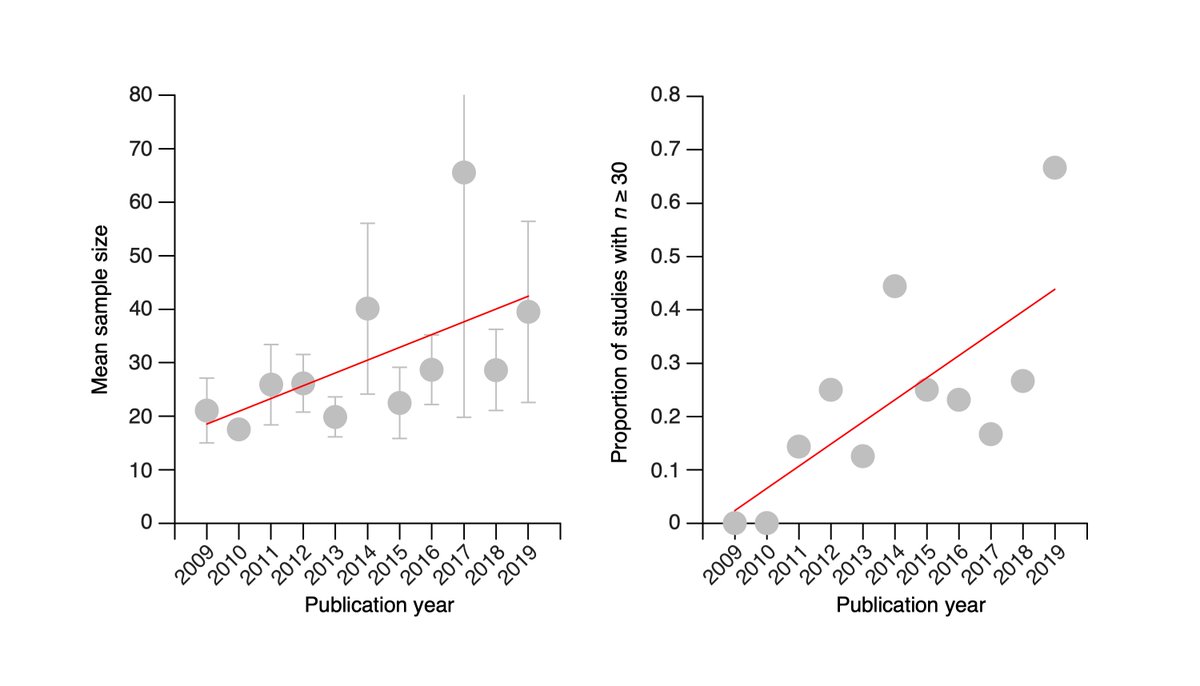
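
Why small n inflates reported effects can be shown with a quick simulation of what is sometimes called the "winner's curse". Assumptions, all hypothetical: a small true standardized effect of 0.2, normally distributed responses with unit variance, and a two-sided z-test at α = 0.05 in place of a real t-test. Among simulated experiments that reach significance, the average observed effect is far larger than the true one when n is small.

```python
import math
import random


def simulate_significant_effects(true_effect: float, n_per_group: int,
                                 n_sims: int = 5000, seed: int = 1) -> tuple[float, float]:
    """Return (power, mean |observed effect| among significant results).

    Assumes two groups of n fish with unit-variance normal responses and a
    two-sided z-test at alpha = 0.05 (a simplification of a real t-test).
    """
    rng = random.Random(seed)
    se_mean = 1.0 / math.sqrt(n_per_group)   # SE of one group's mean
    se_diff = math.sqrt(2.0 / n_per_group)   # SE of the difference in means
    significant = []
    for _ in range(n_sims):
        # The mean of n draws from N(mu, 1) is itself N(mu, 1/sqrt(n)).
        mean_treatment = rng.gauss(true_effect, se_mean)
        mean_control = rng.gauss(0.0, se_mean)
        diff = mean_treatment - mean_control
        if abs(diff / se_diff) > 1.96:
            significant.append(abs(diff))
    power = len(significant) / n_sims
    mean_sig = sum(significant) / len(significant) if significant else float("nan")
    return power, mean_sig


for n in (10, 30, 100):
    power, mean_sig = simulate_significant_effects(true_effect=0.2, n_per_group=n)
    print(f"n={n:>3}: power={power:.2f}, mean significant |effect|={mean_sig:.2f} (true 0.2)")
```

The exact numbers depend on the seed, but the pattern holds: at n = 10 almost no experiments reach significance, and those that do report effects several times larger than the true one; at n = 100 both problems shrink.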

We also found that over time, the average n & the proportion of studies w/ n>30 have increased

This suggests that the number of fish used in experiments partly explains the #DeclineEffect, but some high-n studies show strong effects, so n is not everything…

OK, but lots of fields have underpowered studies – what’s the harm?

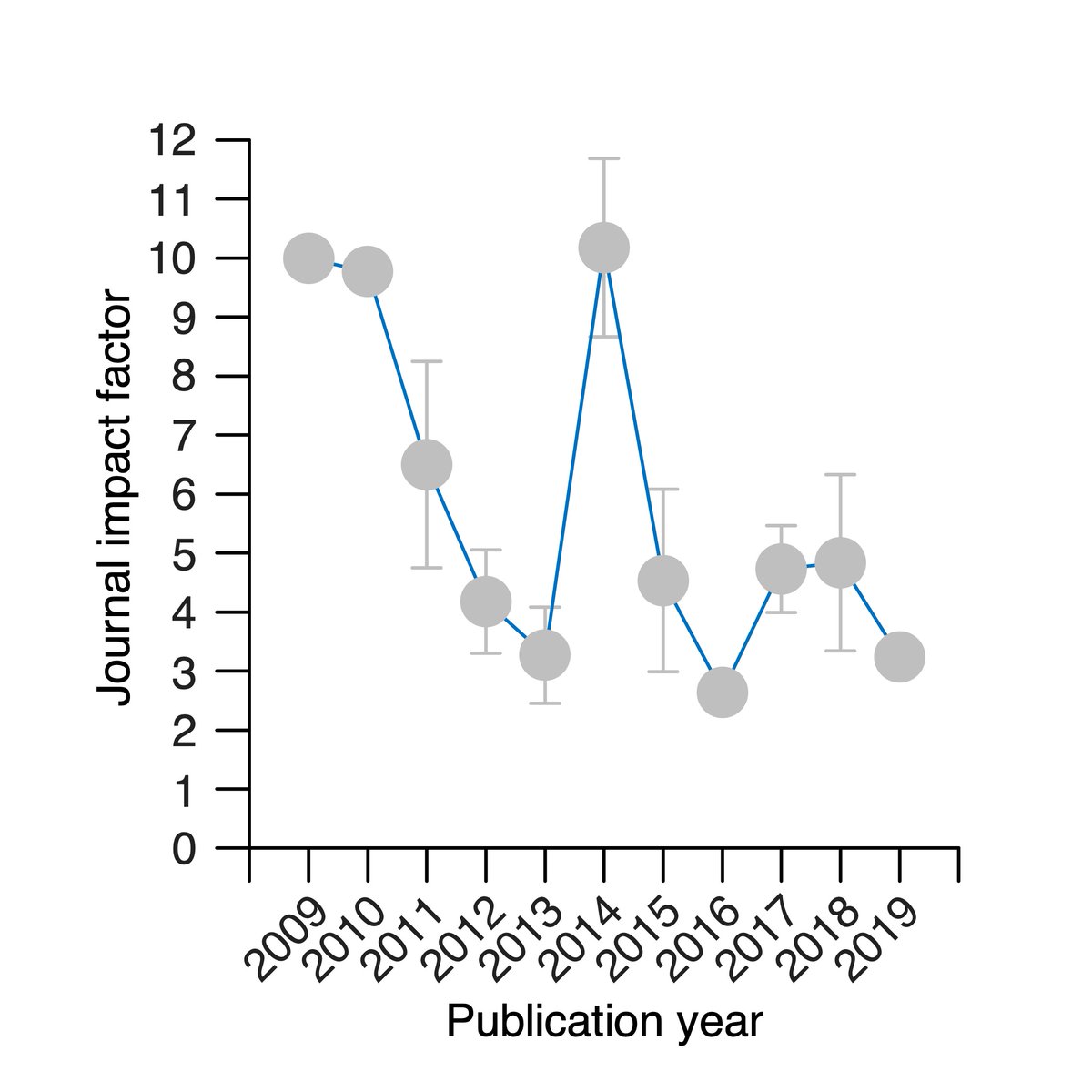

Are studies w/ super-strong effects more likely to be published in influential high-impact-factor (IF) journals & thus get more attention?

… 😬😬

Mirroring the trend in sample size, we saw that the average IF of the journals publishing these papers decreased over time

Note the strong blip in IF in 2014, which was accompanied by a similar blip in mean effect size that year!

Strong evidence for selective publishing
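
Whether yearly journal IF and yearly mean effect size move together (like the shared 2014 blip) can be summarised with a Pearson correlation across years. A minimal sketch; the values below are made-up placeholders, not the study's data:

```python
import math
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equal-length series."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length series with at least 2 points")
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    if sx == 0 or sy == 0:
        raise ValueError("correlation is undefined for a constant series")
    return cov / (sx * sy)


# Hypothetical per-year series (placeholders, NOT the meta-analysis data):
# mean journal IF and mean effect size magnitude, with a shared blip at index 4.
mean_if = [9.0, 8.0, 6.5, 5.5, 8.5, 4.5, 4.0]
mean_effect = [3.5, 2.9, 1.8, 1.0, 2.6, 0.4, 0.3]
print(f"r = {pearson_r(mean_if, mean_effect):.2f}")
```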

This study provides strong evidence that dramatic reports of OA affecting fish behaviour are probably exaggerated &, frankly, false

The strong effects appear linked w/ methodological bias, selective publication of outstanding effects, and other unexplained phenomena

We suggest that OA-fish behaviour studies be given more weight when they use n>30 fish per treatment

It is imperative that authors REPORT SAMPLE SIZE PRECISELY!!!!

A massively frustrating part of this study was trying to decipher n – 34% of studies didn’t report it adequately!!

Reviewers & editors can also help here by being skeptical & critical of manuscripts reporting outstanding effects, especially those w/ n<30

We also strongly suggest that unbiased results be published early and alongside studies showing strong effects

How can we do this?

PRE-REGISTRATION! https://go.nature.com/3c2Q43v

It’s also important for null results to be published in high IF journals so they are given equal public weighting

A scary anecdote w/ this paper: it’s been desk rejected by 5 high IF journals that previously published those extreme OA effects, each taking >1 month to decide…

Researchers should also incorporate best practices for behavioural studies whenever possible

For example, use published methodological guidelines such as https://bit.ly/2RAUghe & use automated technologies for recording behaviour where possible

Finally, be critical! Especially of early findings w/ large effects

Scientists are good at predicting which studies are likely to replicate & which ones won’t (see https://go.nature.com/3mtsCRK) – apply that skepticism early!!

Does #oceanacidification affect marine animals?

In many instances, yeah...

But in light of our results & those of the non-replication @nature study (https://go.nature.com/3hK49UR), direct effects of OA on fish behaviour are likely small
