It's election forecast time! But can we take @FiveThirtyEight or @Economist forecasts at face value? What are forecasters' goals & incentives, especially post 2016 disillusionment? Excited to share a new paper w/ @StatModeling & @CBWlezien http://www.stat.columbia.edu/~gelman/research/unpublished/forecast_incentives3.pdf A thread!

All models require making assumptions, many of which are hard to evaluate. Topics of Twitter debates between @NateSilver538 & @gelliottmorris are a great example of all the nuanced decisions to be made, with few opportunities to appeal to ground truth directly. 2/N

Ideally we could evaluate how well forecasts are calibrated through repeated testing. But to evaluate things like 95% CIs we need many observations of the model in practice. Rarely in reality can we identify more than gross errors. So, forecasters have some wiggle room. 3/N
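A rough sketch of why calibration is so hard to check with few elections. It assumes each forecast is an independent trial, which is optimistic since state-level outcomes are correlated; the function names are illustrative and not from the paper.

```python
import math


def coverage_standard_error(nominal: float, n: int) -> float:
    """Standard error of the observed coverage rate over n independent forecasts."""
    return math.sqrt(nominal * (1 - nominal) / n)


def prob_at_most_k_misses(true_coverage: float, n: int, k: int) -> float:
    """Binomial probability that at most k of n intervals miss the outcome."""
    miss = 1 - true_coverage
    return sum(
        math.comb(n, j) * miss**j * (1 - miss) ** (n - j)
        for j in range(k + 1)
    )


if __name__ == "__main__":
    for n in (10, 50, 500):
        se = coverage_standard_error(0.95, n)
        # Chance of seeing at most one miss if the intervals are truly 95%
        # versus if they are overconfident and only cover 85% of the time.
        calibrated = prob_at_most_k_misses(0.95, n, k=1)
        overconfident = prob_at_most_k_misses(0.85, n, k=1)
        print(f"n={n:4d}  SE={se:.3f}  "
              f"P(<=1 miss | 95%)={calibrated:.3f}  "
              f"P(<=1 miss | 85%)={overconfident:.3f}")
```

With only a handful of forecasts, a clean track record is fairly likely under both the calibrated and the overconfident model, so only gross errors show up.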

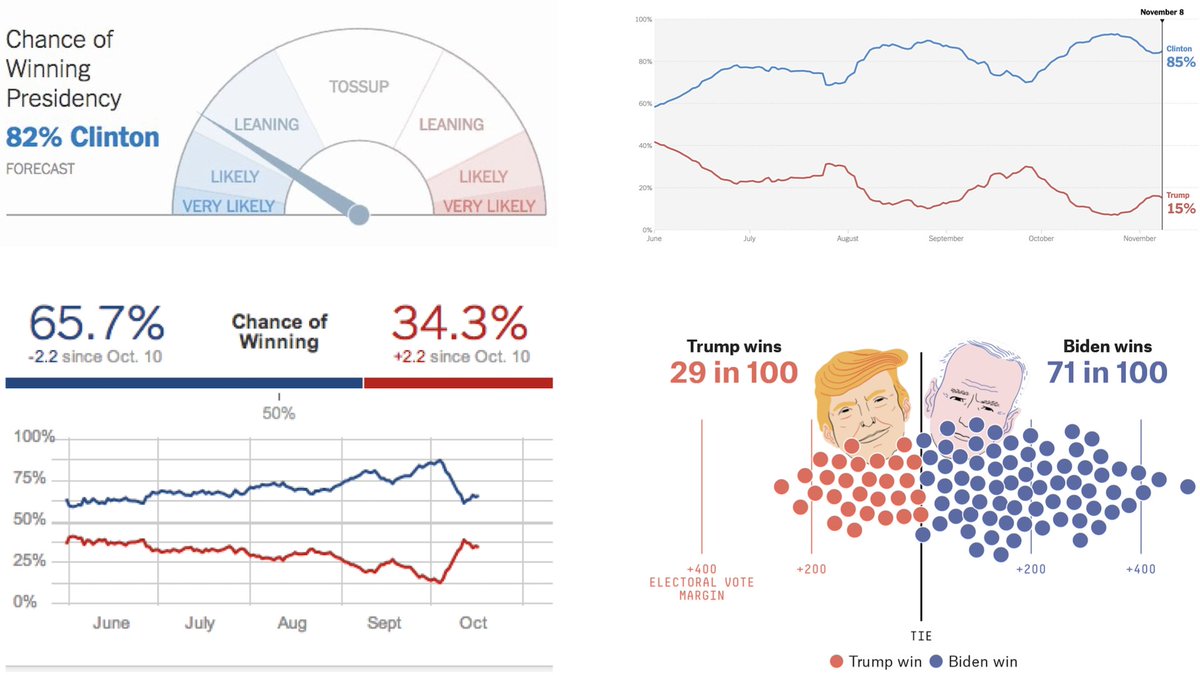

As I've noted elsewhere, this year's election forecast displays, especially @FiveThirtyEight's, emphasize uncertainty more than ever through frequency framed visualizations and characters like Fivey Fox, to whisper in our ear that we shouldn't get too sure of anything. 4/N

Does this say something about forecasters' incentives? I've been pondering uncertainty comm's relation to data scientists' incentives for a while. There's little formal discussion of this. Election forecasts give a concrete scenario to reason about possible goals, constraints. 5/N

For one, wider intervals might be a way to reduce ‘blaming the forecaster’ if the predicted winner doesn’t match what happens. I've observed a need to remain a bit uncertain leading news organizations to strategize about how to avoid "race calling". 6/N

So what happens if your best model is a little too certain? Do you fudge it by adjusting some parameters? Introduce some ambiguous graphics? Maybe a cartoon fox who doesn’t believe in the law of large numbers? It’s very hard to evaluate these strategies. 7/N

But there are also reasons to downplay uncertainty, some of which I discuss here: https://bit.ly/2FdBWbp Some have called this the "lure of incredible certitude." Looking confident is often a goal; consider a mechanic admitting they can't tell the car's problem: you'd go elsewhere. 7/N

Forecast ‘landscapes’ w/ multiple competing forecasts may complicate incentives. Do forecasters aim to balance each other? Is the imagined scoring function based on the expected combination of forecasts? Are tighter intervals more acceptable here? 8/N

Journalism poses interesting constraints on its own. News requires novelty. A good forecast will tend to be stable. But then what do you write about? Ideally maybe journalists discuss model assumptions in depth, but sometimes that happens more on social media instead. 9/N

We make some recommendations. Forecasters have responsibilities to recognize statistical limits of precision and evaluation of calibration, plus fundamentals in election forecasting. For example, expressing win probability to decimal pts is not statistically coherent. 10/N
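One way to see the decimal-point issue, as a back-of-the-envelope sketch rather than the paper's own argument: ask how many independent, comparable elections you would need before an observed win frequency could resolve a given difference in probability. The helper below is hypothetical and uses a simple normal-approximation interval.

```python
import math


def elections_needed(p: float, resolution: float, z: float = 1.96) -> int:
    """Approximate number of independent forecasts needed so that a
    normal-approximation interval around the observed win frequency has
    half-width `resolution` when the true win probability is p."""
    return math.ceil(z**2 * p * (1 - p) / resolution**2)


if __name__ == "__main__":
    # Telling 90% apart from 80% vs. telling 90.0% apart from 90.1%.
    for resolution in (0.1, 0.01, 0.001):
        n = elections_needed(0.9, resolution)
        print(f"resolution {resolution}: roughly {n} elections")
```

Resolving a tenth of a percentage point this way takes on the order of hundreds of thousands of elections, far more than any forecaster will ever observe.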

They should also recognize challenges of uncertainty comm & its evaluation. We suggest thinking about readers’ priors, decision strategies & thresholds, more direct walkthroughs of assumptions, open models, & more frank talk about inevitability of incentives in forecasting. 11/N

This paper was a blast to write and I learned a bit more about political science from my co-authors! Read more here: https://statmodeling.stat.columbia.edu/ or just check out the paper! http://www.stat.columbia.edu/~gelman/research/unpublished/forecast_incentives3.pdf (end)
