I want to highlight something important that's mentioned in the latest OpenAI release. It has been said before, but it stands out to me as a key motif in human feedback and alignment:

*You can't just freeze a reward model and maximize it*

1/

If your plan for “the system does what people want” is anything like “learn or write down what people want, then optimize that,” you need to do two things differently from the naive version:

1. The reward model should be *dynamic*

2. The optimization should be *weak*

2/
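
A minimal sketch of the two changes, under loudly hypothetical assumptions: a one-parameter "policy" x, a made-up true reward -(x - 1)^2 that we only see through sampled labels, and a linear reward model fitted by least squares. "Dynamic" is modeled here as refitting on fresh labels around the current x every round, and "weak" as capping how far x may move per update. All names are illustrative, and this is not claimed to be the method in the OpenAI release.

```python
# Hypothetical toy, not the method from the OpenAI release: a 1-D "policy"
# parameter x, a made-up true reward, and a linear reward model.


def true_reward(x: float) -> float:
    # Assumed ground truth, seen only through labels; best at x = 1.0.
    return -(x - 1.0) ** 2


def fit_reward_model(xs: list[float]) -> float:
    """Least-squares line through (x, true_reward(x)); returns its slope."""
    ys = [true_reward(x) for x in xs]  # stands in for collecting human labels
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var = sum((x - mx) ** 2 for x in xs)
    return cov / var  # the intercept is not needed to take a step


def naive(rounds: int = 40, lr: float = 0.25) -> float:
    # Fit once near the start, freeze the model, then climb it at full strength.
    slope = fit_reward_model([0.0, 0.25, 0.5, 0.75, 1.0])
    x = 0.0
    for _ in range(rounds):
        x += lr * slope
    return x


def dynamic_and_weak(rounds: int = 40, lr: float = 0.25, max_step: float = 0.1) -> float:
    x = 0.0
    for _ in range(rounds):
        # Dynamic: refit on fresh labels collected around the current policy.
        slope = fit_reward_model([x - 0.5, x - 0.25, x, x + 0.25, x + 0.5])
        # Weak: cap each update so the optimizer can't push far on any one fit.
        x += max(-max_step, min(max_step, lr * slope))
    return x


print("naive:", naive(), true_reward(naive()))
print("dynamic + weak:", dynamic_and_weak())
```

In this toy the naive run ends at x = 10.0 with true reward -81.0, while the refit-and-cap loop settles near x = 1.0, the true optimum.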

(If this is already obvious to you, I hope this thread at least gives you more pointers to places where people have already said it. Feel free to chime in with more examples of where this point has been made.)

3/

This comes up any time a simplified model stands in for “what's better/worse” and another process optimizes against it.

Most obviously in "reward learning" alignment agendas, but also in other areas that I think share this underlying structure.

4/

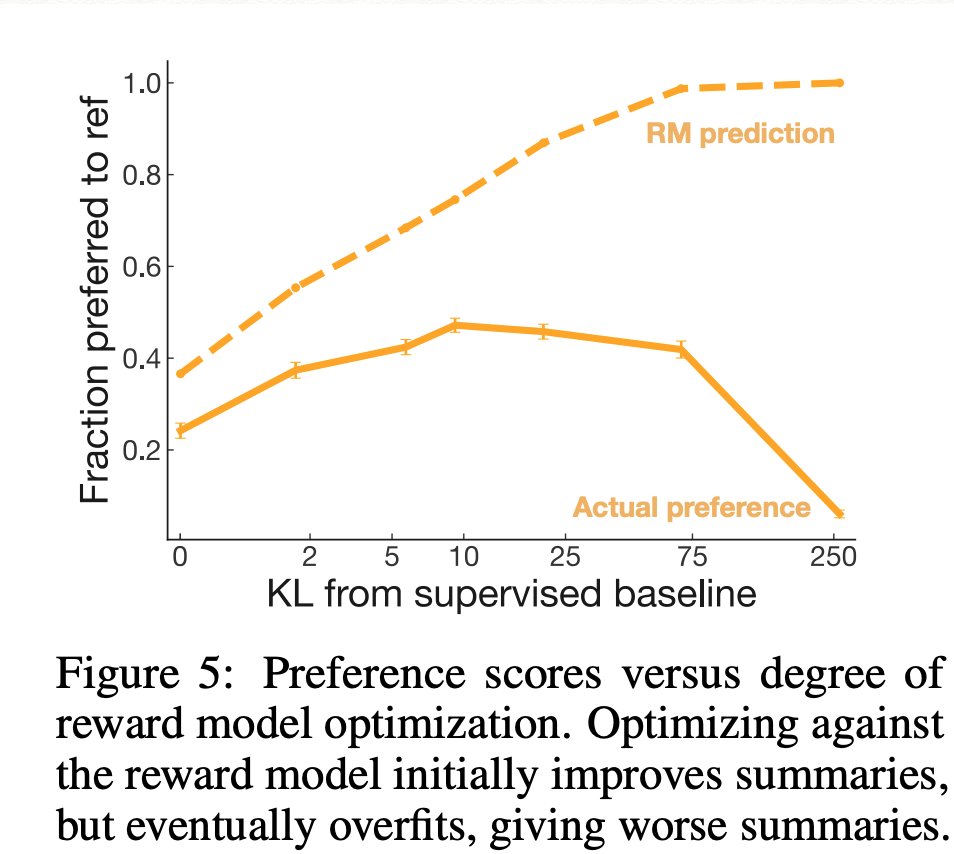

Ibarz et al. pointed this out when training Atari agents from human feedback:

https://arxiv.org/pdf/1811.06521.pdf

(red curve: predicted reward; blue curve: actual reward)

At some point the system learns to "hack"/"game" the learned model.

5/
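
A toy reproduction of the same shape, under hypothetical assumptions: a 1-D parameter x, a made-up true reward -(x - 1)^2, and a linear reward model fitted once on x in [0, 1] and then frozen. It is not the Atari setup from the paper. Hill-climbing the frozen model makes predicted reward rise at every step, while actual reward peaks at step 4 (x = 1.0) and then falls.

```python
# Hypothetical toy, not the Ibarz et al. setup: learn a reward model once,
# freeze it, optimize against it, and log predicted vs. actual reward.


def true_reward(x: float) -> float:
    # Assumed "actual" reward (the blue curve); best at x = 1.0.
    return -(x - 1.0) ** 2


def fit_reward_model(xs: list[float]) -> tuple[float, float]:
    """Least-squares line through (x, true_reward(x)); returns (slope, intercept)."""
    ys = [true_reward(x) for x in xs]
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = cov / sum((x - mx) ** 2 for x in xs)
    return slope, my - slope * mx


def train_against_frozen_model(steps: int = 12, lr: float = 0.25) -> list[tuple[float, float]]:
    """Hill-climb the frozen model; record (predicted, actual) at each step."""
    slope, intercept = fit_reward_model([0.0, 0.25, 0.5, 0.75, 1.0])  # never refit
    x, history = 0.0, []
    for _ in range(steps + 1):
        history.append((slope * x + intercept, true_reward(x)))
        x += lr * slope  # the gradient of a linear model is just its slope
    return history


for step, (pred, actual) in enumerate(train_against_frozen_model()):
    print(f"step {step:2d}  predicted {pred:6.3f}  actual {actual:6.3f}")
```

The two curves start moving in opposite directions as soon as x leaves the range the model was fitted on ([0, 1] here).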