VERY excited to announce new work from our team (one of the safety teams @OpenAI)!! 🎉

We wanted to make training models to optimize human preferences Actually Work™.

We applied it to English abstractive summarization, and got some pretty good results.

A thread 🧵: (1/n) https://twitter.com/OpenAI/status/1301914879721234432

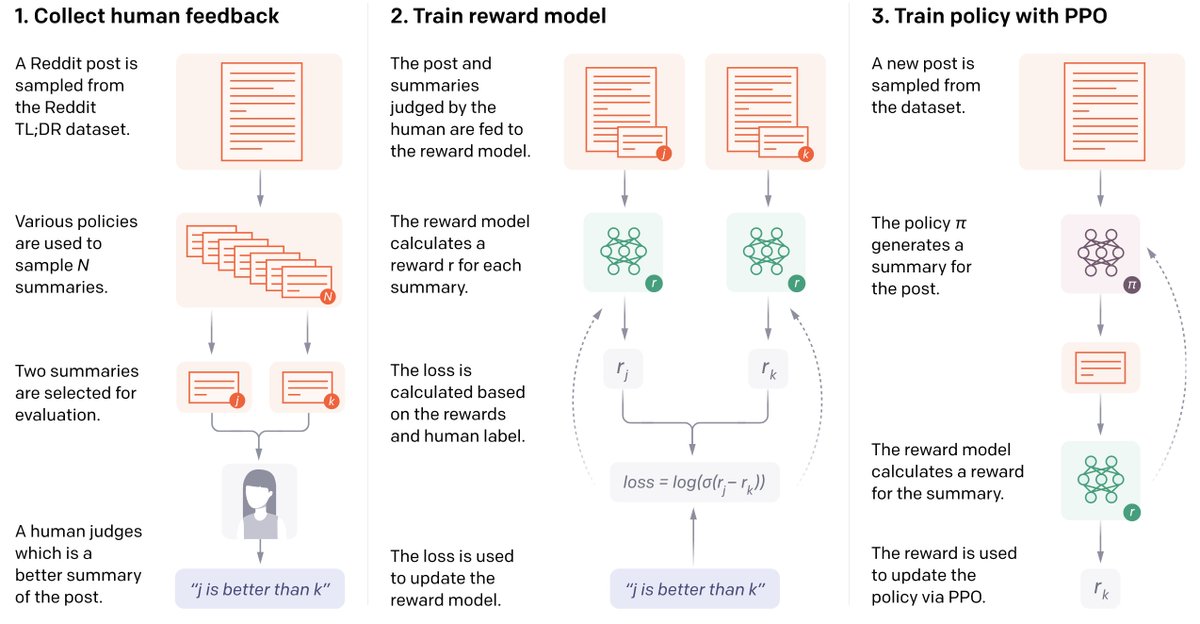

Our basic approach:

1) We collect a dataset of humans comparing two summaries.

2) We train a reward model (RM) to predict the human-preferred summary.

3) We train a summarization policy to maximize the RM 'reward' using RL (PPO specifically). (2/n)
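A minimal sketch of what step 2 can look like. The thread doesn't spell out the loss, so this assumes the RM gives each summary a single scalar score and is trained with a logistic loss on the score difference, a common choice for comparison data. The function names are made up for illustration.

```python
import math


def pairwise_rm_loss(reward_preferred: float, reward_rejected: float) -> float:
    """Negative log-probability that the human-preferred summary wins,
    under a logistic model of the comparison (an assumption, see lead-in)."""
    margin = reward_preferred - reward_rejected
    # -log(sigmoid(margin)) == log(1 + exp(-margin)), written to avoid overflow.
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


def predicted_preference(reward_a: float, reward_b: float) -> float:
    """Probability the RM assigns to 'summary a is preferred over summary b'."""
    diff = reward_a - reward_b
    if diff >= 0:
        return 1.0 / (1.0 + math.exp(-diff))
    e = math.exp(diff)
    return e / (1.0 + e)
```

The loss is small when the RM scores the human-preferred summary higher and large when it gets the comparison backwards. In step 3, the policy is then trained to produce summaries that score highly under this RM.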

(All our models are transformers. We take an earlier version of GPT-3, and fine-tune it via supervised learning to predict the human-written TL;DRs from the Reddit TL;DR dataset. We use that to initialize all our models.)

(3/n)

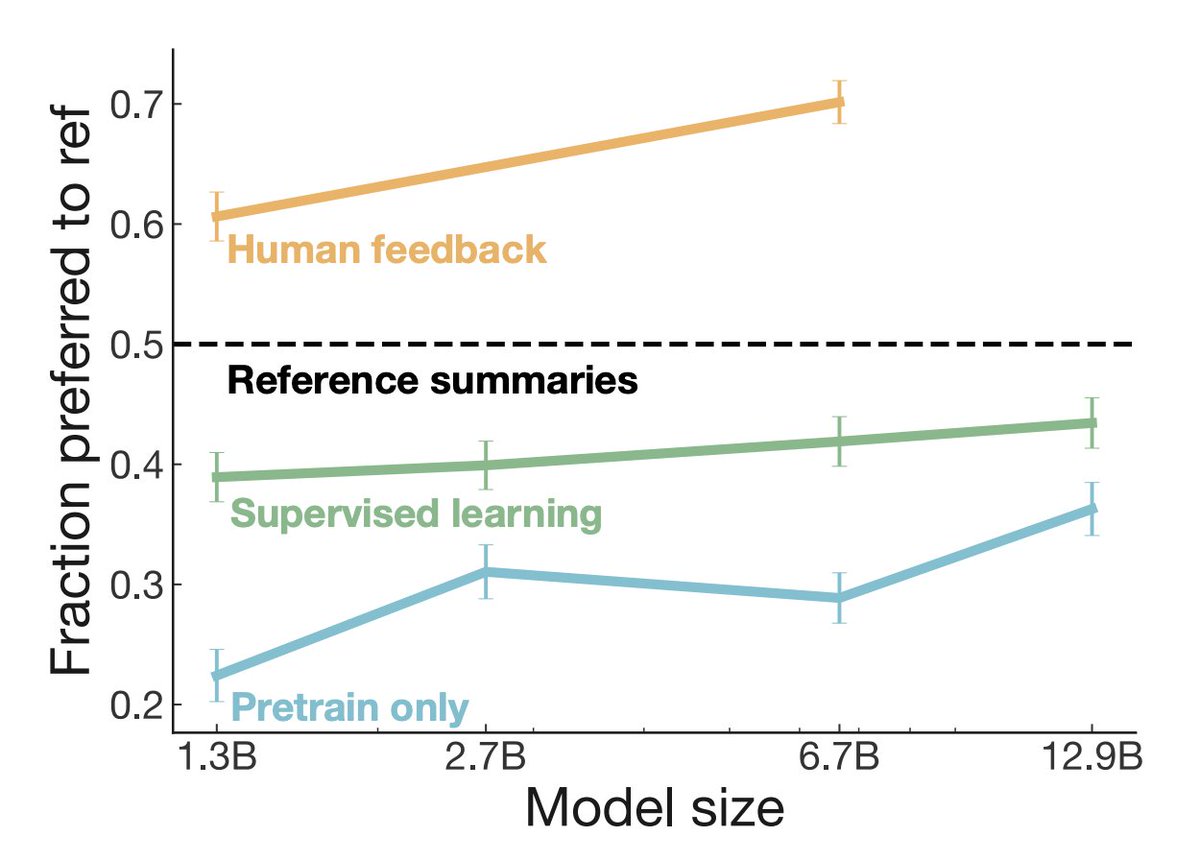

I think our results are pretty convincing. Our labelers prefer summaries from our 6.7B human feedback model to the human-written reference TL;DRs ~70% of the time.

(Note: this doesn't mean we're at 'human level' -- TL;DRs aren't the best summaries that humans can write.)

(4/n)

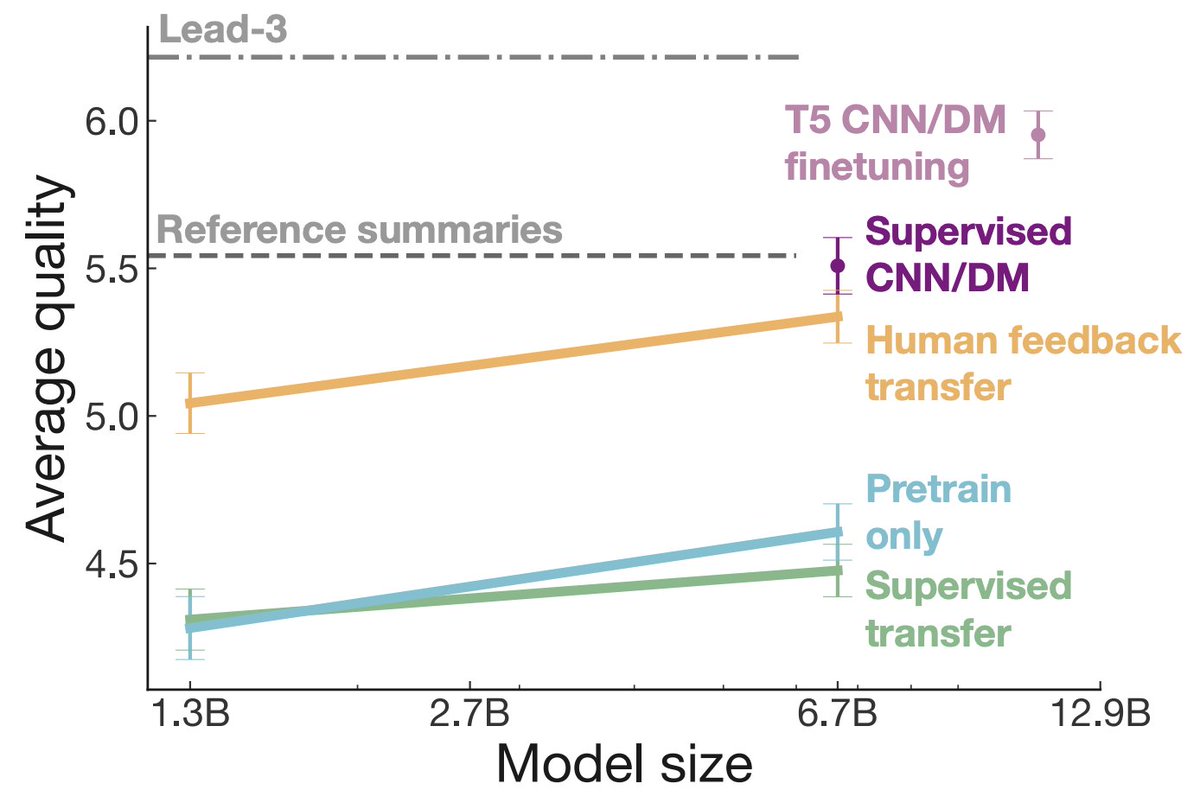

When we transferred our Reddit-trained models to summarize CNN/DM news articles, they 'just worked'.

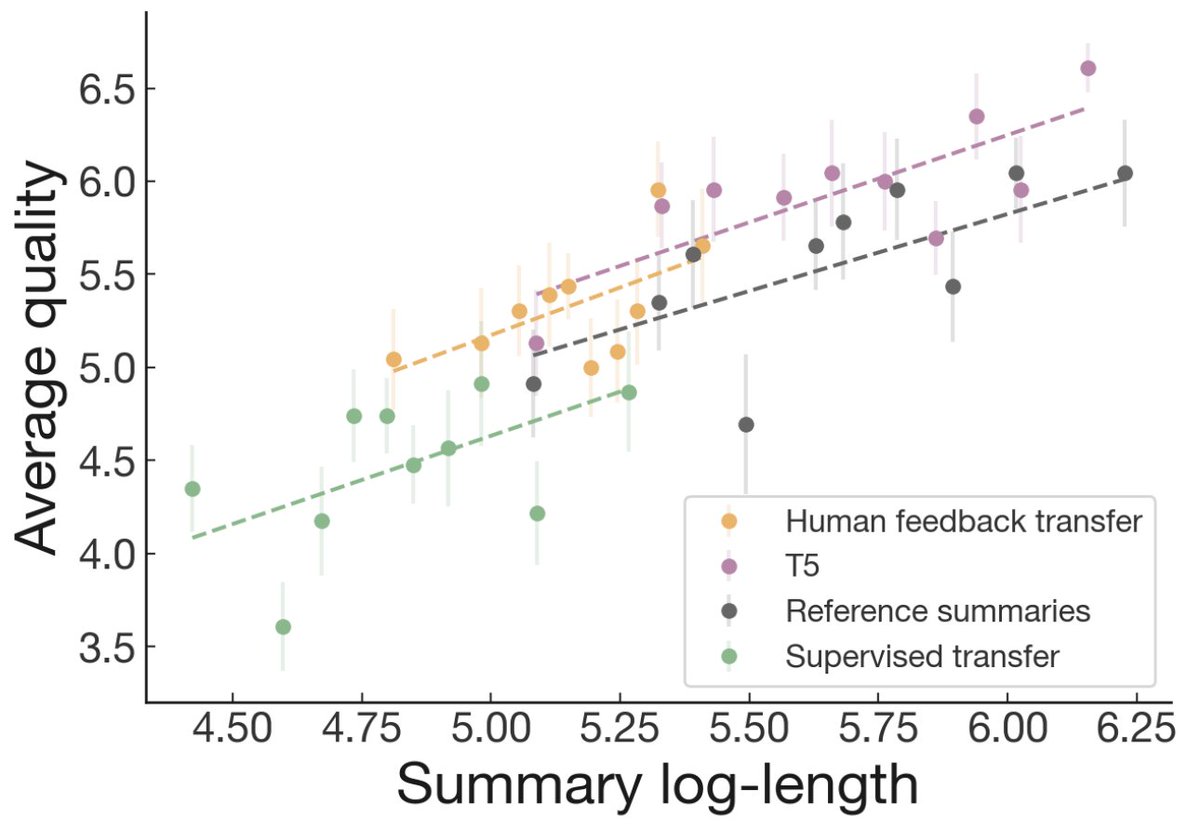

Summaries from our transfer model are >2x shorter, but they almost match supervised learning on CNN/DM. When controlling for length, we beat the CNN/DM ref summaries. (5/n)

Fun fact: our labelers think the lead-3 baseline is better than the CNN/DM reference summaries! We checked this ourselves, and agreed with our labelers' ratings.

Not sure what this means for the usefulness of CNN/DM as a dataset. (This wasn't the case for TL;DR.)

(6/n)
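
For context, lead-3 just means taking the first three sentences of the article as the summary. A minimal sketch, assuming a naive regex sentence splitter (a real evaluation would likely use a proper sentence tokenizer); `lead_3` is a name made up here.

```python
import re


def lead_3(article: str, n: int = 3) -> str:
    """Return the first n sentences of the article as its 'summary'."""
    # Naive splitter: break on whitespace that follows '.', '!' or '?'.
    sentences = re.split(r"(?<=[.!?])\s+", article.strip())
    return " ".join(sentences[:n])


# Invented example article, for illustration only.
article = (
    "A storm hit the coast. Thousands lost power. "
    "Crews are working. Schools reopen Monday."
)
summary = lead_3(article)
```

News articles tend to front-load the key facts, which is part of why such a simple baseline is hard to beat.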

Why are our results better than the last time we did this? (https://openai.com/blog/fine-tuning-gpt-2/)

Our 'secret ingredient' is working very closely with our labelers. We created an onboarding process, had a Slack channel where they could ask us questions, gave them lots of feedback, etc. (7/n)

As a result, our researcher-labeler agreement is about the same as researcher-researcher agreement. This wasn't the case last time, when the data was collected online, which made it harder to track.

(We also scaled up model size. 😇)

(8/n)
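
Agreement here can be read as the fraction of comparisons where two people pick the same summary. A minimal sketch under that assumption; the choices below are invented for illustration and are not data from the paper.

```python
from typing import Sequence


def agreement_rate(choices_a: Sequence[int], choices_b: Sequence[int]) -> float:
    """Fraction of comparisons on which two annotators chose the same summary."""
    if len(choices_a) != len(choices_b):
        raise ValueError("both annotators must label the same comparisons")
    if not choices_a:
        raise ValueError("need at least one comparison")
    matches = sum(a == b for a, b in zip(choices_a, choices_b))
    return matches / len(choices_a)


# Hypothetical choices (0 = first summary, 1 = second) on 8 shared comparisons.
researcher_1 = [0, 1, 1, 0, 1, 0, 0, 1]
researcher_2 = [0, 1, 0, 0, 1, 0, 1, 1]
labeler = [0, 1, 1, 0, 0, 0, 1, 1]

researcher_researcher = agreement_rate(researcher_1, researcher_2)
researcher_labeler = agreement_rate(researcher_1, labeler)
```

If the two rates come out close, the labelers are about as consistent with a researcher as another researcher would be.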

IMO one of the key takeaways of our paper is to work really closely with the humans labeling your data. This wasn't my approach at all in academia (which was more of the 'put it on MTurk and pray' variety). But without it our results wouldn't have been nearly as good. (9/n)

Finally, why is this AI safety? Good question!

Our team's goal is to train models that are aligned with what humans really want them to do.

This isn't going to happen by default. Language models trained on the Internet will make up facts. They aren't 'trying' to be honest. (10/n)

ML models optimize what you literally tell them to. If this is different from what you want, you'll get behavior you don't want.

This is sometimes called "reward misspecification". There are lots of examples of this happening (e.g. https://openai.com/blog/faulty-reward-functions/).

(11/n)
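
A toy illustration of the gap between what you literally optimize and what you want. Everything here is invented for illustration: the proxy reward counts keyword mentions, and picking the candidate with the highest proxy score selects keyword spam over the readable summary.

```python
def proxy_reward(summary: str, keywords: set) -> int:
    """What we literally told the optimizer to maximize: keyword mentions."""
    return sum(word.strip(".,").lower() in keywords for word in summary.split())


# Hypothetical candidates and keywords, not taken from any real dataset.
candidates = [
    "The council approved the budget after a long debate.",
    "Budget budget budget council council budget.",
]
keywords = {"budget", "council"}

# 'Optimizing' the proxy: choose whichever candidate scores highest.
best_by_proxy = max(candidates, key=lambda s: proxy_reward(s, keywords))
```

Here `best_by_proxy` is the second candidate, even though a human reader would clearly prefer the first. The proxy and the real goal agree on ordinary text and come apart once something optimizes hard against the proxy.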

For summarization, "what humans want" is fairly straightforward.

But this will get trickier when we move to harder problems + more powerful AI systems, where small differences between 'what's good for humans' and 'what we're optimizing' could have big consequences. (12/n)

One encouraging thing to me about this line of work: safety/alignment-motivated research can also be *practical*.

It can help you train models that do what you want, and avoid harmful side-effects. That makes AI systems way more useful and helpful!! (13/n)

This is only part of the story though -- to optimize 'what humans want', we need to figure out what that is! That'll require cross-disciplinary work from a lot of fields, plus participation from people who are actually going to be affected by the technology. (14/n)

The "AI research bubble" isn't really a bubble any more. Our models are being used in the real world. It's only going to increase from here. So, the 'safety' of a model doesn't just depend on the model itself, but on how it's deployed as part of a system that affects humans. (15/n)

All of this work is with my incredible collaborators on the Reflection team: @paulfchristiano, Nisan Stiennon, Long Ouyang, Jeff Wu, Daniel Ziegler, and @csvoss, plus @AlecRad and Dario Amodei. 🔥 ❤️

(fin)
