The field of neuroscience knows far too little about how nose boops affect the brain. To remedy this gaping hole in the literature, I scraped the @neuralink demo video to measure the response of neurons to nose boops. 1/

Gertrude is a happy pig with a brain implant in her somatosensory cortex. Whenever her snout is booped, she responds with a train of spikes. I downloaded the video (https://youtu.be/DVvmgjBL7...), dumped frames using ffmpeg, and manually annotated boop and unboop events in ImageJ. 2/

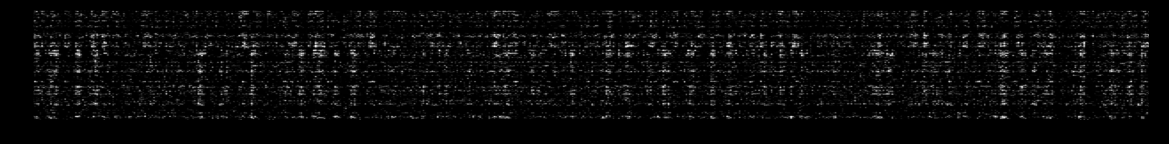
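
Here is a minimal sketch of turning those annotations into a per-frame contact signal. It assumes the boop and unboop frames were exported from ImageJ as two lists of frame indices. That export format and the name `contact_state` are hypothetical.

```python
import numpy as np

def contact_state(boop_frames, unboop_frames, n_frames):
    """Return a 0/1 array that is 1 on frames where the snout is in contact.

    boop_frames / unboop_frames: frame indices where contact starts / ends
    (hypothetical export format of the ImageJ annotations).
    """
    state = np.zeros(n_frames, dtype=int)
    unboops = sorted(unboop_frames)
    for start in sorted(boop_frames):
        # Contact lasts until the first unboop after this boop, or the end of the clip
        later = [u for u in unboops if u > start]
        end = later[0] if later else n_frames
        state[start:end] = 1
    return state
```

For example, `contact_state([2, 7], [5], 10)` marks frames 2 to 4 and 7 to 9 as contact.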

The video shows almost a minute of spike rasters (action potentials of different neurons, binned at about 50 Hz). Grabbing this data is as simple as loading up a frame and cropping it. Here's about 20 seconds of data. About half the raster is obscured by the pig video. 3/

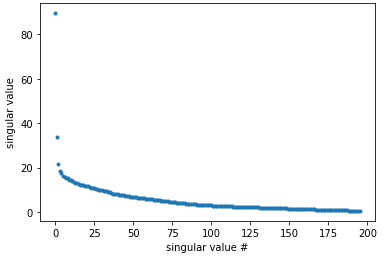
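
The cropping step could look roughly like the sketch below. The crop bounds and the channel count are hypothetical and would have to be read off the actual frames. The sketch also assumes spikes are drawn as bright ticks on a dark background and treats each pixel column as one time bin.

```python
import numpy as np

def frame_to_raster(frame, top, bottom, left, right, n_channels, threshold=128):
    """Crop the raster panel out of an RGB video frame and binarize it.

    frame: (H, W, 3) uint8 array. Crop bounds and n_channels are hypothetical
    values chosen by eye. Assumes spikes are bright ticks on a dark background.
    Returns a (n_channels, width) boolean array, True where a tick is drawn.
    """
    panel = frame[top:bottom, left:right].astype(float)
    gray = panel.mean(axis=2)  # collapse the color channels
    rows_per_channel = gray.shape[0] // n_channels
    # Drop leftover pixel rows, then group rows into one block per channel
    gray = gray[: rows_per_channel * n_channels]
    blocks = gray.reshape(n_channels, rows_per_channel, -1)
    return blocks.max(axis=1) > threshold
```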

The data is very low-dimensional, as a singular value decomposition attests. I therefore focused on the tuning of the average across all the neurons in the spike raster. 4/

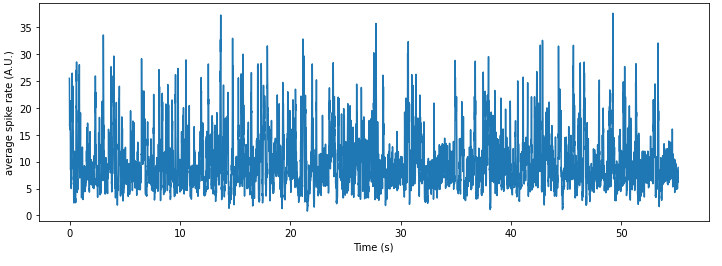

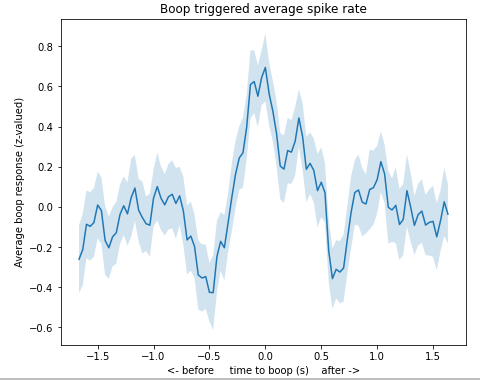
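
Here is a sketch of the low-dimensionality check. It assumes the raster has already been turned into a (channels, time bins) array. If the first singular component captures most of the variance, averaging across channels keeps most of the signal. The function names are illustrative.

```python
import numpy as np

def variance_explained(raster):
    """Fraction of variance captured by each singular component.

    raster: (channels, time bins) array of spike counts.
    """
    # Center each channel so the SVD describes fluctuations, not mean rates
    centered = raster - raster.mean(axis=1, keepdims=True)
    s = np.linalg.svd(centered, compute_uv=False)
    return s**2 / np.sum(s**2)

def population_rate(raster):
    """Average across channels: one value per time bin."""
    return raster.mean(axis=0)
```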

By time-delaying the boops, we obtain a time-lag matrix from which we can estimate a spike-triggered average that corrects for correlations between boops. Lo and behold... a spike-triggered average! It shows the average change in the spike response right before and after a boop. 5/
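Here is a minimal sketch of the time-lag approach. It assumes a binary boop-onset train and a population rate sampled on the same ~50 Hz bins. Solving least squares on the lagged matrix, rather than just averaging the rate around each boop, is one standard way to correct for correlations between boops. Whether this matches the exact fit used here is an assumption, and `lagged_design` and `boop_kernel` are illustrative names.

```python
import numpy as np

def lagged_design(stim, lags):
    """Stack time-shifted copies of stim into a (T, len(lags)) matrix.

    Column k at bin t holds stim[t - lags[k]]: a positive lag means the boop
    happened lags[k] bins before bin t, a negative lag means after it.
    Bins shifted in from outside the recording are zero-padded.
    """
    T = len(stim)
    X = np.zeros((T, len(lags)))
    for k, lag in enumerate(lags):
        if lag >= 0:
            X[lag:, k] = stim[: T - lag]
        else:
            X[:lag, k] = stim[-lag:]
    return X

def boop_kernel(stim, rate, lags):
    """Least-squares estimate of the rate change at each lag around a boop.

    Solving (X^T X) w = X^T y, instead of only averaging X^T y, removes the
    correlations between boops at different lags (e.g. periodic boops).
    """
    X = lagged_design(stim, lags)
    X = np.column_stack([X, np.ones(len(stim))])  # intercept = baseline rate
    w, *_ = np.linalg.lstsq(X, rate, rcond=None)
    return w[:-1]  # drop the baseline term
```

With ~50 Hz bins, lag k corresponds to roughly k/50 s, so a window of about ±1 s would be `range(-50, 51)`.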

We don't know the relative timing of the pig video feed and the displayed raster, so time 0 is nominal. It's possible that the temporal response of the neurons is purely causal (after the boop), but there might also be anticipatory inhibition of the response (around -0.5 s). 6/

There appear to be a few notches in the response, at around -0.5, 0.2, and 0.7 s. These could be related to the pig's sniffing: the rapid intake of air through the snout follows a specific rhythm, so boops tend to be periodic. You can see it in the vid. 7/

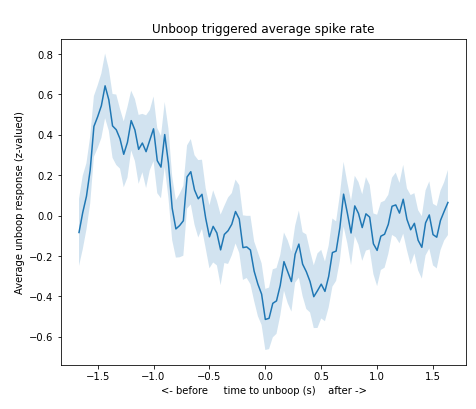
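
One way to check whether boops really come at a sniff-locked rhythm is to look for a peak in the autocorrelation of the boop train. A peak at lag L bins would suggest a period of about L/50 s at the ~50 Hz bin rate. This is a suggested check, not part of the original analysis, and `autocorrelation` is an illustrative name.

```python
import numpy as np

def autocorrelation(x, max_lag):
    """Normalized autocorrelation of x for lags 0..max_lag (in bins)."""
    x = np.asarray(x, dtype=float) - np.mean(x)
    denom = np.dot(x, x)
    return np.array(
        [np.dot(x[: len(x) - k], x[k:]) / denom for k in range(max_lag + 1)]
    )
```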

We can do the same for the removal of contact between snout and object (unboops) and obtain an unboop spike-triggered average. Unboops lead to a decrease in spike rate. 8/
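Given a per-frame 0/1 contact signal, separate boop and unboop event trains fall out of its first difference. Each train can then go through the same time-lag analysis. This is a sketch, and `boop_events` is an illustrative name.

```python
import numpy as np

def boop_events(state):
    """Split a 0/1 contact signal into boop (contact onset) and unboop
    (contact offset) event trains of the same length."""
    # prepend=0 so contact present on the first frame counts as an onset
    d = np.diff(np.asarray(state, dtype=int), prepend=0)
    boops = (d == 1).astype(float)
    unboops = (d == -1).astype(float)
    return boops, unboops
```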

P.S. The most annoying part of this analysis (and the reason I would not advocate analyzing found vids) was getting a good time base from the images. It turns out that the raster frame rate is uneven: on some video frames the raster does not update, and on others it updates by a lot. 9/
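The frames where the raster did not update can be flagged by comparing consecutive crops. The `tol` parameter allows for small differences from video compression, and both it and the name `stalled_frames` are assumptions for illustration.

```python
import numpy as np

def stalled_frames(crops, tol=0):
    """Flag frames whose raster crop matches the previous one within tol,
    i.e. frames on which the raster display did not update.

    crops: (n_frames, H, W) array of cropped raster images.
    Returns a boolean array; the first frame is never flagged.
    """
    crops = np.asarray(crops, dtype=float)
    # Largest per-pixel change between each frame and the one before it
    diffs = np.abs(np.diff(crops, axis=0)).reshape(len(crops) - 1, -1).max(axis=1)
    return np.concatenate([[False], diffs <= tol])
```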

I tracked the red line in the raster image to get an idea of the true current frame, then used a robust regression (Huber loss in sklearn) to get a coefficient translating frame number into pixels on the screen. Then I could translate everything into the same time base. 10/10
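Here is a rough sketch of those two steps using sklearn's `HuberRegressor`. The color thresholds for spotting the red line are hypothetical. The sketch also assumes the line's column position has already been unwrapped if the line sweeps across the panel and restarts.

```python
import numpy as np
from sklearn.linear_model import HuberRegressor

def red_line_column(frame, min_red=200, max_other=80):
    """Column index of the red cursor line in an RGB raster crop, or -1.

    Color thresholds are hypothetical and would need tuning for the video.
    """
    r, g, b = (frame[..., c].astype(int) for c in range(3))
    mask = (r >= min_red) & (g <= max_other) & (b <= max_other)
    counts = mask.sum(axis=0)  # red pixels per column
    return int(np.argmax(counts)) if counts.max() > 0 else -1

def fit_frame_to_pixel(frame_numbers, line_columns):
    """Robustly fit pixel column = slope * frame number + intercept.

    The Huber loss limits the pull of frames where the display stalled
    or jumped, which an ordinary least-squares fit would not.
    """
    X = np.asarray(frame_numbers, dtype=float).reshape(-1, 1)
    y = np.asarray(line_columns, dtype=float)
    model = HuberRegressor(max_iter=1000).fit(X, y)
    return model.coef_[0], model.intercept_
```

Inverting the fit, a raster pixel column `c` maps back to video frame `(c - intercept) / slope`, which puts the raster and the annotated boops on one time base.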
