@TheAuburner @AUChizad @NarrativeHater https://www.iza.org/publications/dp/13319/face-masks-considerably-reduce-covid-19-cases-in-germany-a-synthetic-control-method-approach

This morning I came across this paper, which estimates a significant reduction in Covid-19 cases following the introduction of mask mandates in Germany.

The authors use what is called the synthetic control method (SCM), a way to use observational data to estimate the effect of a treatment on the units that received it. Absent a randomized controlled trial, the SCM might be the next-best way to quantify such an effect.

The idea behind the SCM is to ask, “What would Covid cases have looked like in the absence of a mask mandate?” We can’t answer this question with a chart like the one @TheAuburner provided, because such a chart doesn’t account for confounding factors such as anticipatory effects.

Even if we can say the mandate is outside the control of the people wearing masks, inference from a traditional regression will be unreliable unless we can confidently say that infections in mandate and non-mandate states would have followed similar trends absent the mandate (the parallel trends assumption).
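To see why that assumption matters, here is a minimal difference-in-differences calculation. It assumes the “traditional regression” is a DiD-style comparison of mandate and non-mandate areas. The numbers are hypothetical: neither area gets any mandate effect, but cases in the control area grow faster on their own, and the estimate still reports a “reduction.”

```python
def did_estimate(treated_pre, treated_post, control_pre, control_post):
    """Difference-in-differences: change for the treated minus change for the controls."""
    return (treated_post - treated_pre) - (control_post - control_pre)


# Hypothetical cumulative cases with NO mandate effect built in:
# the "treated" city grows by 50 on its own, the control area by 100.
bias = did_estimate(treated_pre=100, treated_post=150, control_pre=100, control_post=200)
print(bias)  # -50: the difference in underlying trends gets attributed to the mandate
```

The estimate is only an effect if the two areas would have moved in parallel without the mandate. That is the assumption the SCM tries to make more credible.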

This is where the SCM has an advantage. We still have to assume that the mandate is an exogenous event, but now we can build a synthetic control group: a weighted average of potential control units, with weights chosen so that it matches the treated unit as closely as possible before the mandate.
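Here is a rough sketch of how those weights might be chosen. The weights are nonnegative and sum to one, and they are picked to minimize the squared gap between the treated unit and the weighted donors before the mandate. The fit below uses projected gradient descent on pre-mandate outcomes only. This is a simplification and not the authors’ code: the standard SCM can also match on other predictors using an extra weighting matrix. The donor series are made up.

```python
def project_to_simplex(v):
    """Euclidean projection of v onto {w : w_i >= 0, sum(w) == 1} (sort-based method)."""
    u = sorted(v, reverse=True)
    cumsum, theta = 0.0, 0.0
    for i, ui in enumerate(u, start=1):
        cumsum += ui
        t = (cumsum - 1.0) / i
        if ui - t > 0:
            theta = t  # the last index passing this test sets the threshold
    return [max(x - theta, 0.0) for x in v]


def fit_weights(treated_pre, donors, n_iter=5000):
    """Donor weights minimizing squared pre-period error, constrained to the simplex."""
    T, n = len(treated_pre), len(donors)
    w = [1.0 / n] * n  # start from equal weights
    # 1 / (2 * squared Frobenius norm) never exceeds 1 / (Lipschitz constant of the gradient)
    step = 1.0 / (2.0 * sum(d[t] ** 2 for d in donors for t in range(T)))
    for _ in range(n_iter):
        resid = [treated_pre[t] - sum(w[j] * donors[j][t] for j in range(n)) for t in range(T)]
        grad = [-2.0 * sum(resid[t] * donors[j][t] for t in range(T)) for j in range(n)]
        w = project_to_simplex([w[j] - step * grad[j] for j in range(n)])
    return w


# Hypothetical pre-mandate cumulative cases for three donor cities
donors = [
    [1, 2, 4, 7],
    [3, 3, 4, 4],
    [0, 5, 1, 6],
]
treated_pre = [2, 2.5, 4, 5.5]  # constructed as 0.5 * donor 0 + 0.5 * donor 1
weights = fit_weights(treated_pre, donors)
print([round(w, 3) for w in weights])
```

Because the made-up treated series is exactly half of donor 0 plus half of donor 1, the fit should put about 0.5 on each of those donors and about zero on the third.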

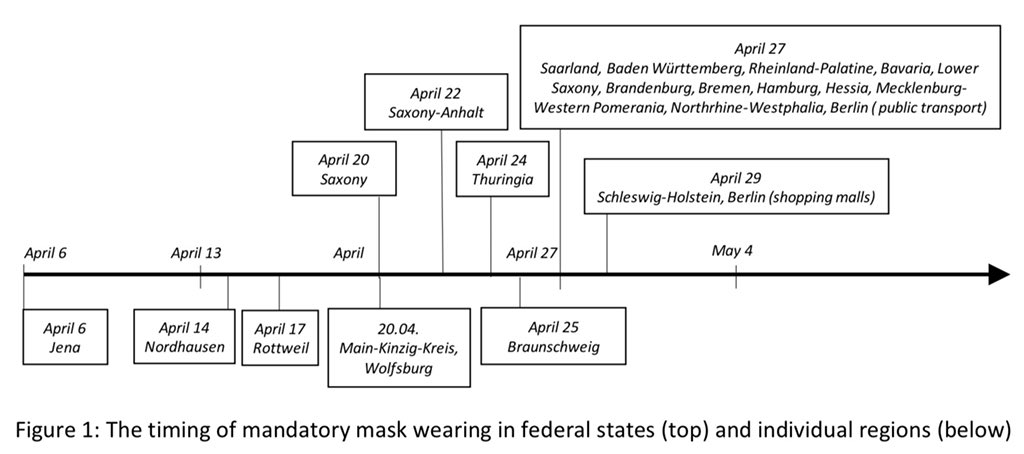

The authors focus on one German city, Jena, because its mandate began before its federal state’s mandate and earlier than most other municipal mandates. This allows them to use other cities as potential controls.

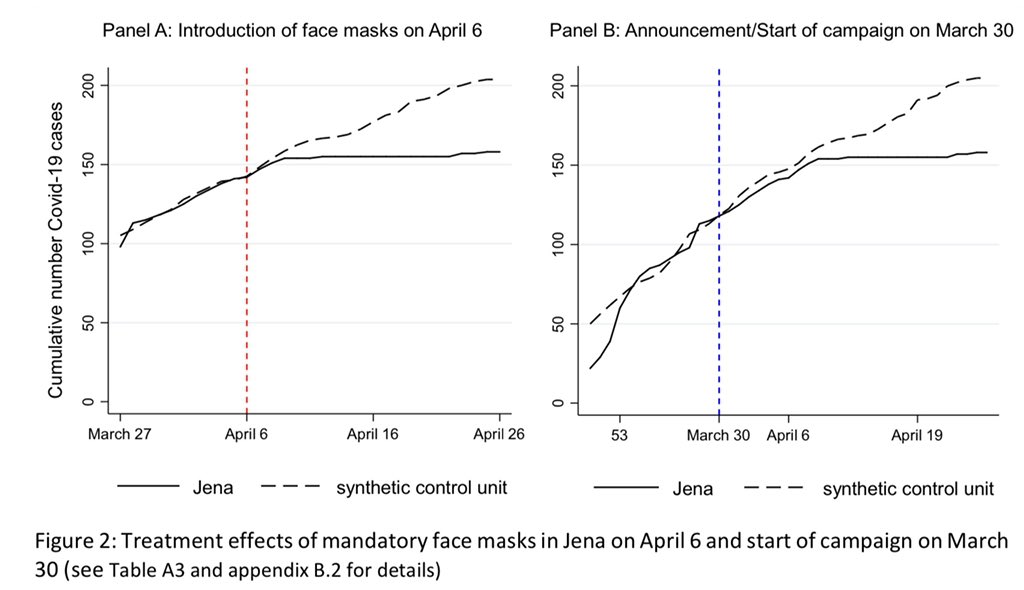

Their baseline result is in Panel A. The synthetic control matches Jena reasonably well until the mandate begins. After that, cumulative cases in Jena remain below the synthetic control’s prediction. Panel B addresses a point raised by @NarrativeHater...

...namely, that cases level off too soon after the mandate for it to plausibly be the cause. In Panel B, they treat the announcement as the start of treatment in order to capture this anticipatory effect.
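Once the weights are estimated, the effect in these panels is just the gap between actual and synthetic cases after the treatment date. Below is a rough sketch with made-up numbers and weights held fixed. In a real analysis, moving the treatment date to the announcement would also mean re-estimating the weights on the shorter pre-announcement window. The function names are illustrative only.

```python
def synthetic_series(donors, weights):
    """Weighted average of the donor series at each period."""
    return [sum(w * d[t] for w, d in zip(weights, donors)) for t in range(len(donors[0]))]


def average_gap_after(treated, donors, weights, start):
    """Mean of (actual - synthetic) from period `start` onward."""
    synth = synthetic_series(donors, weights)
    gaps = [y - s for y, s in zip(treated, synth)]
    post = gaps[start:]
    return sum(post) / len(post)


# Hypothetical cumulative cases and already-estimated donor weights
donors = [[1, 3, 5, 7, 9], [3, 5, 7, 9, 11]]
weights = [0.5, 0.5]
treated = [2, 4, 6, 7, 8]  # falls below the synthetic path from period 3 on

mandate_effect = average_gap_after(treated, donors, weights, start=3)
announcement_effect = average_gap_after(treated, donors, weights, start=2)
print(mandate_effect, announcement_effect)  # -1.5 -1.0
```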

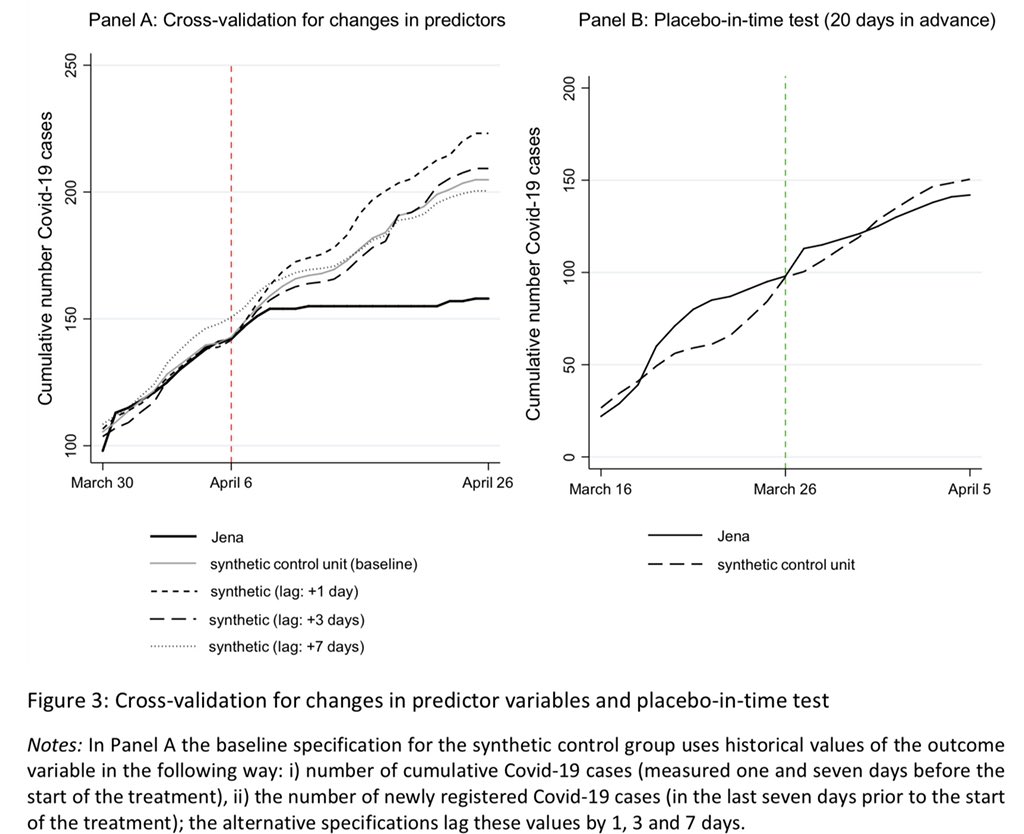

The authors try to address other potential issues as well. The SCM can be sensitive to several choices: the predictor variables, the use of lagged values of the outcome variable, the number of pre- and post-treatment observations, the pool of potential control units, and so on.

Panel A addresses several of these concerns by cross-validating the results with different lags of cases prior to the mandate, and they still find an effect. Panel B moves the mandate to a different (placebo) date and finds no effect, which bolsters the finding in the first figure.
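An in-time placebo can be sketched in three steps. First, pretend the mandate started earlier. Then fit the synthetic control only on data before that fake date. Finally, check that the gap stays near zero until the real mandate. This sketch reuses a simplified, outcome-only fit: nonnegative weights summing to one, solved by projected gradient descent. The series and dates are hypothetical, and the authors’ exact placebo design may differ.

```python
def project_to_simplex(v):
    """Euclidean projection of v onto {w : w_i >= 0, sum(w) == 1}."""
    u = sorted(v, reverse=True)
    cumsum, theta = 0.0, 0.0
    for i, ui in enumerate(u, start=1):
        cumsum += ui
        t = (cumsum - 1.0) / i
        if ui - t > 0:
            theta = t
    return [max(x - theta, 0.0) for x in v]


def fit_weights(treated_pre, donors, n_iter=5000):
    """Simplex-constrained donor weights minimizing squared pre-period error."""
    T, n = len(treated_pre), len(donors)
    w = [1.0 / n] * n
    step = 1.0 / (2.0 * sum(d[t] ** 2 for d in donors for t in range(T)))
    for _ in range(n_iter):
        resid = [treated_pre[t] - sum(w[j] * donors[j][t] for j in range(n)) for t in range(T)]
        grad = [-2.0 * sum(resid[t] * donors[j][t] for t in range(T)) for j in range(n)]
        w = project_to_simplex([w[j] - step * grad[j] for j in range(n)])
    return w


def in_time_placebo(treated, donors, fake_start, real_start):
    """Fit only on periods before a fake mandate date; return gaps up to the real date."""
    w = fit_weights(treated[:fake_start], donors)
    synth = [sum(wj * d[t] for wj, d in zip(w, donors)) for t in range(len(treated))]
    return [treated[t] - synth[t] for t in range(fake_start, real_start)]


donors = [
    [1, 2, 4, 7, 11, 16, 22, 29],
    [3, 3, 4, 4, 5, 5, 6, 6],
    [0, 5, 1, 6, 2, 7, 3, 8],
]
# Hypothetical treated city: tracks 0.5 * donor 0 + 0.5 * donor 1 until a real mandate at t = 6
treated = [2, 2.5, 4, 5.5, 8, 10.5, 12, 13]
placebo = in_time_placebo(treated, donors, fake_start=4, real_start=6)
print(placebo)  # should be close to zero: nothing happened at the fake date
```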

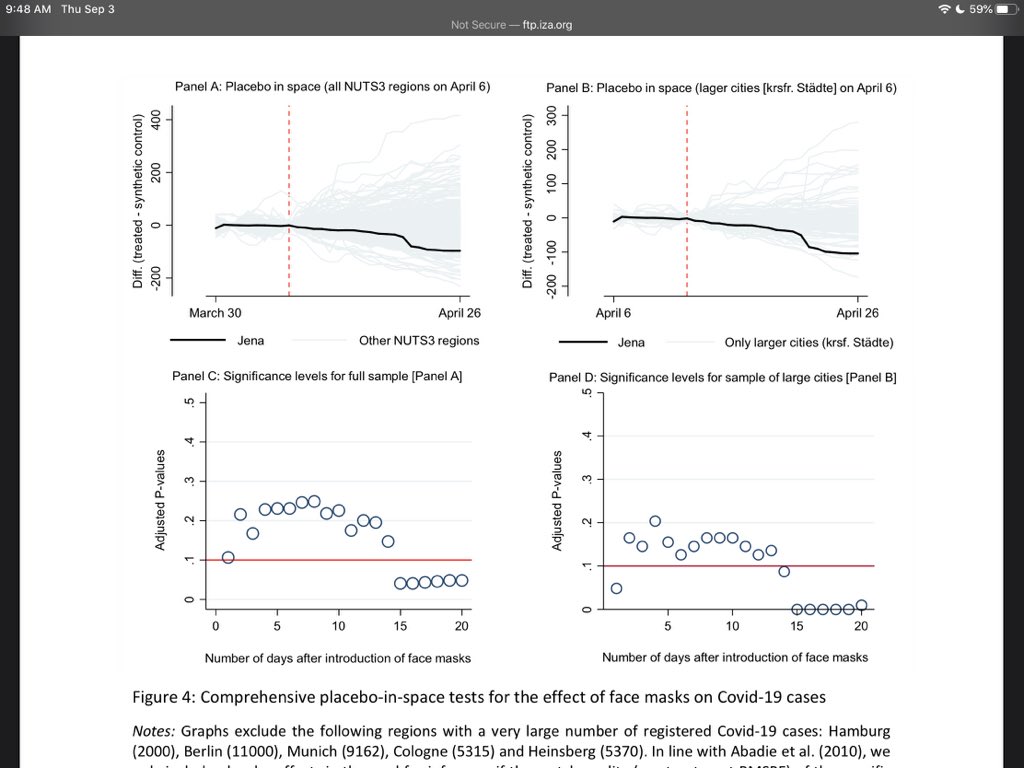

They conduct additional placebo tests in which they assign fictitious mandates to cities and states that did not actually implement one. The top two figures show the difference in cumulative cases between treatment and synthetic control for Jena and for each placebo run. The gap for Jena is large by comparison.
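Running placebos across units amounts to repeating the same procedure with each unit in the pool playing the treated city. The sketch below uses the same simplified fit as before, and the data are invented. It also leaves the actually treated city out of the donor pools for the placebo runs. That choice is an assumption of this sketch, not necessarily the paper’s.

```python
def project_to_simplex(v):
    """Euclidean projection of v onto {w : w_i >= 0, sum(w) == 1}."""
    u = sorted(v, reverse=True)
    cumsum, theta = 0.0, 0.0
    for i, ui in enumerate(u, start=1):
        cumsum += ui
        t = (cumsum - 1.0) / i
        if ui - t > 0:
            theta = t
    return [max(x - theta, 0.0) for x in v]


def fit_weights(treated_pre, donors, n_iter=5000):
    """Simplex-constrained donor weights minimizing squared pre-period error."""
    T, n = len(treated_pre), len(donors)
    w = [1.0 / n] * n
    step = 1.0 / (2.0 * sum(d[t] ** 2 for d in donors for t in range(T)))
    for _ in range(n_iter):
        resid = [treated_pre[t] - sum(w[j] * donors[j][t] for j in range(n)) for t in range(T)]
        grad = [-2.0 * sum(resid[t] * donors[j][t] for t in range(T)) for j in range(n)]
        w = project_to_simplex([w[j] - step * grad[j] for j in range(n)])
    return w


def placebo_gaps(units, treated_name, treat_start):
    """Fit a synthetic control for every unit in turn and return its gap series."""
    gaps = {}
    for name, series in units.items():
        # Donor pool: every other unit, excluding the actually treated one
        donors = [units[u] for u in units if u not in (name, treated_name)]
        w = fit_weights(series[:treat_start], donors)
        synth = [sum(wj * d[t] for wj, d in zip(w, donors)) for t in range(len(series))]
        gaps[name] = [y - s for y, s in zip(series, synth)]
    return gaps


units = {
    "treated": [2, 2.5, 4, 5.5, 8, 10.5, 12, 13],
    "A": [1, 2, 4, 7, 11, 16, 22, 29],
    "B": [3, 3, 4, 4, 5, 5, 6, 6],
    "C": [0, 5, 1, 6, 2, 7, 3, 8],
}
all_gaps = placebo_gaps(units, treated_name="treated", treat_start=6)
for name, g in all_gaps.items():
    print(name, [round(x, 2) for x in g[6:]])  # post-treatment gaps per run
```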

The bottom two panels use the distribution of effects across Jena and the placebo runs to estimate the probability that the effect in Jena arose by chance. Whether regions or cities are used for the placebos, the effect in Jena appears to be statistically significant after the mandate.
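One common way to turn placebo runs into a probability in the SCM literature works like this. Compare each unit’s post-treatment RMSPE (root mean squared prediction error) to its pre-treatment RMSPE. Then ask what share of units look at least as extreme as the treated one. The paper’s exact statistic may differ, and the gaps below are hypothetical.

```python
import math


def rmspe(gaps):
    """Root mean squared prediction error of a list of gaps."""
    return math.sqrt(sum(g * g for g in gaps) / len(gaps))


def permutation_p_value(unit_gaps, treated_name):
    """Share of units whose post/pre RMSPE ratio is at least the treated unit's.

    unit_gaps maps each unit to (pre_period_gaps, post_period_gaps).
    """
    ratios = {}
    for name, (pre, post) in unit_gaps.items():
        # Guard against a perfect pre-period fit (zero RMSPE)
        ratios[name] = rmspe(post) / max(rmspe(pre), 1e-12)
    threshold = ratios[treated_name]
    at_least_as_extreme = sum(1 for r in ratios.values() if r >= threshold)
    return at_least_as_extreme / len(ratios)


# Hypothetical gaps: the treated city fits well before the mandate and diverges after it
unit_gaps = {
    "treated": ([0.1, -0.1], [-2.0, -4.0]),
    "placebo_1": ([1.0, -1.0], [2.0, -2.0]),
    "placebo_2": ([0.5, 0.5], [1.0, 1.0]),
    "placebo_3": ([2.0, 2.0], [1.0, -1.0]),
    "placebo_4": ([1.0, 1.0], [3.0, 3.0]),
}
p = permutation_p_value(unit_gaps, "treated")
print(p)  # 0.2
```

With N units in total, the smallest attainable value is 1/N, so a small placebo pool limits how “significant” any result can look.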

They do other tests that support an effect of the mandate. Of course, some criticisms remain. What happens in one German city won’t necessarily happen everywhere. They also don’t account for other mitigating behaviors, whether observable or unobservable.

I know from experience that even a well-done SCM can be sensitive to these choices. In particular, I’m not entirely convinced by how they handle the staggered adoption of mandates (which I didn’t address here). Still, these results seem credible, and they make their data and code available.

I just thought you all would find the paper interesting. Plus, I’m going to talk about it in class and wanted to practice explaining it to people who might not have seen the SCM before.